ATOM User & Admin Guide

version 11.8

Table of Contents

Configuration Change Management – Create/Update/Delete32

Configure Policy50

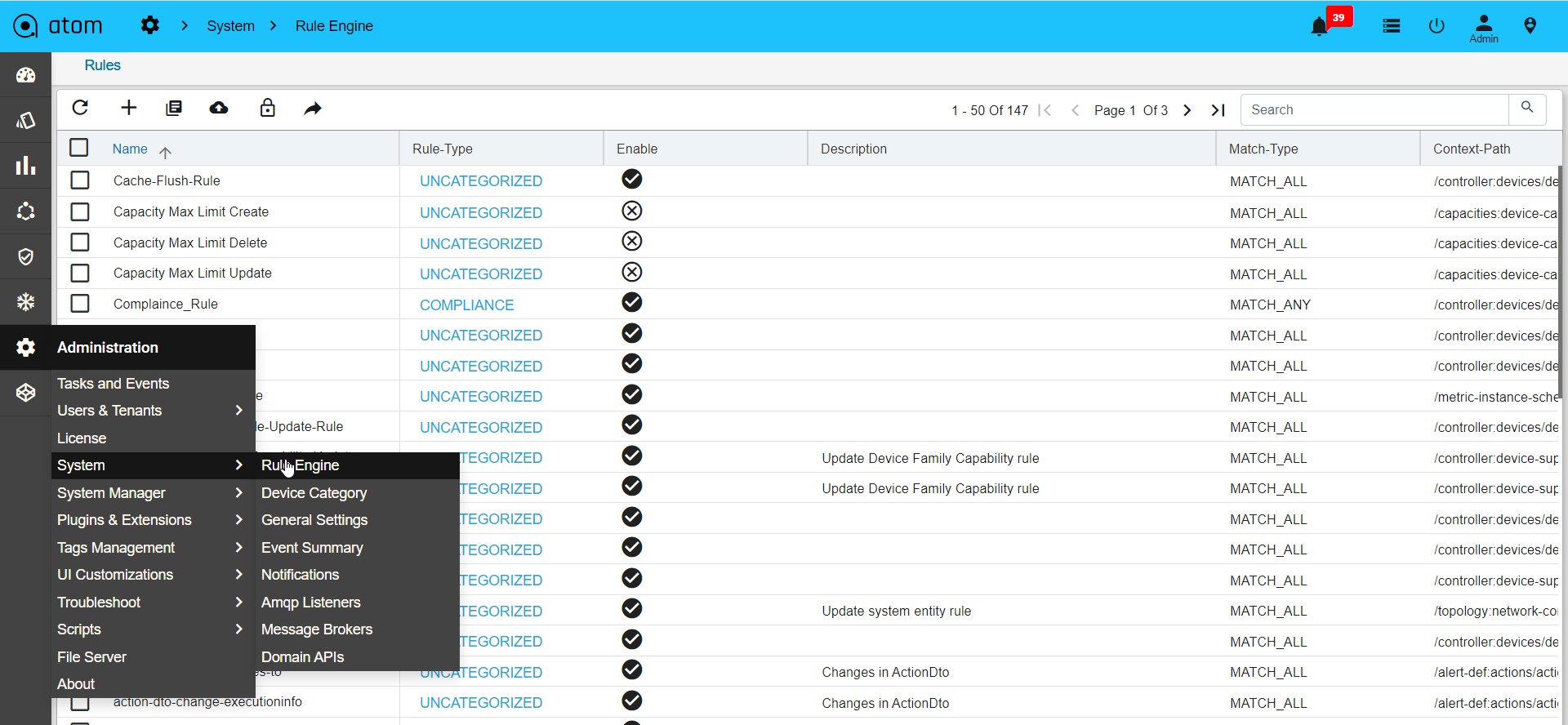

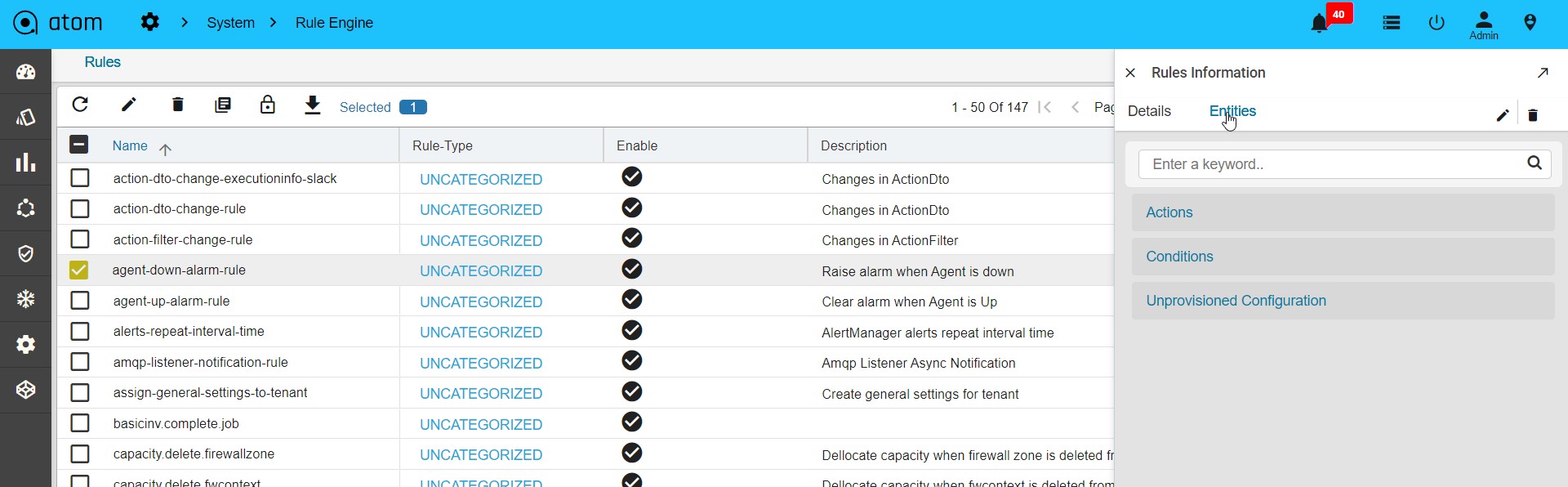

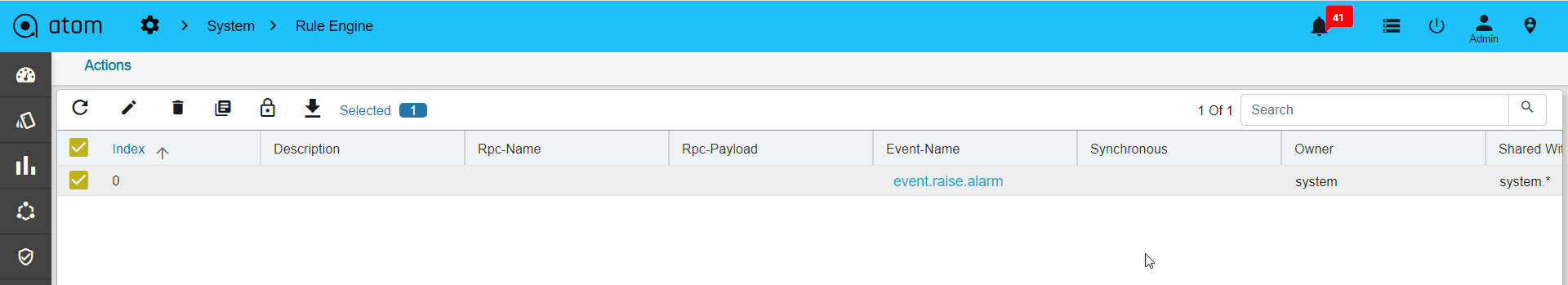

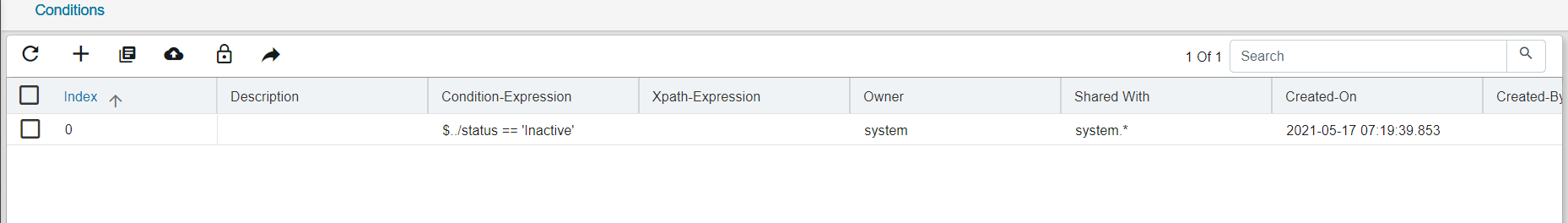

Configure Rules51

Scenario 2: NTP Server configuration check59

Scenario 3: Interface configuration check64

Scenario 4: Enforce VTY Session Timeouts68

Scenario 5: Enforce OSPF Router Id as Loopback072

Scenario 6: BGP TTL Hop-count80

Policy creation with Xpath Expressions85

Scenario 8: IP Name-server check88

Scenario 9 : NTP server Check89

Scenario 10 : Interface Check with rule_variable91

Scenario 11 : VRF Check with rule_variable94

How to derive the X-path expressions97

Policy creation with XML Template Payload97

Scenario 12 : IP Domain name check98

Scenario 13 : IP Name Server check100

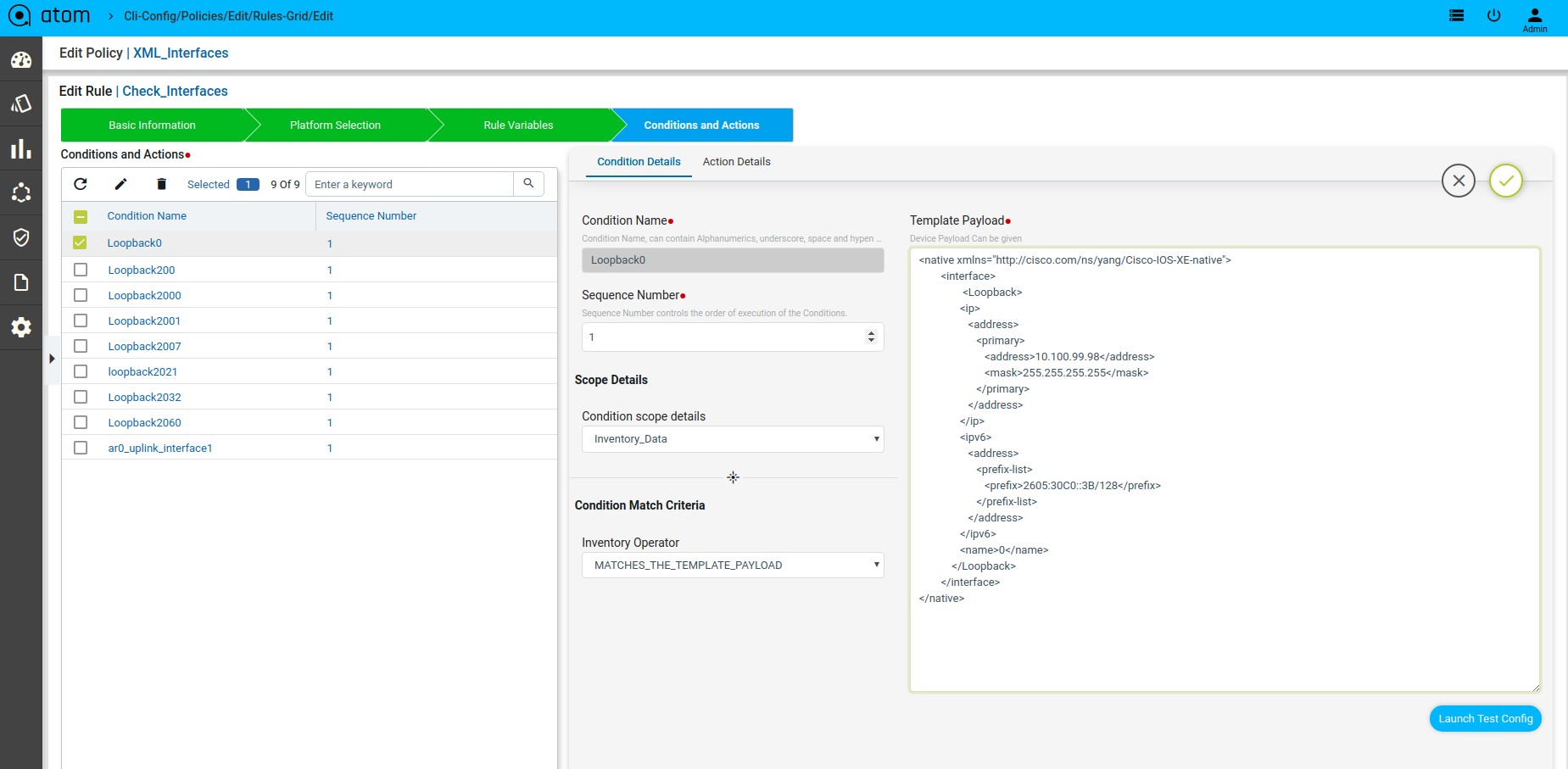

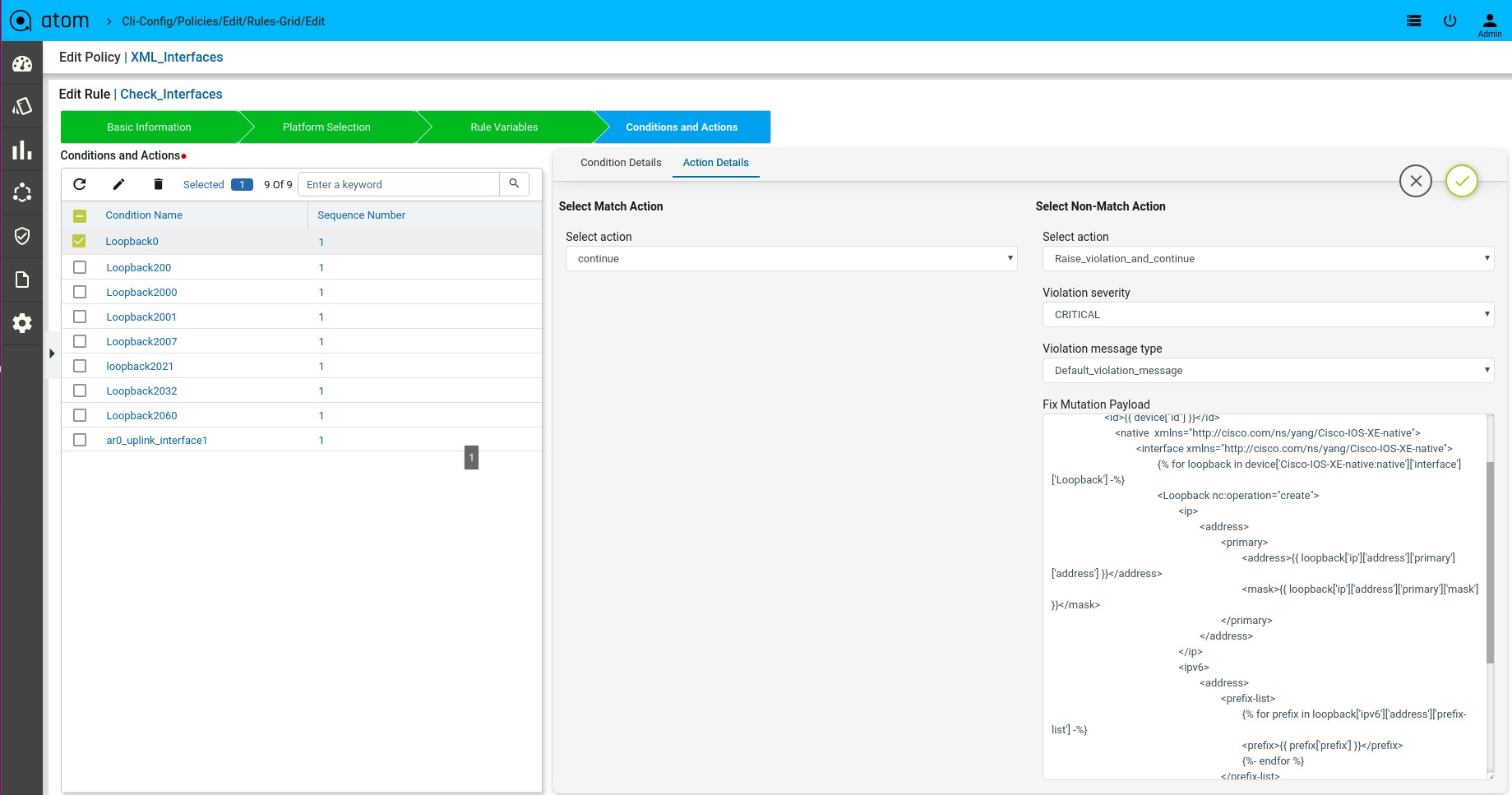

Scenario 14 : Interface check103

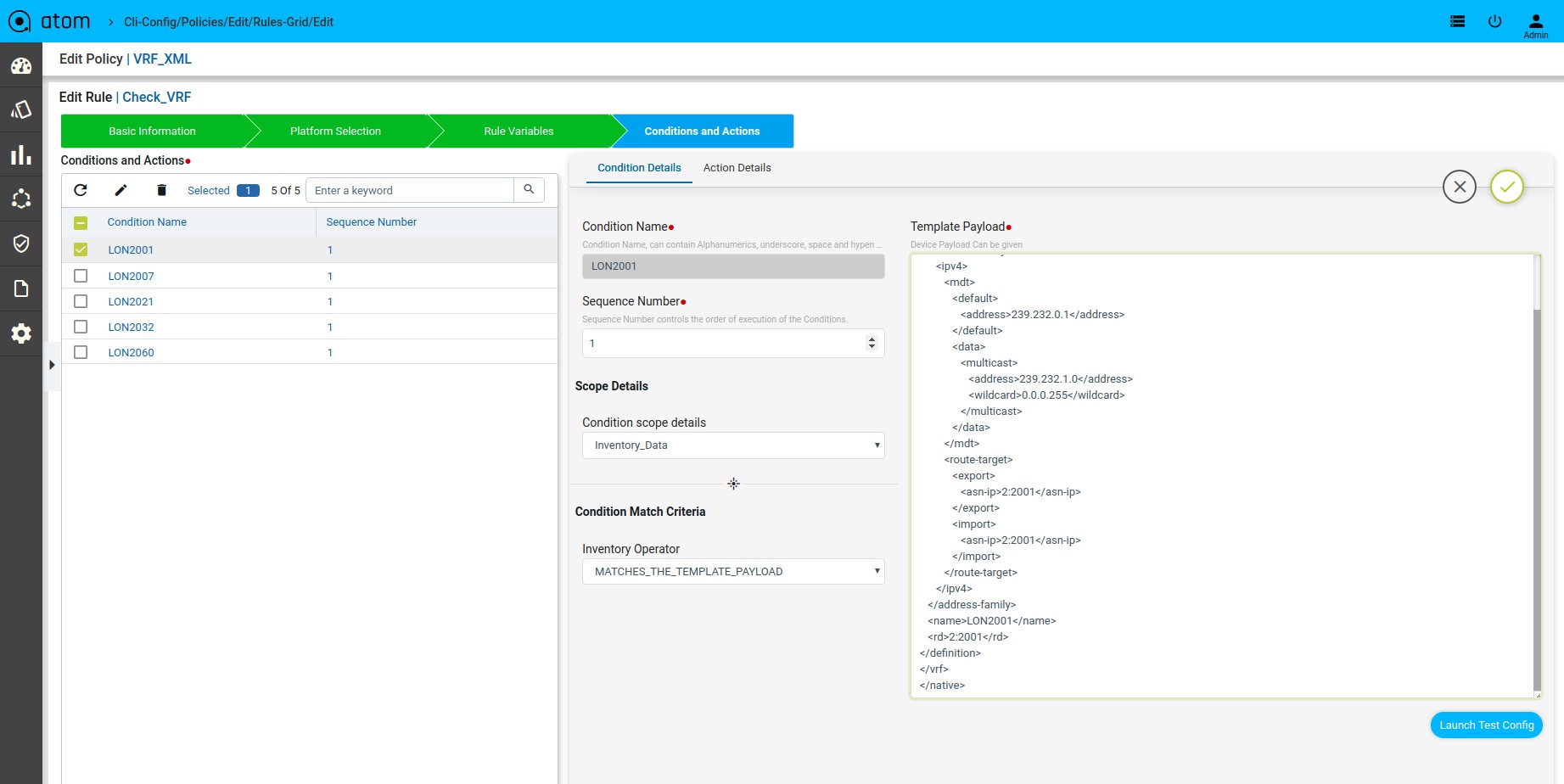

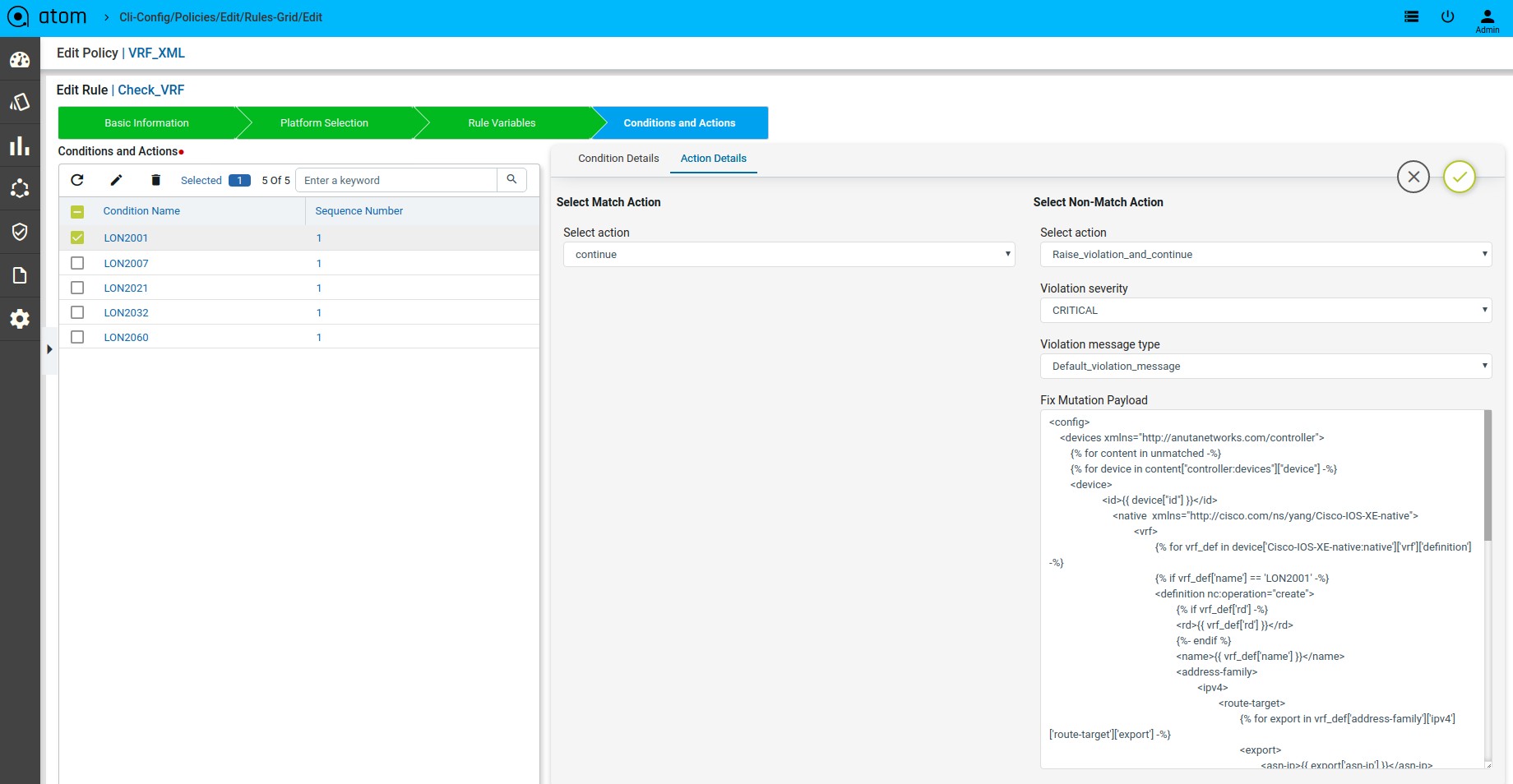

Scenario 15 : VRF check105

How to derive the XML Template payload109

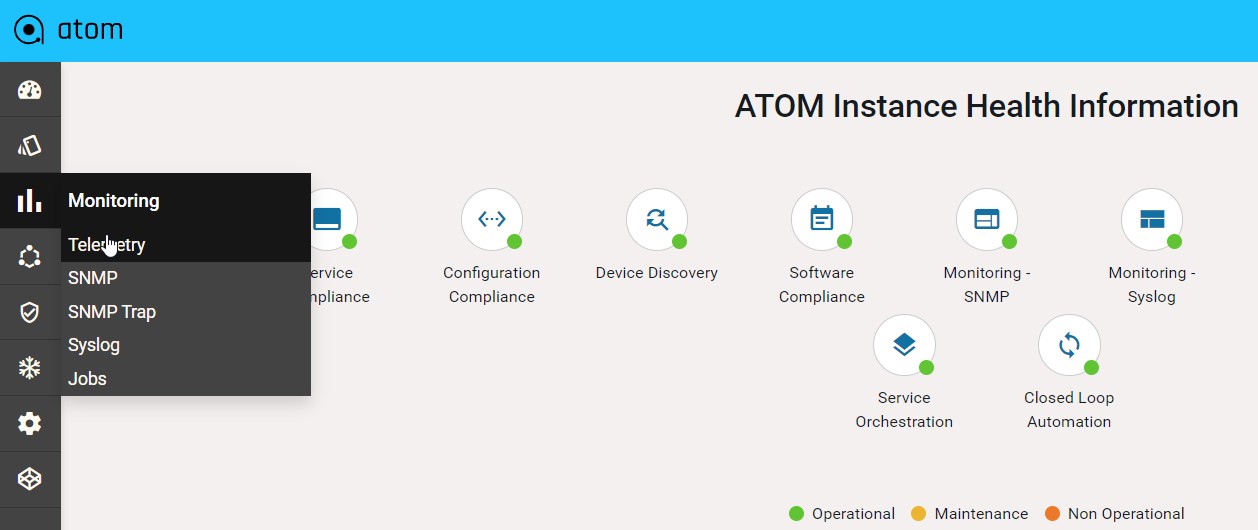

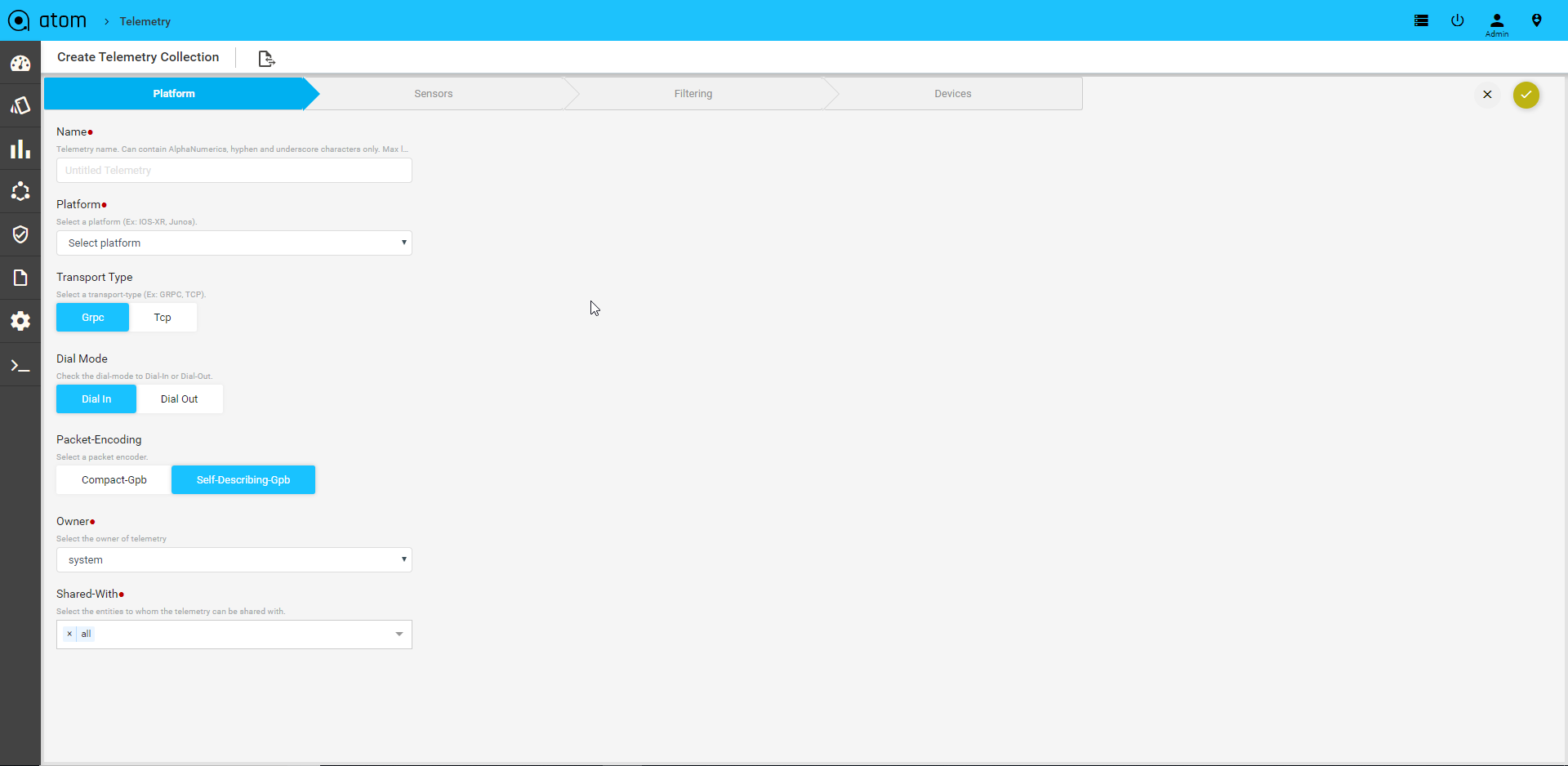

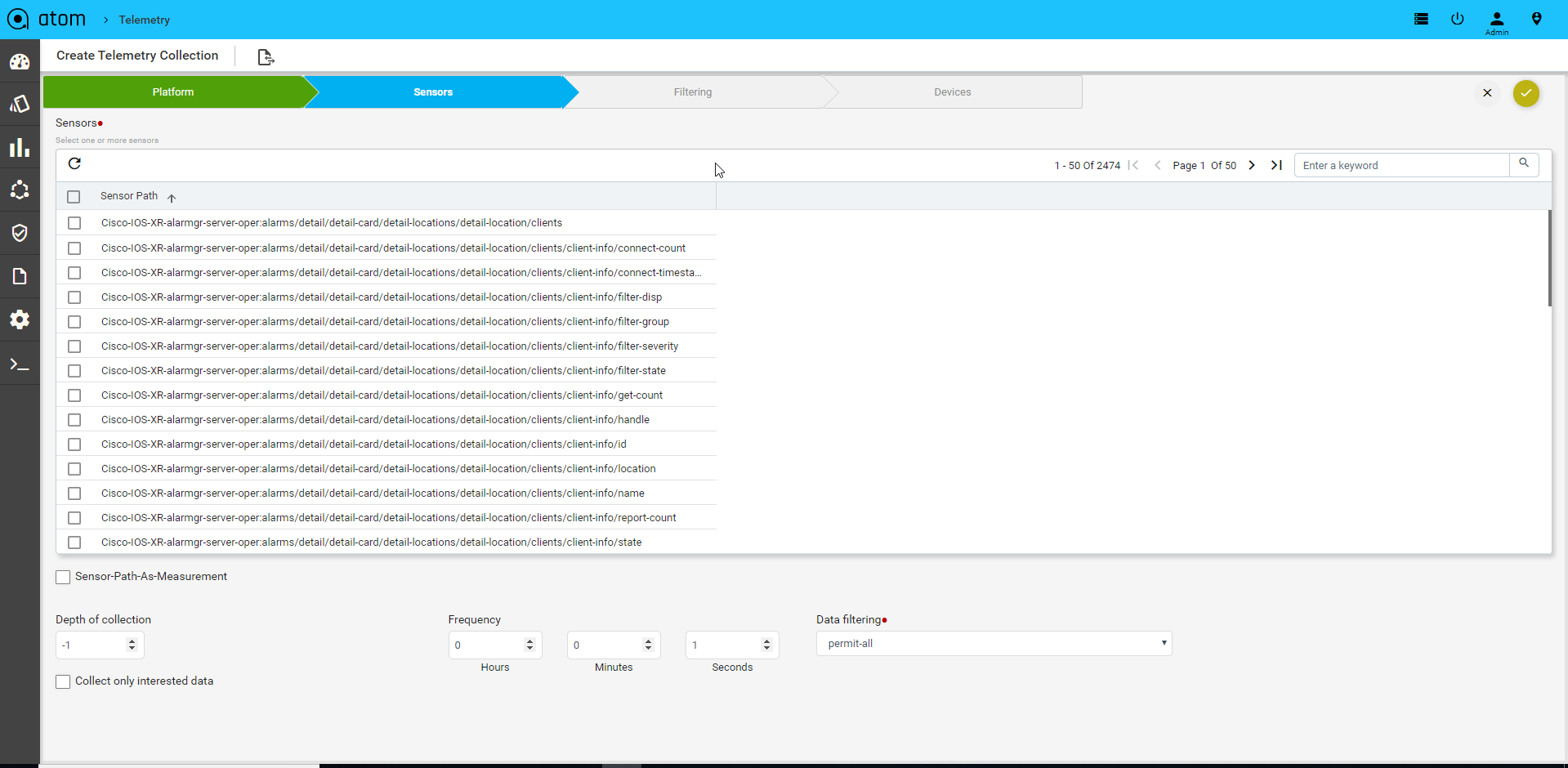

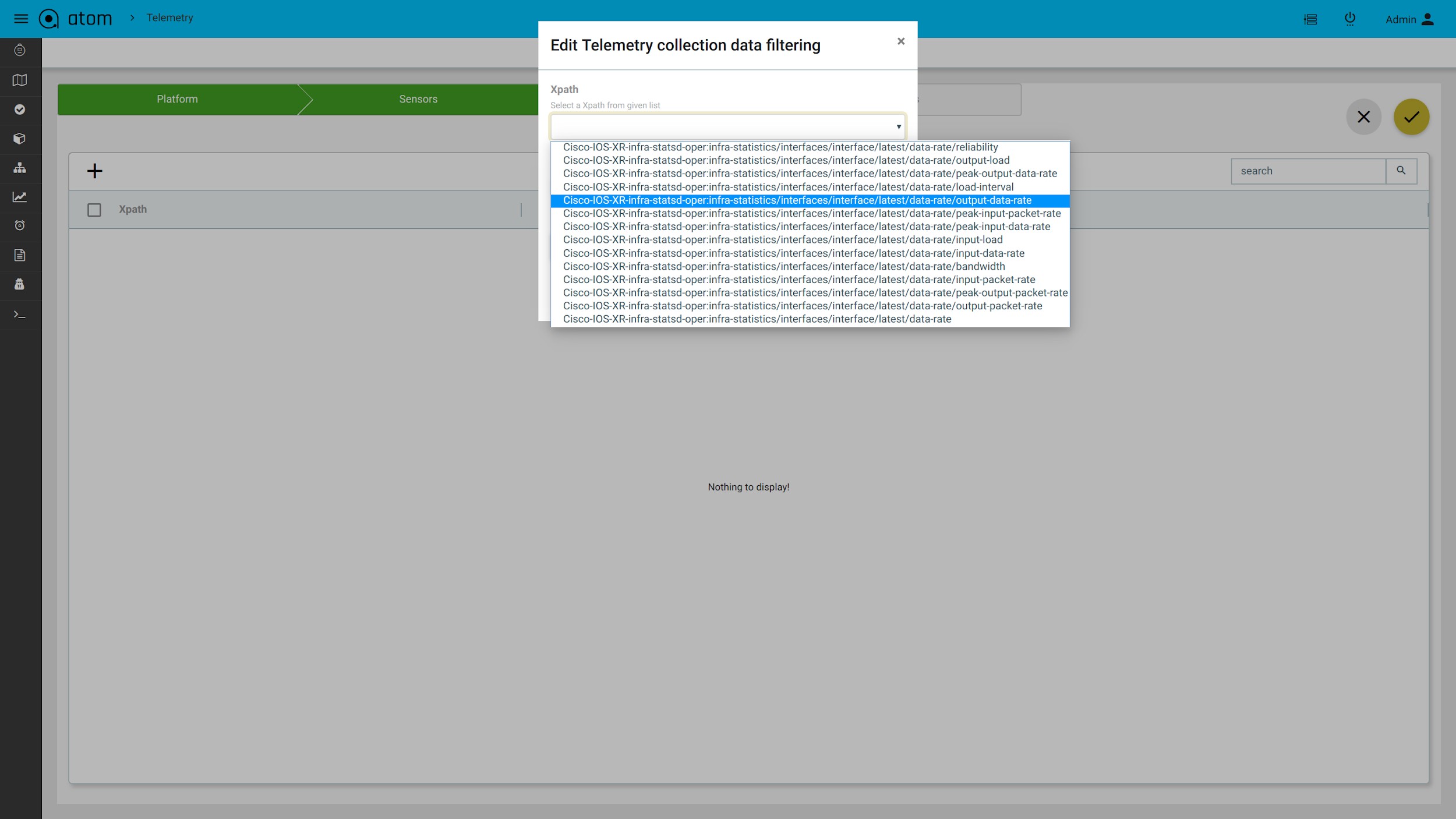

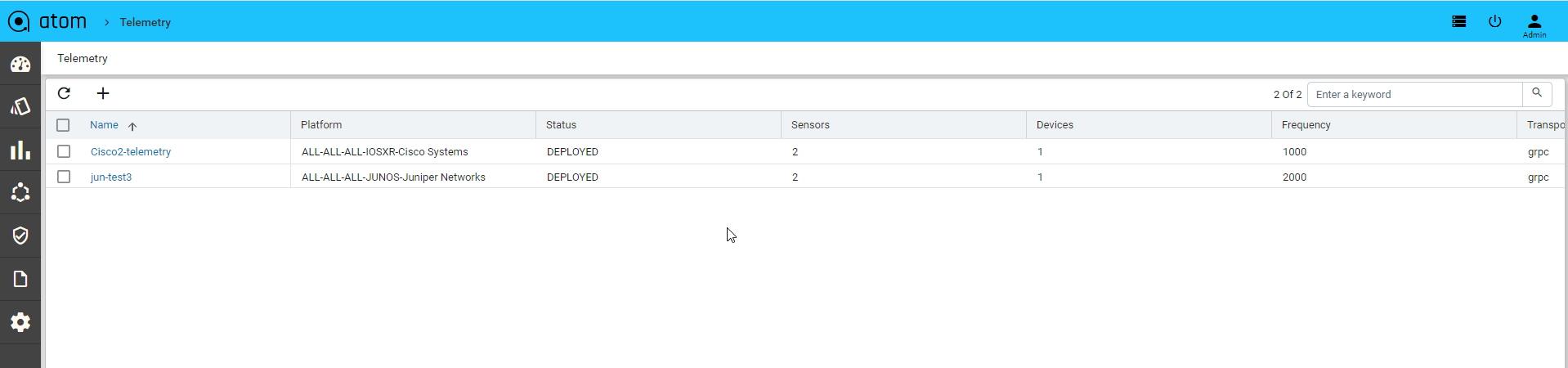

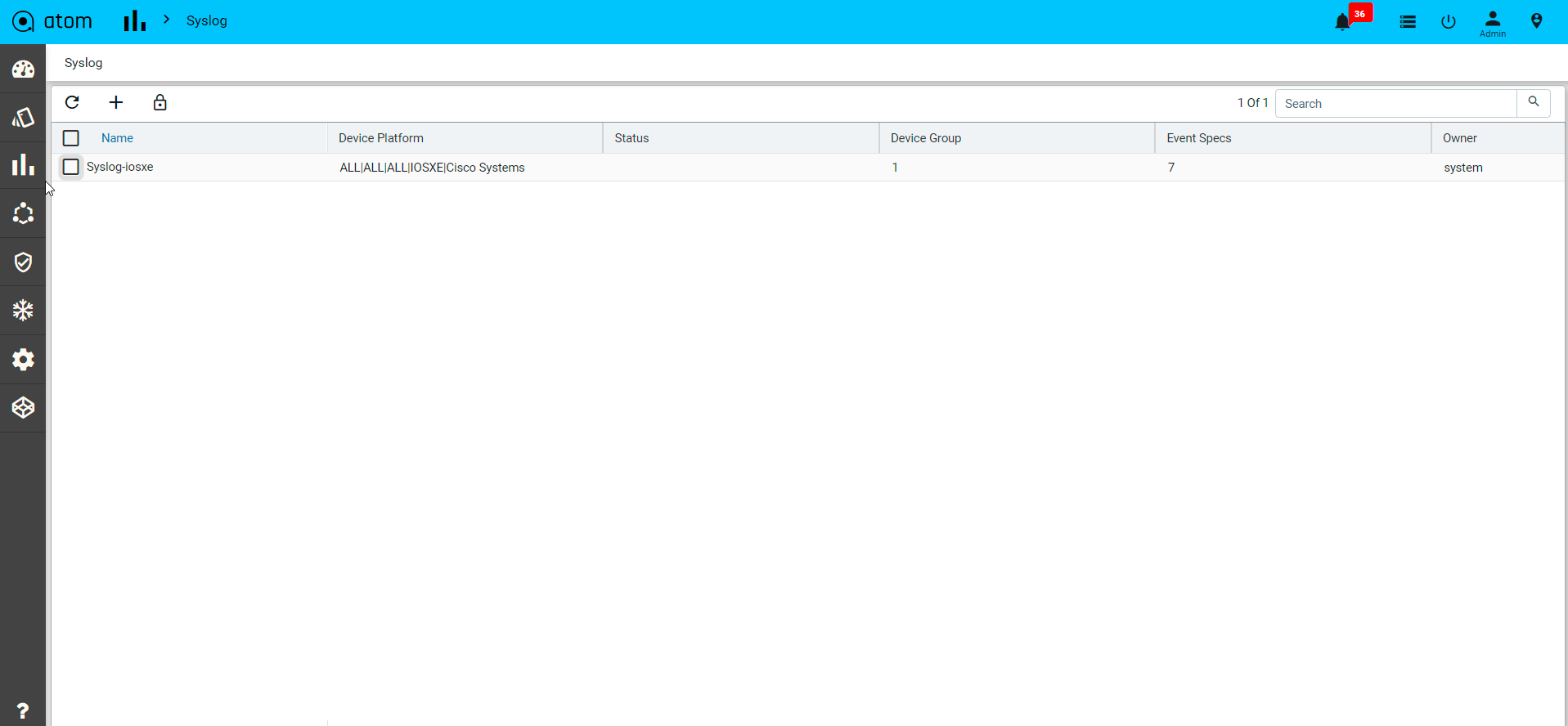

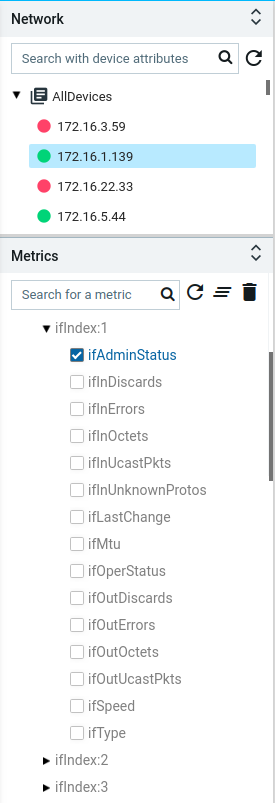

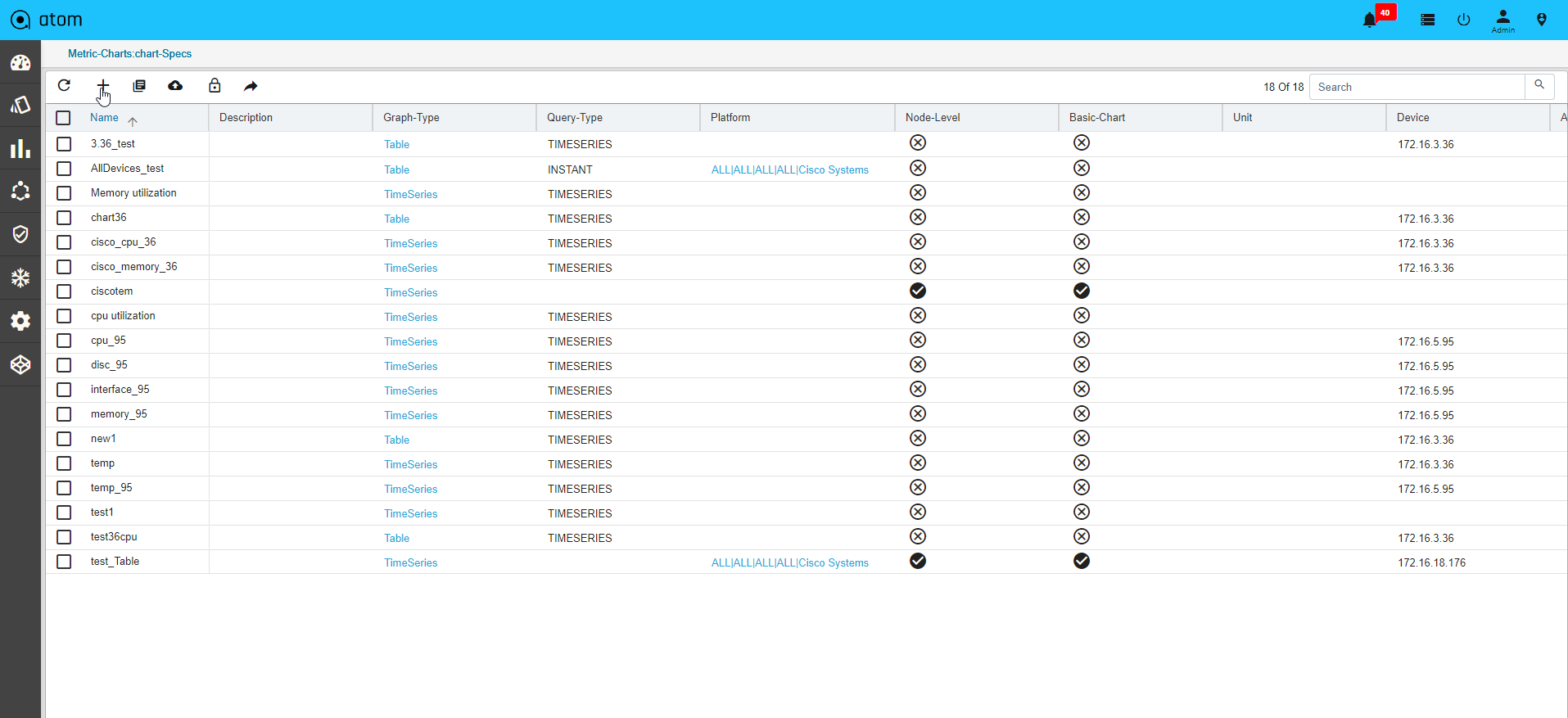

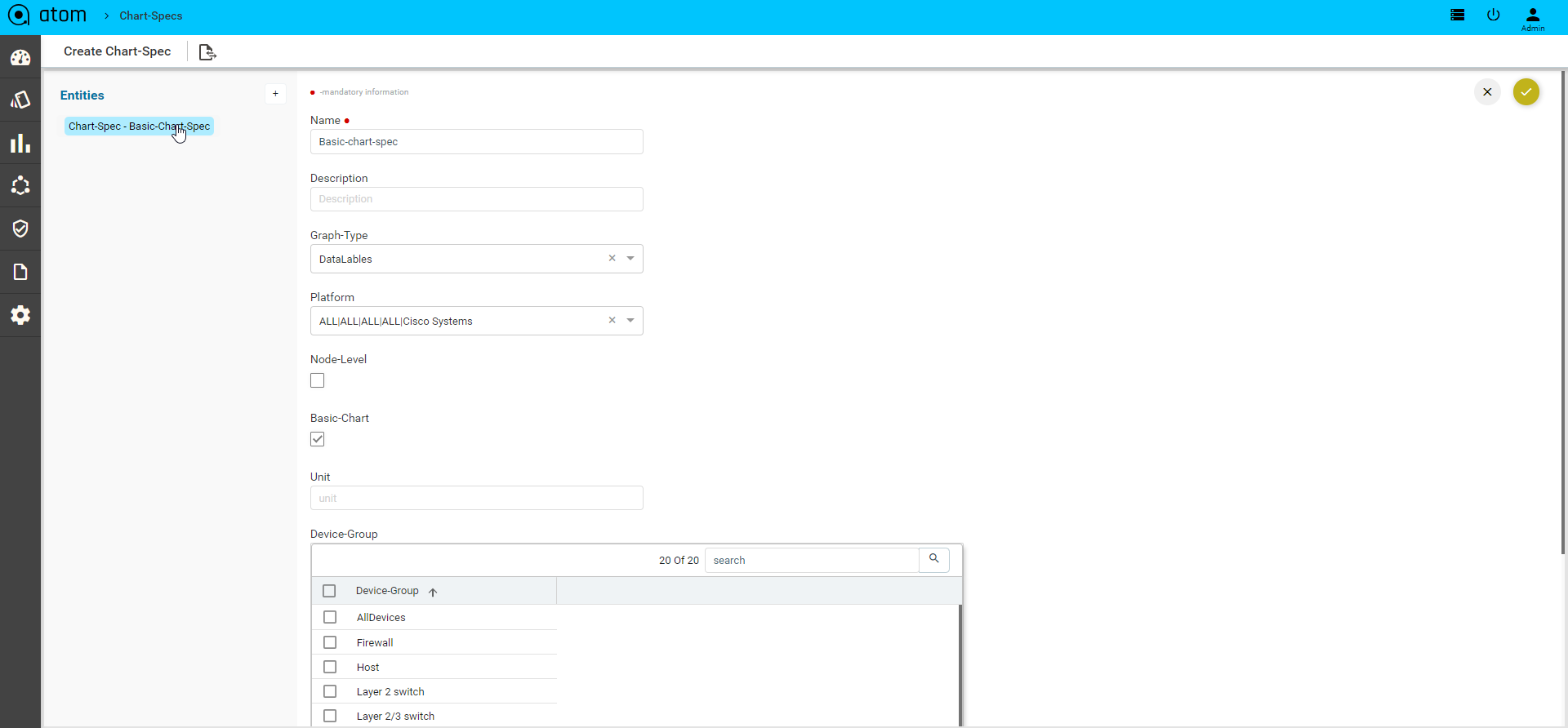

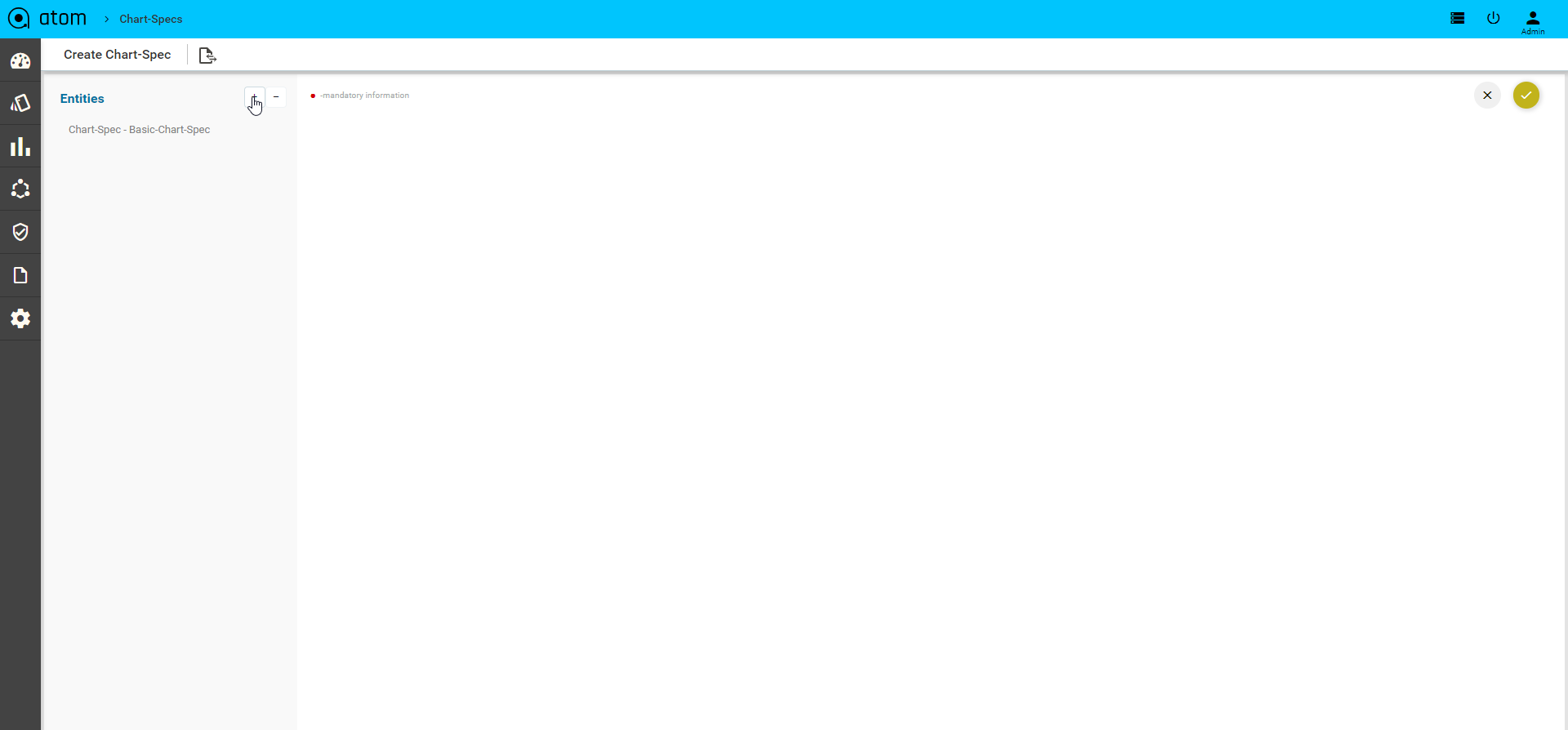

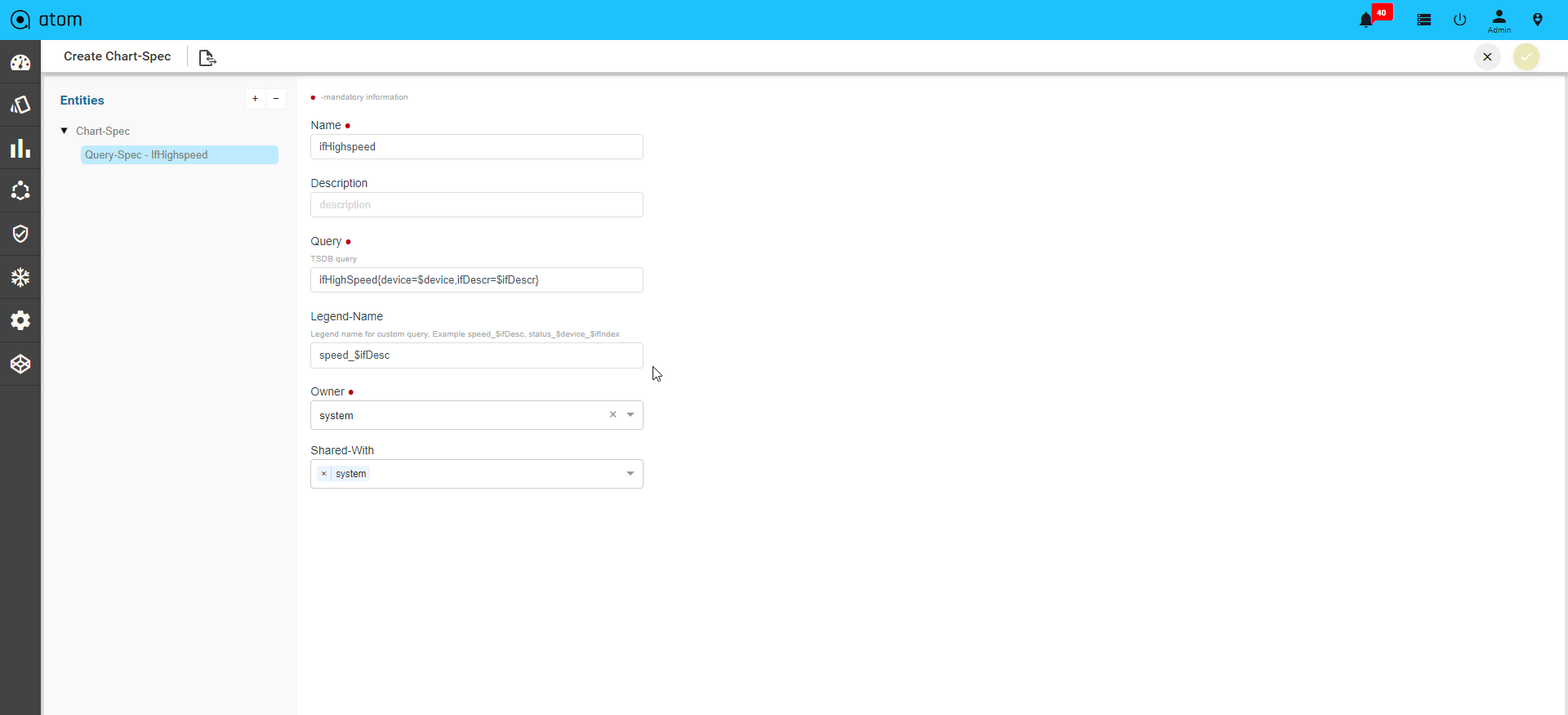

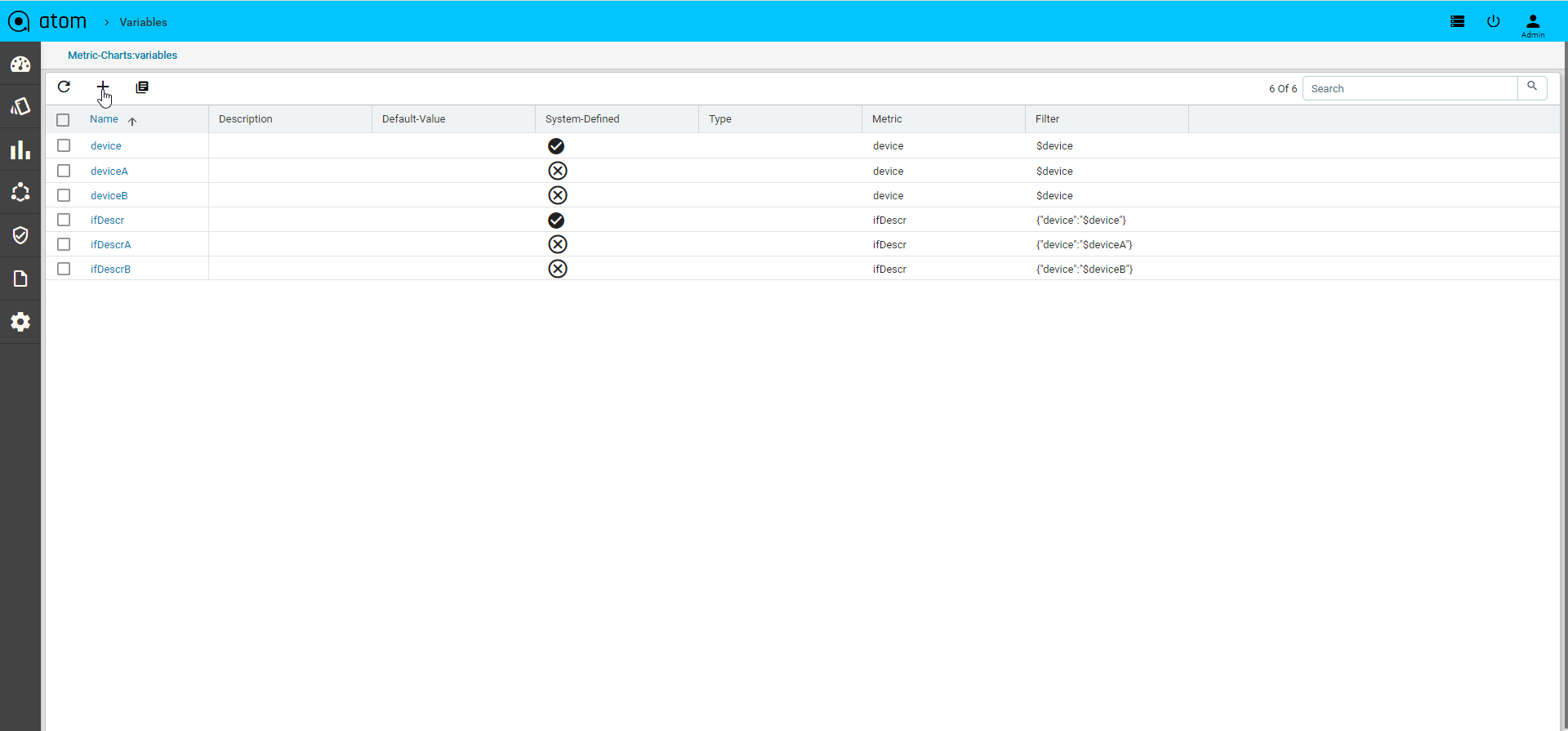

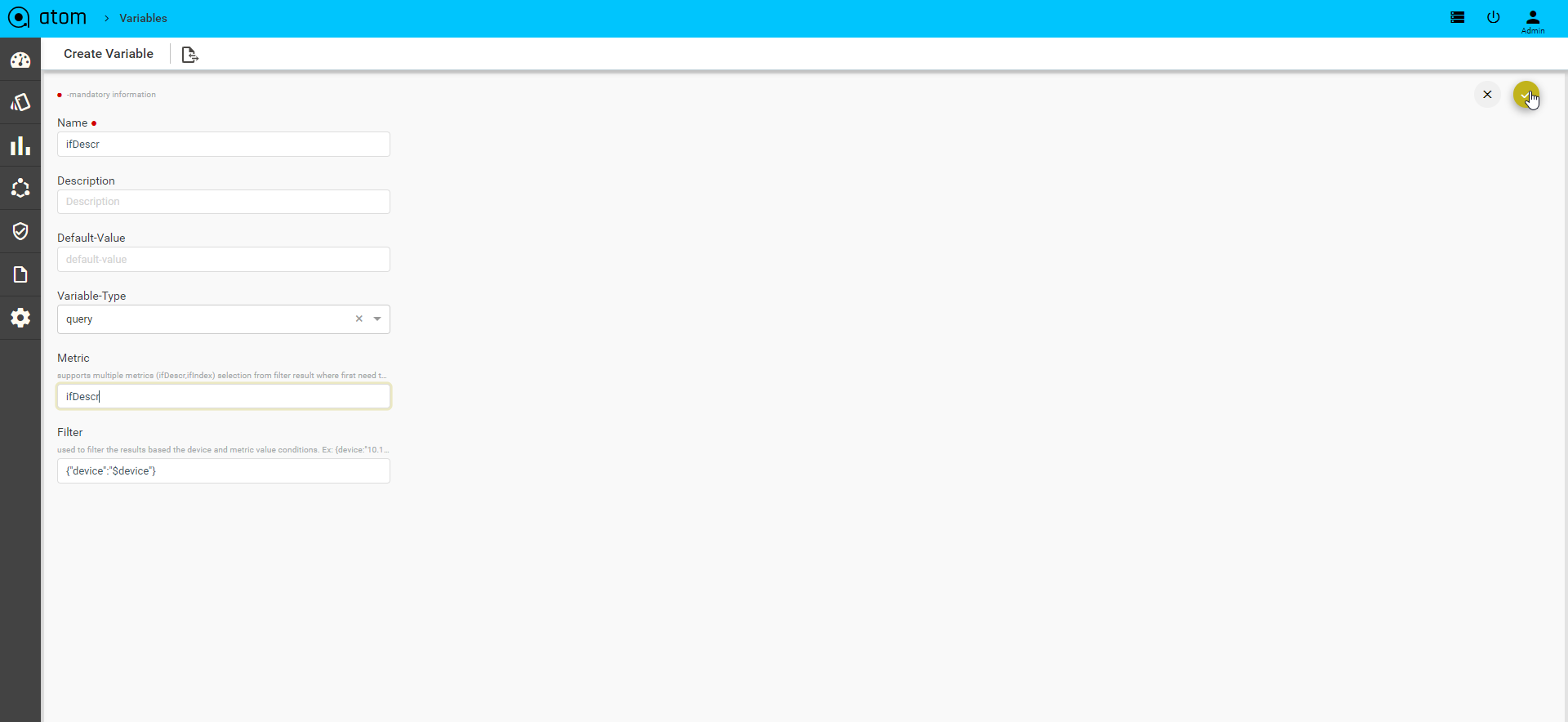

Configure Telemetry Collection160

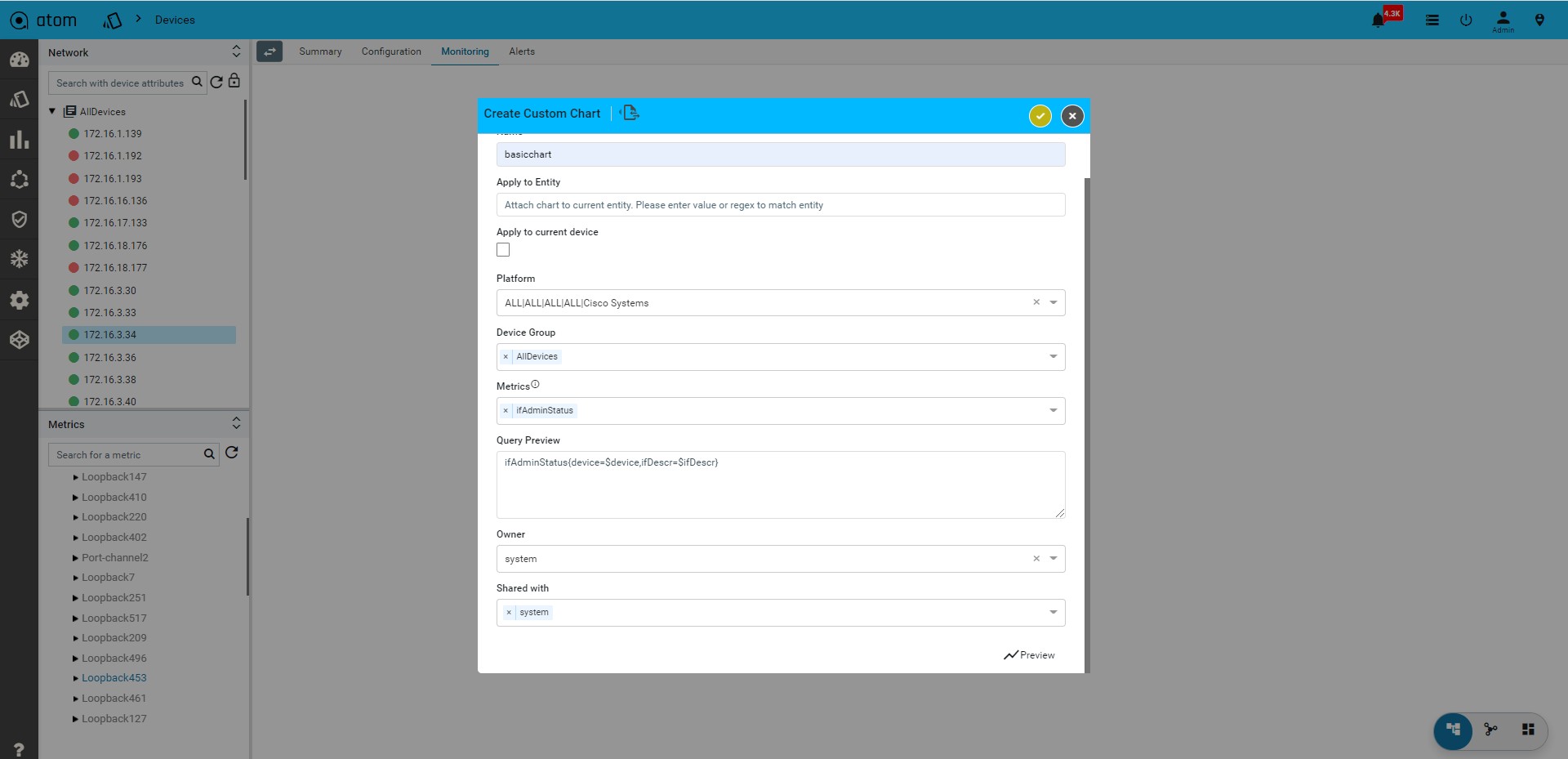

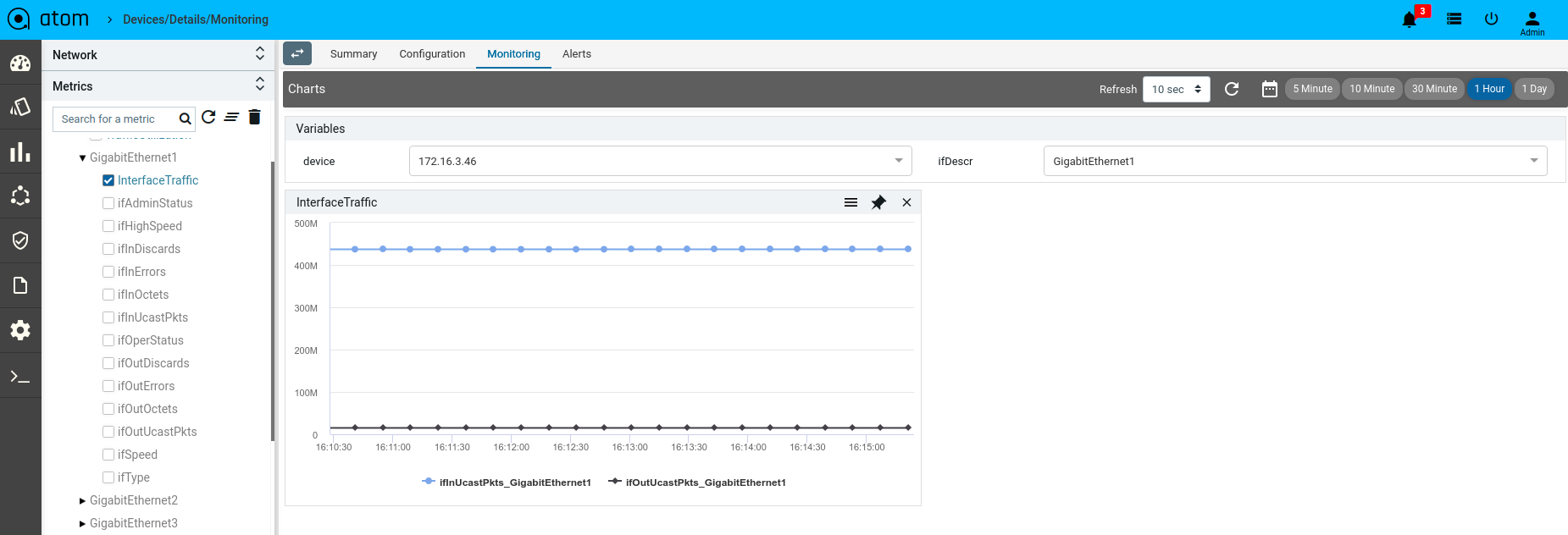

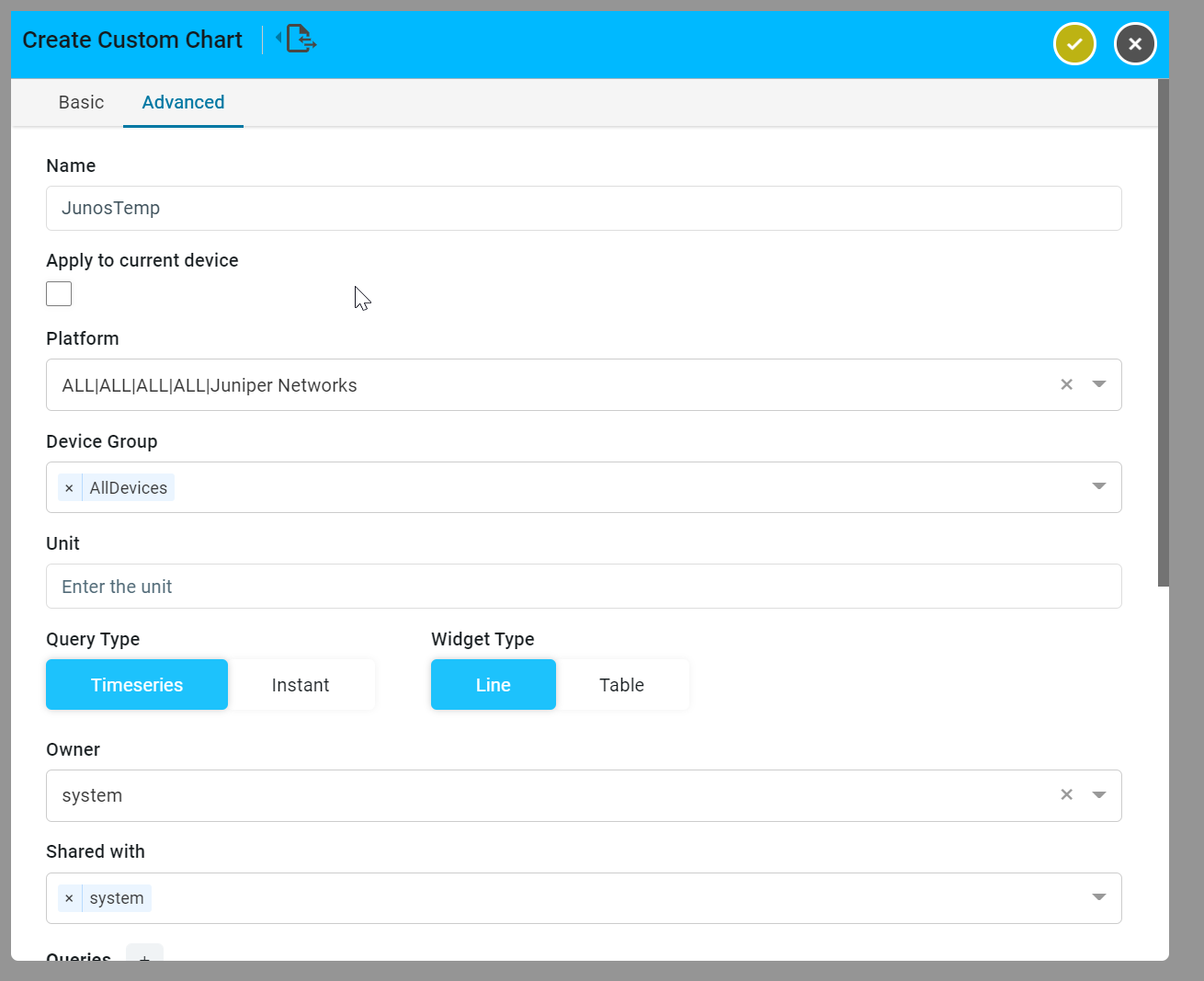

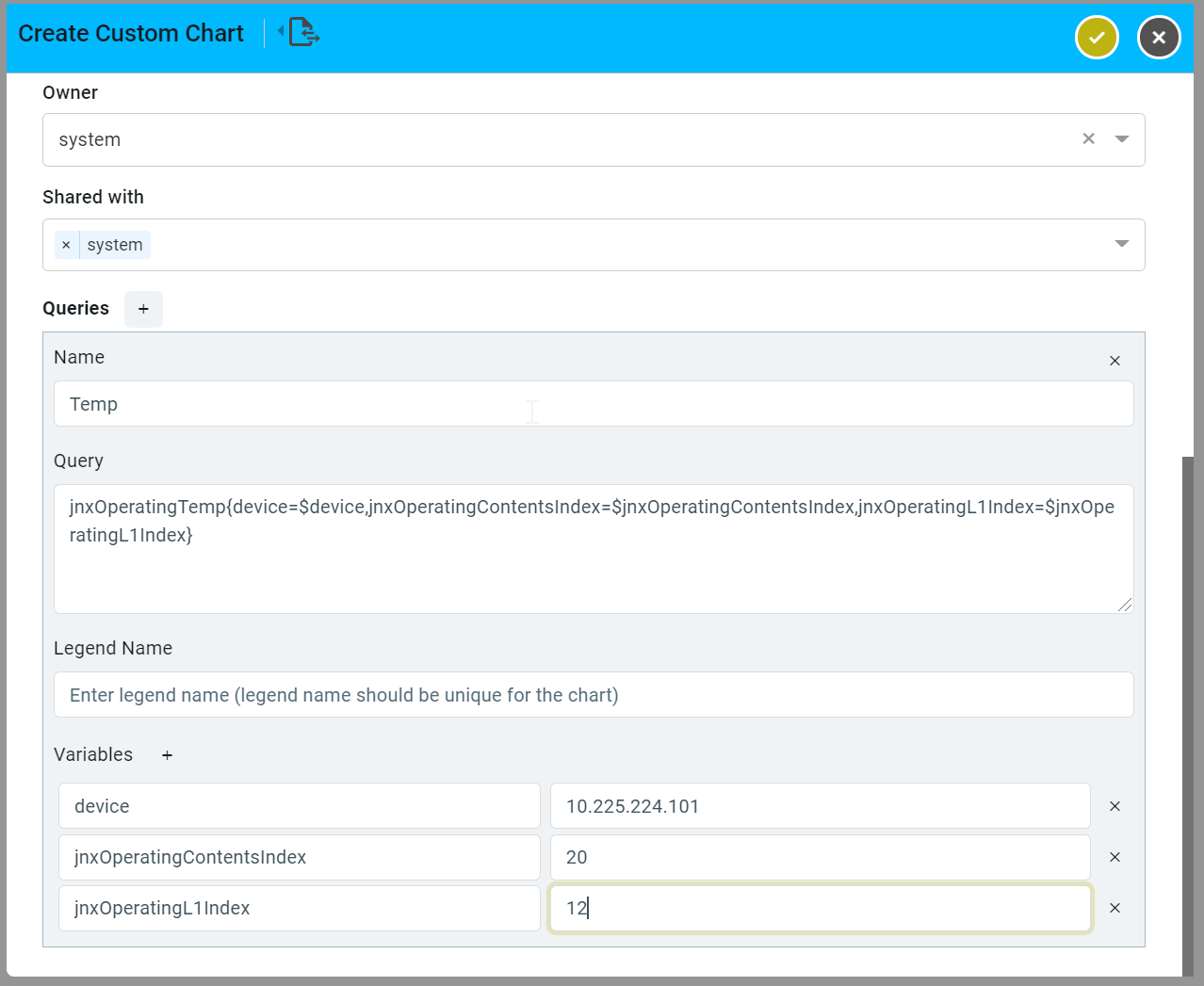

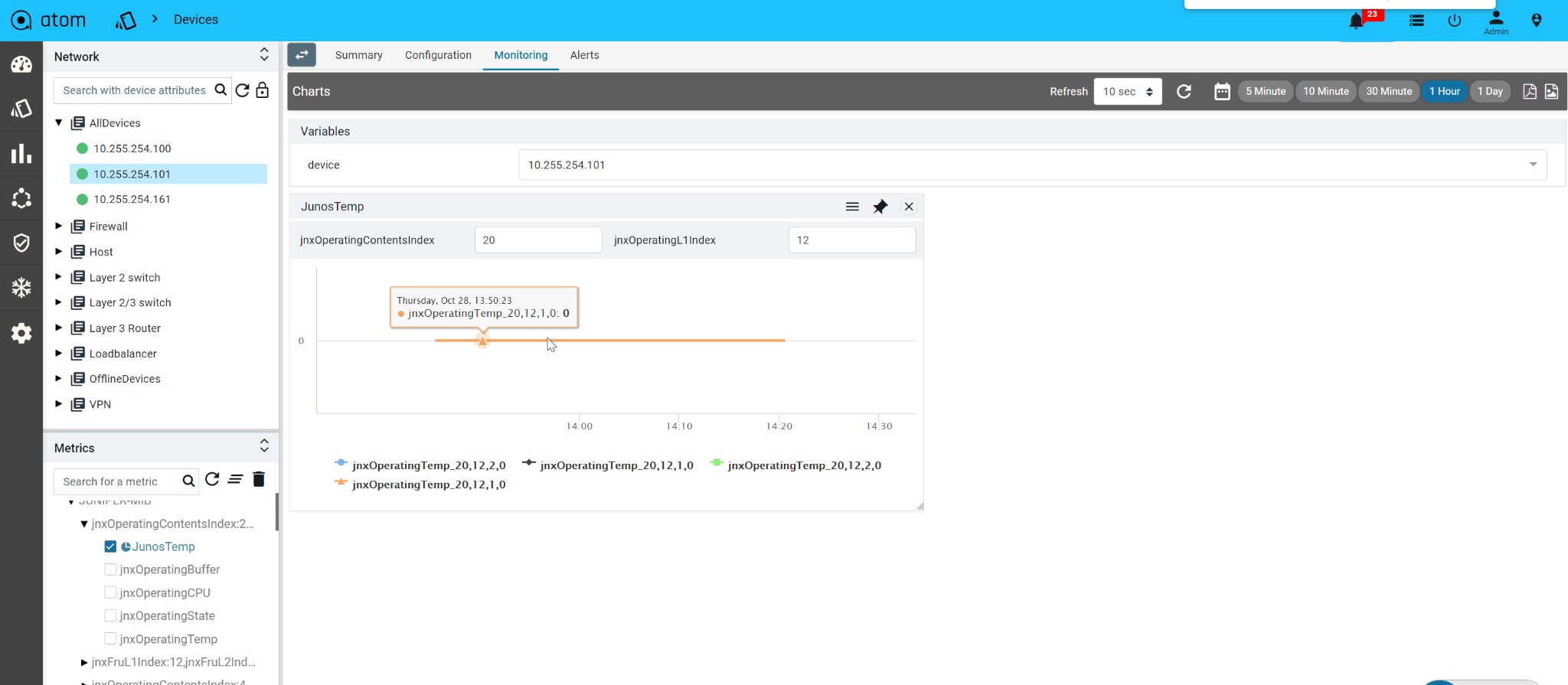

Create an Advanced Custom Chart176

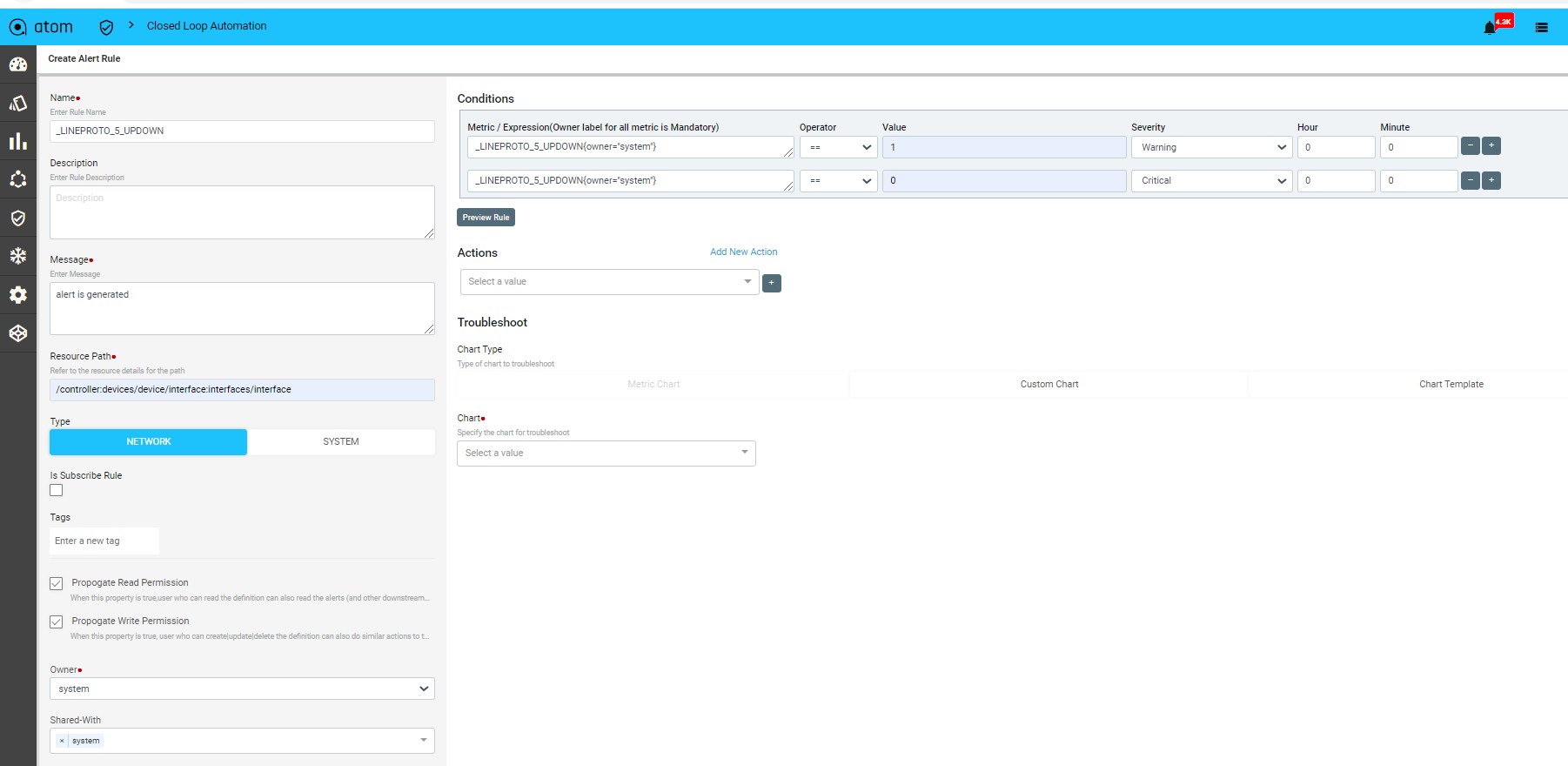

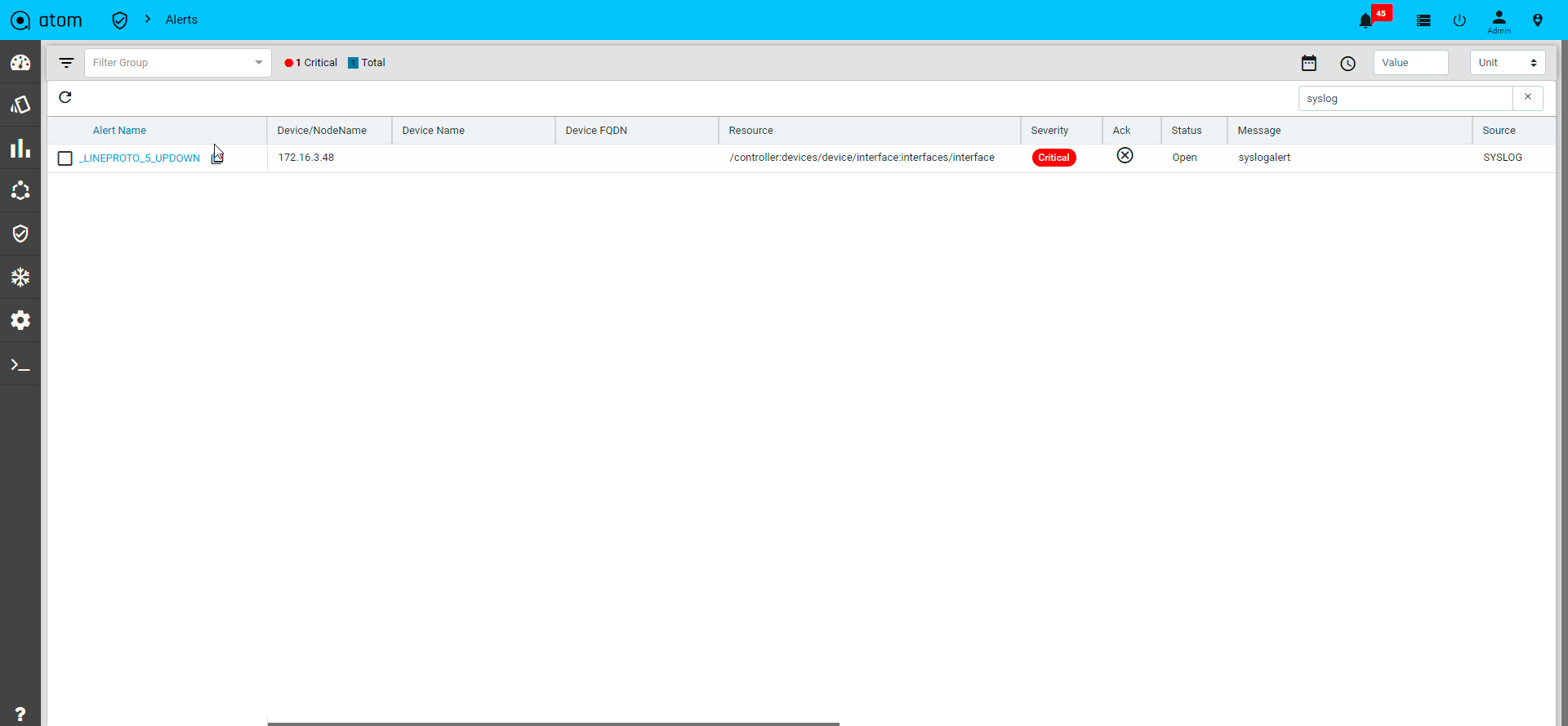

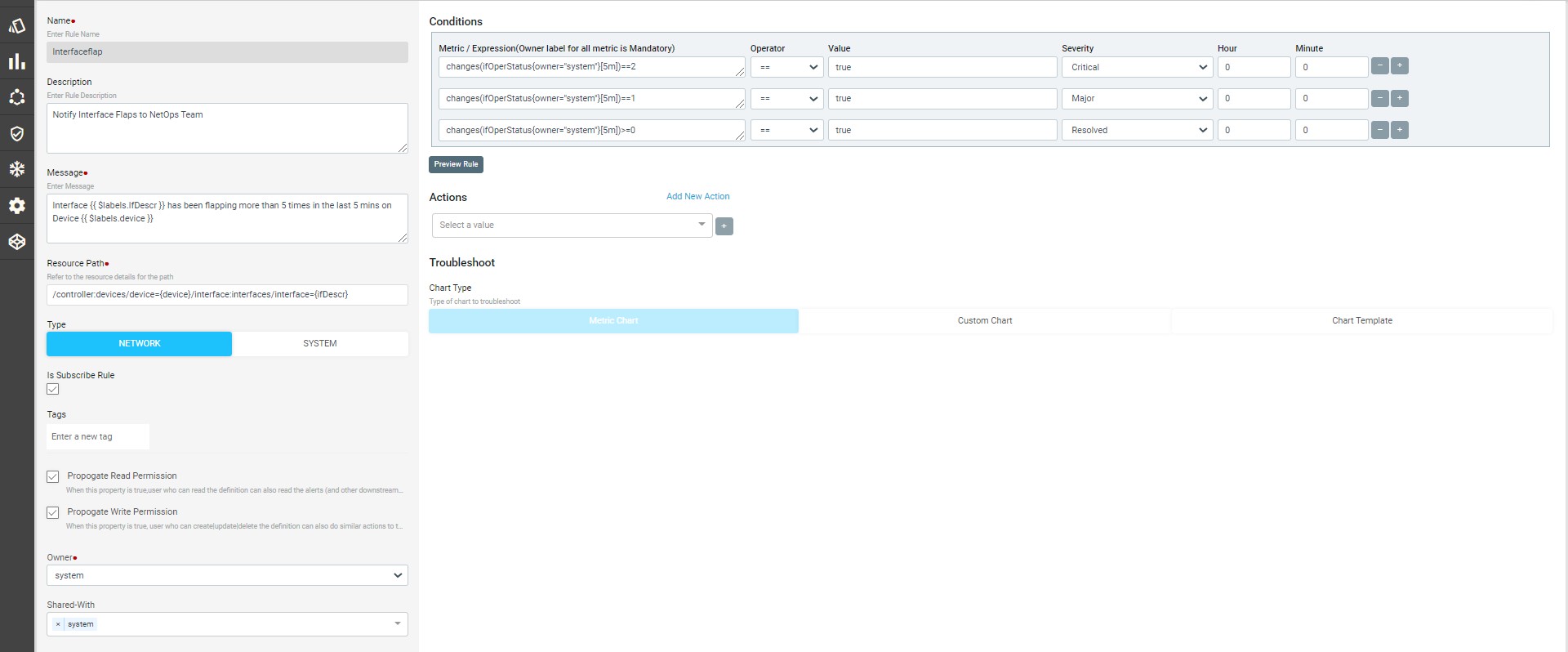

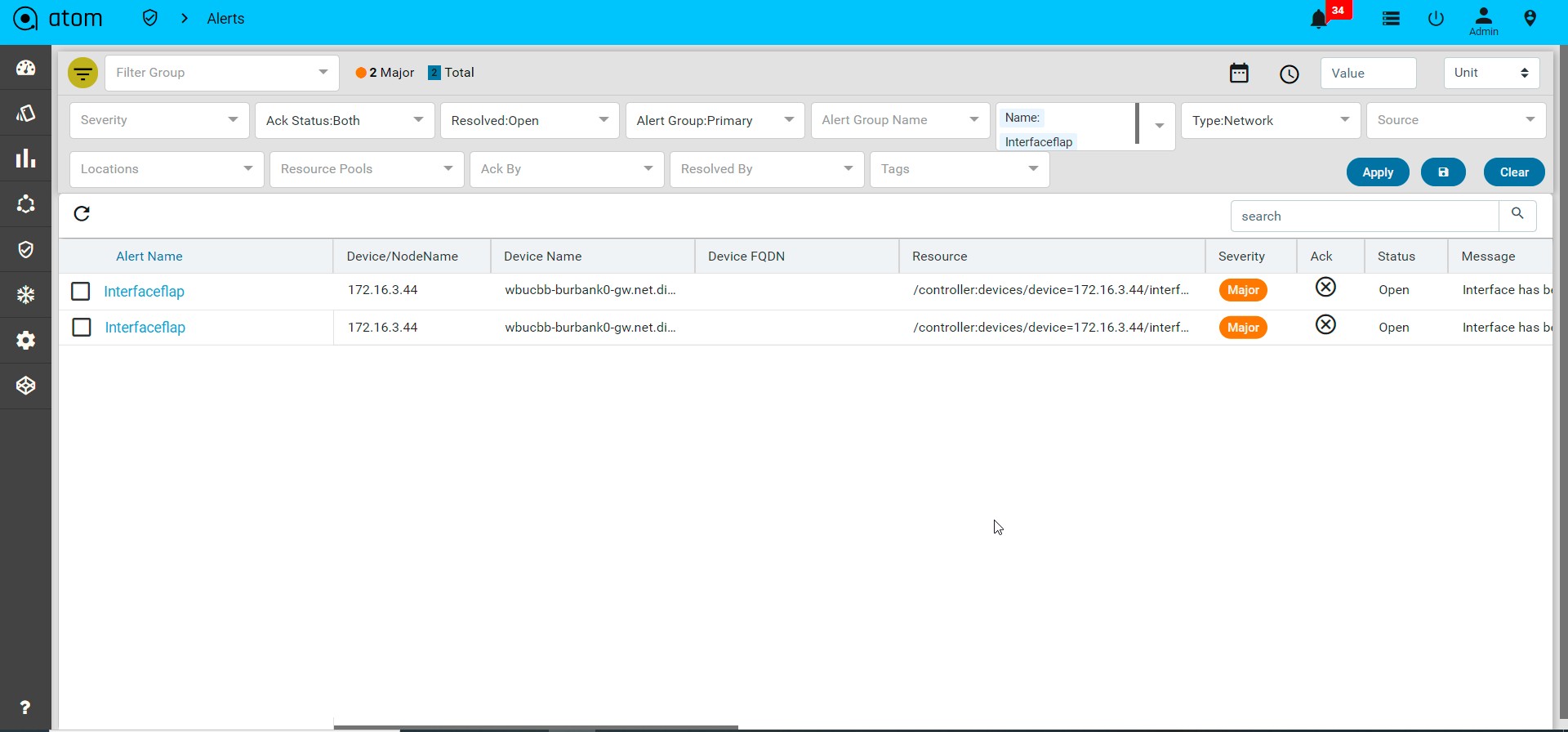

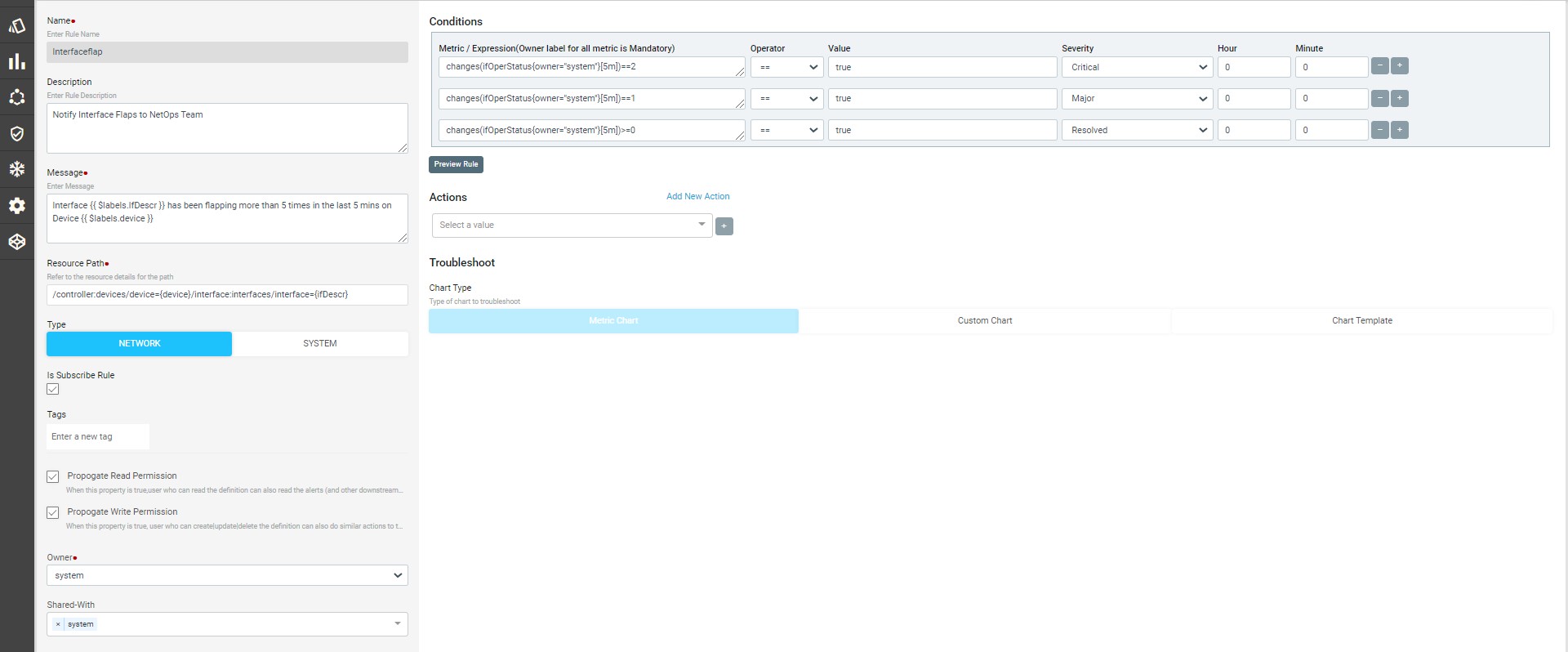

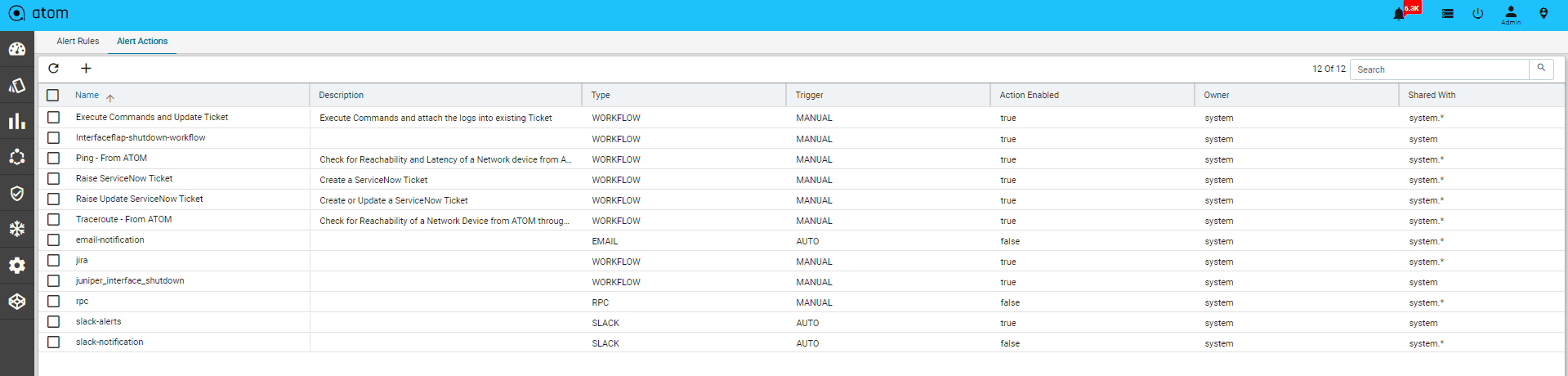

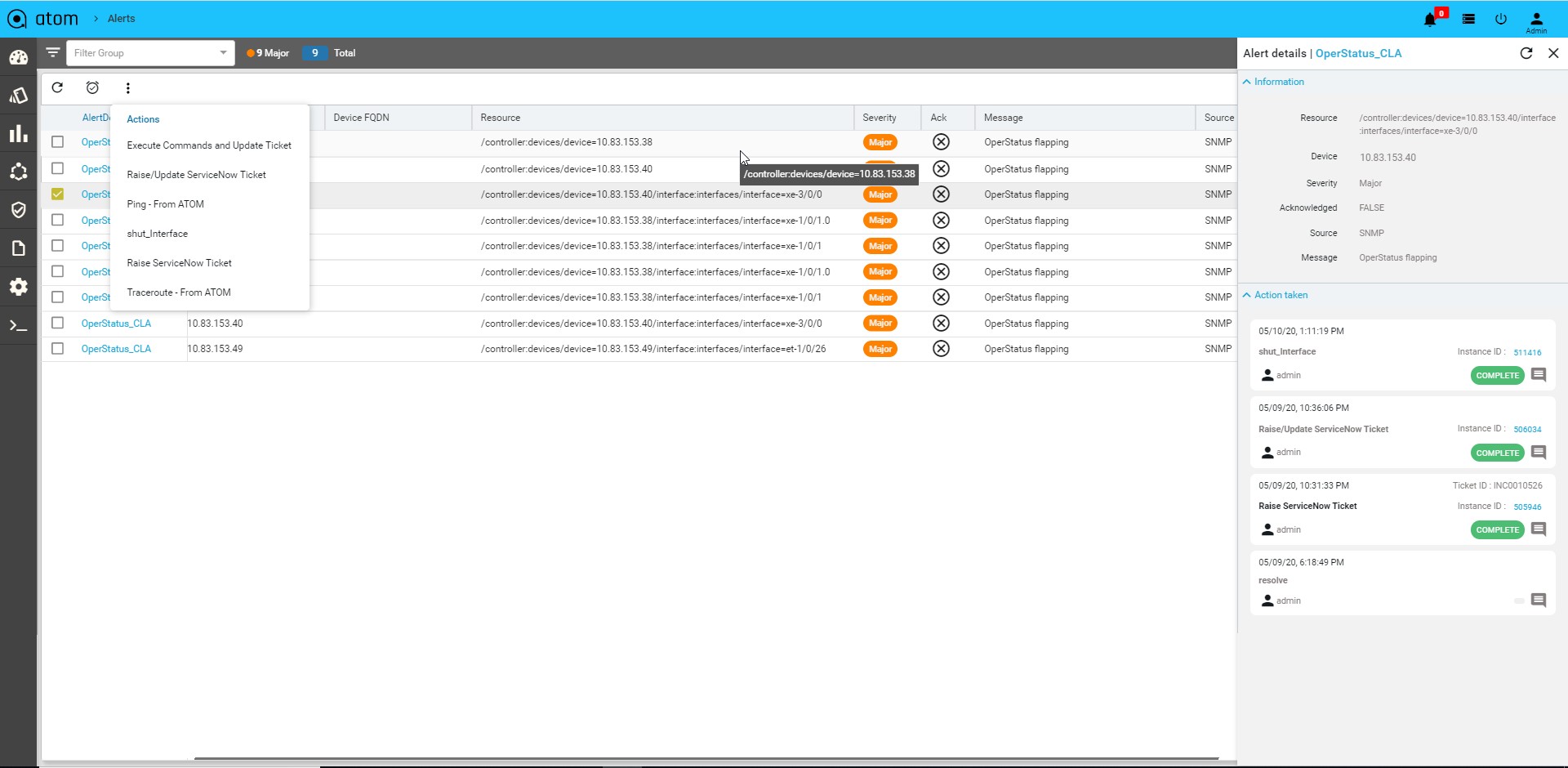

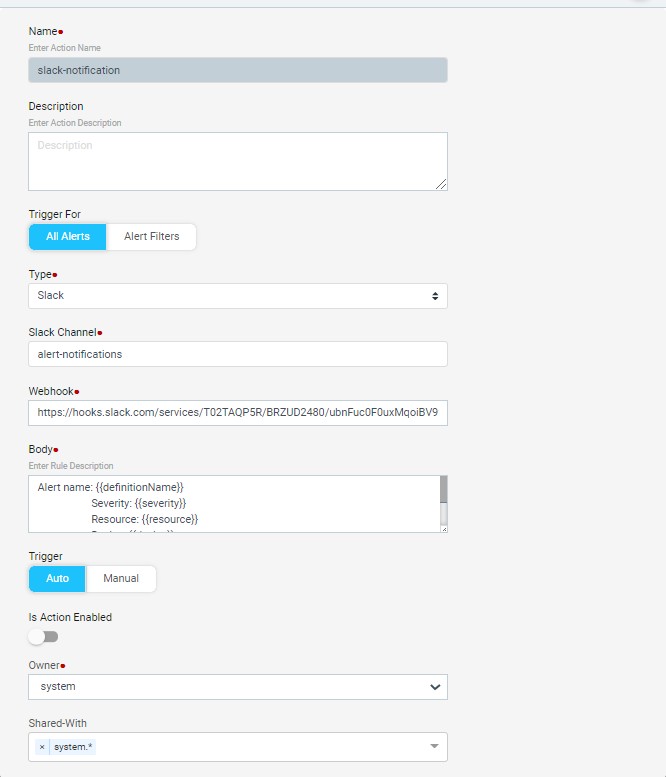

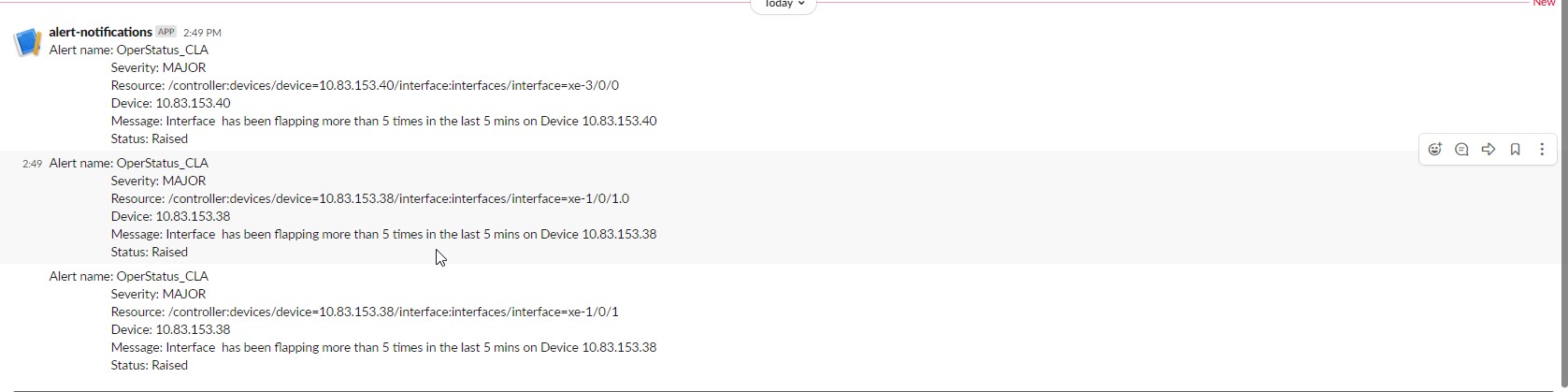

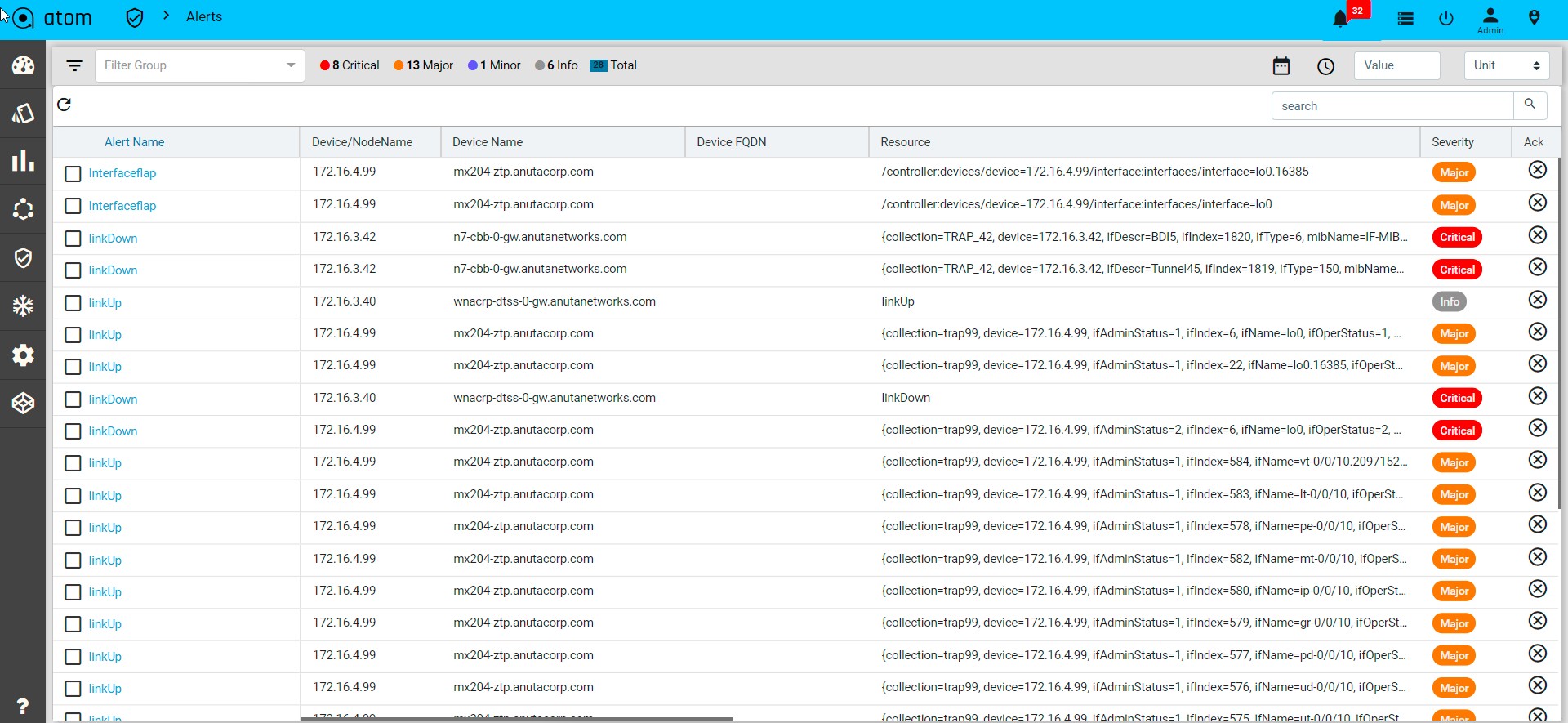

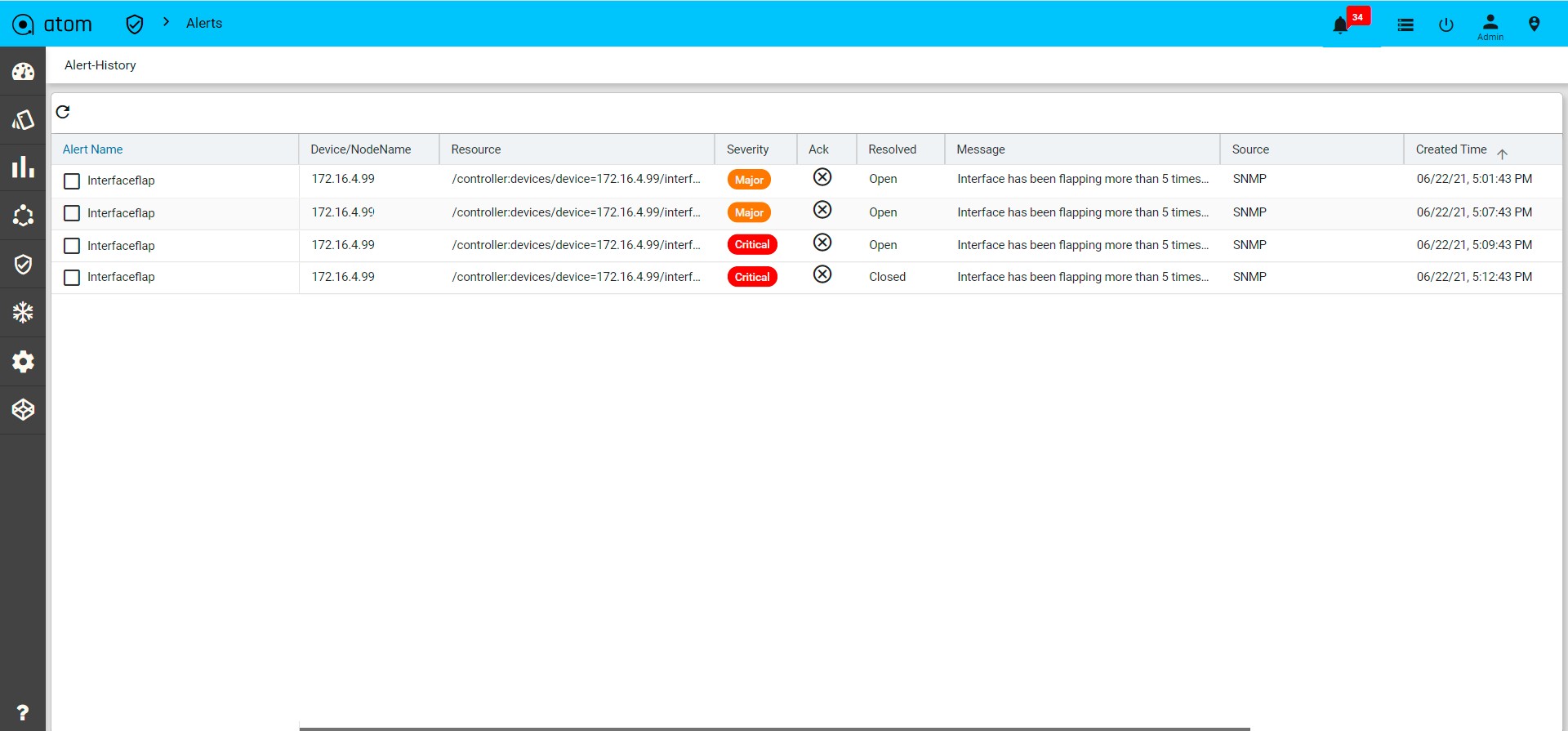

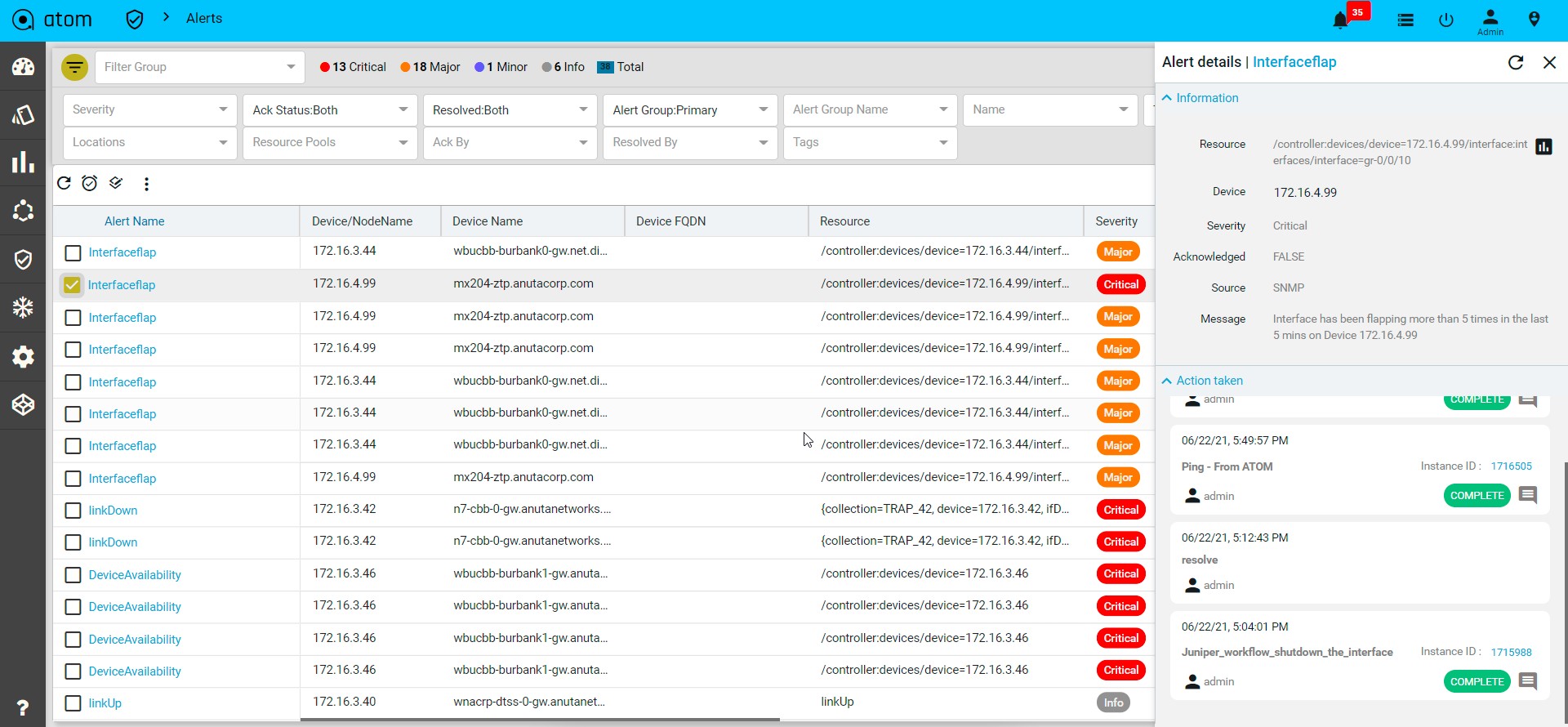

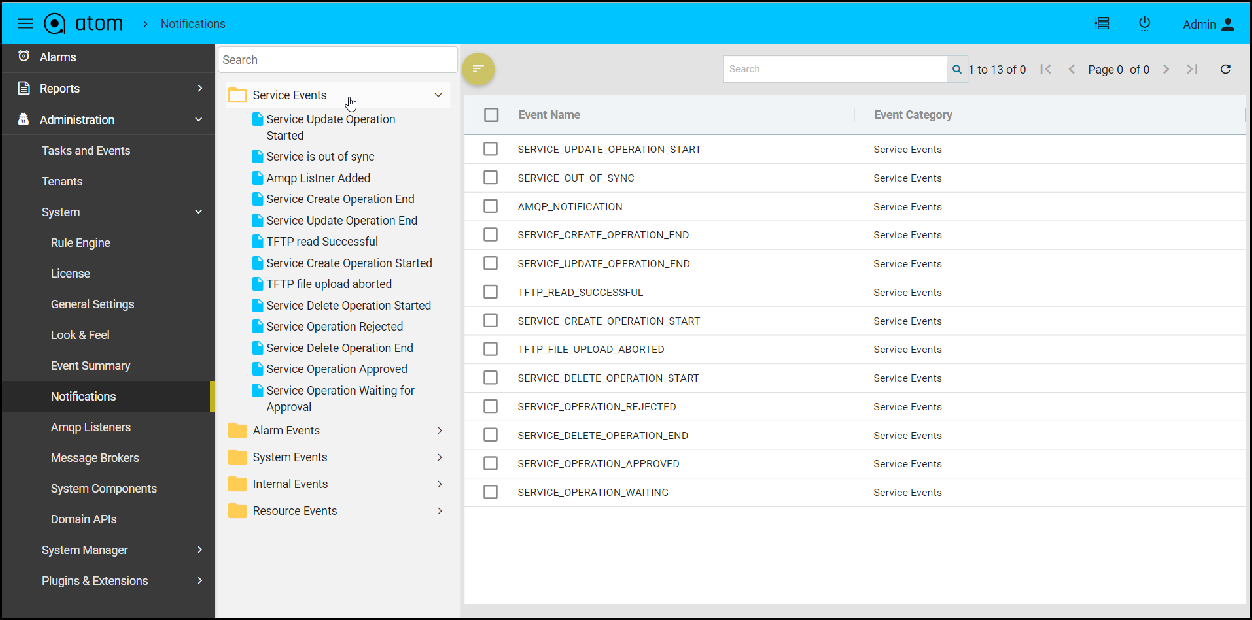

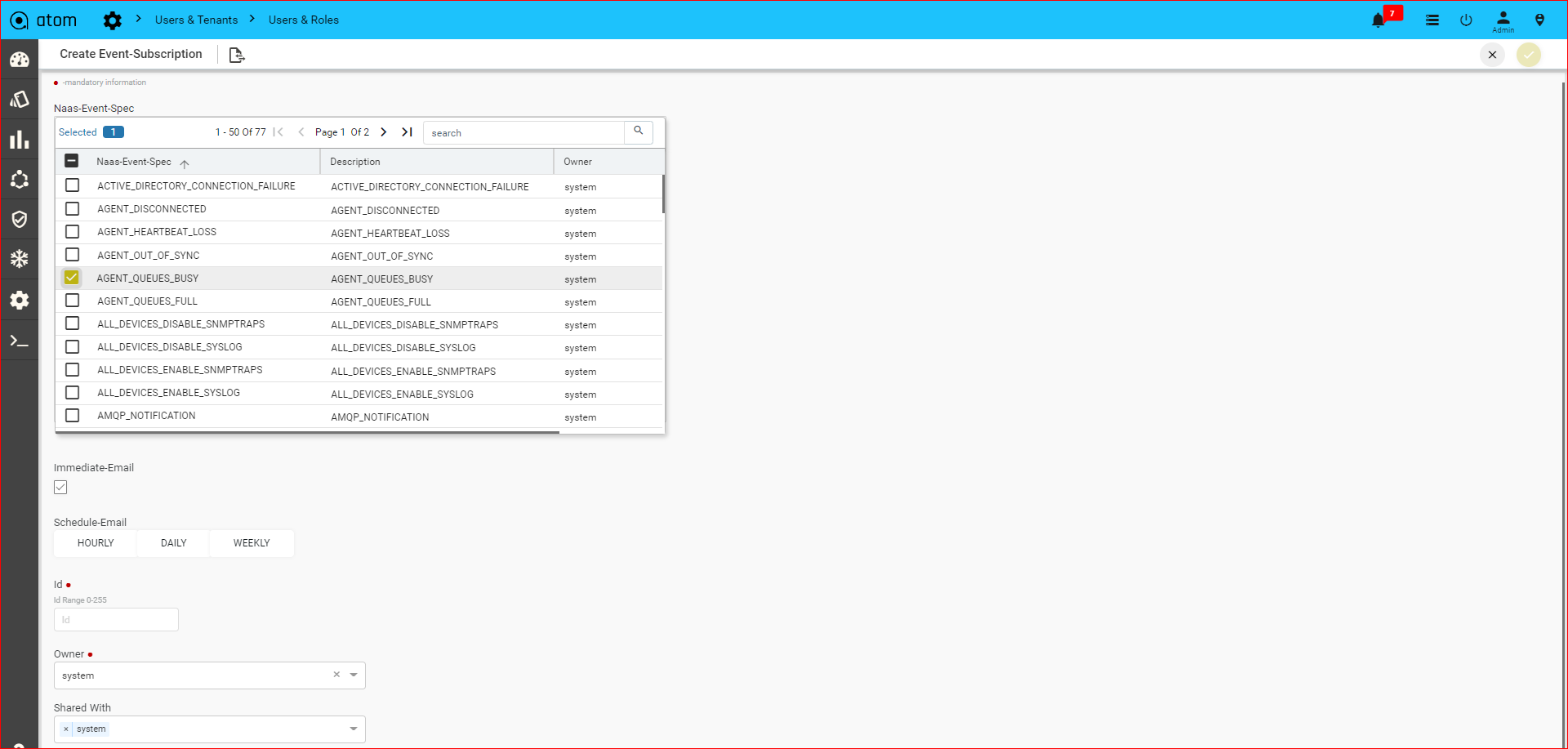

Alert, Actions & Closed Loop Automation183

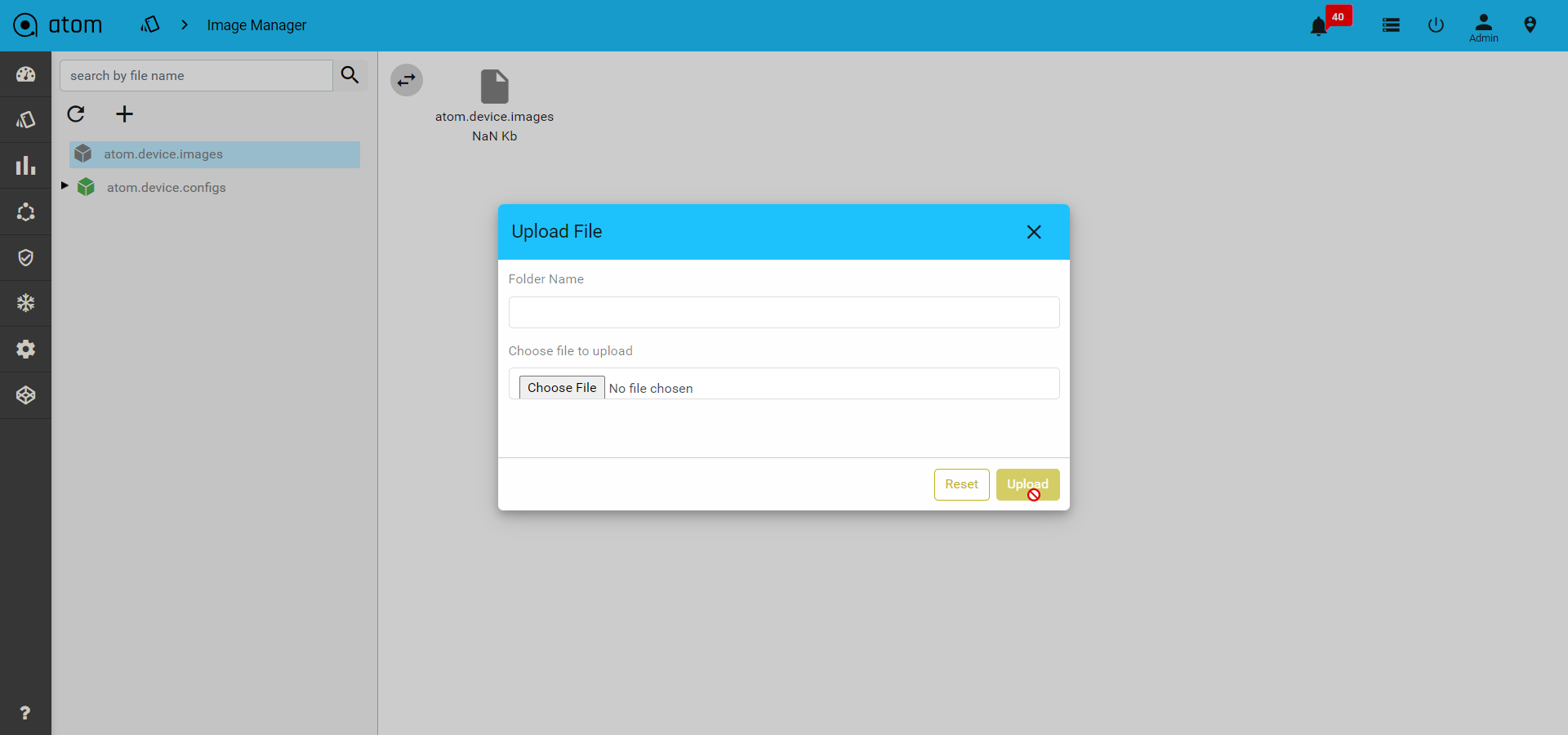

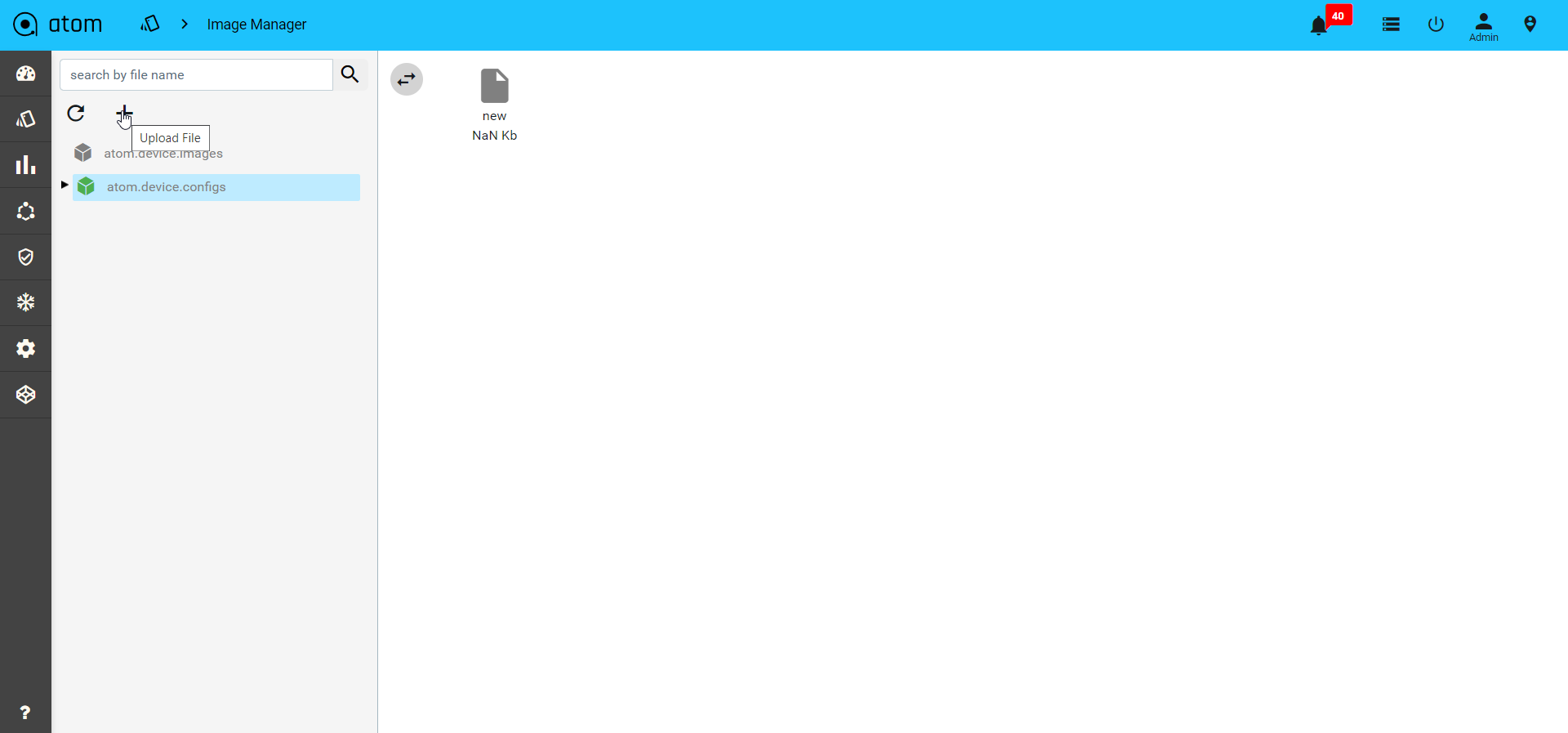

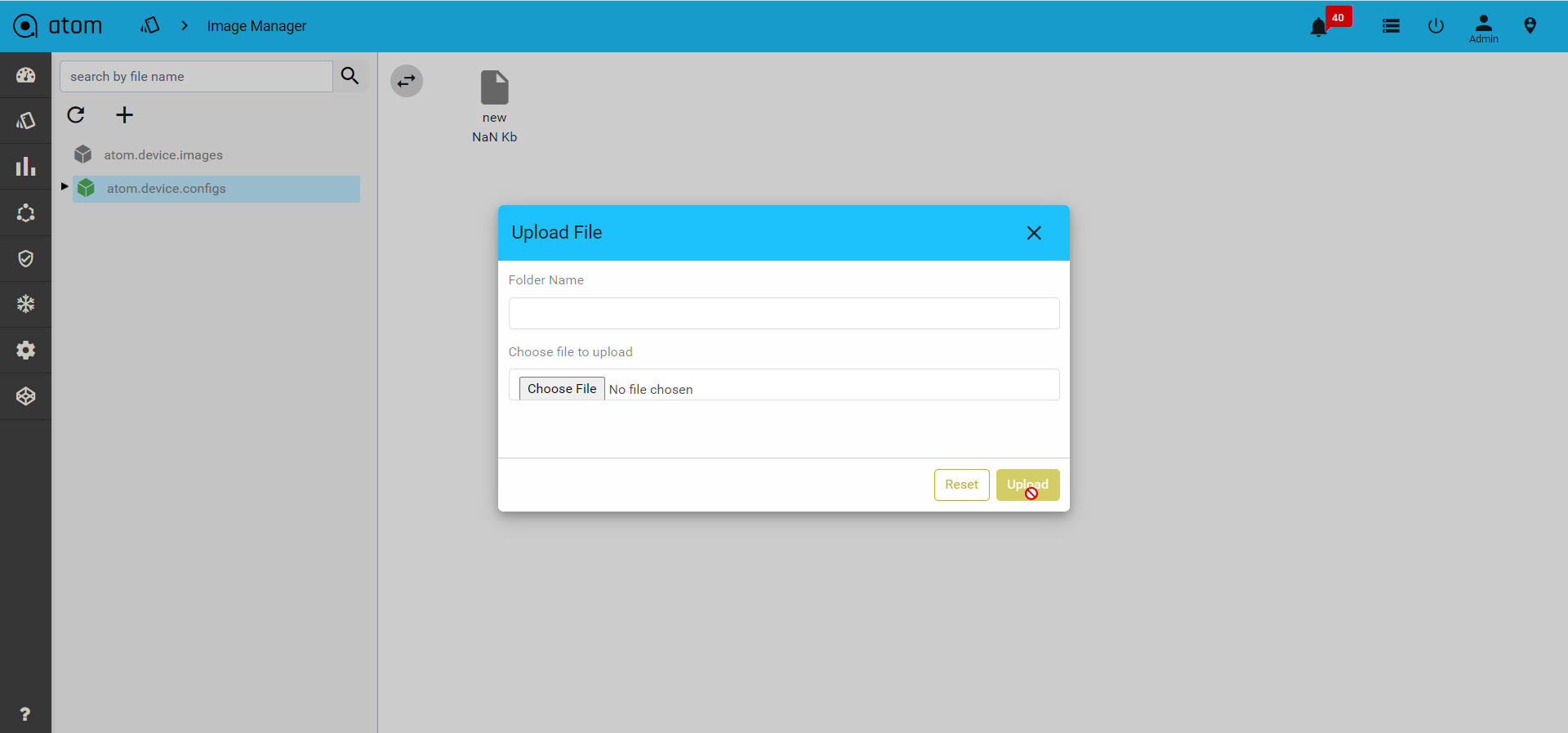

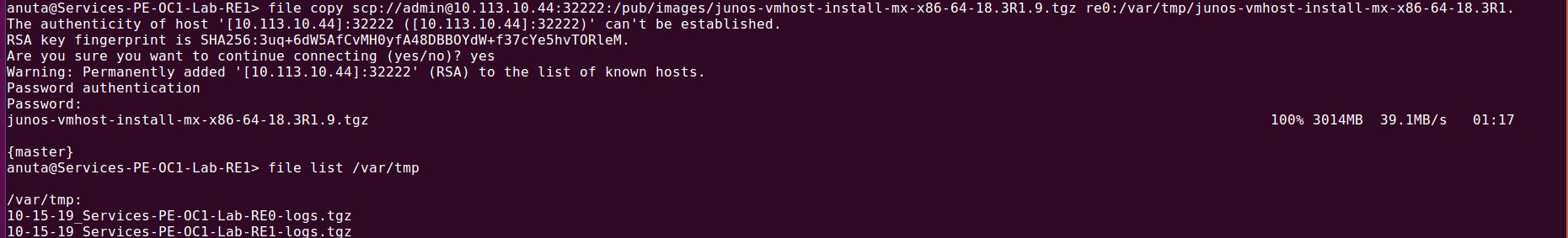

Software Image Management202

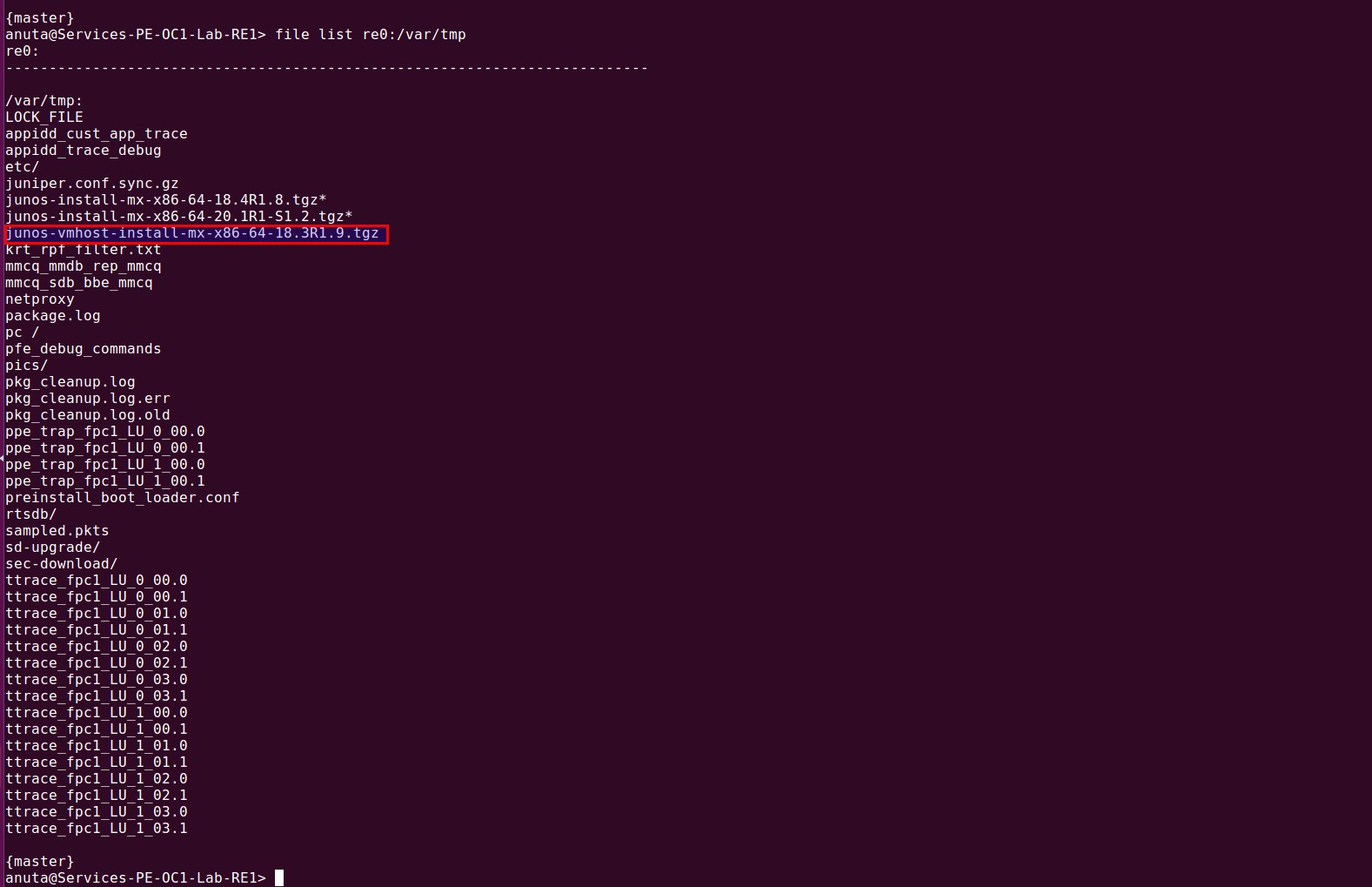

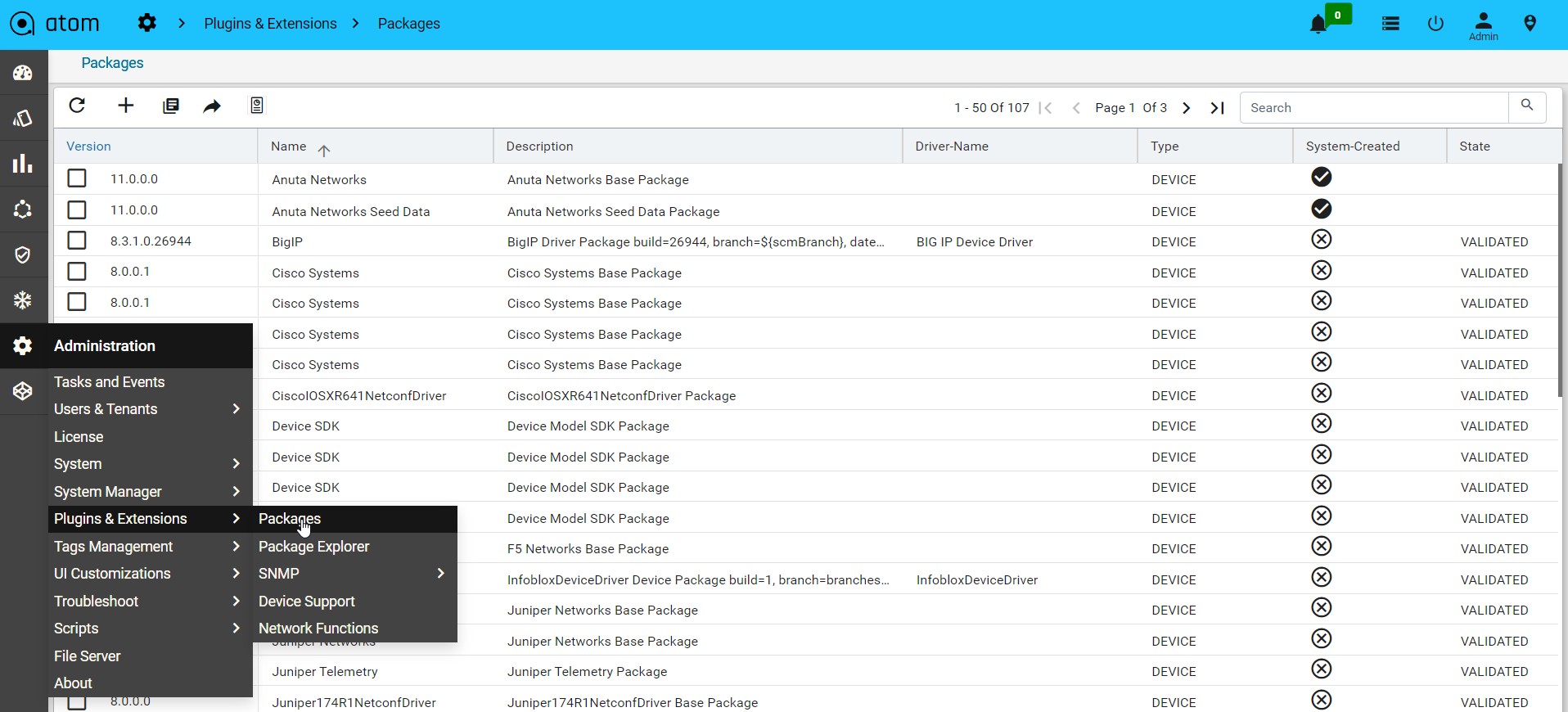

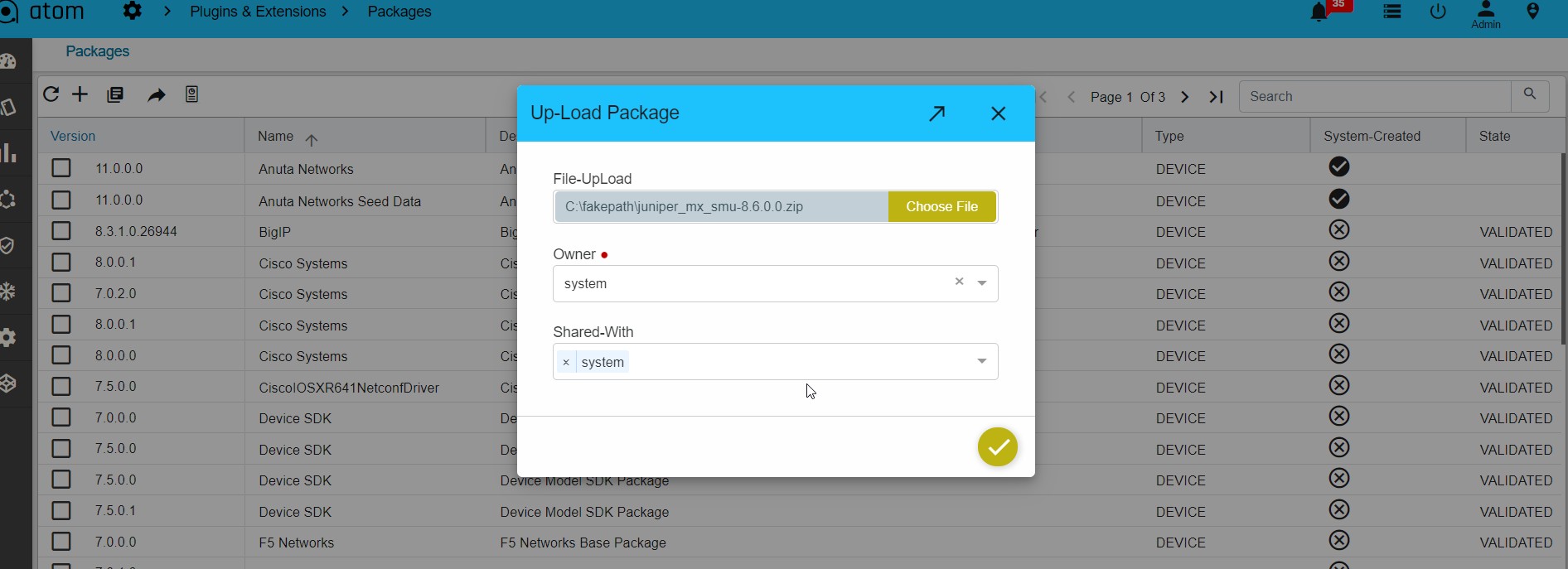

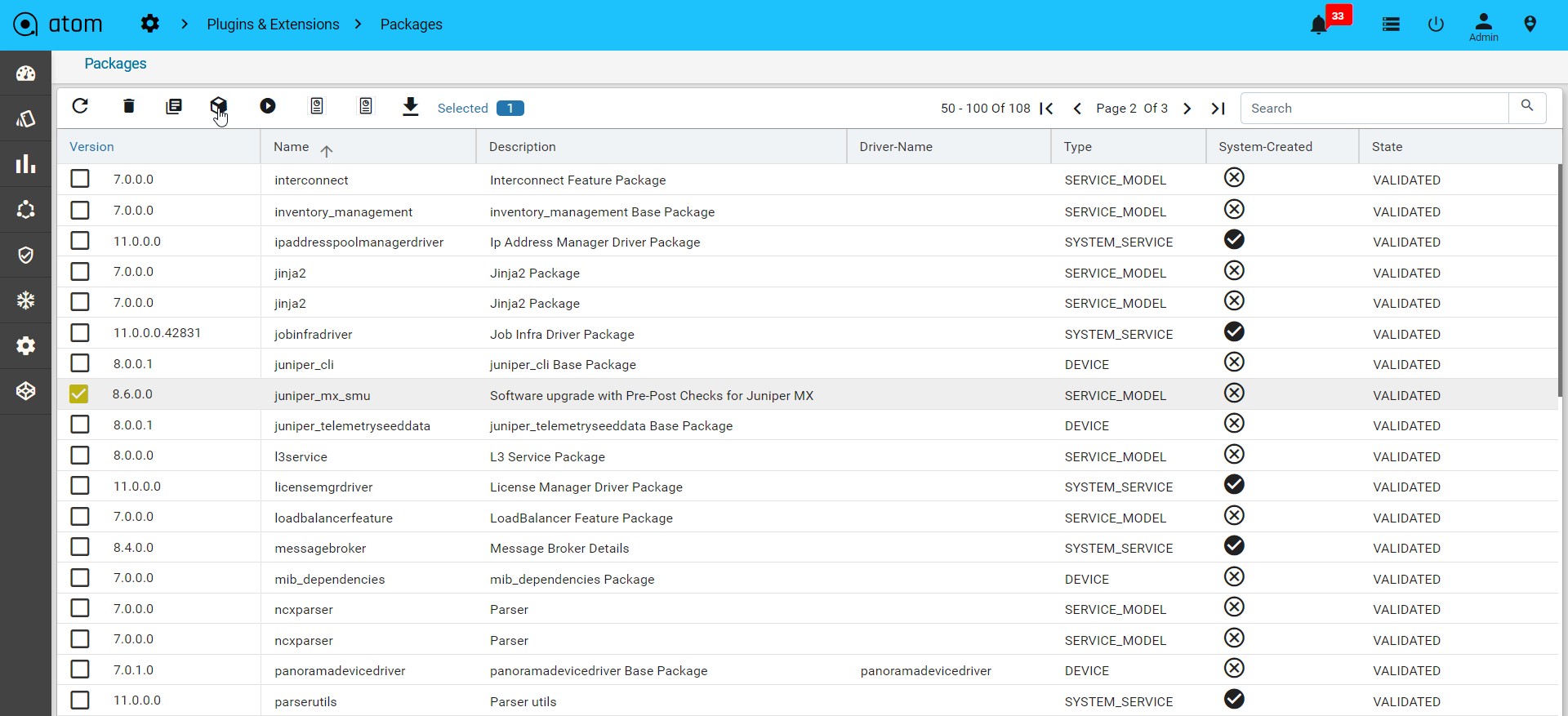

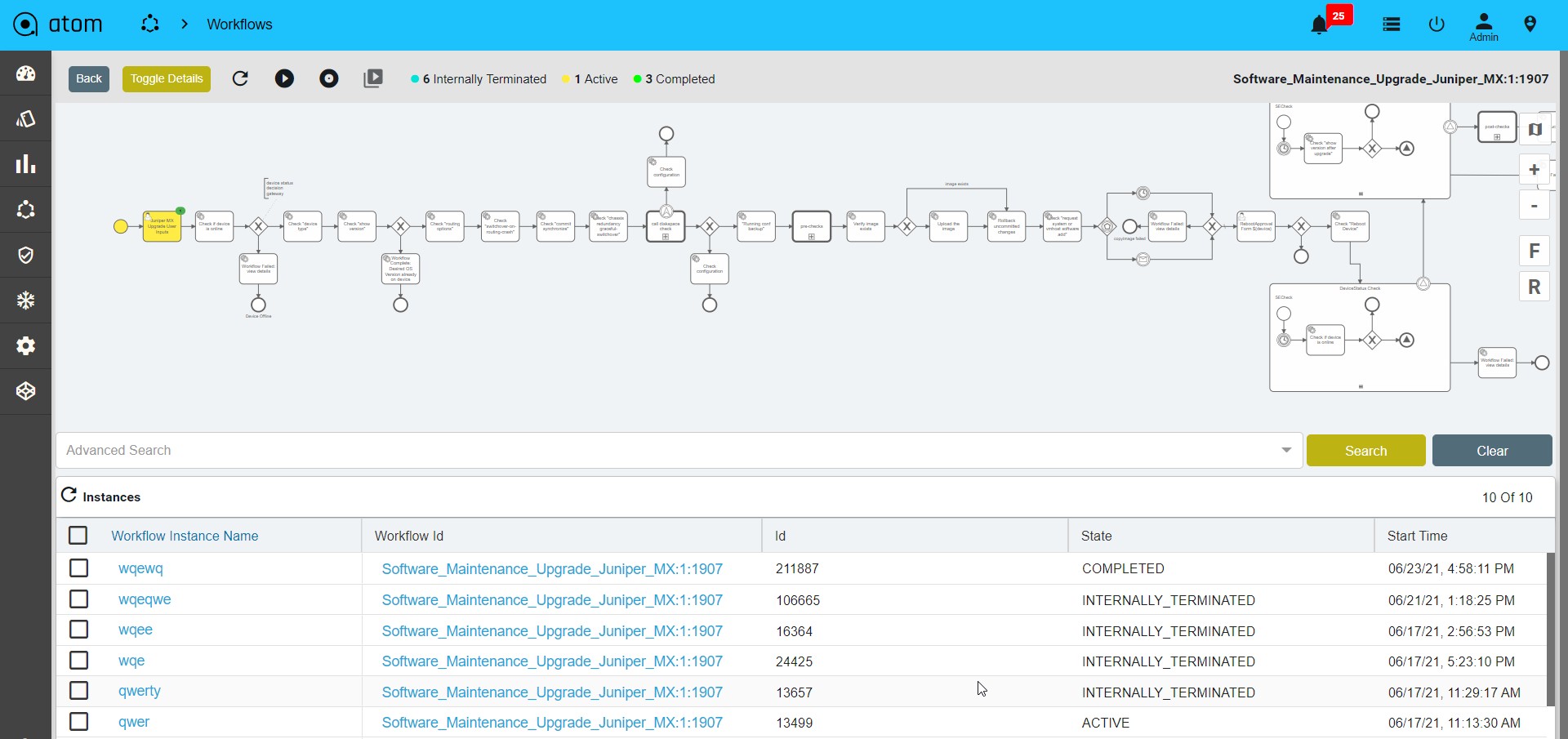

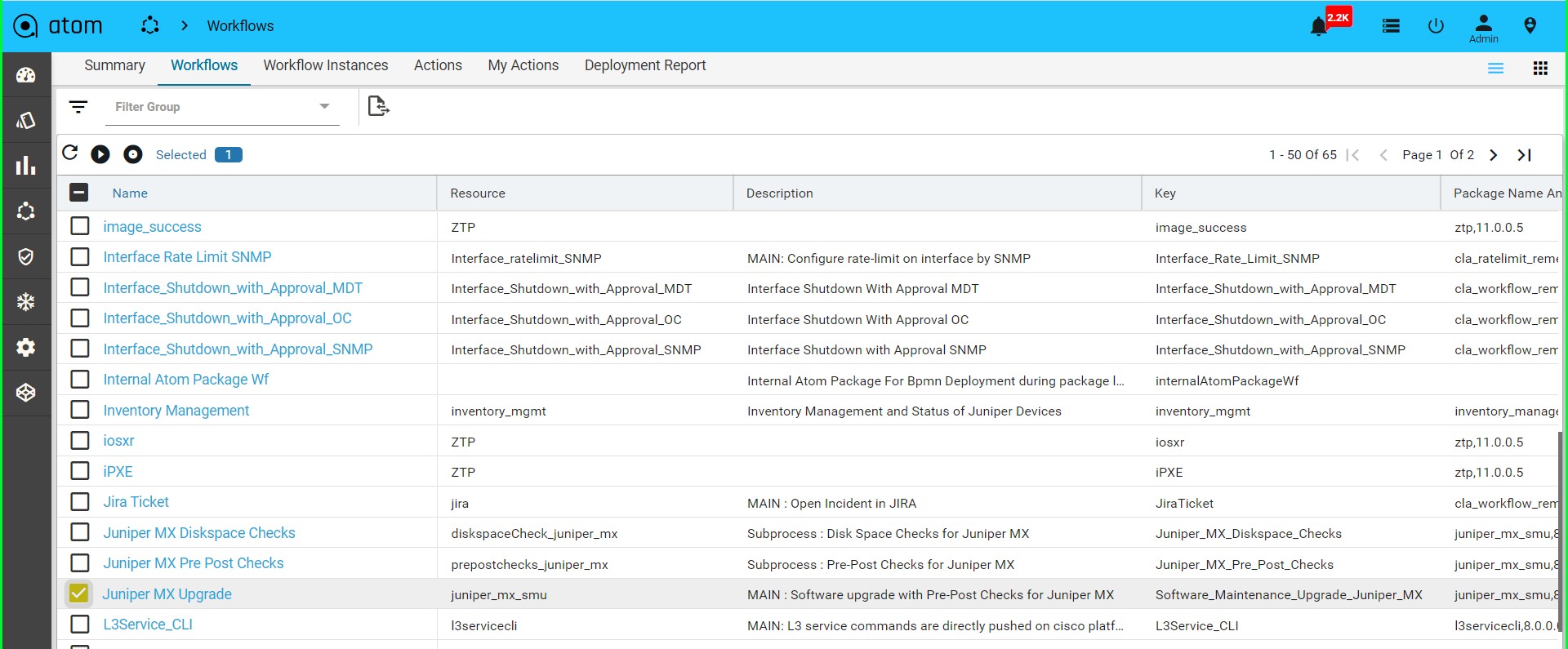

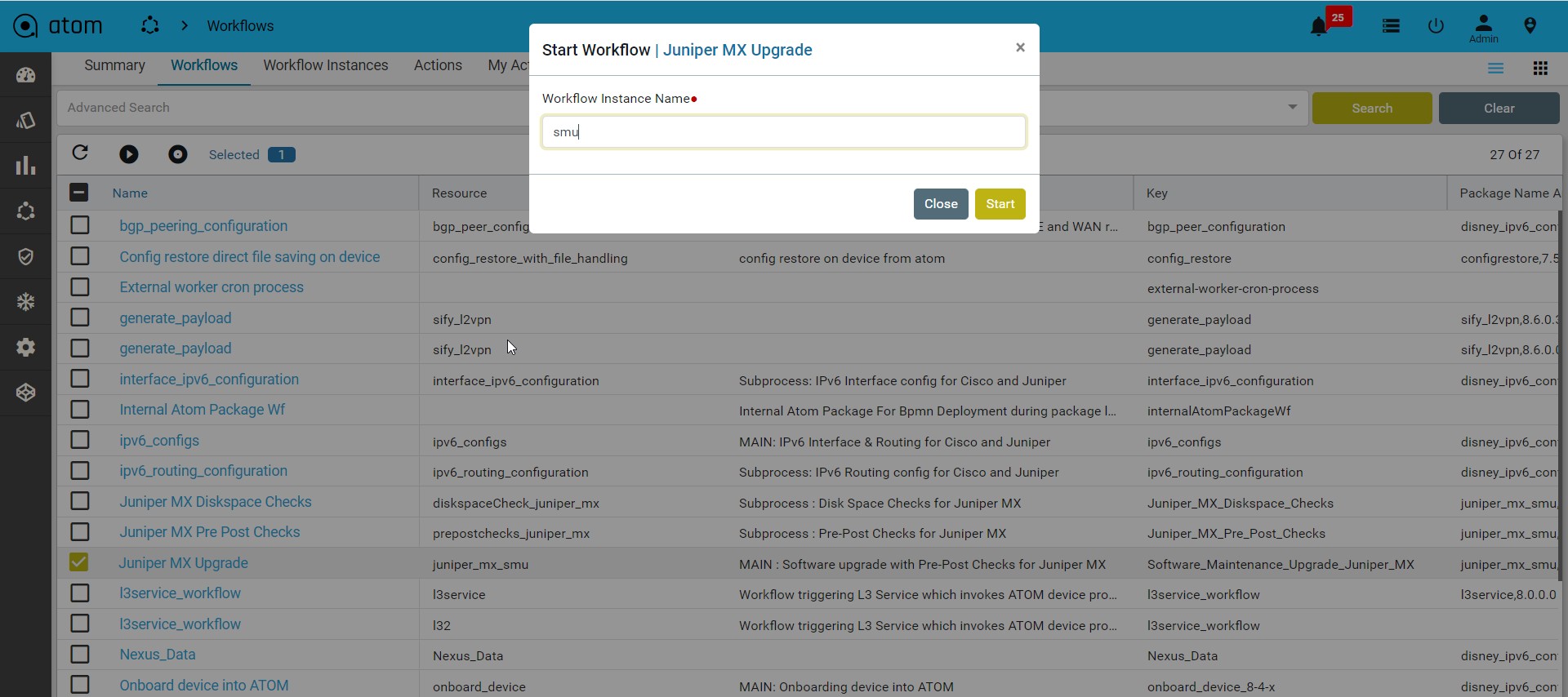

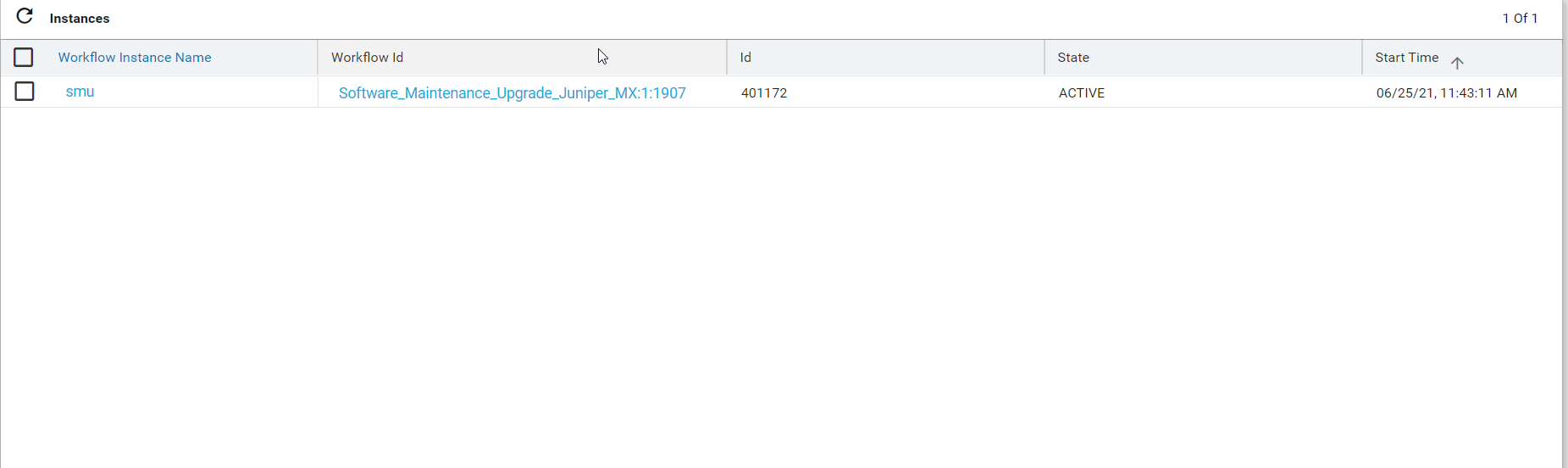

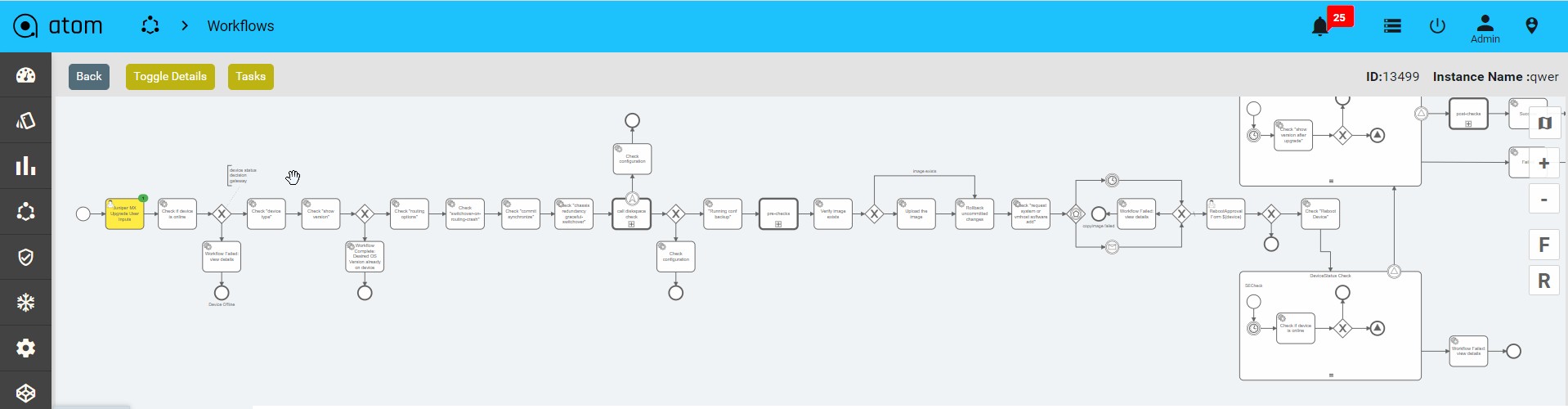

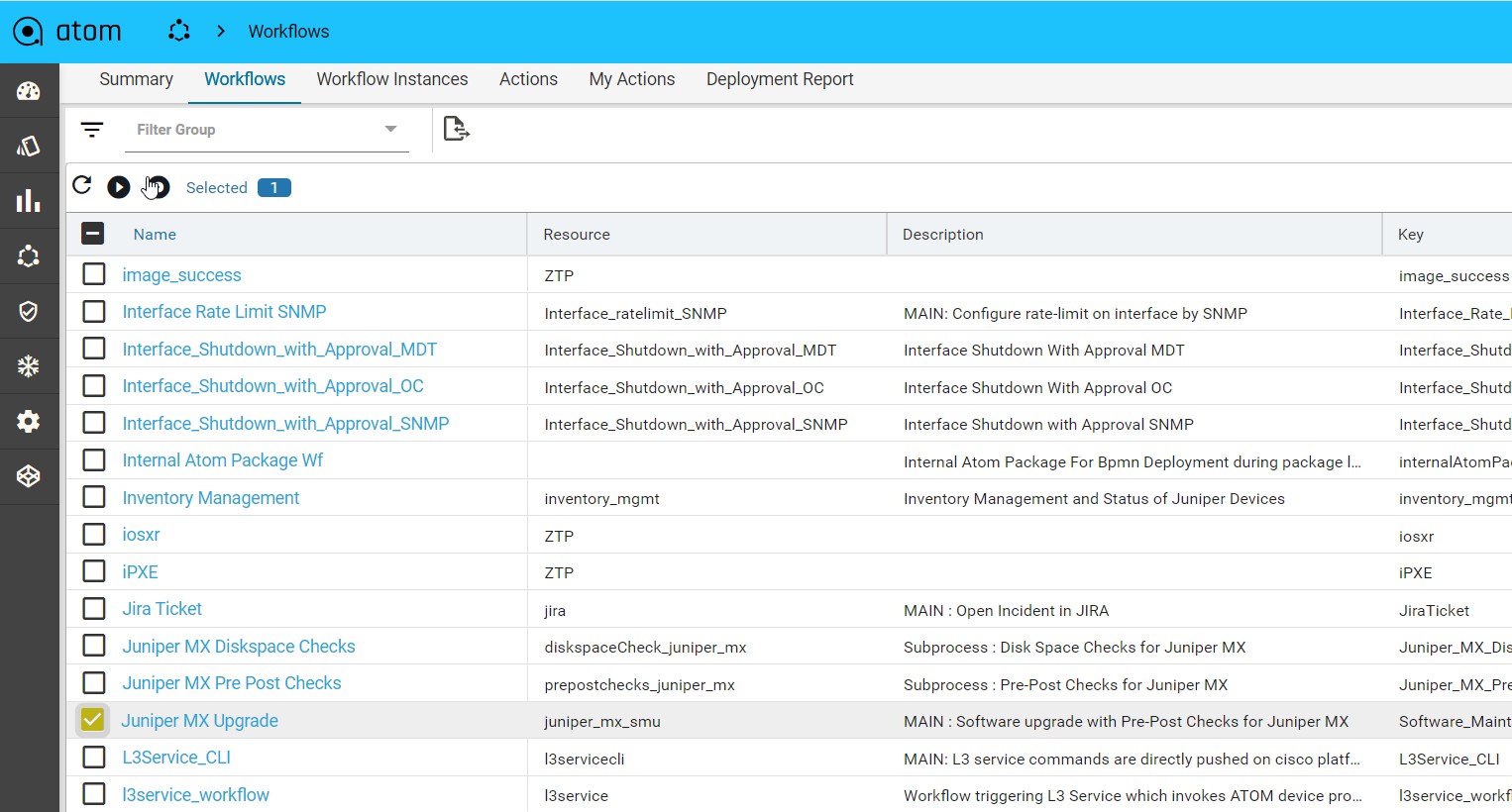

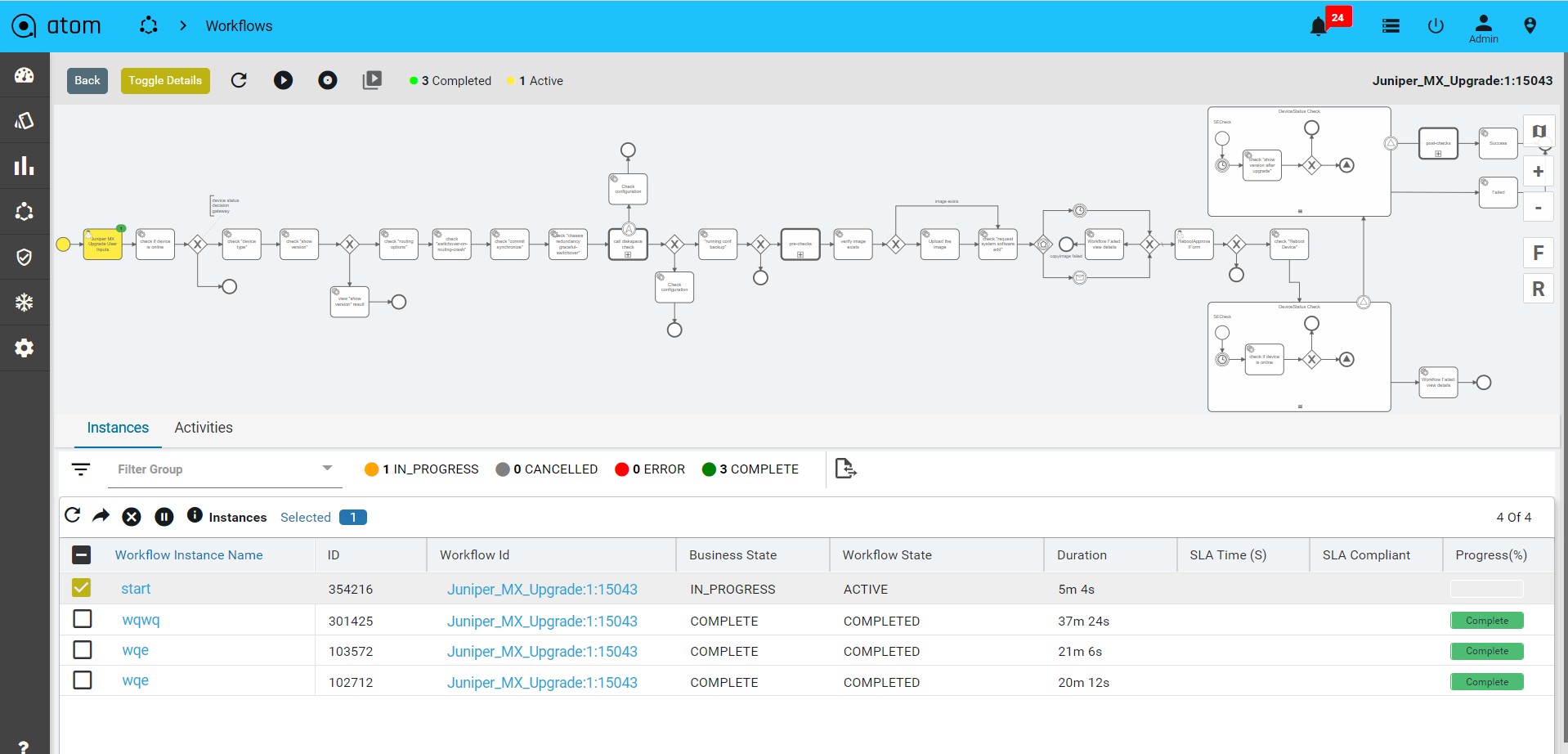

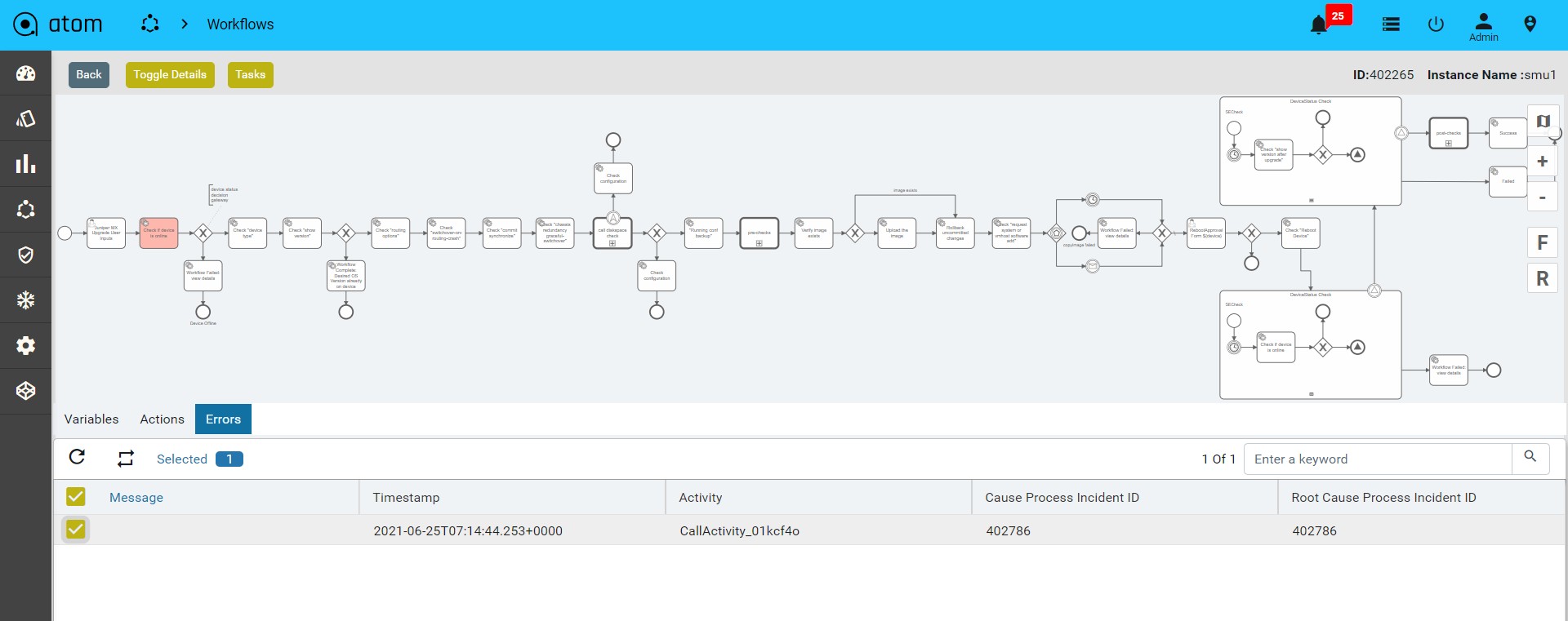

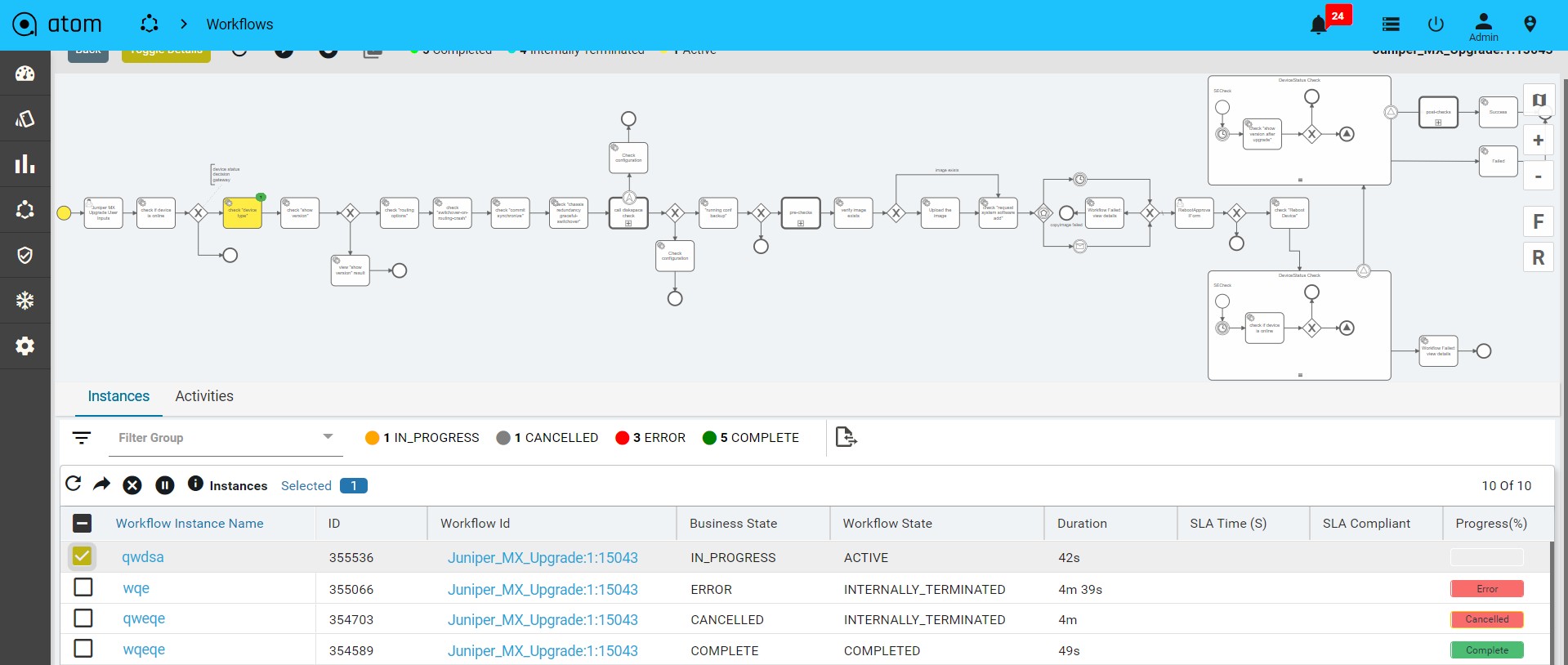

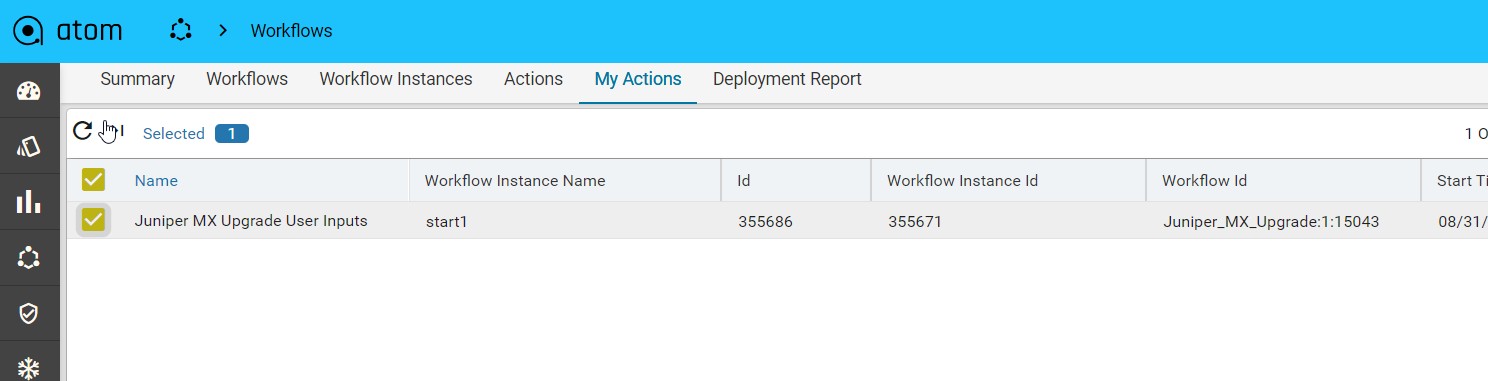

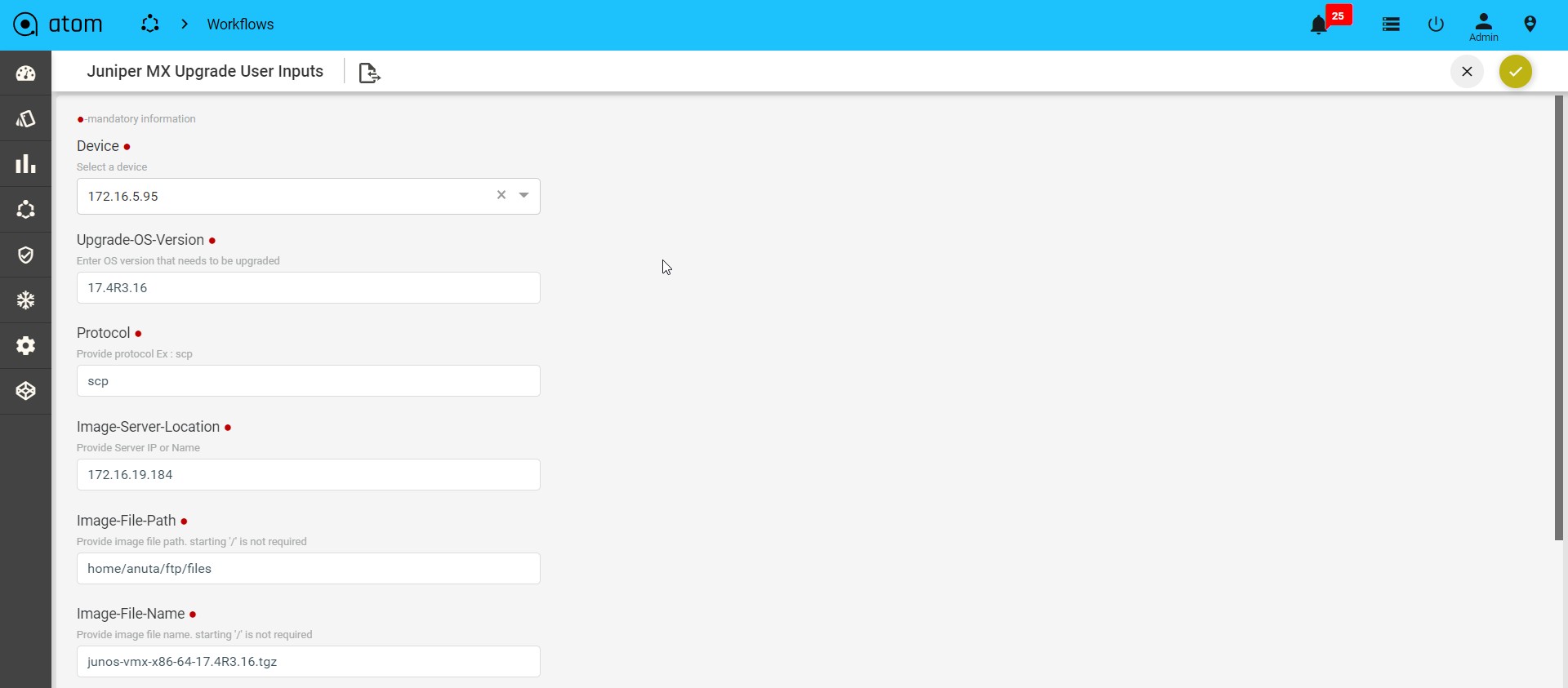

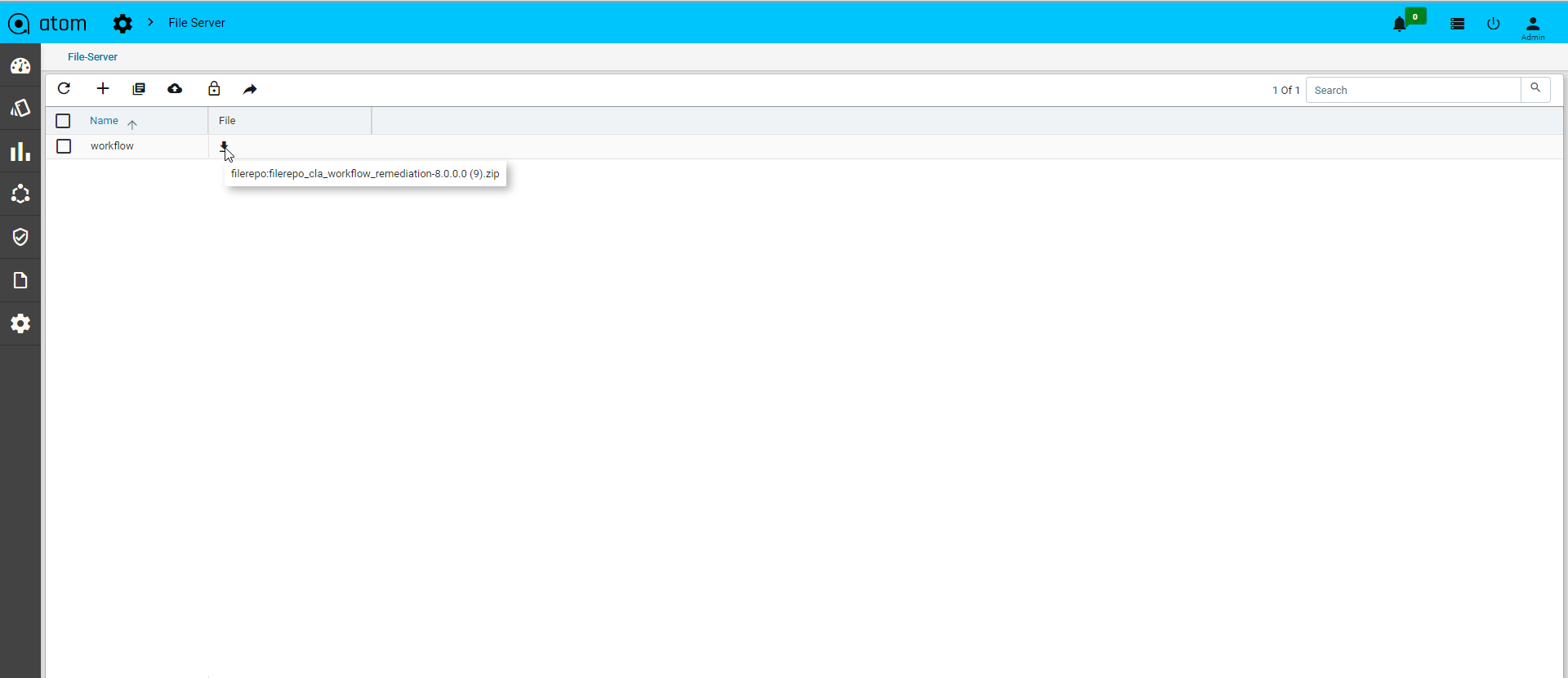

Image Upgrade Workflow

Image Repository

Software Version Compliance

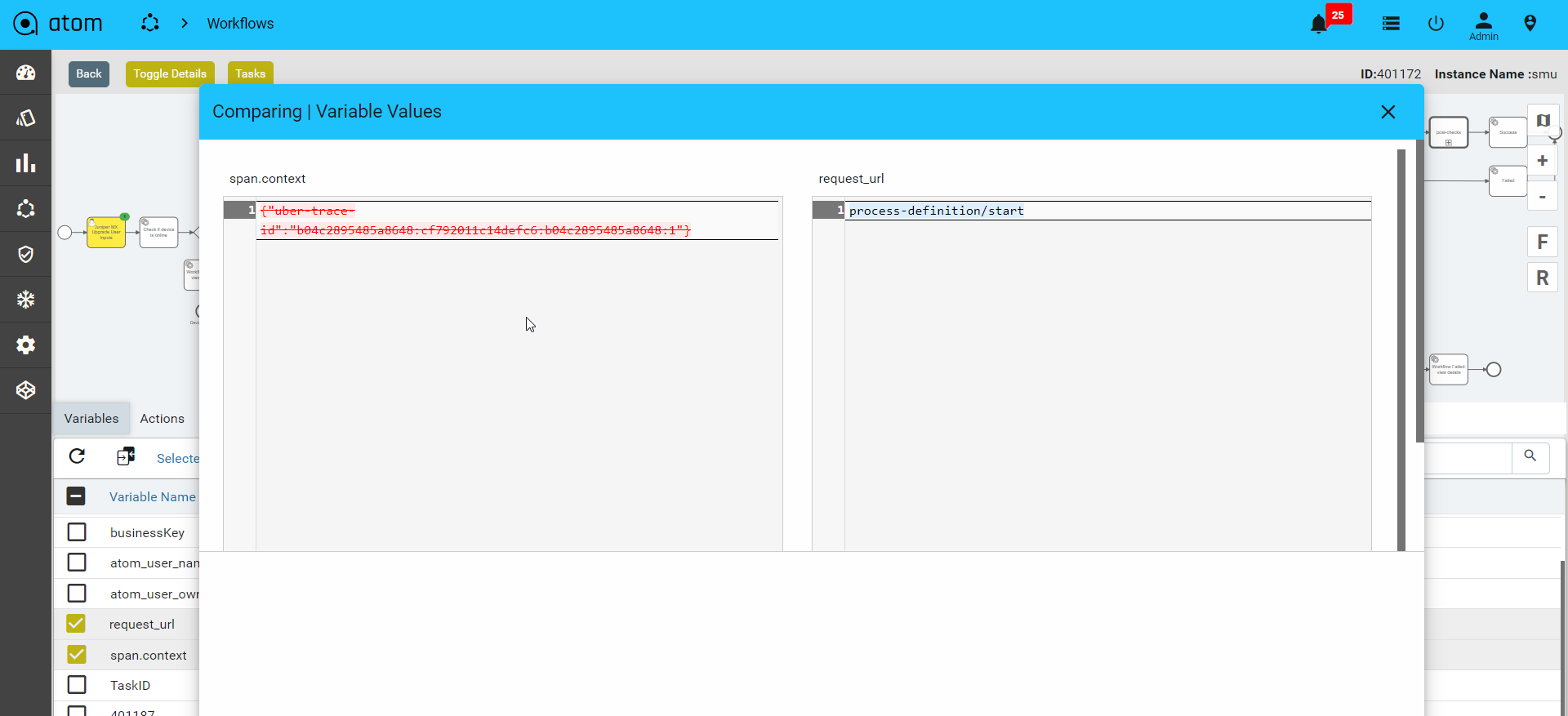

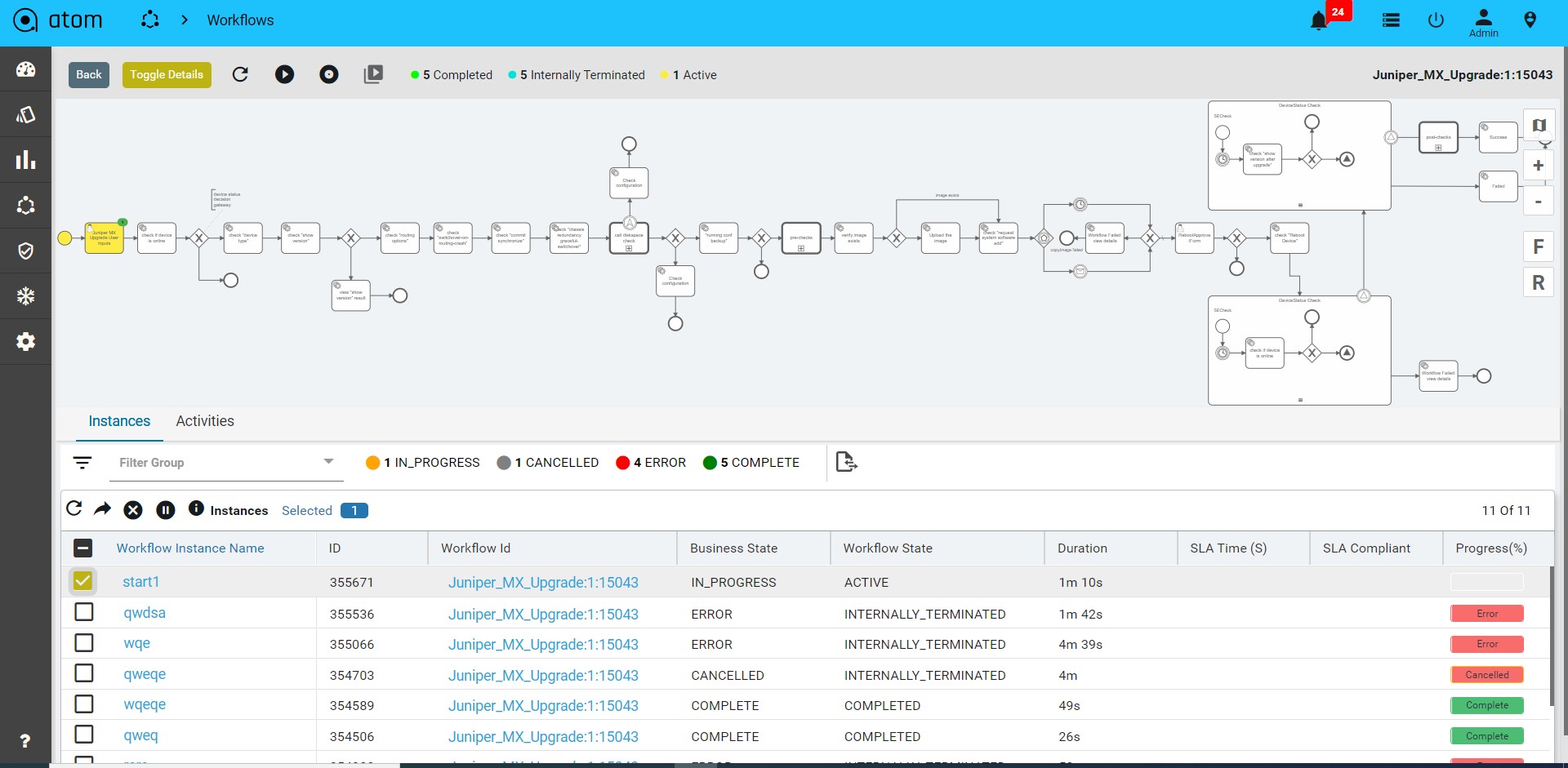

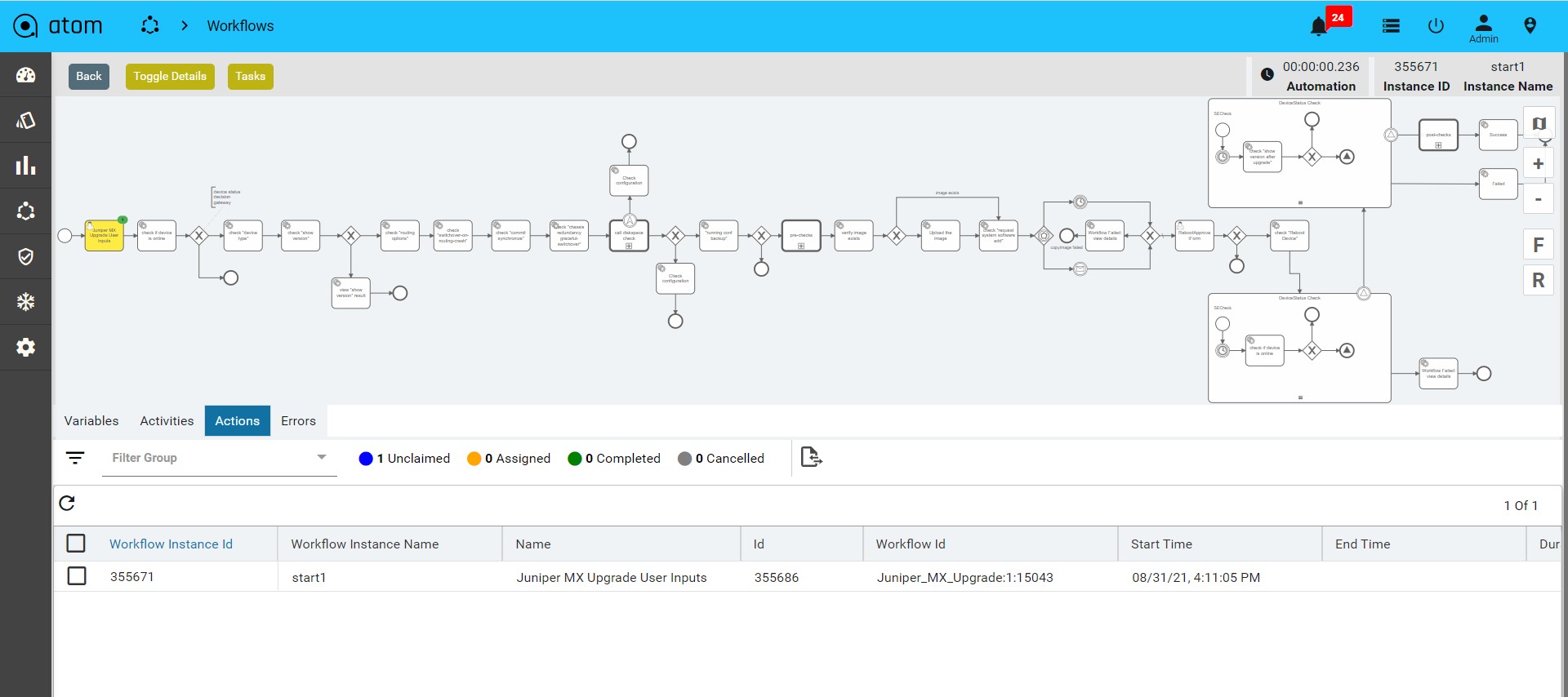

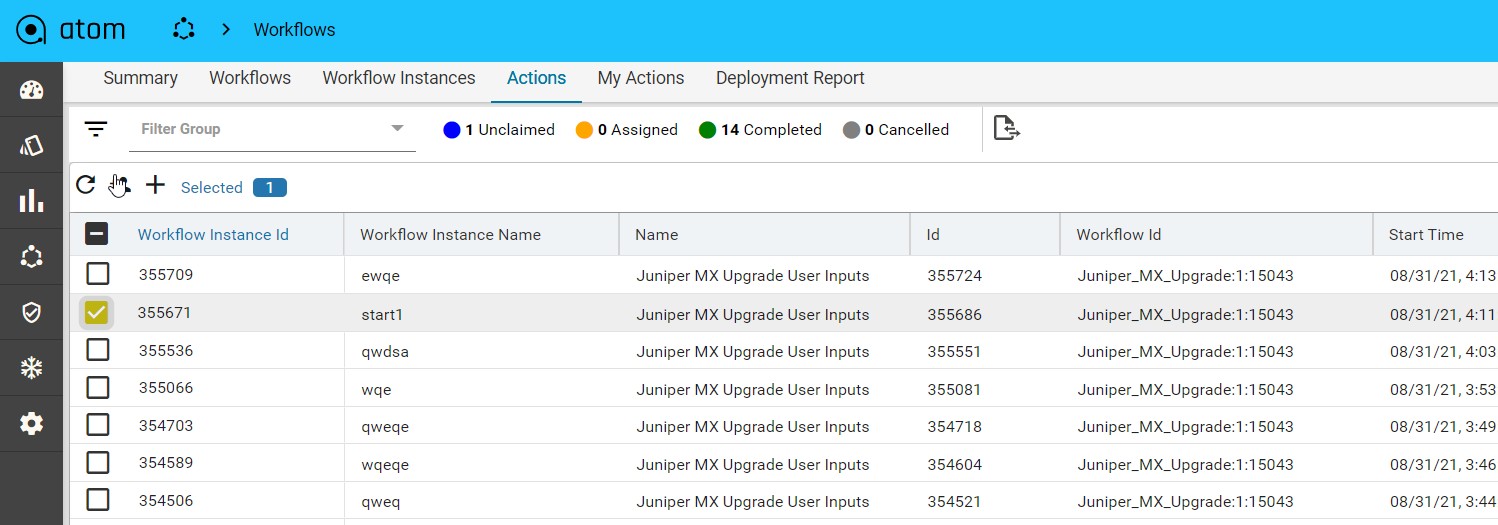

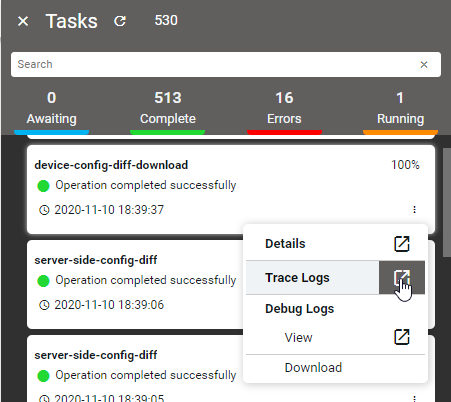

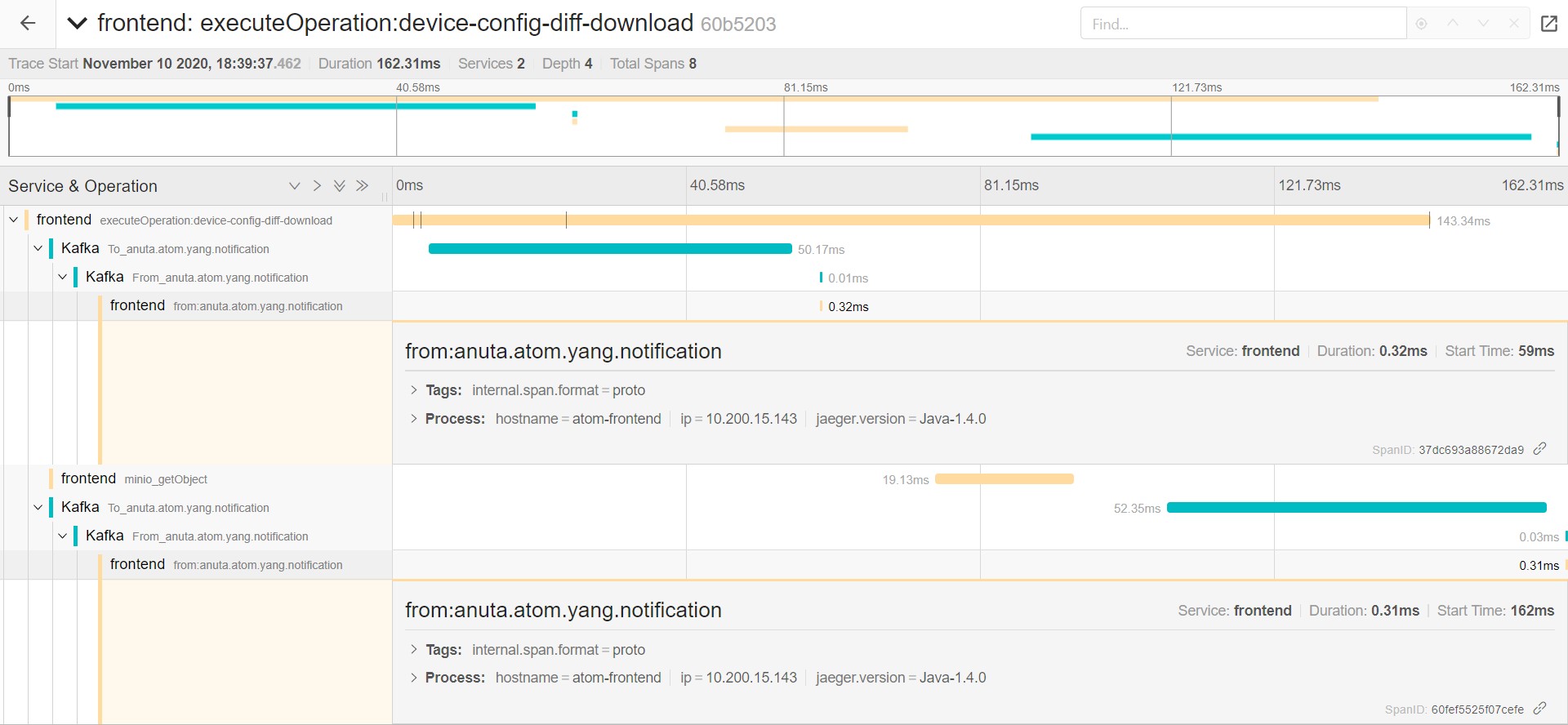

Network Workflow & Low Code Automation206

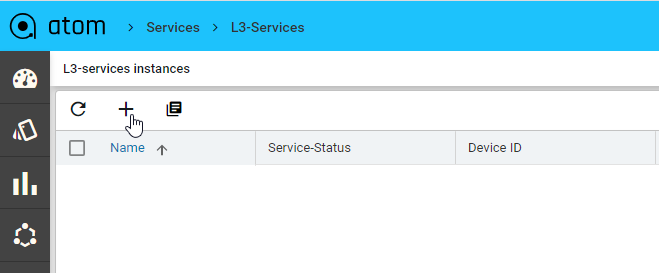

Transactional control at the Service level220

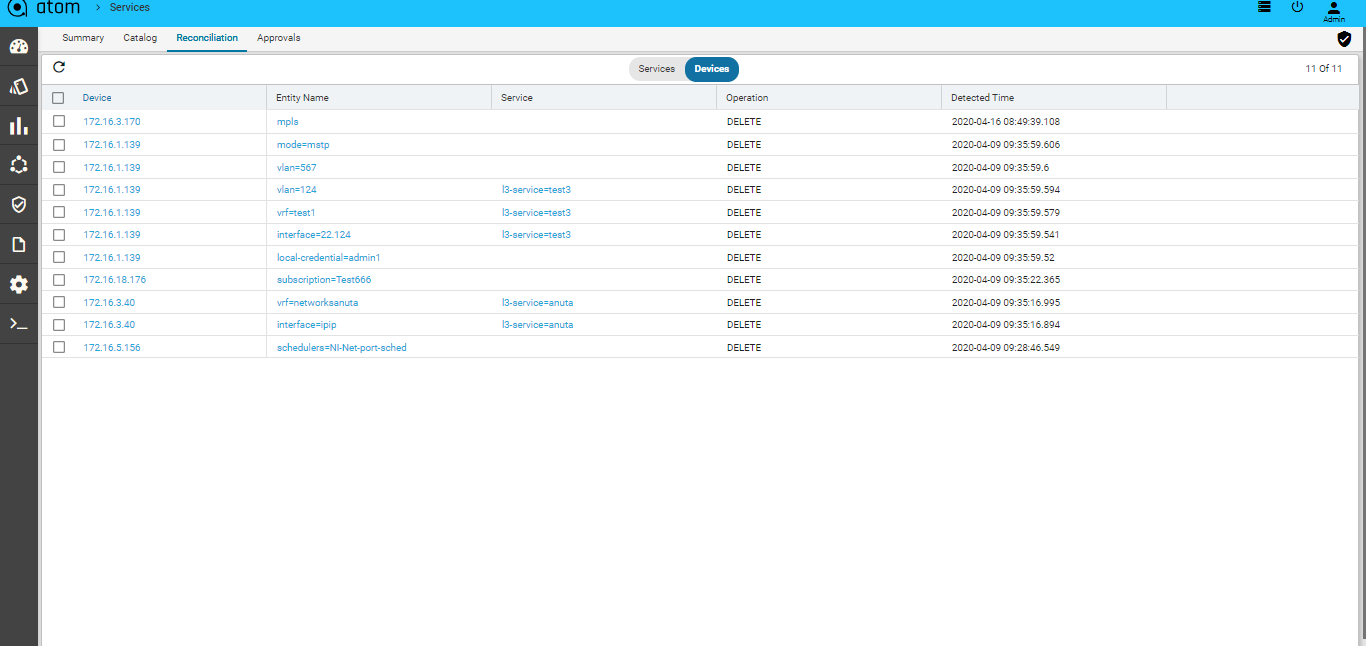

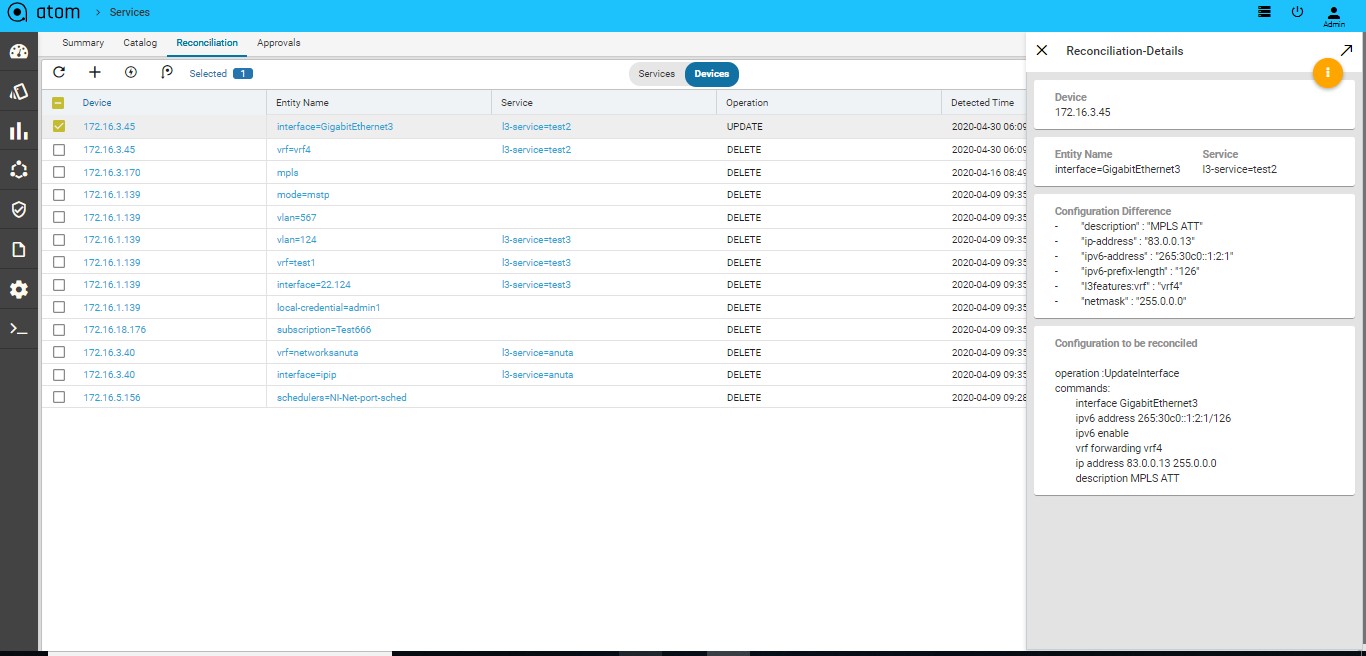

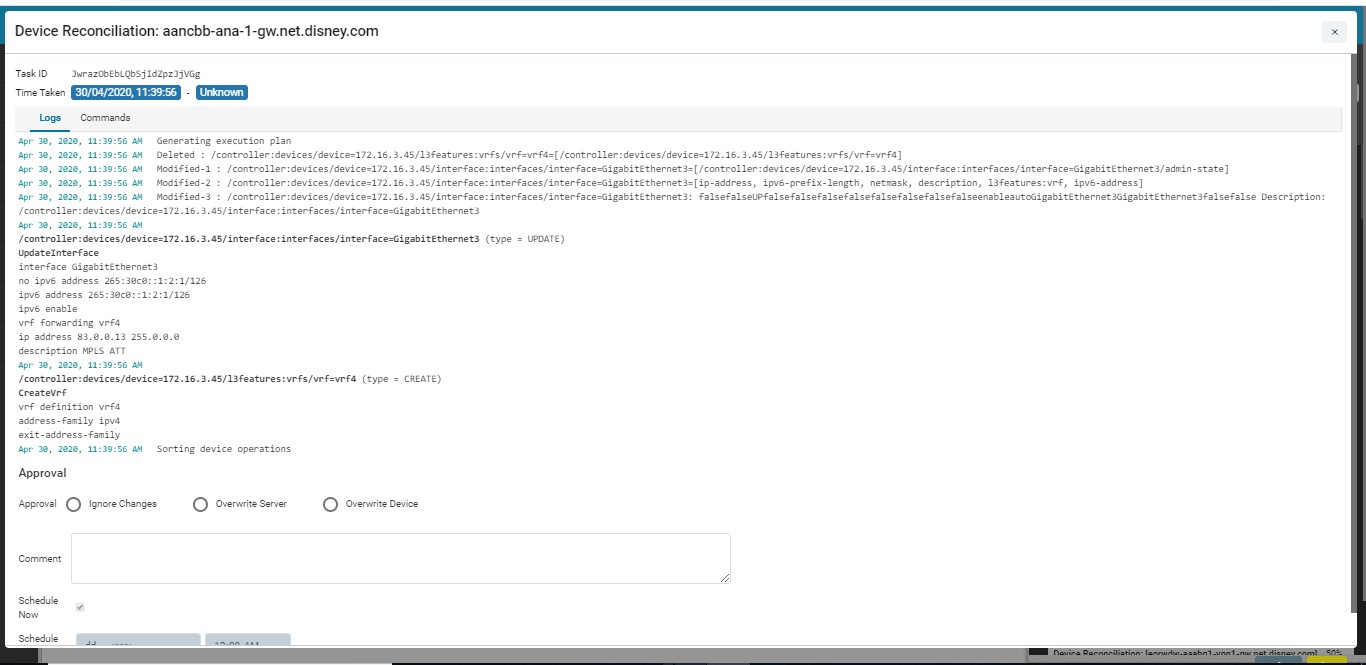

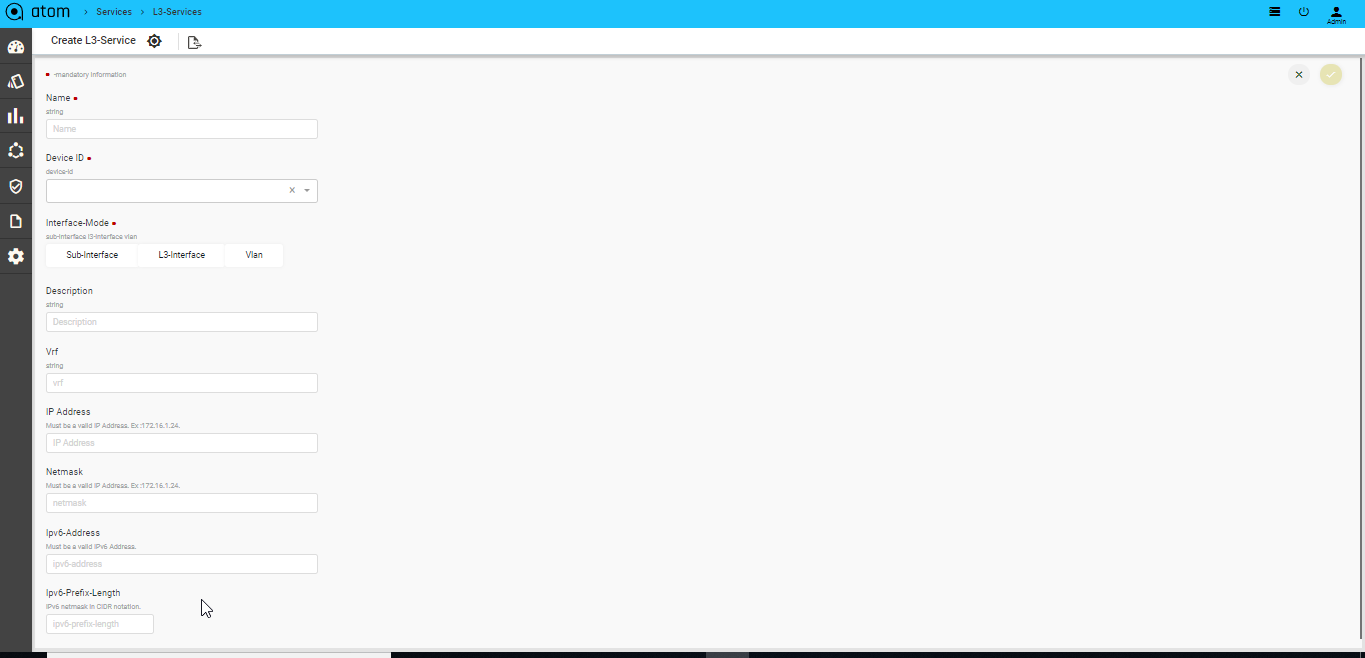

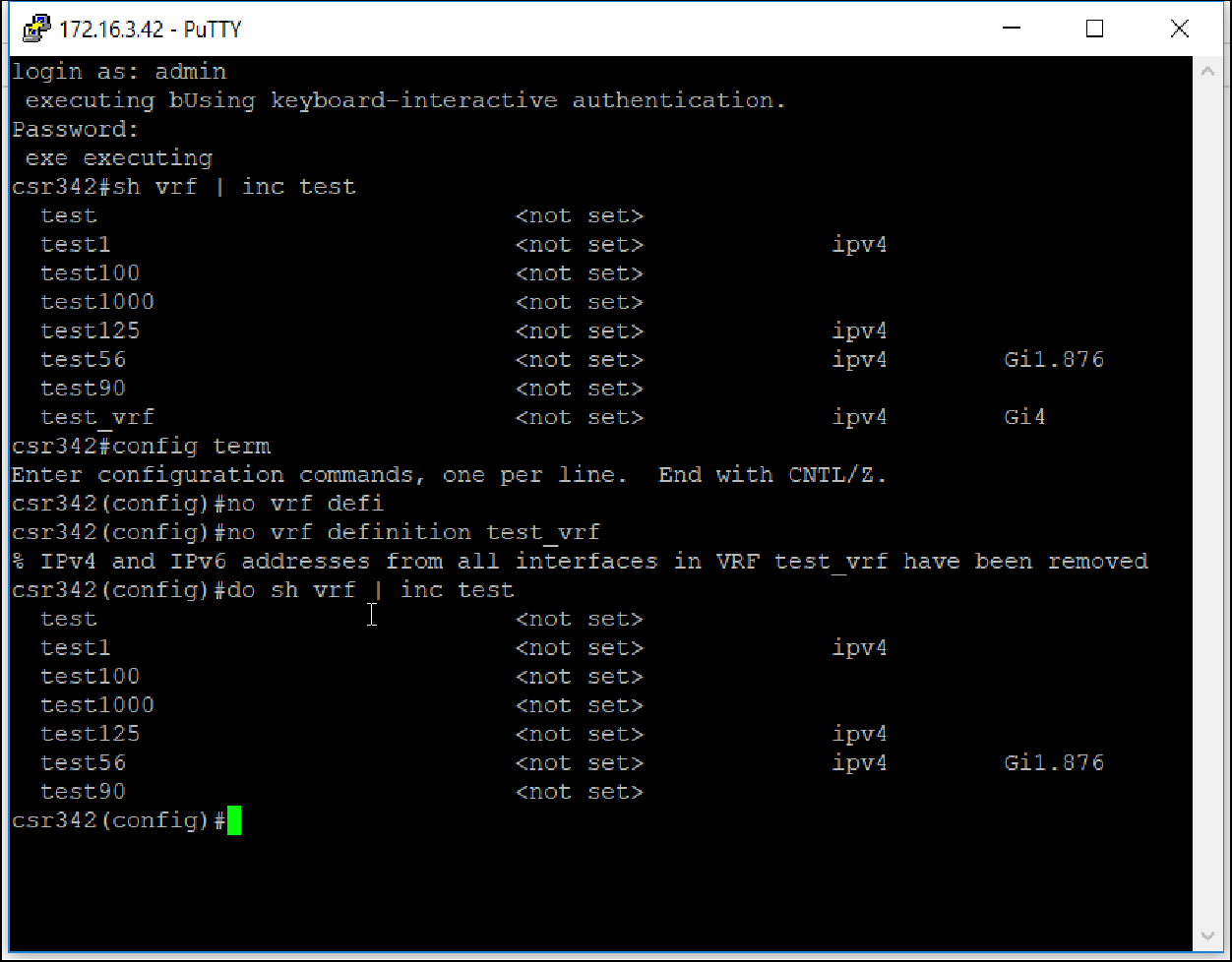

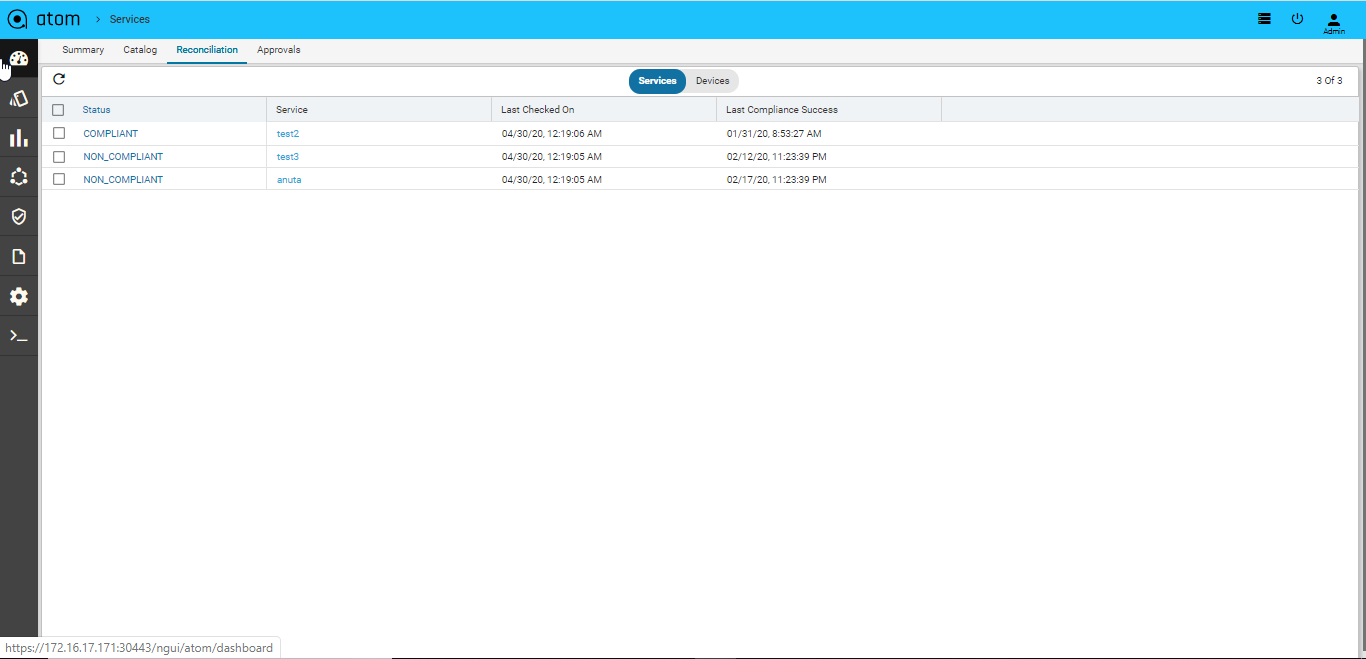

Configuration Drift (Network Services)226

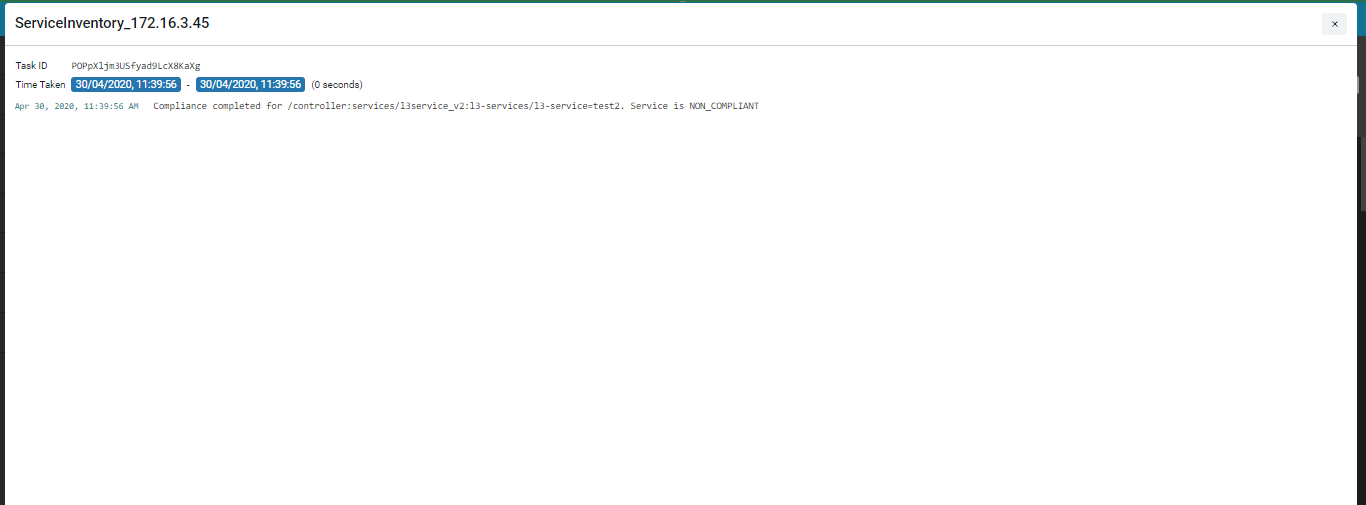

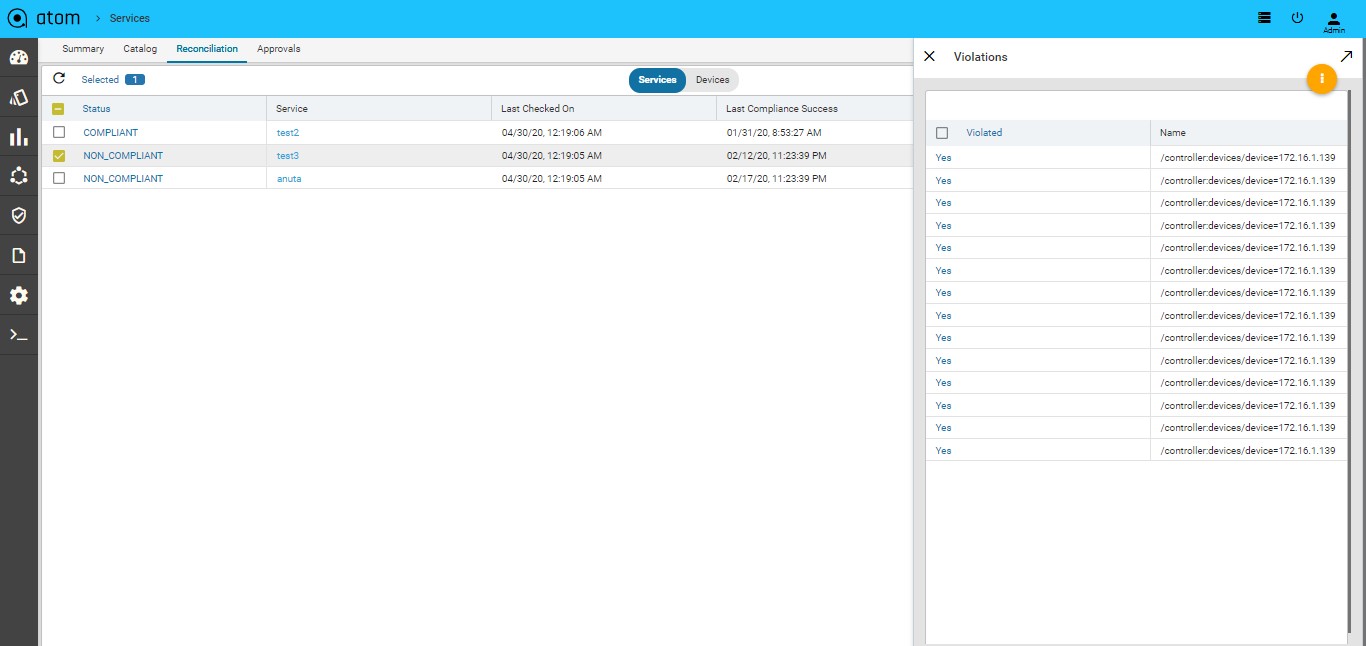

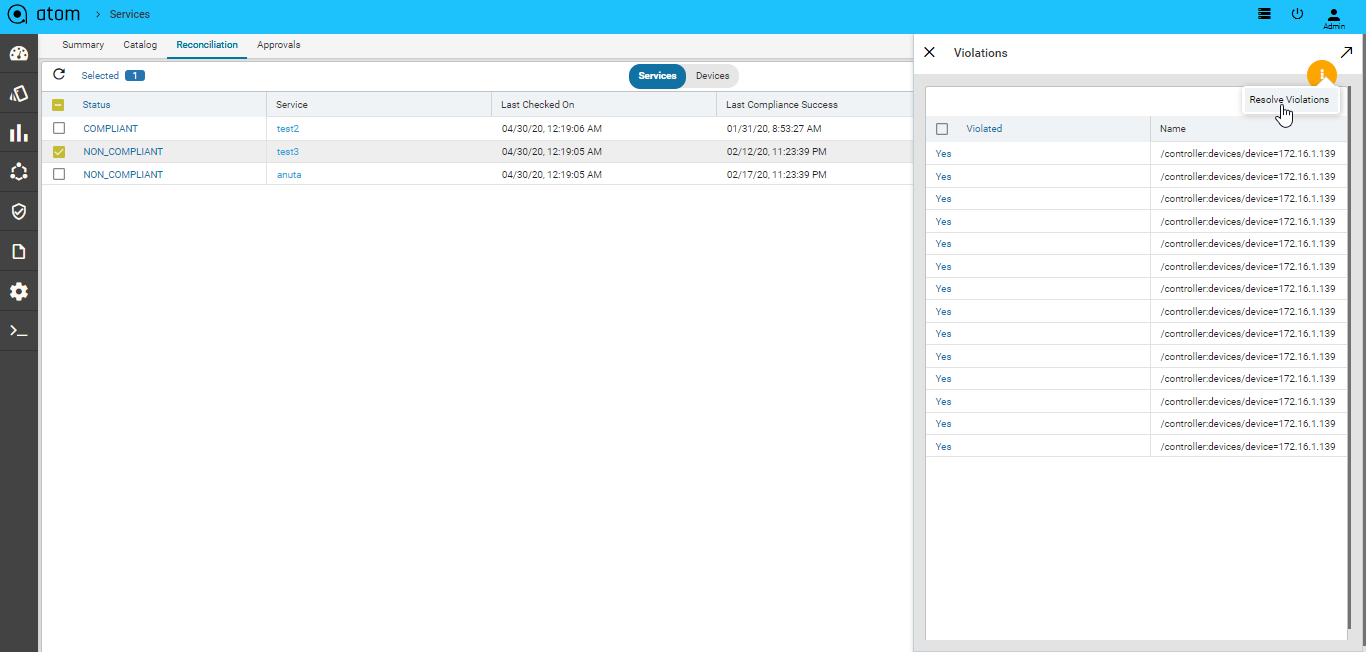

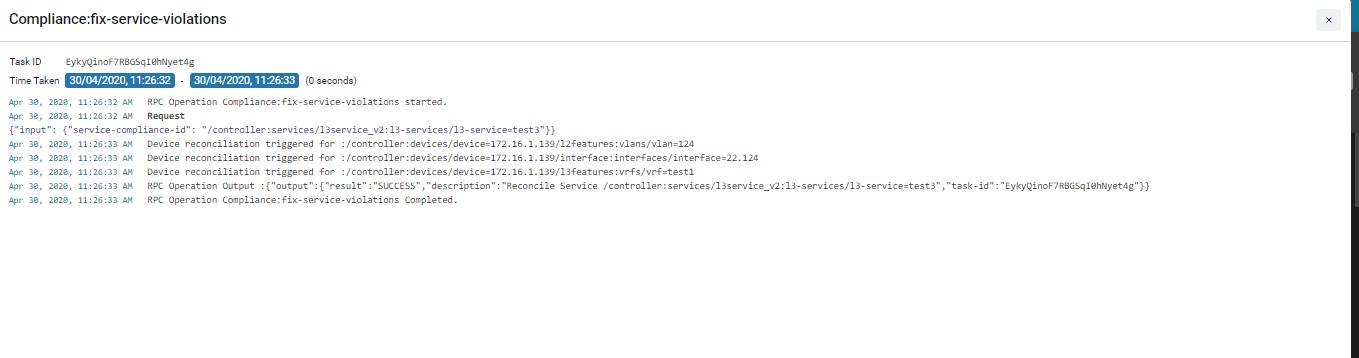

Resolving Service Violations231

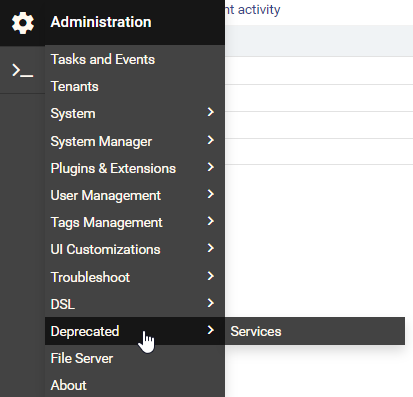

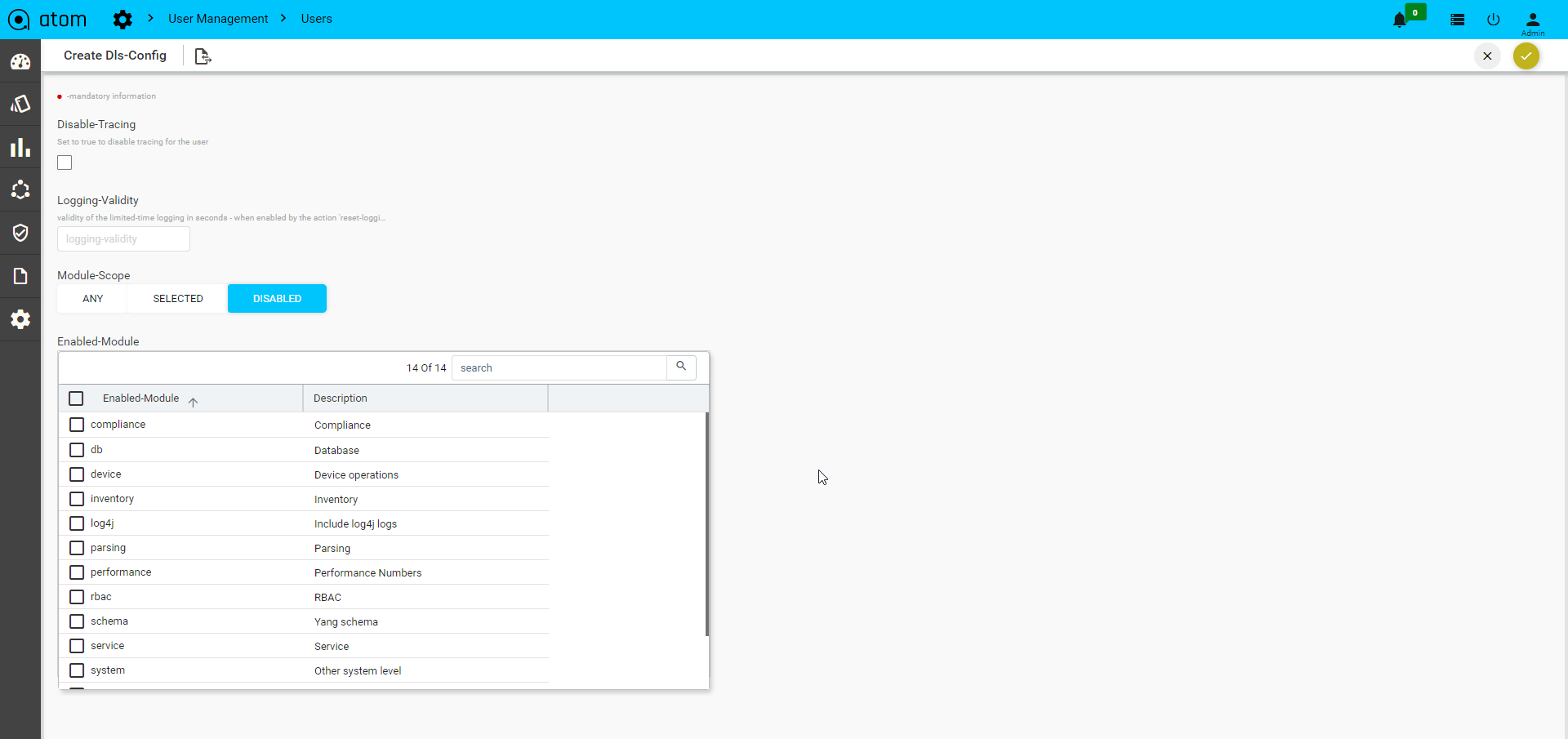

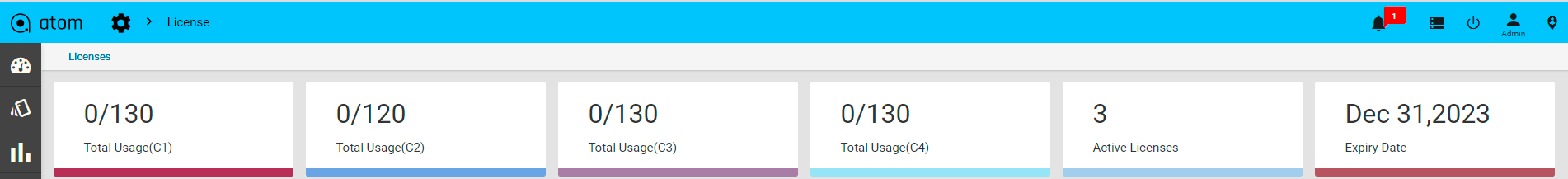

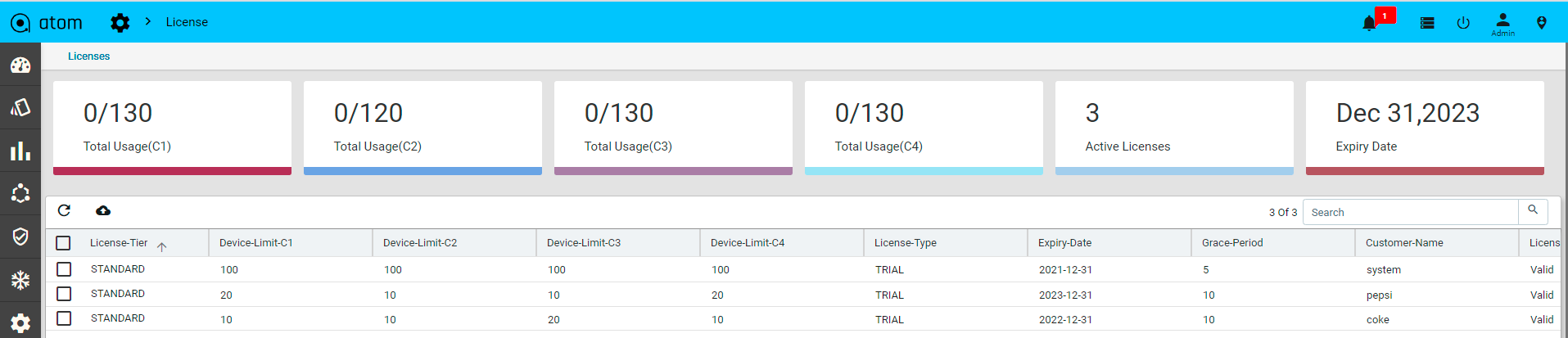

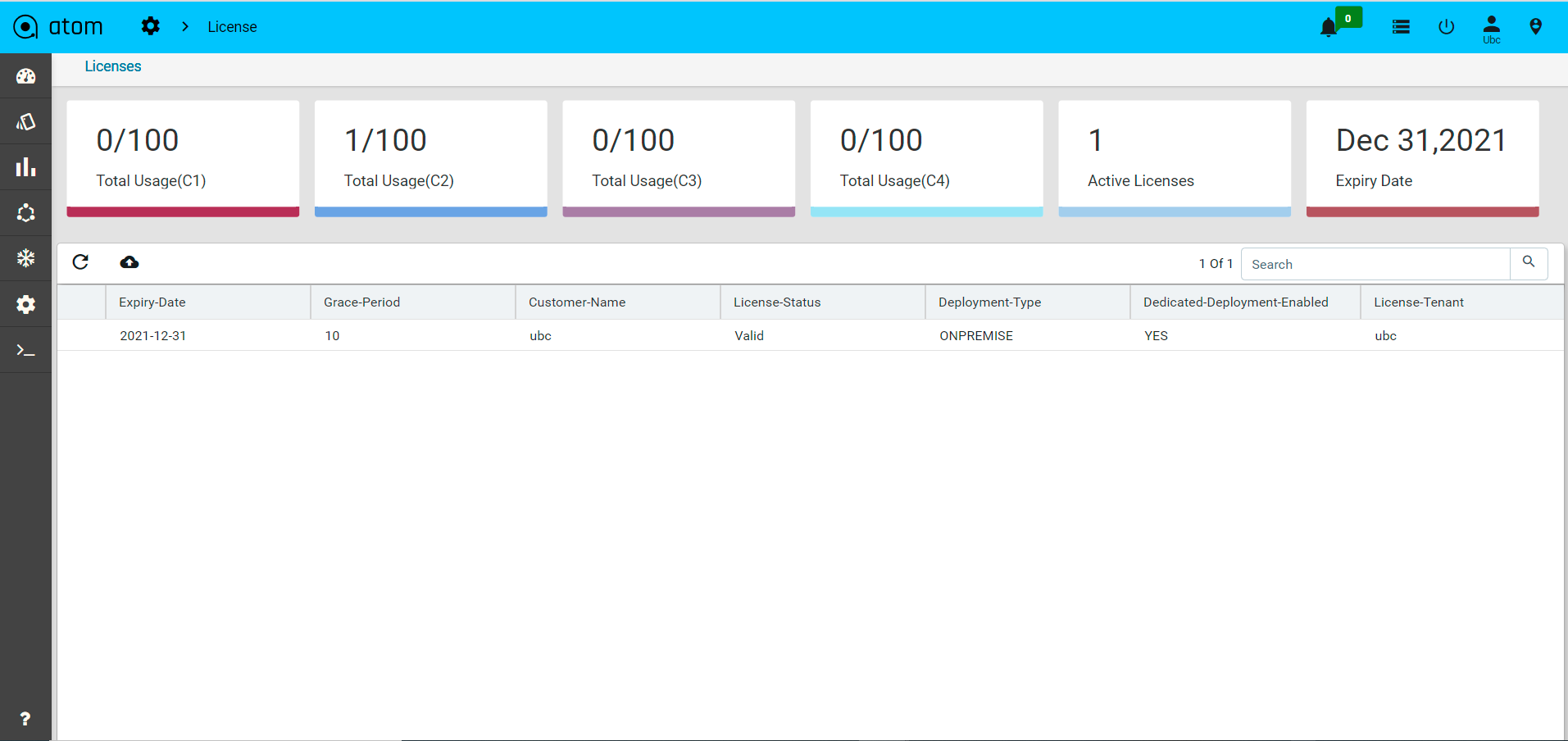

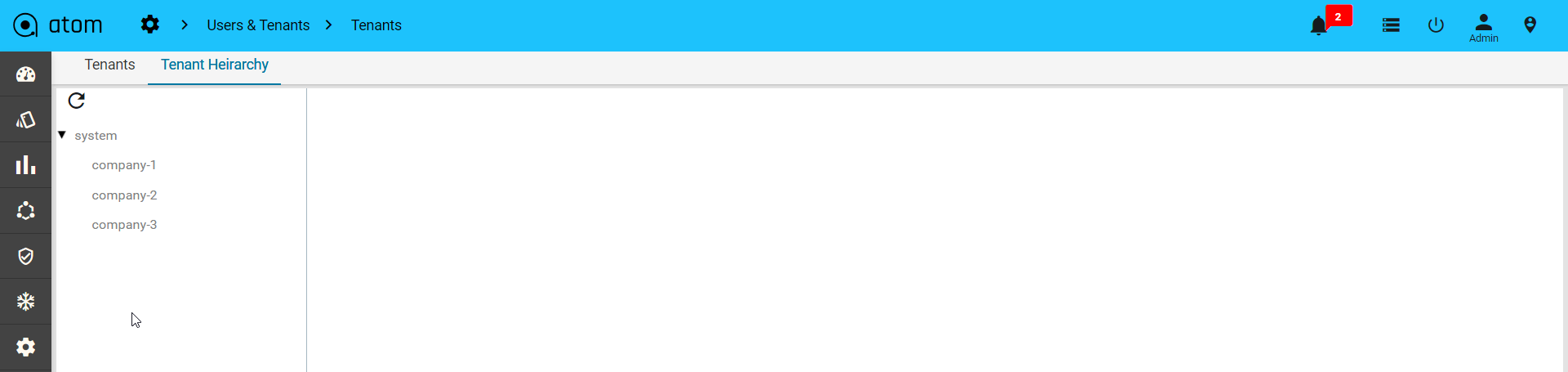

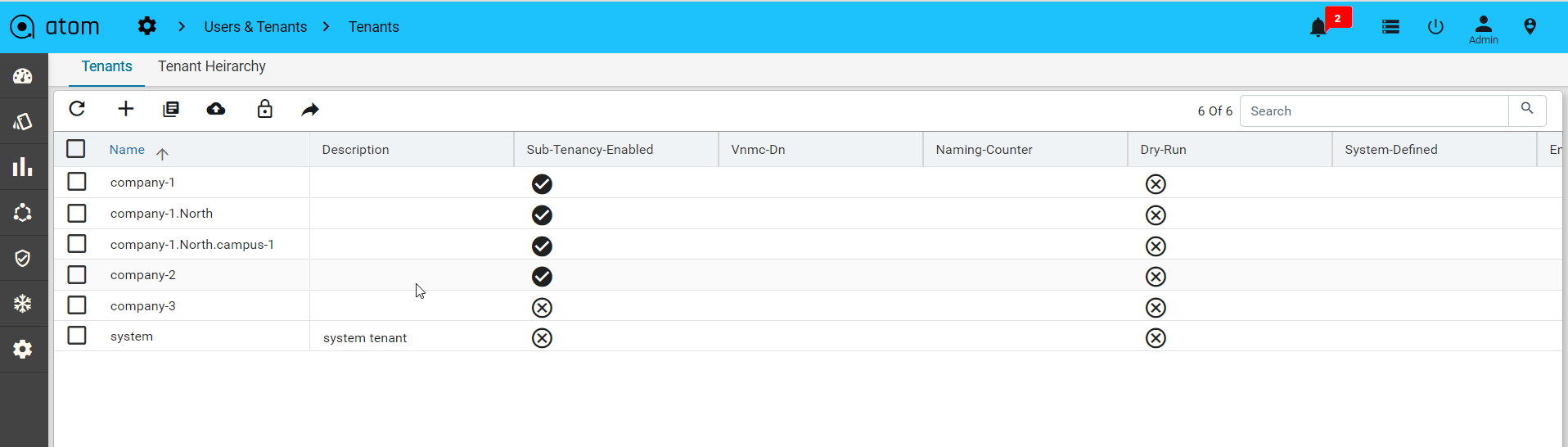

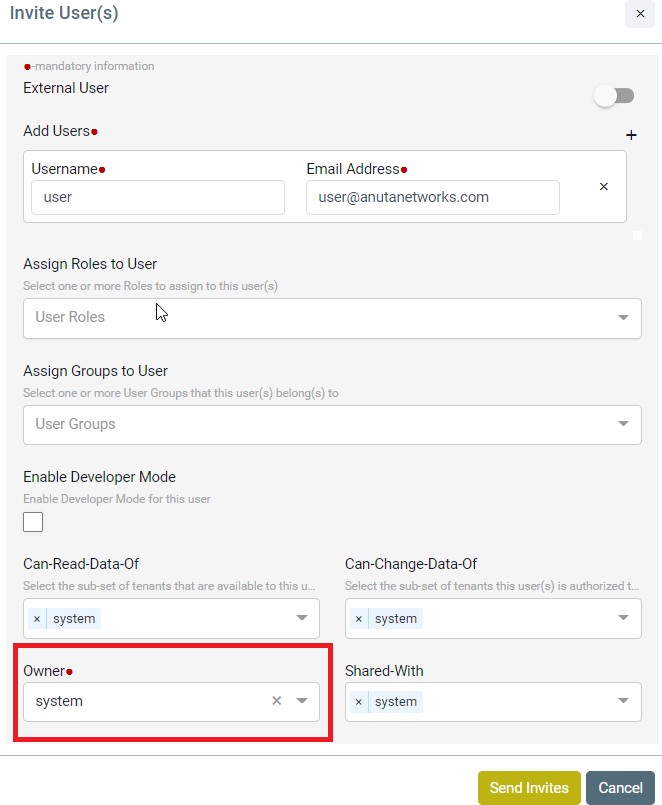

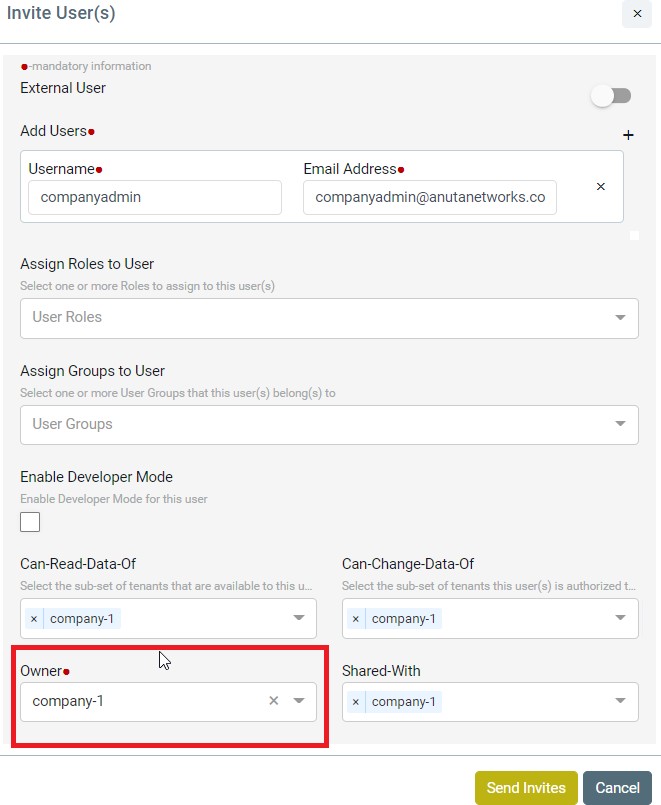

ATOM in Multi-Tenant or Shared Mode242

Primary container load limit248

Concept Of Visibility And Usability275

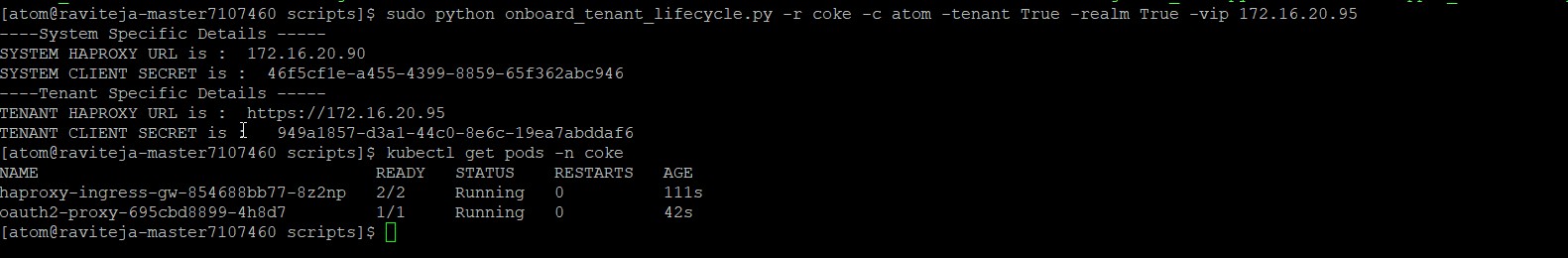

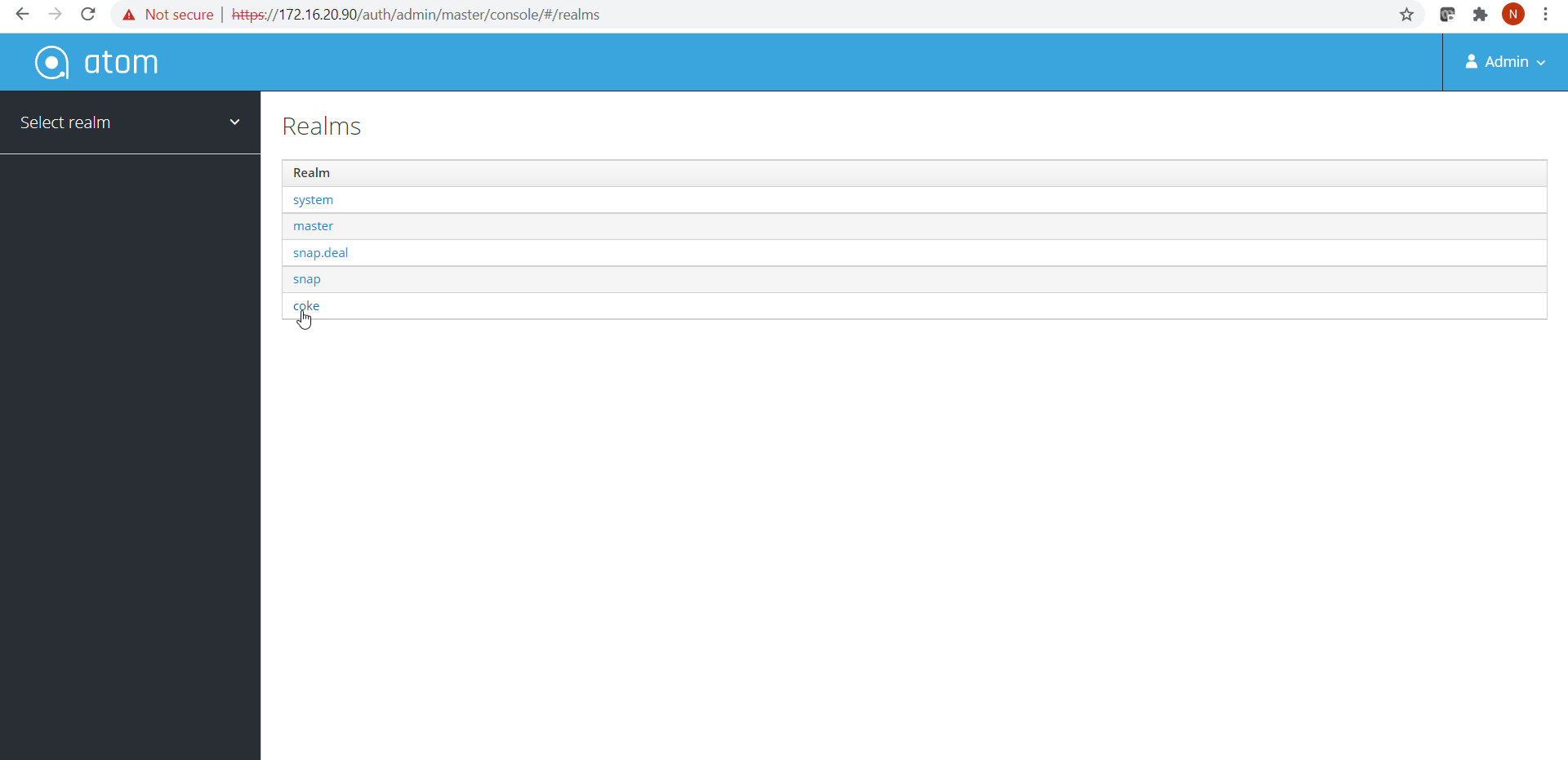

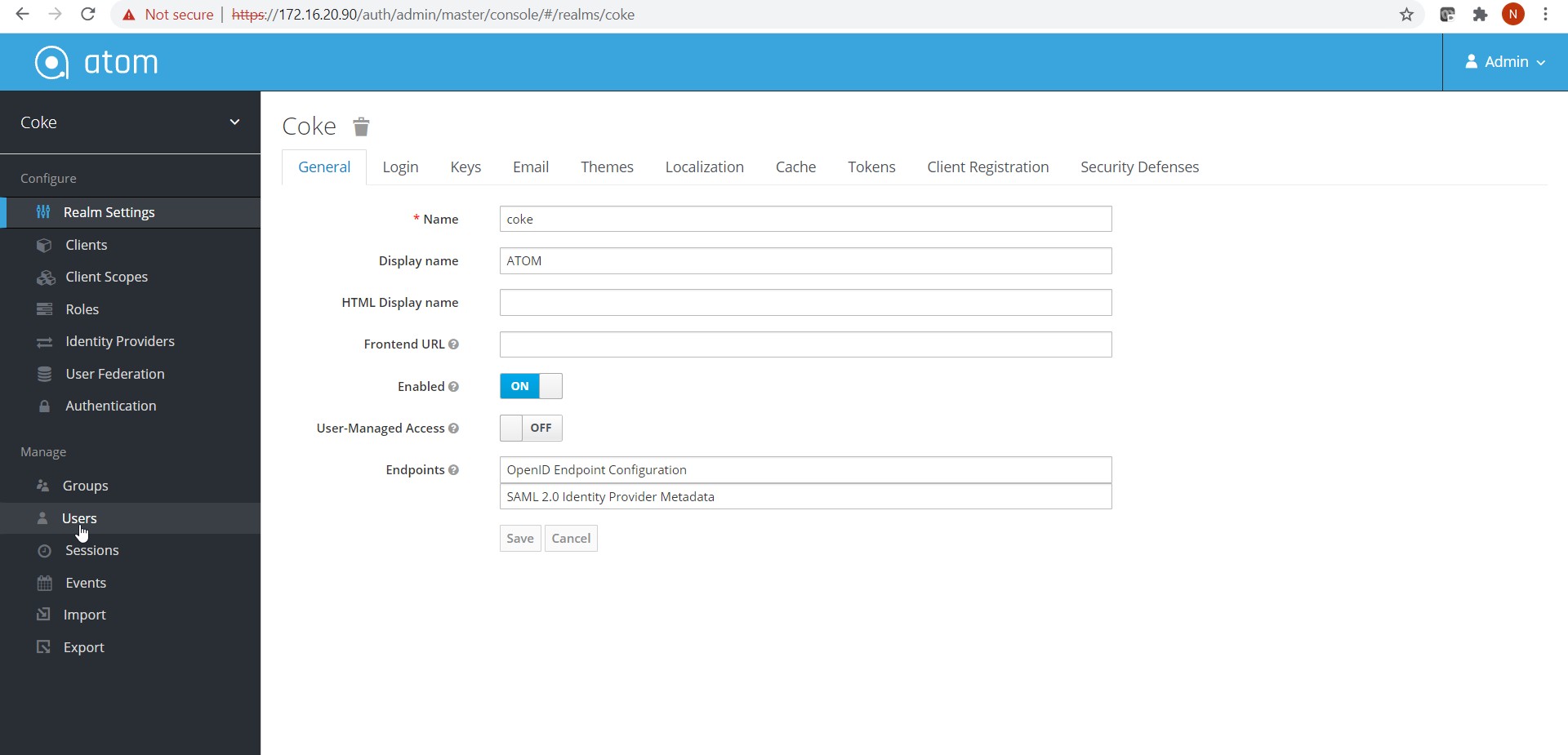

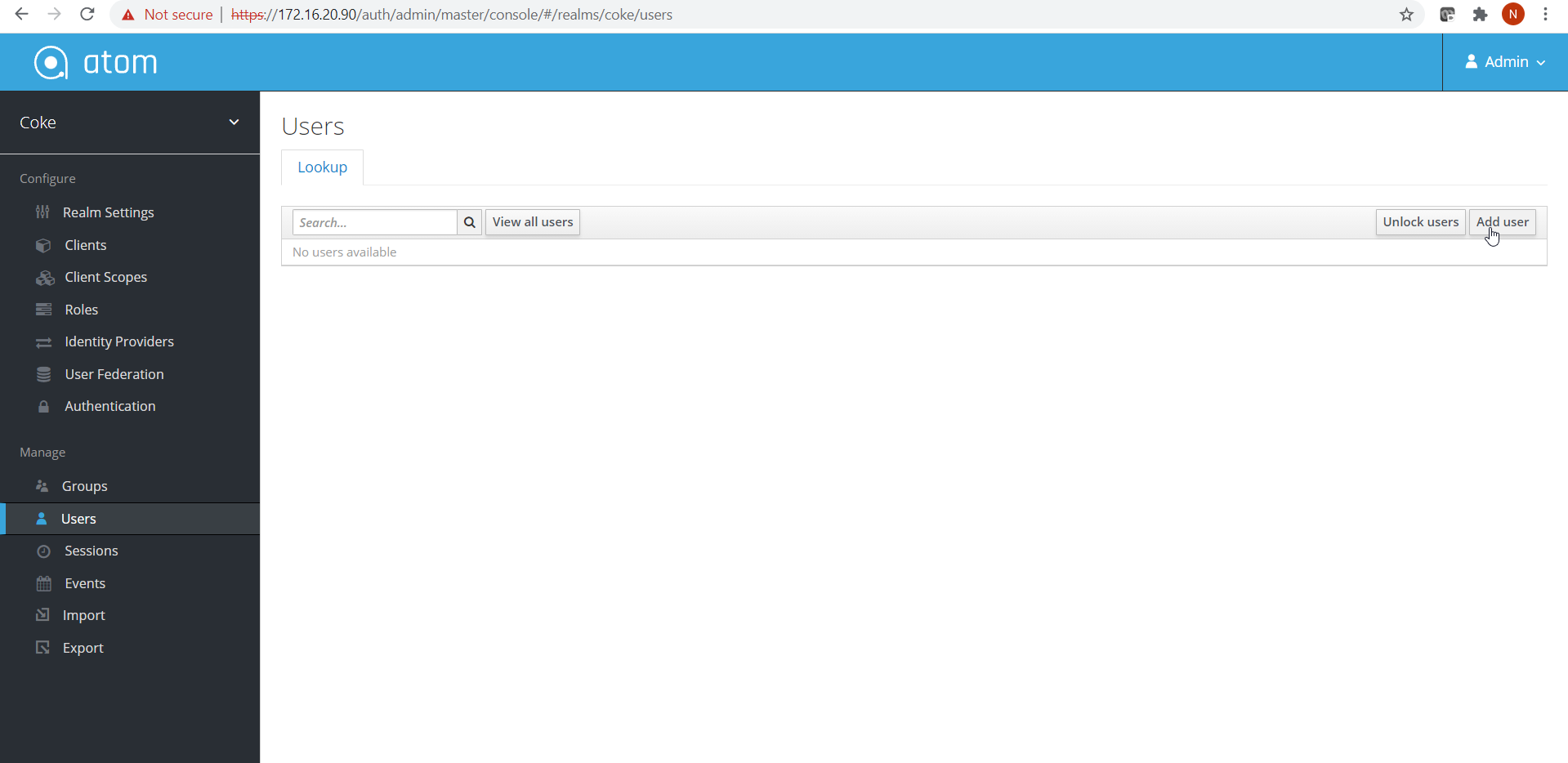

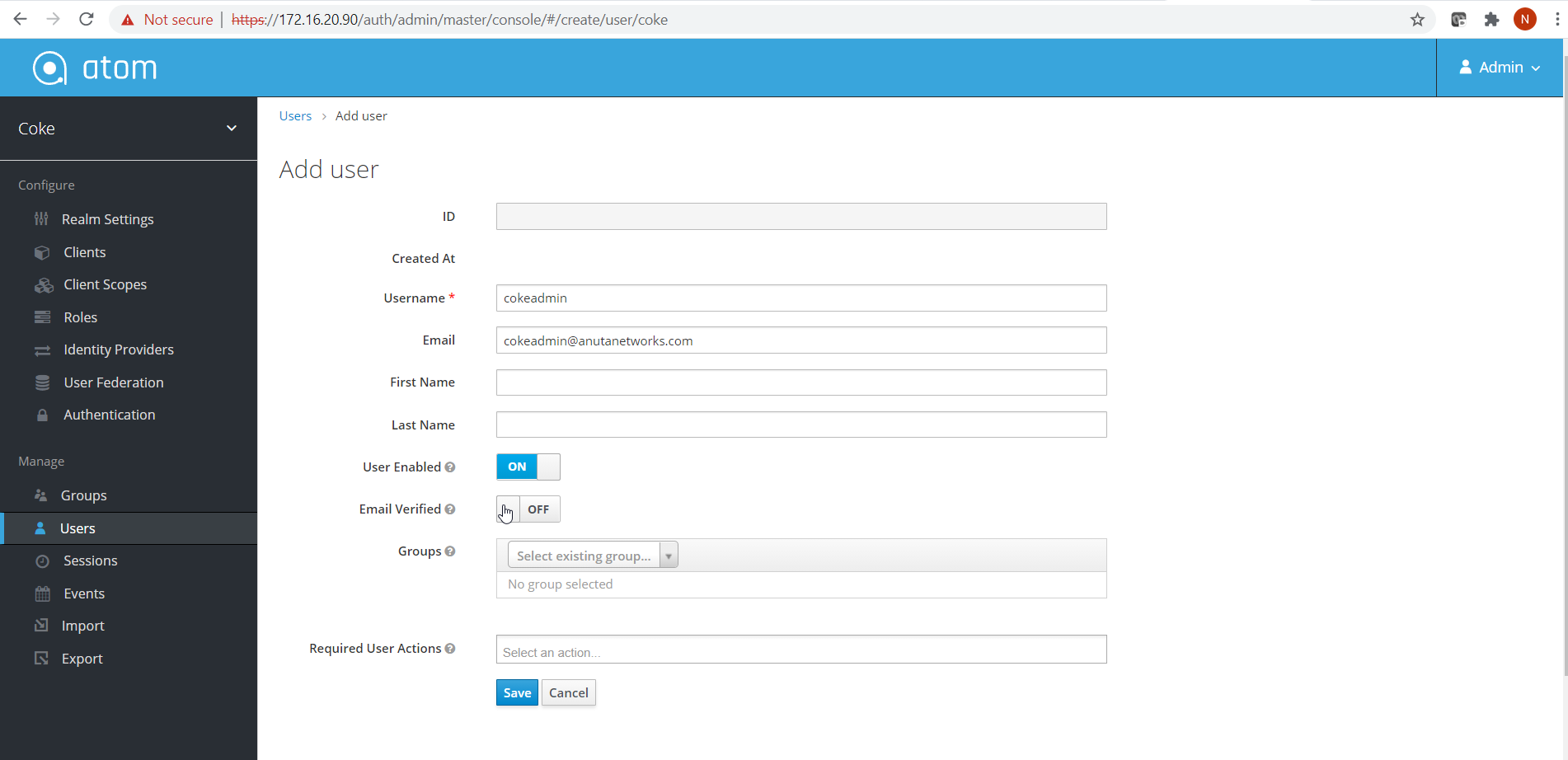

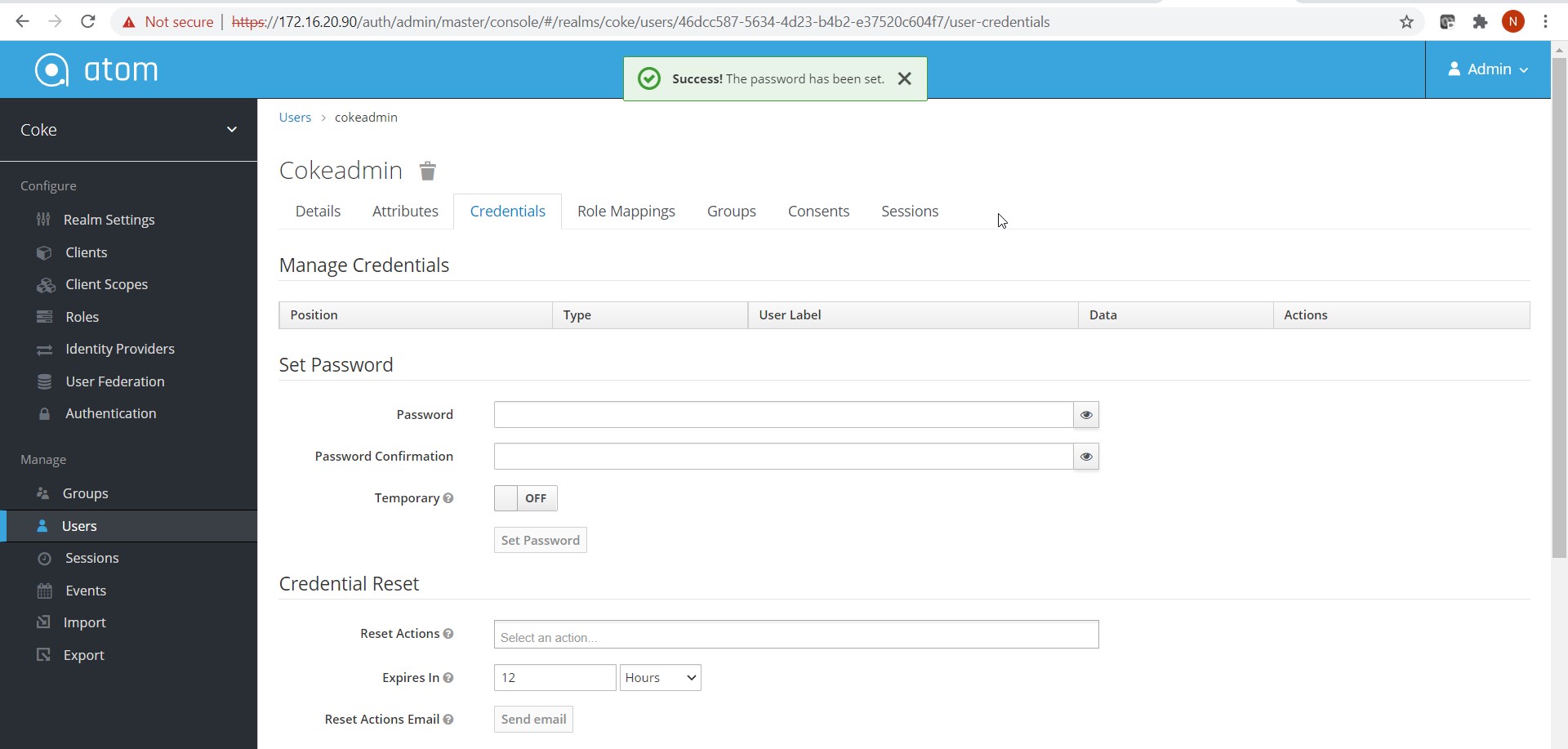

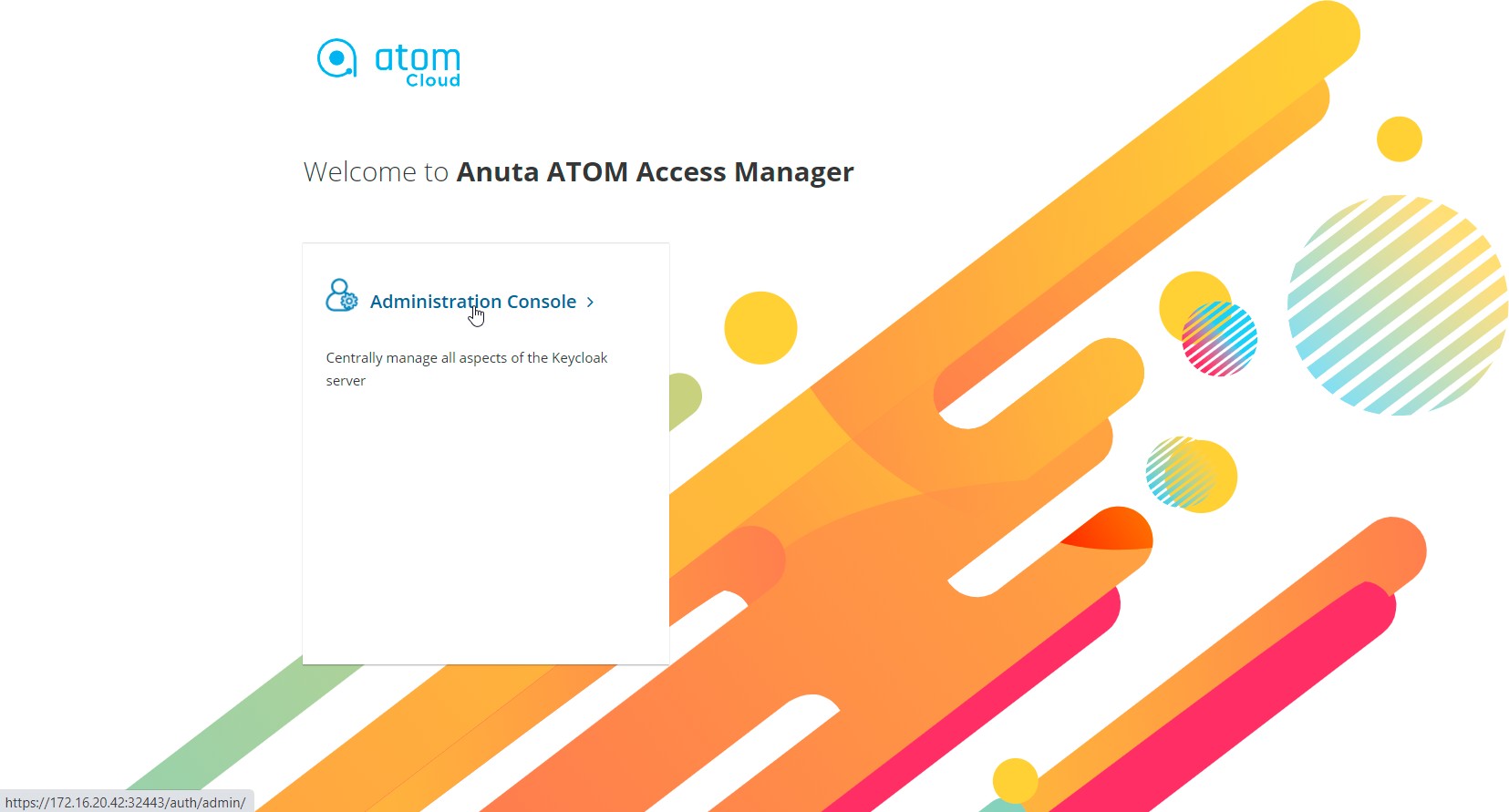

Onboarding Tenants through Keycloak scripts279

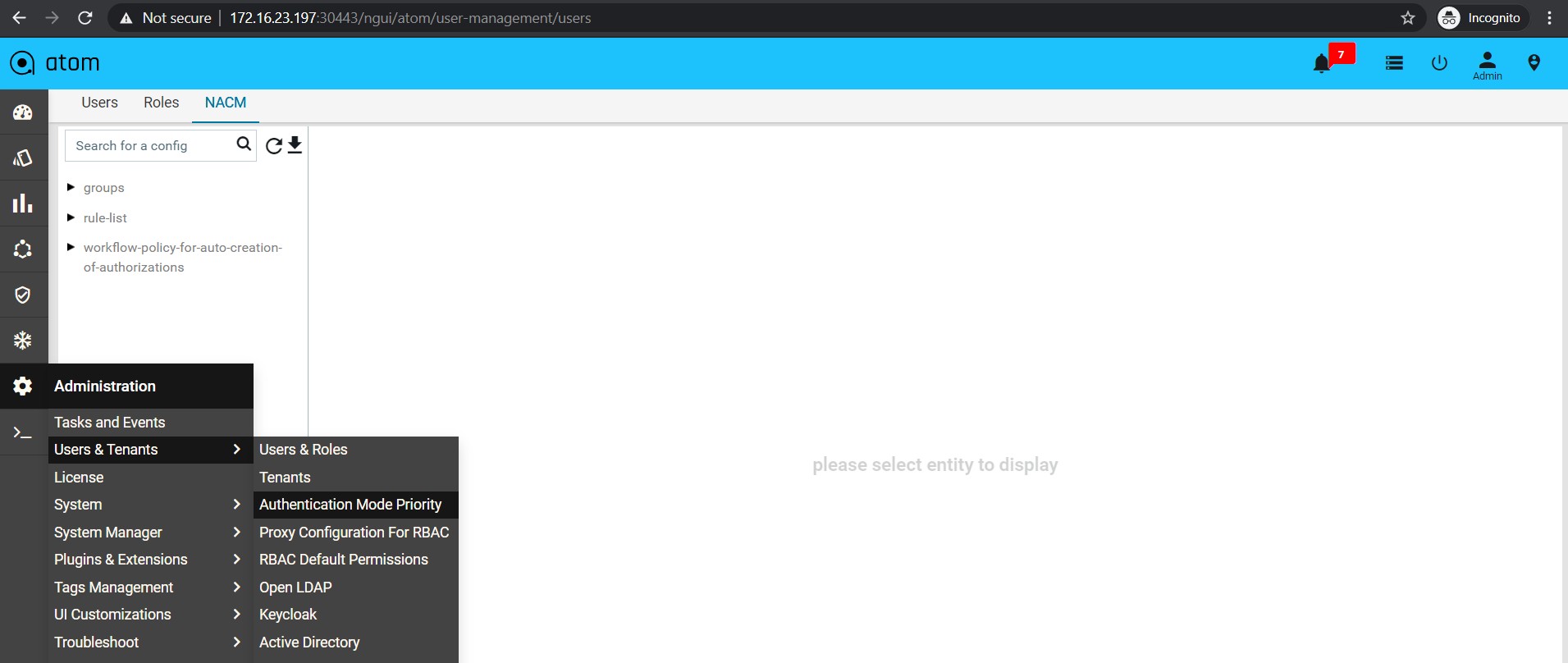

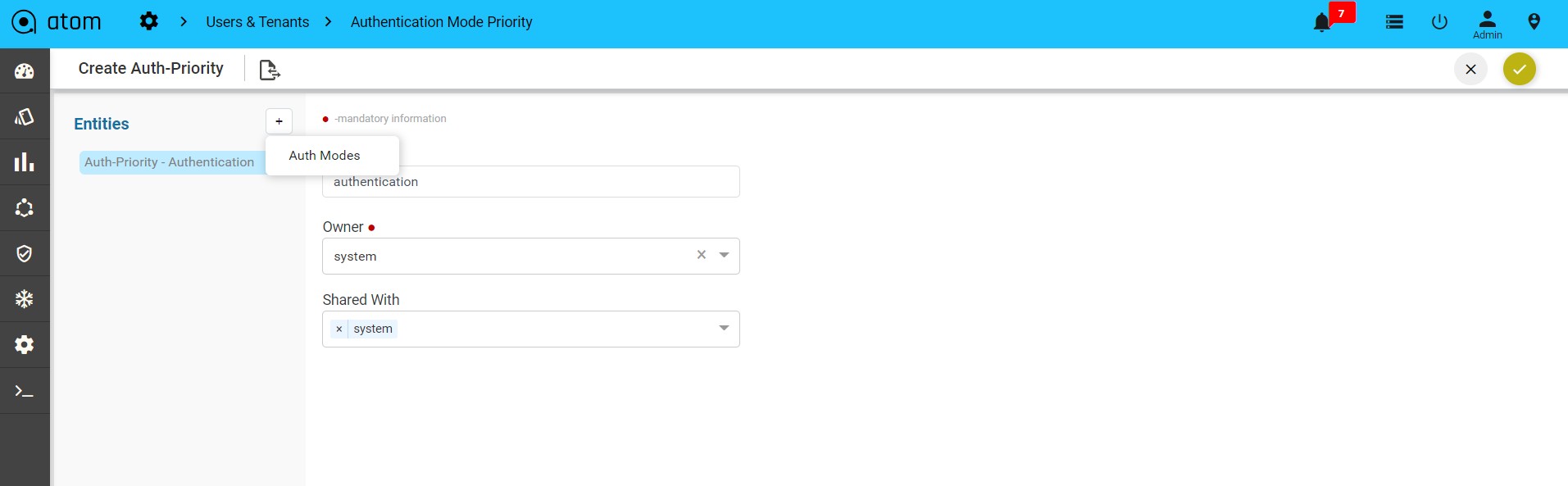

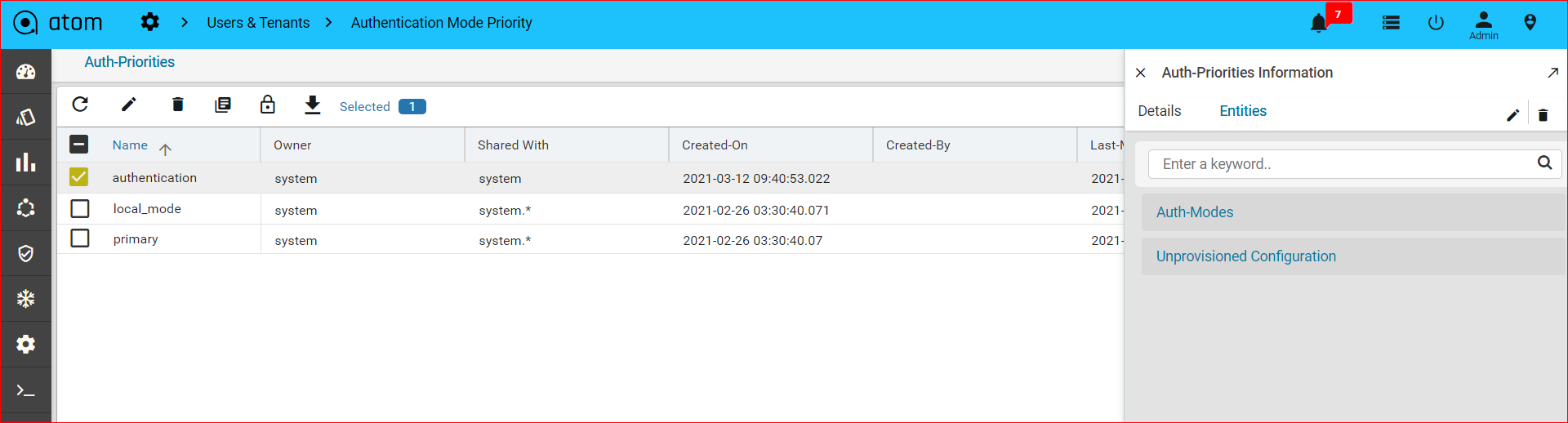

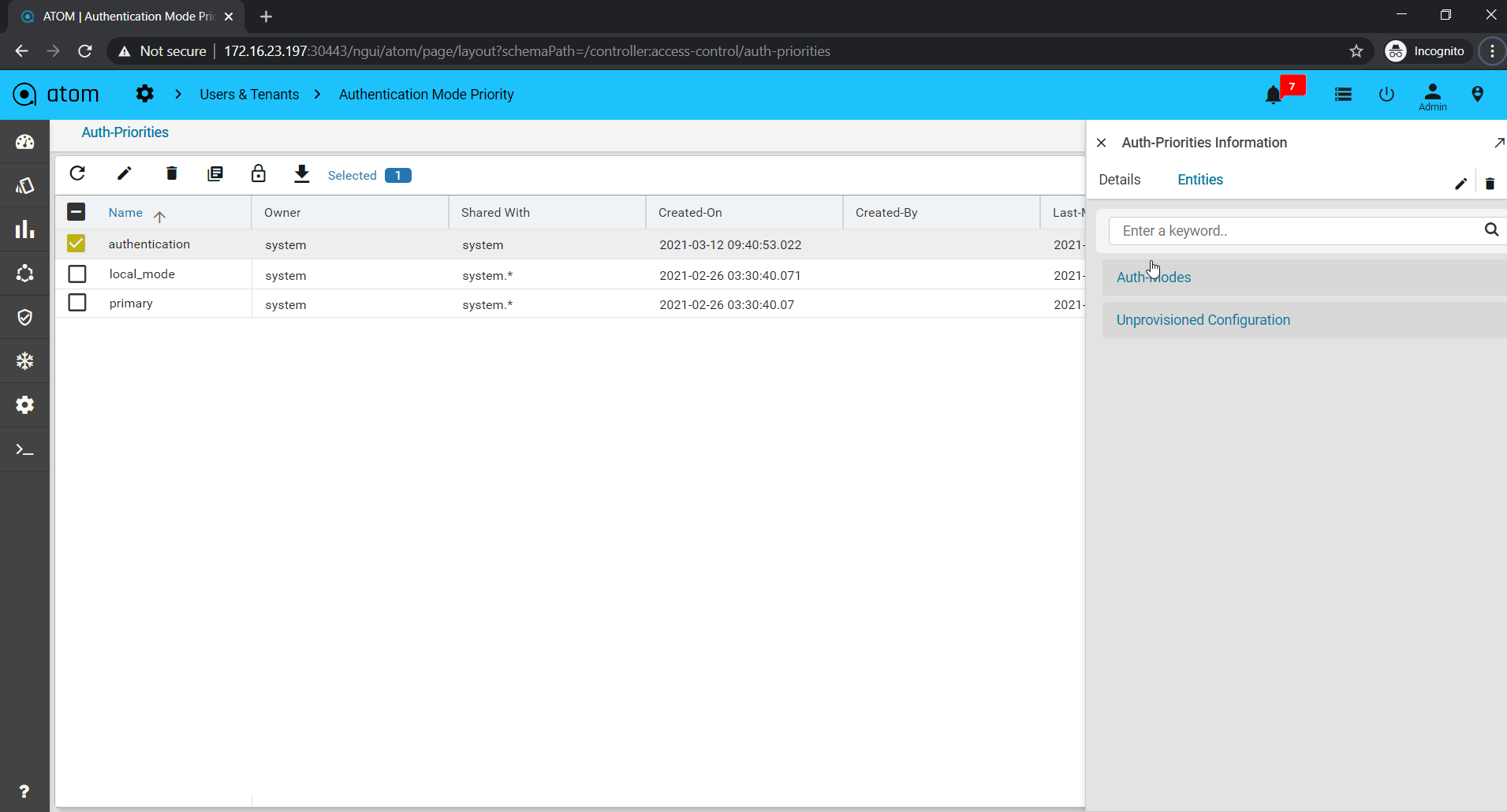

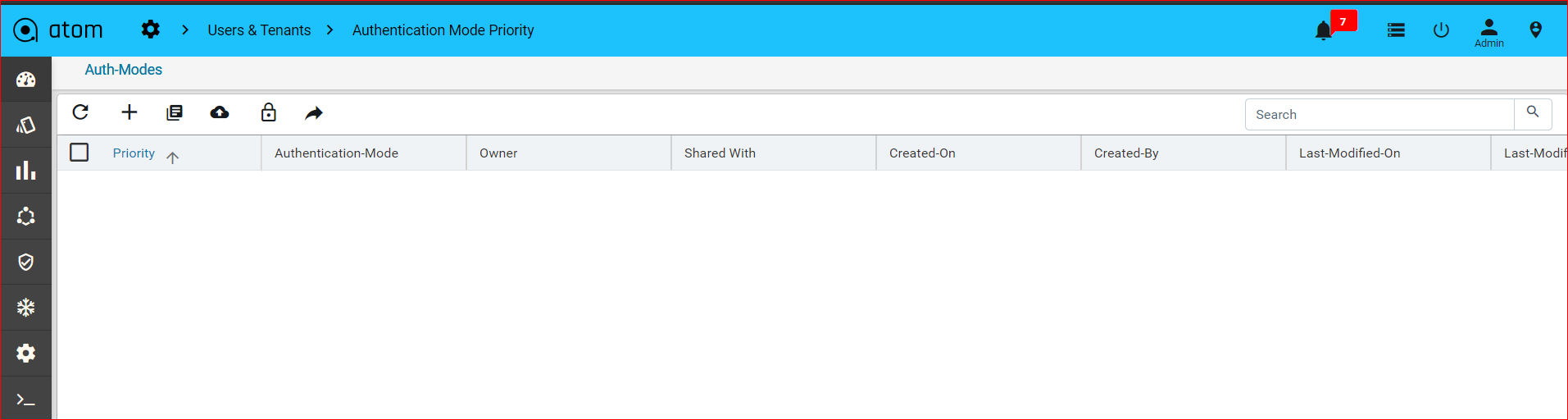

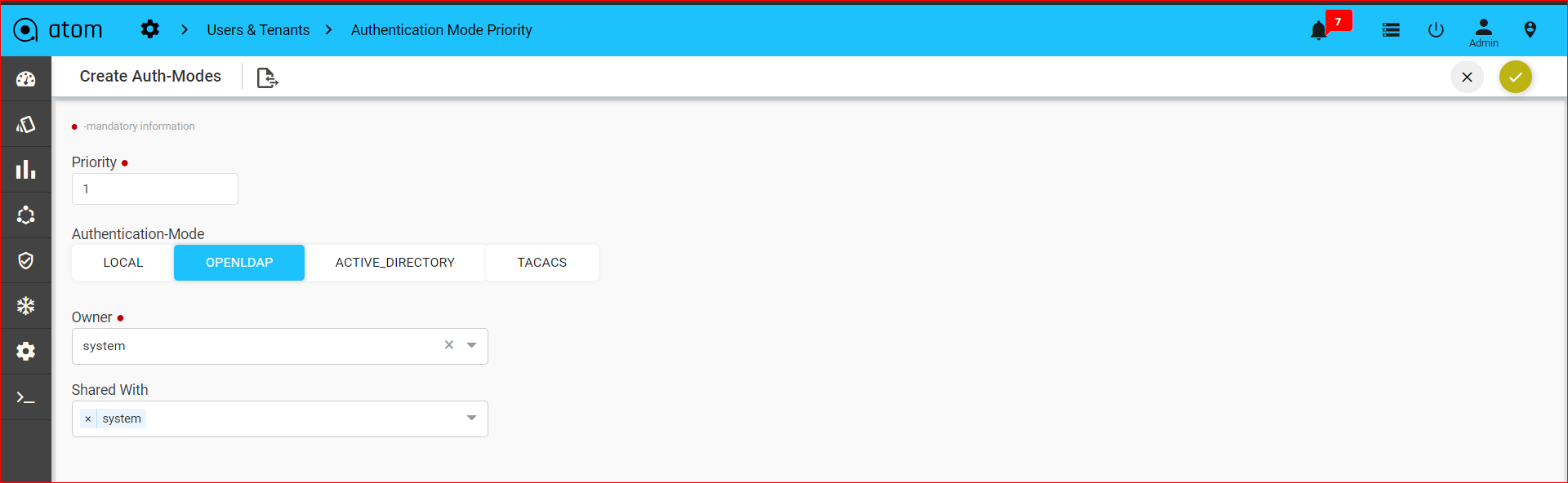

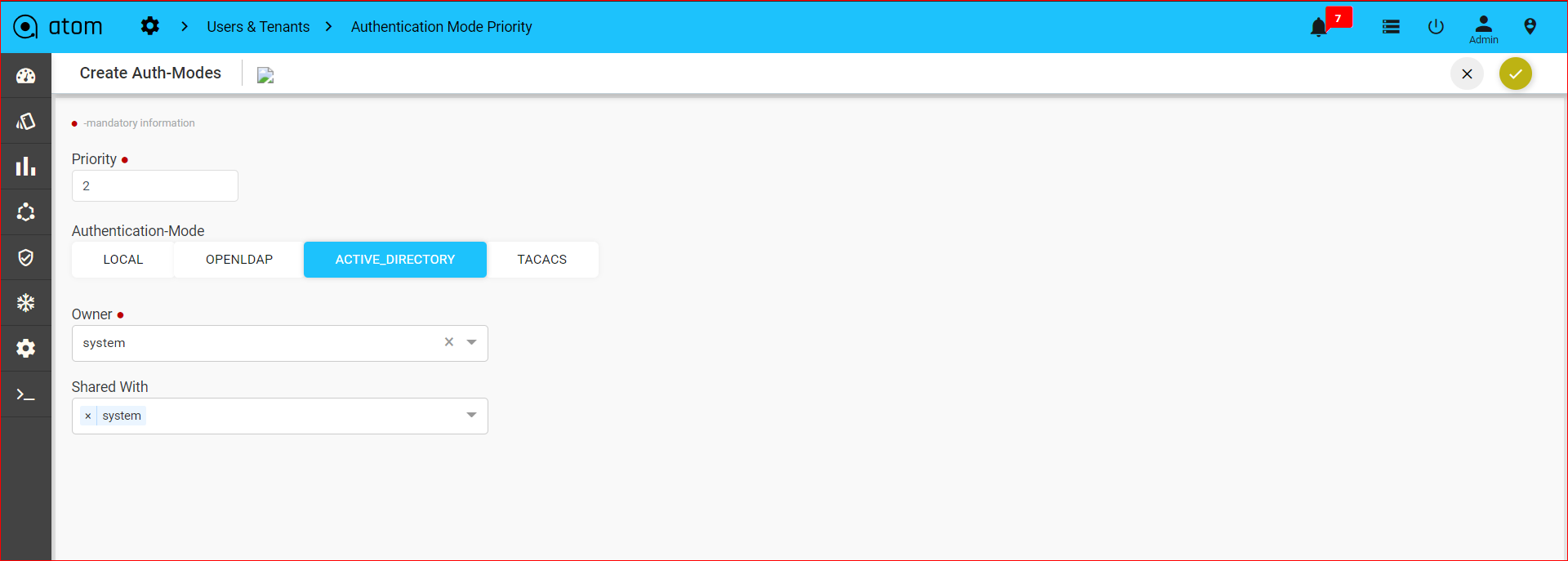

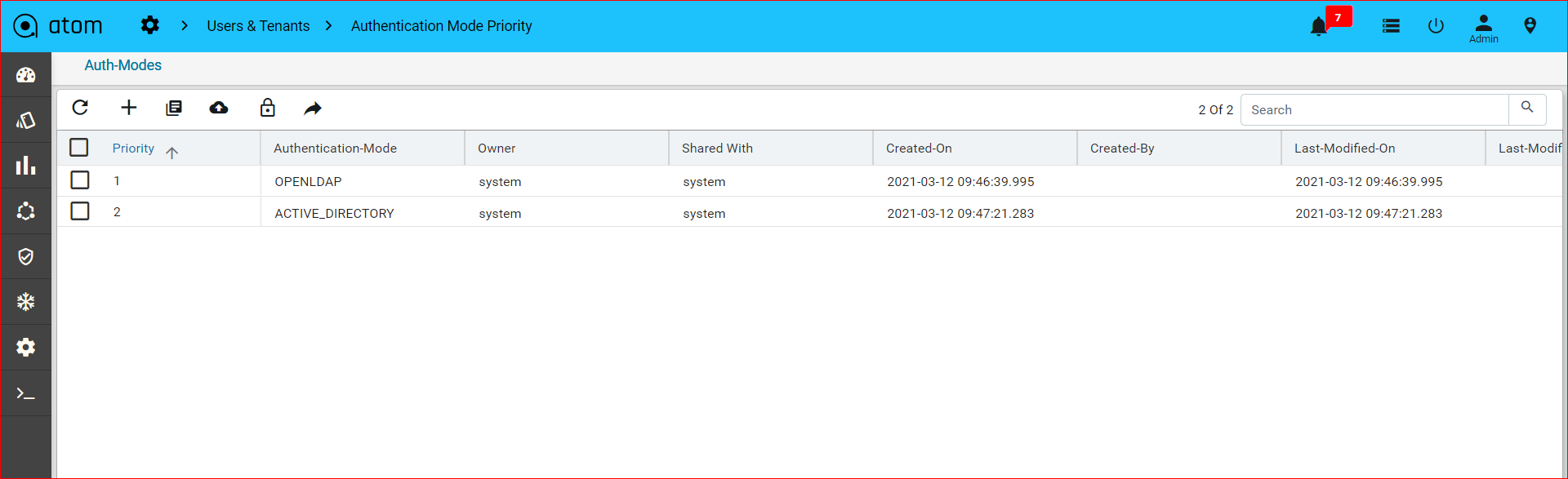

Creating Authentication Mode Priority288

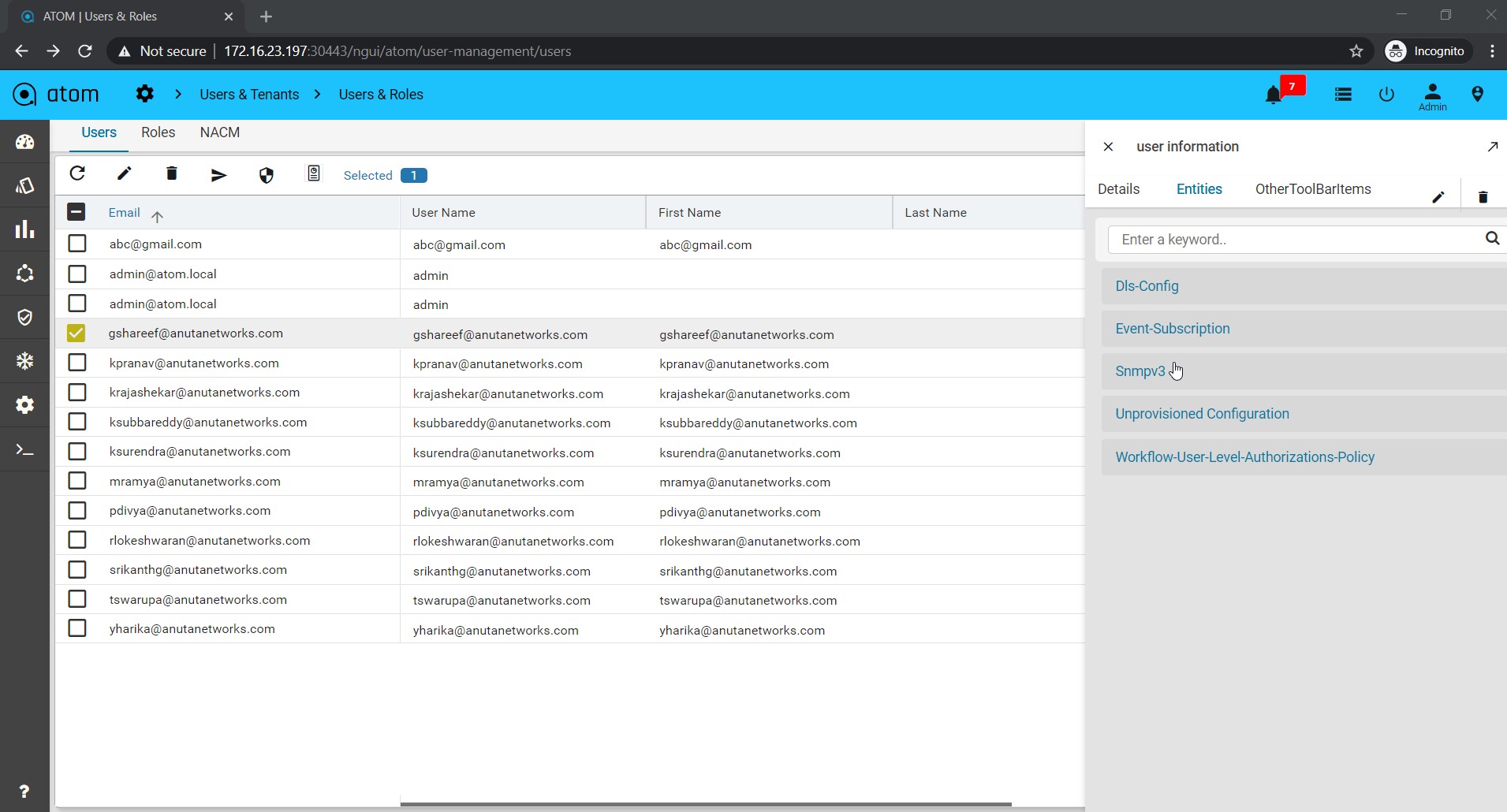

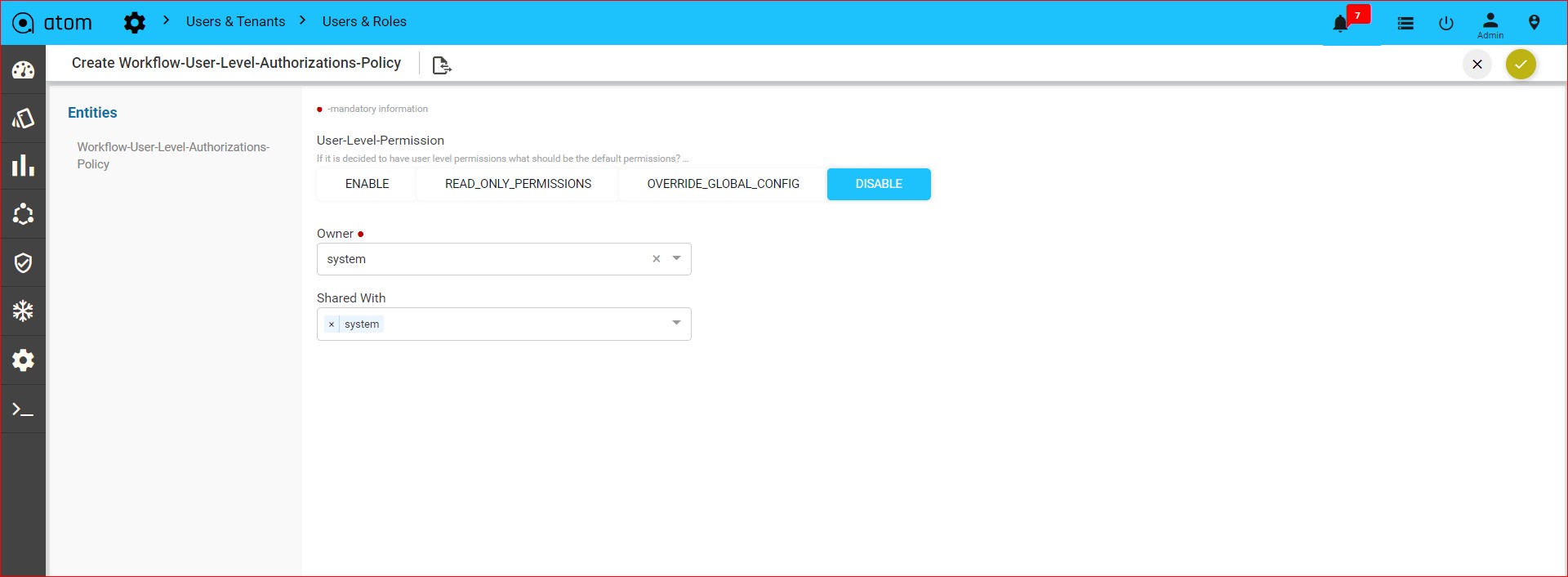

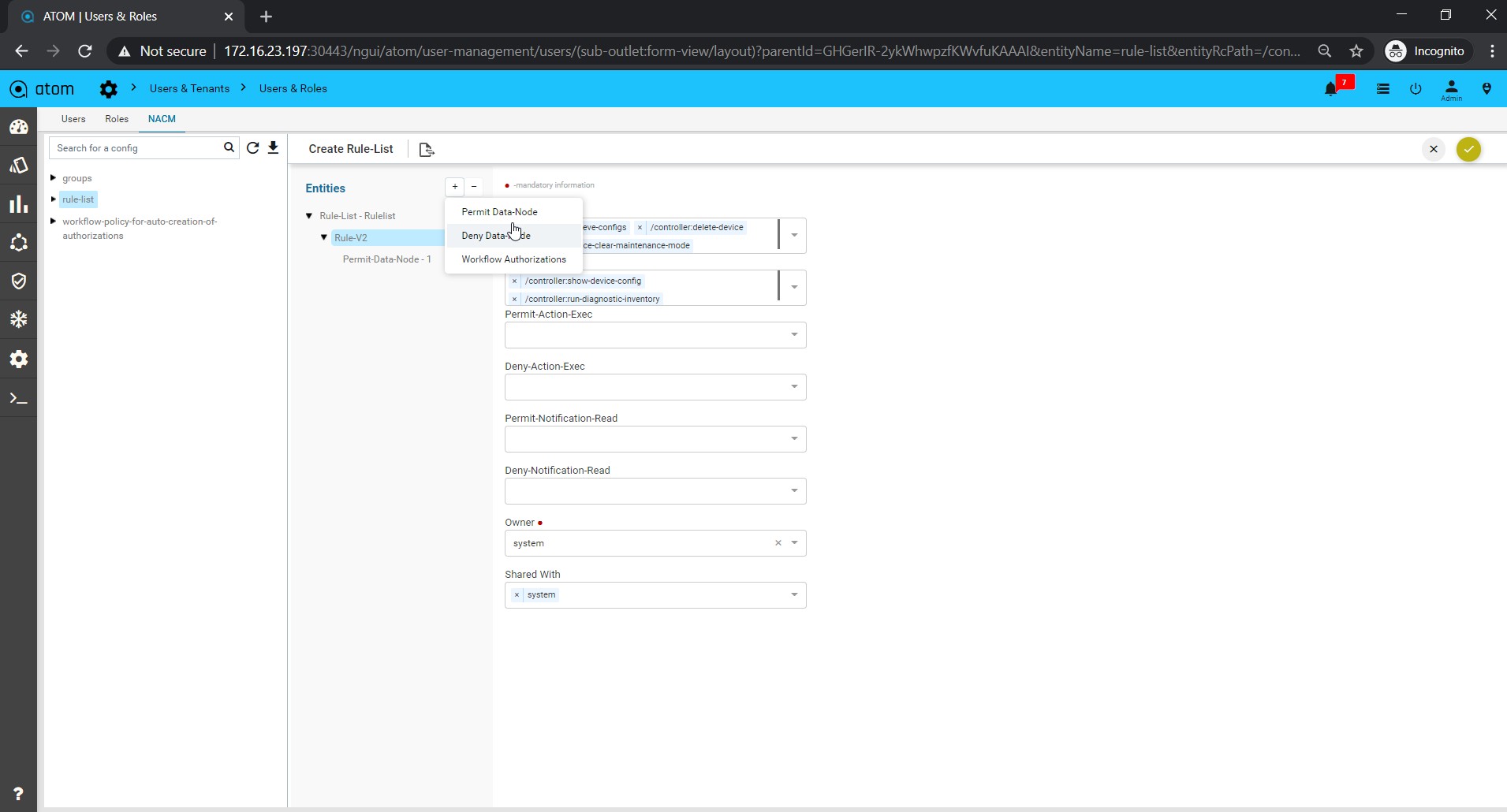

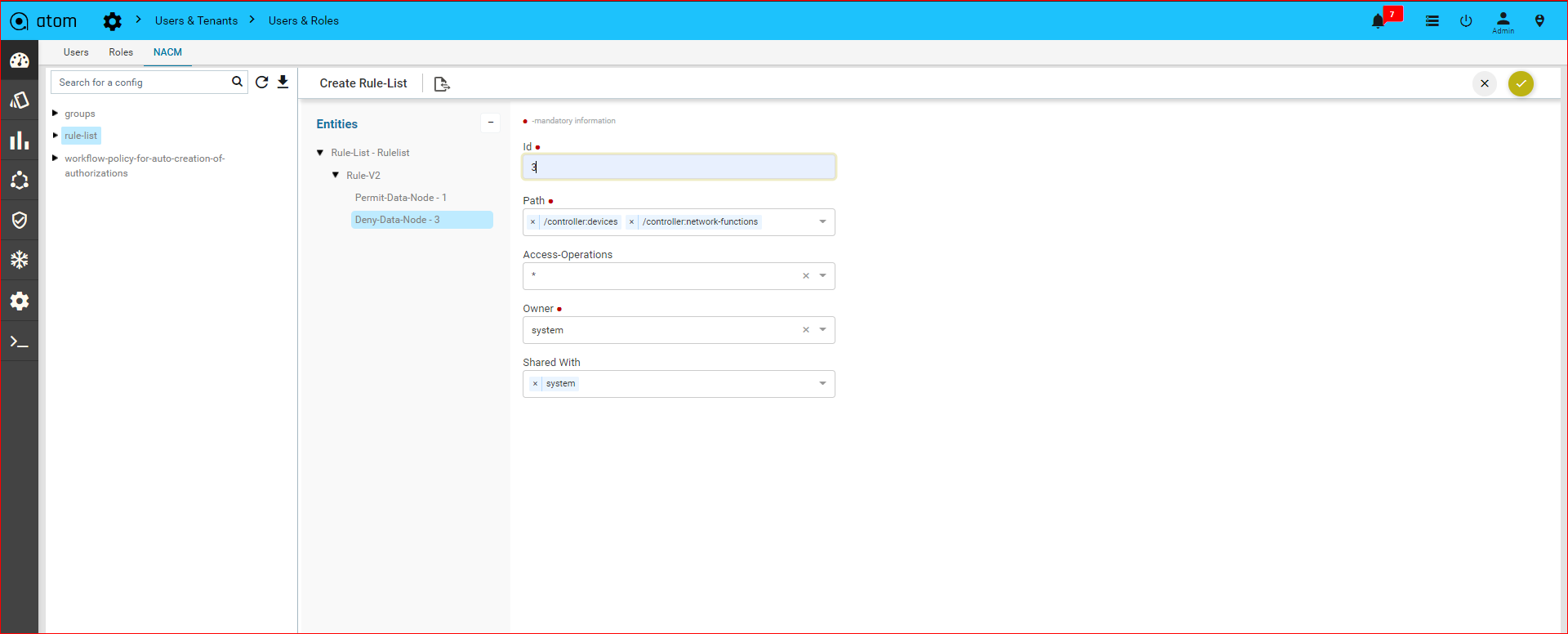

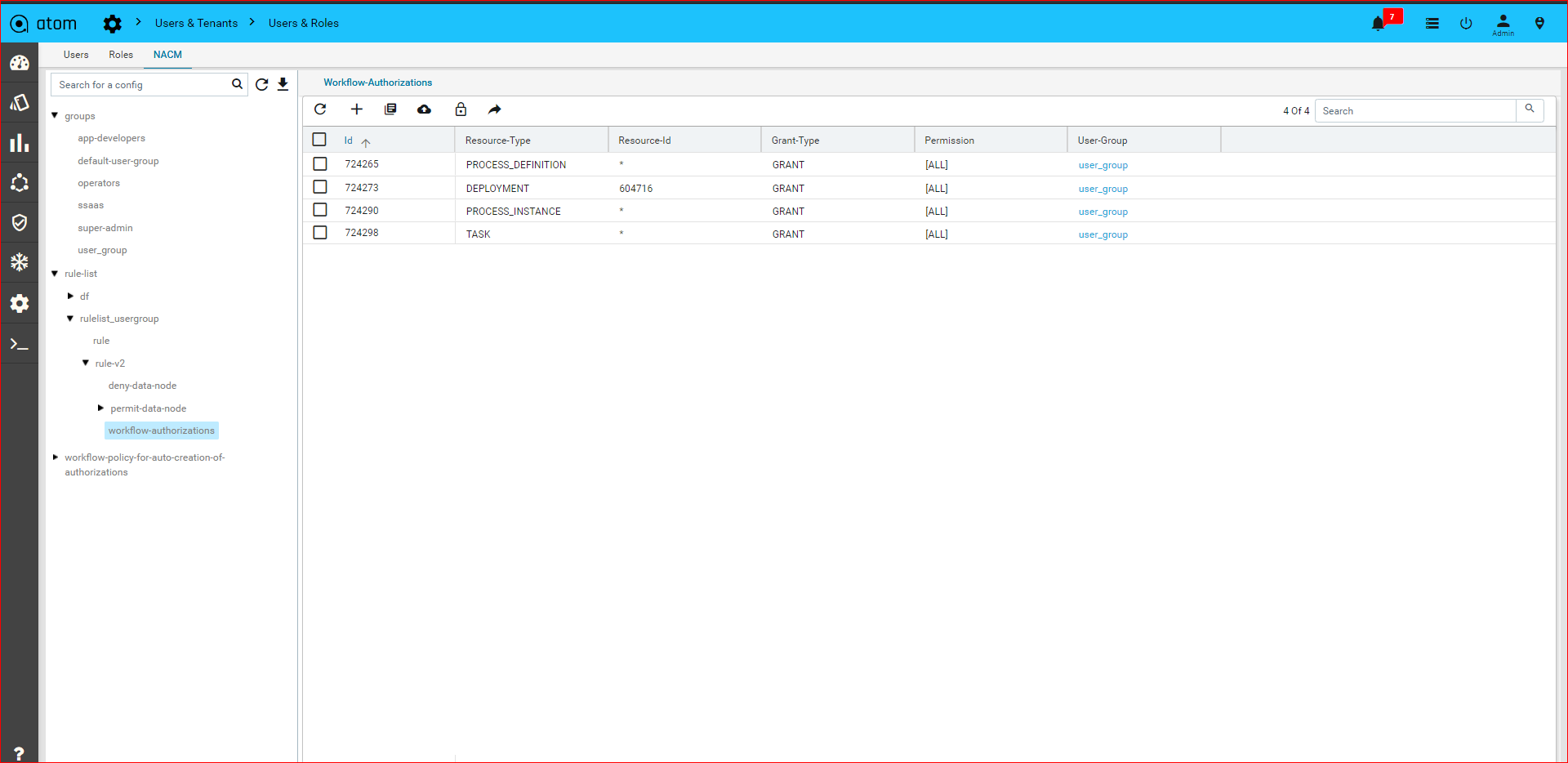

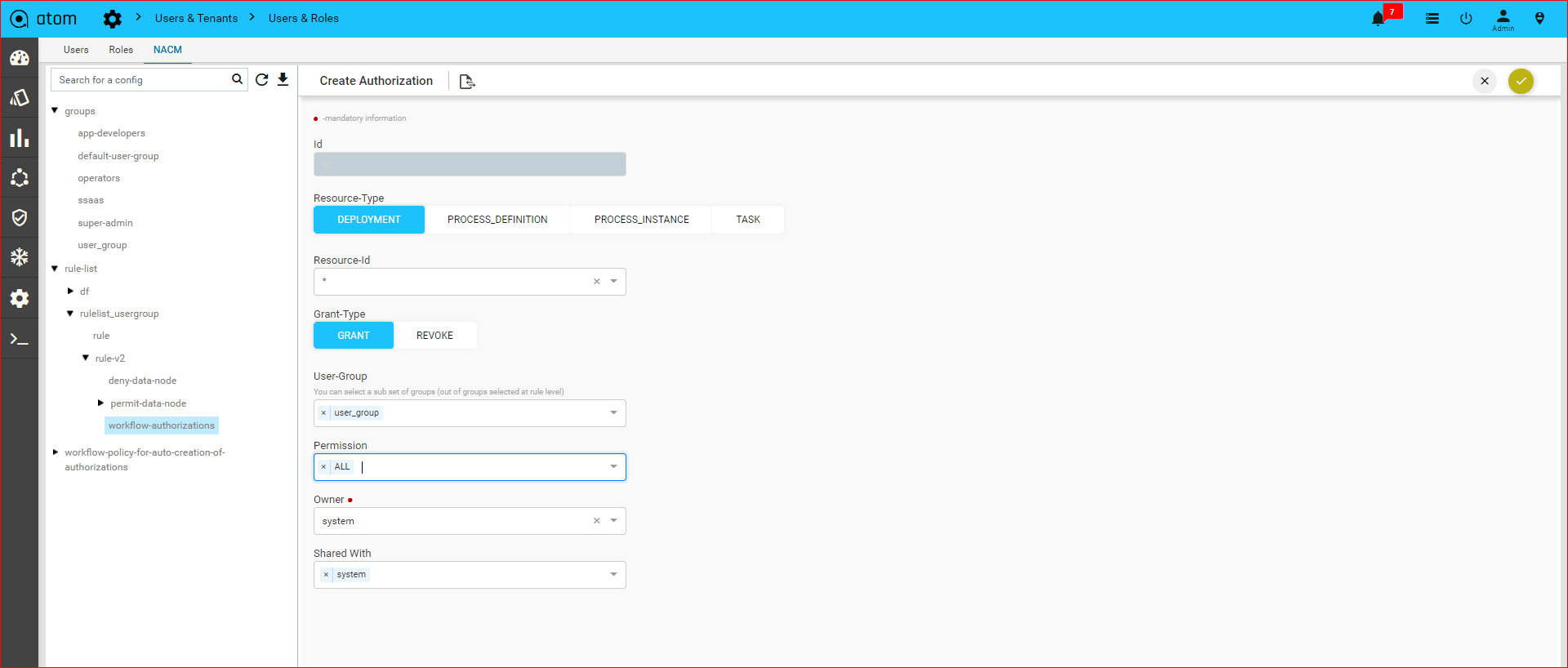

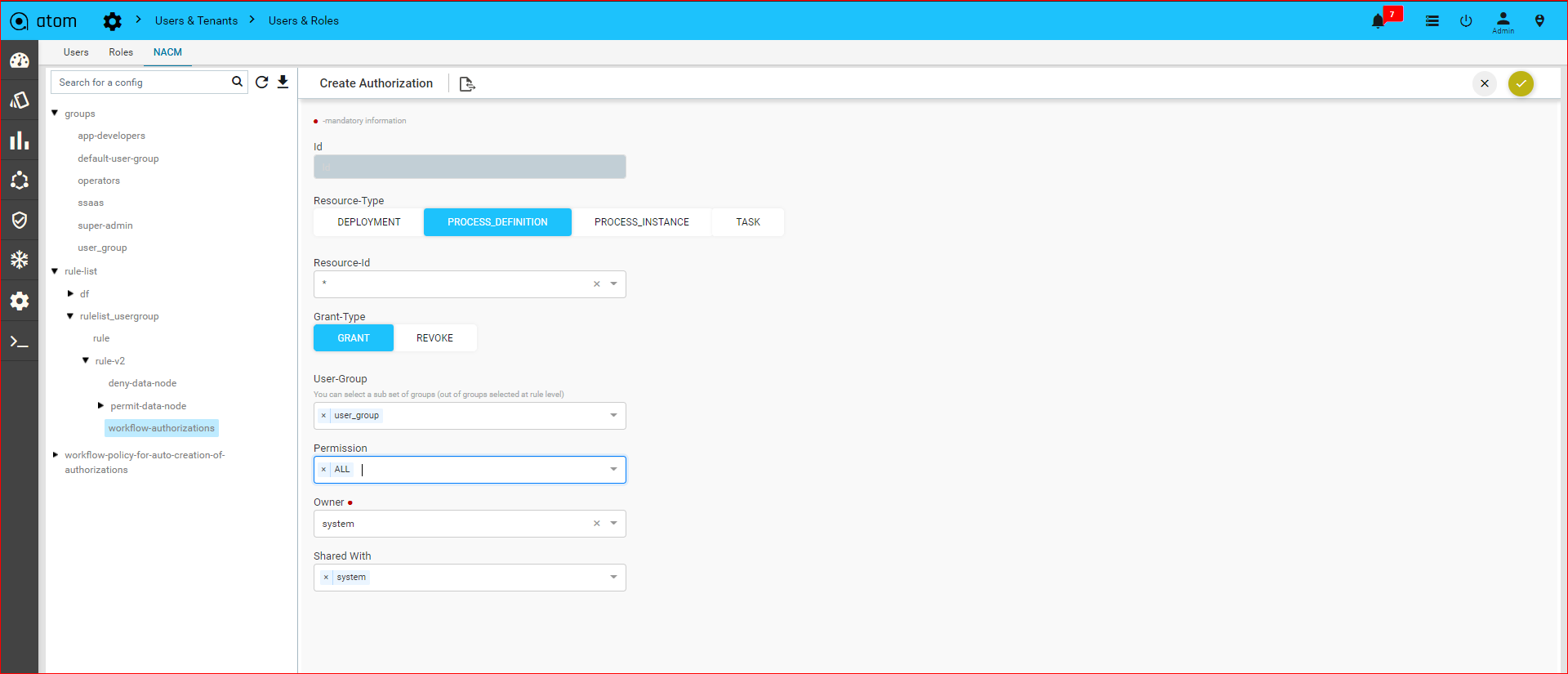

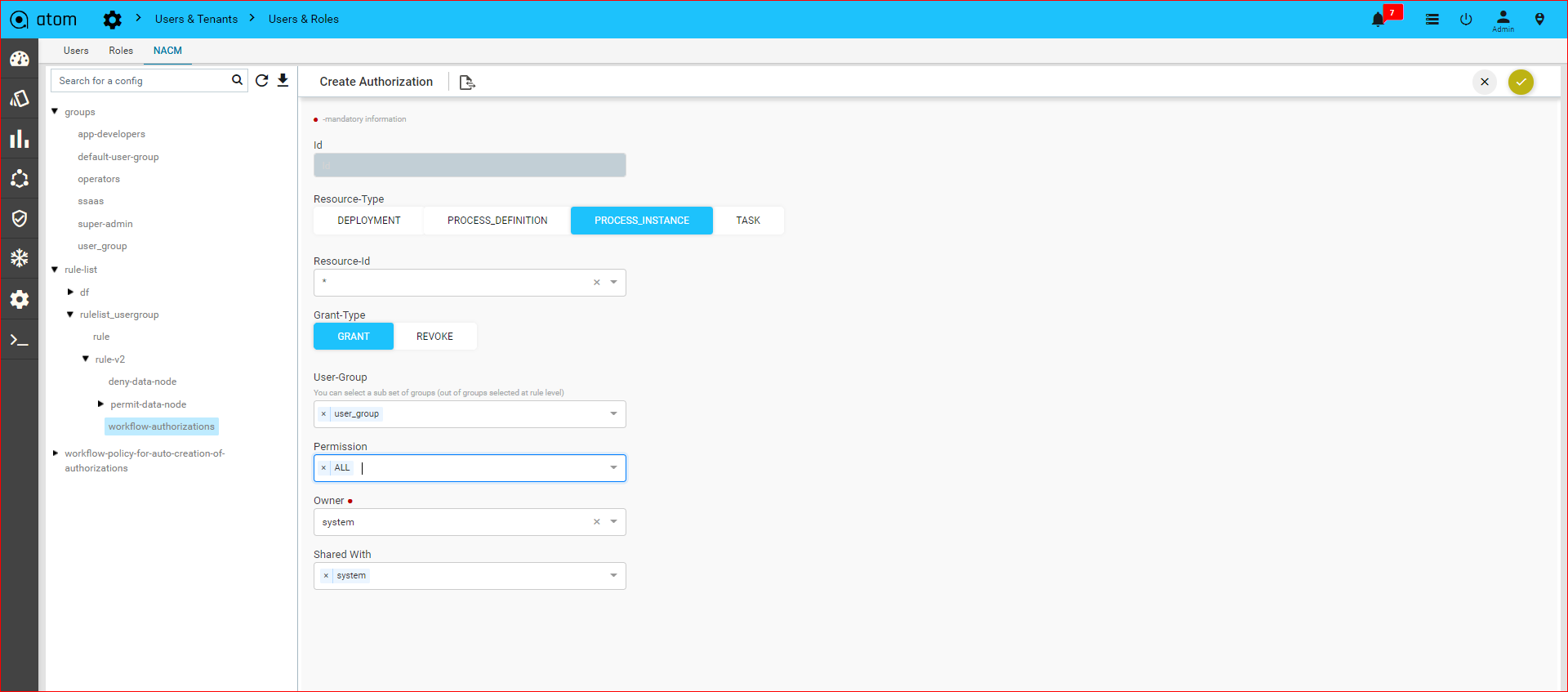

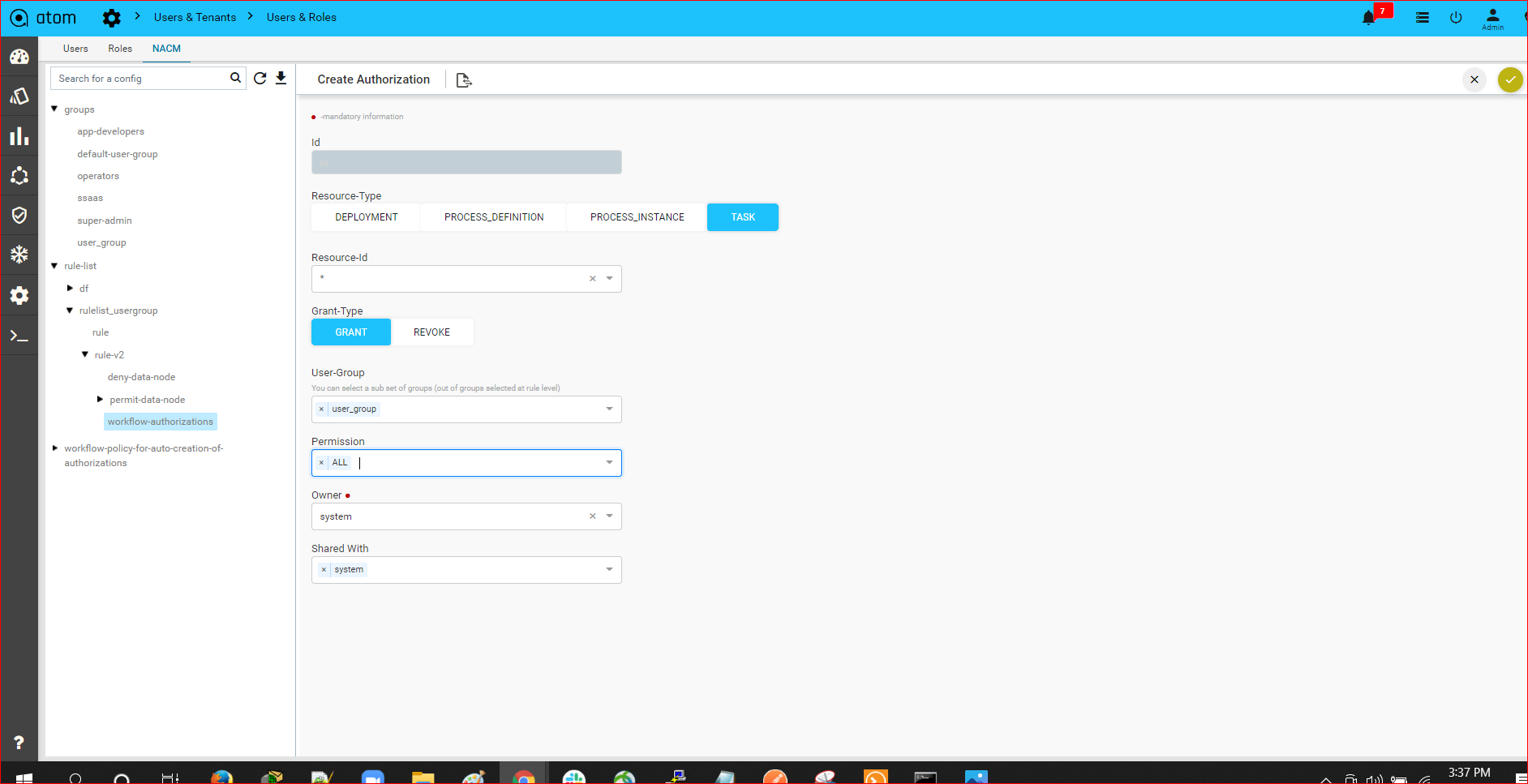

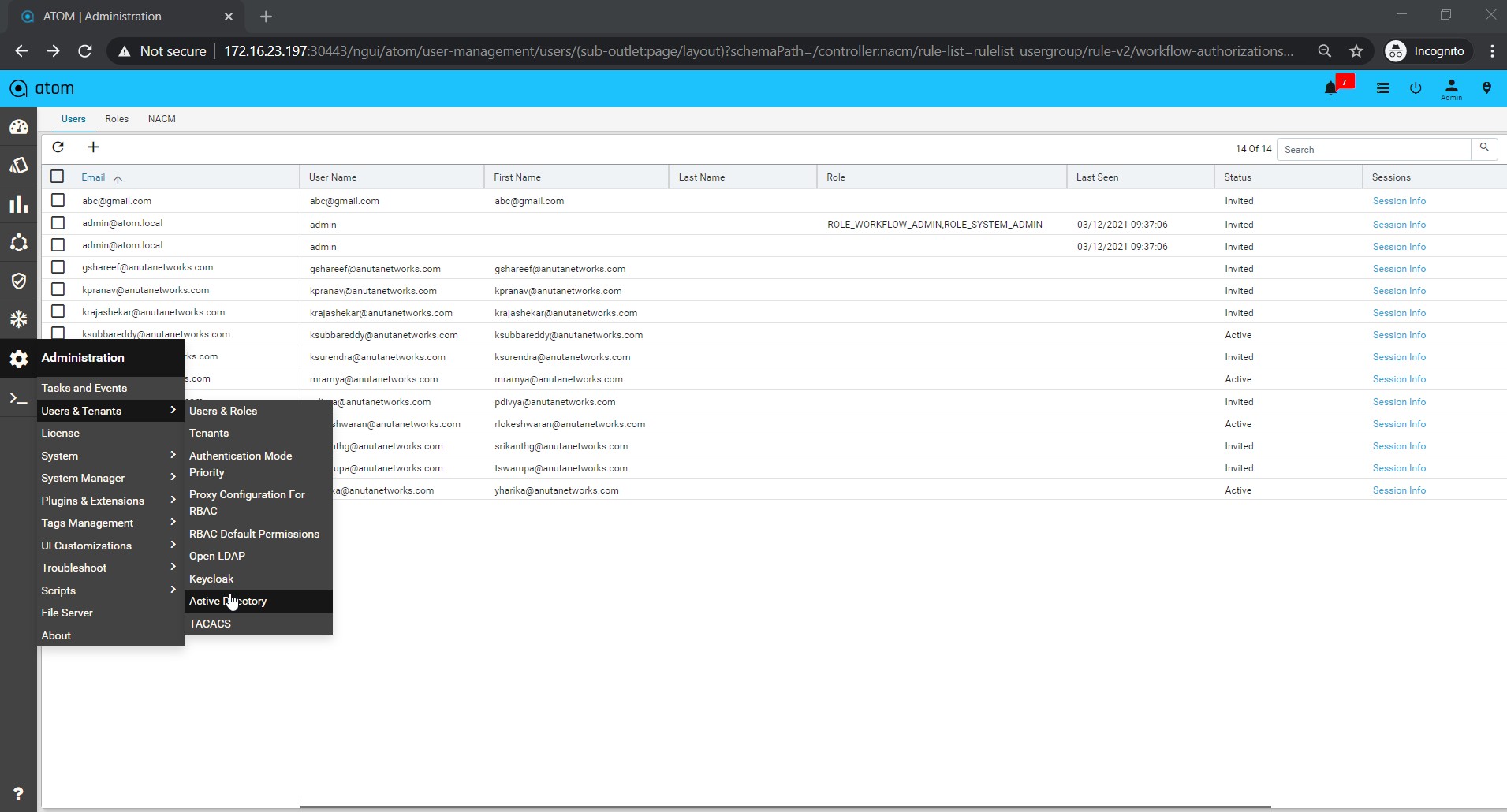

Workflow-User-Level-Authorization302

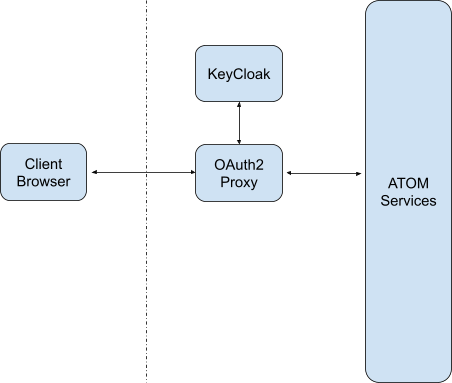

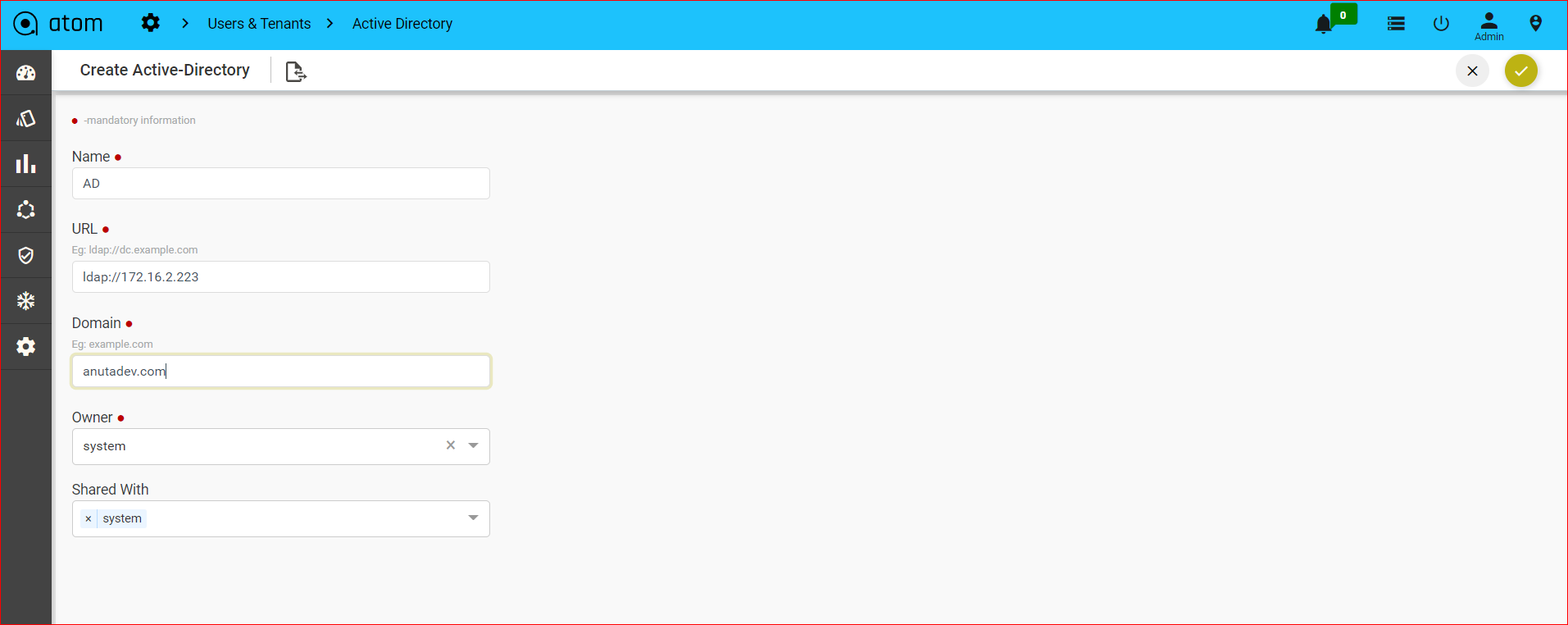

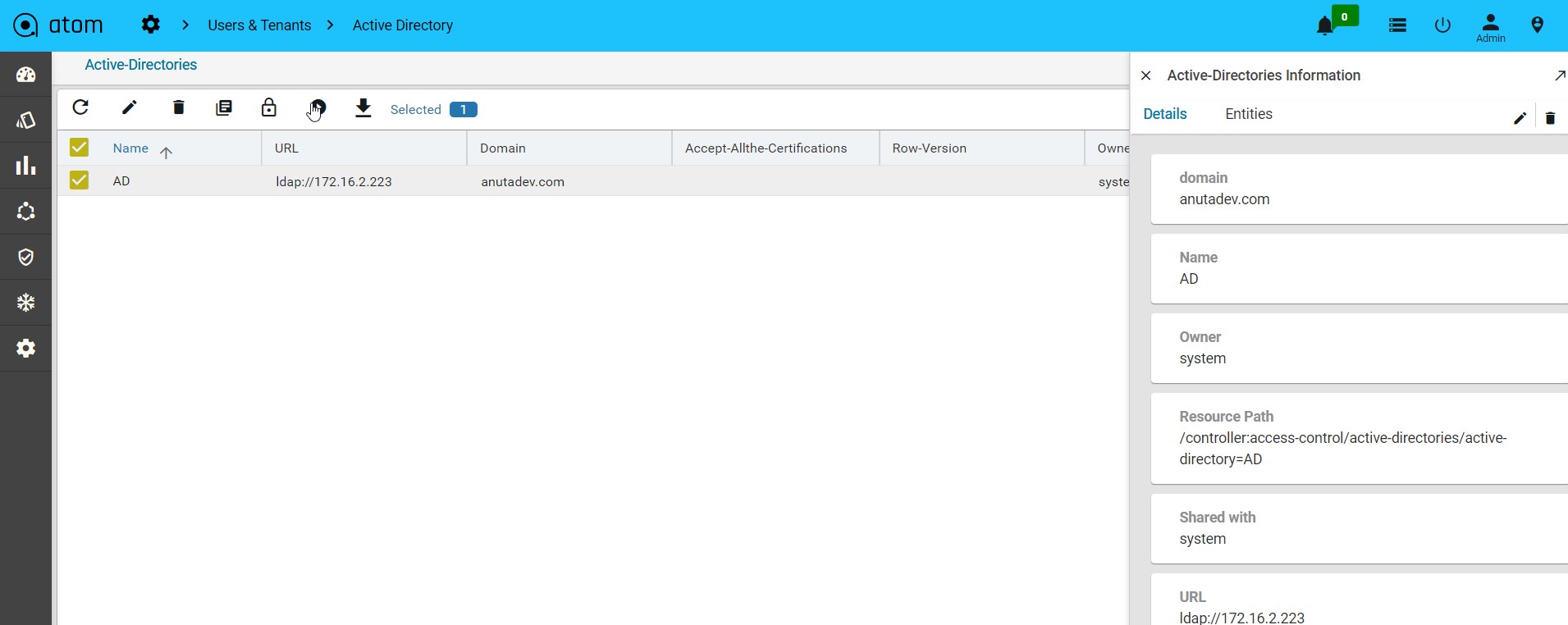

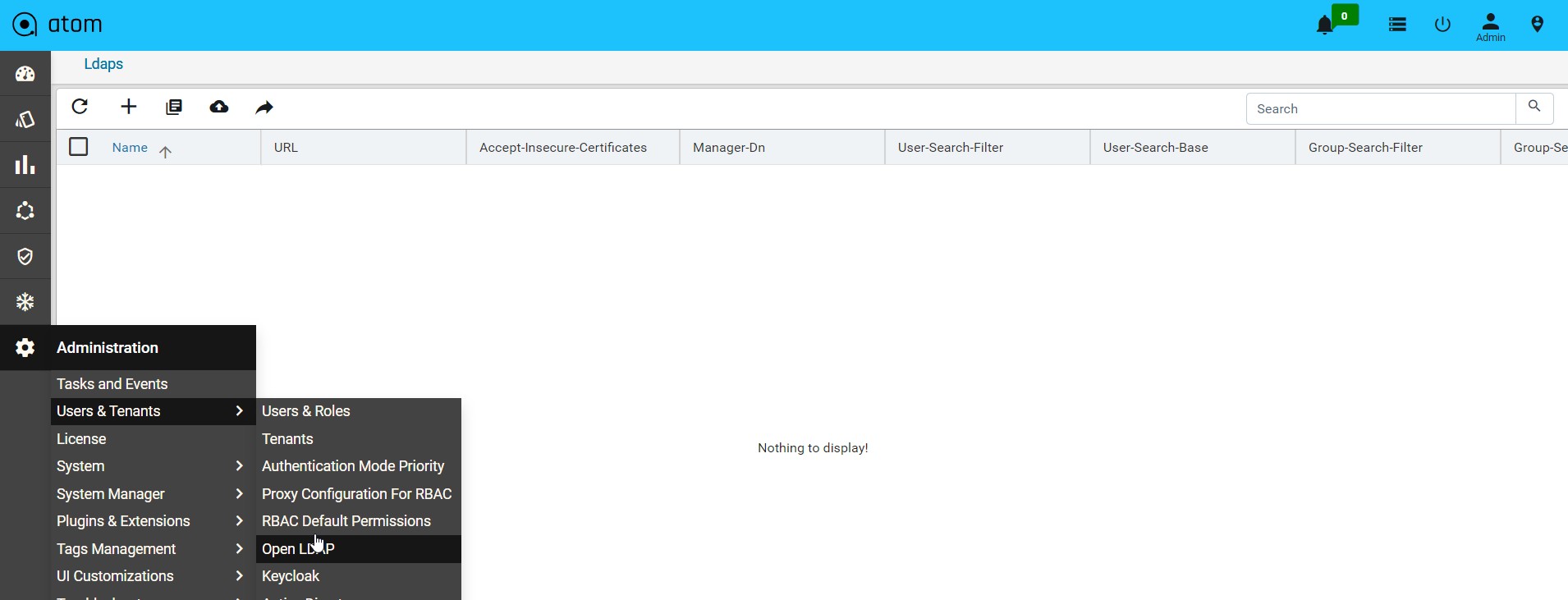

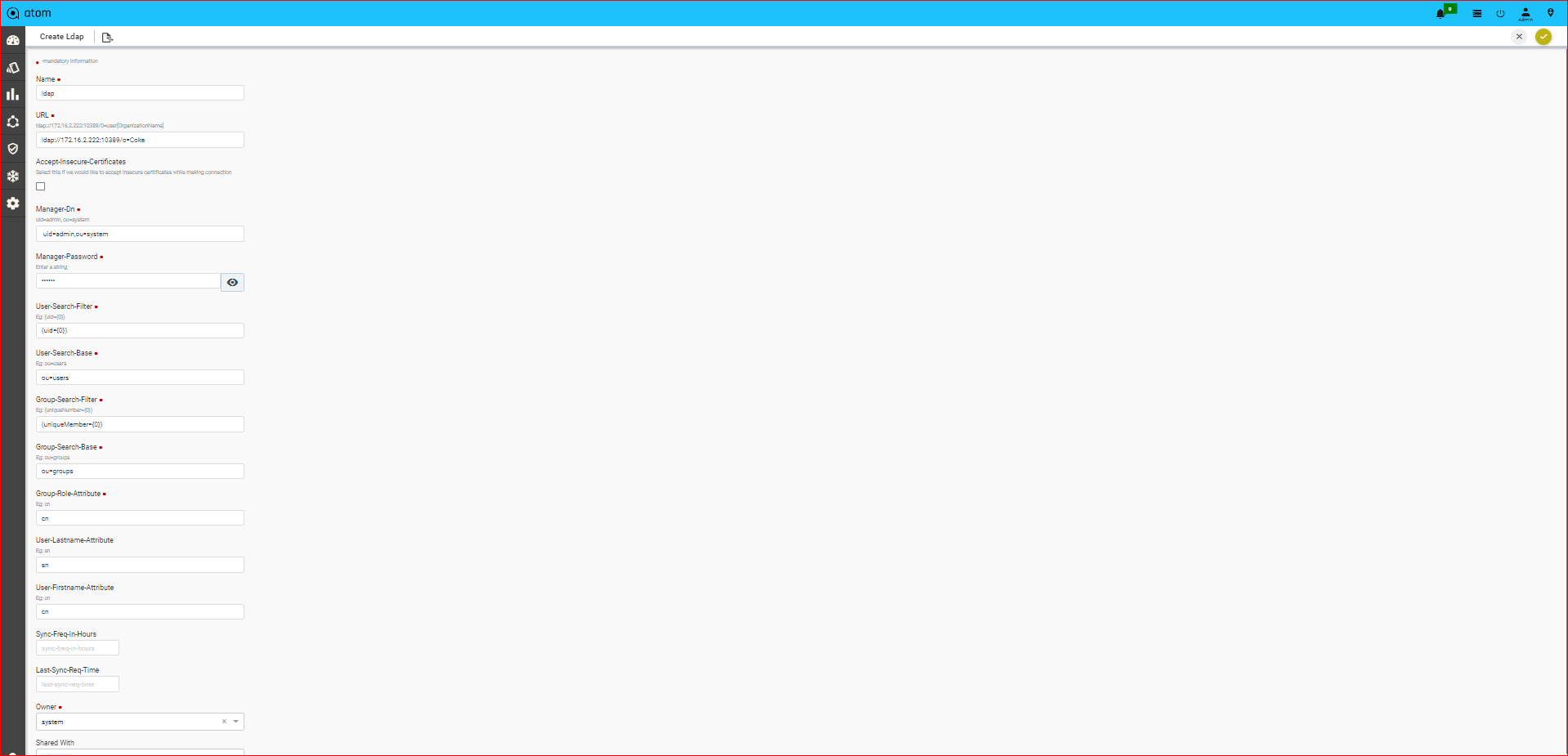

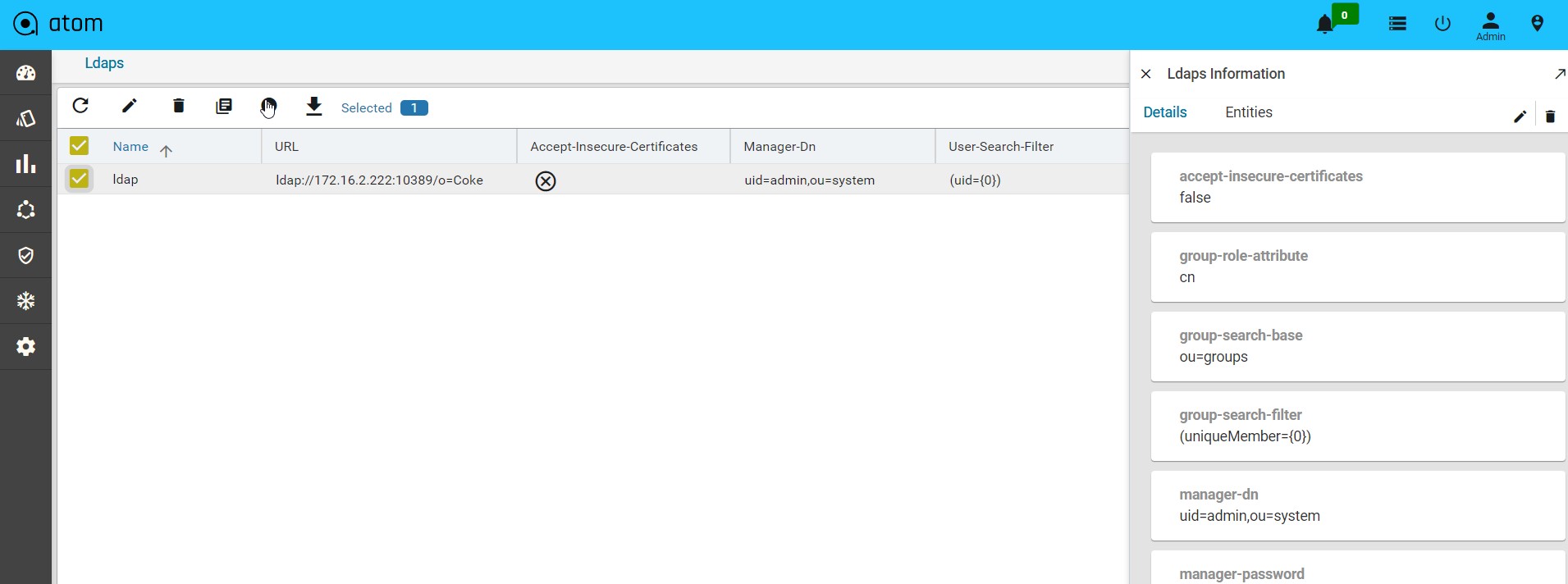

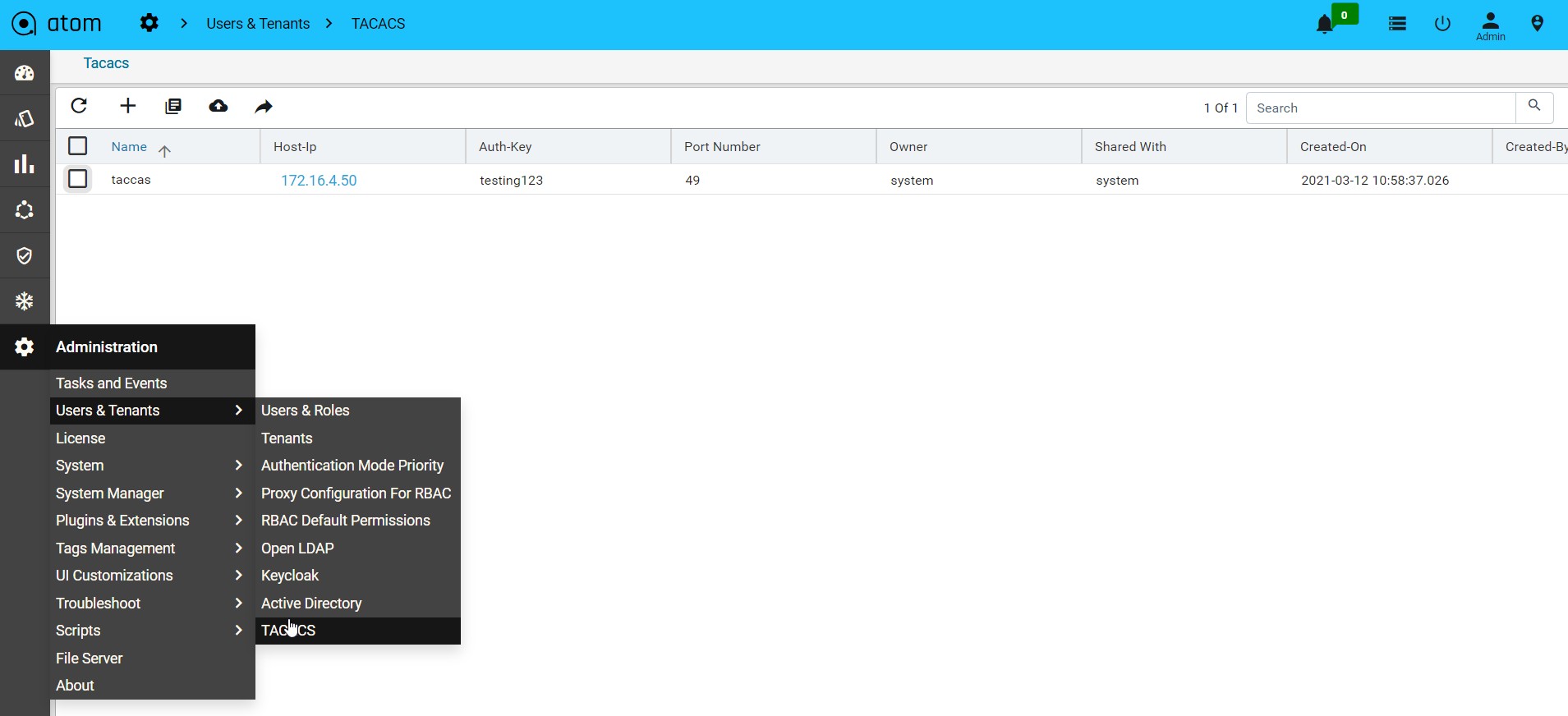

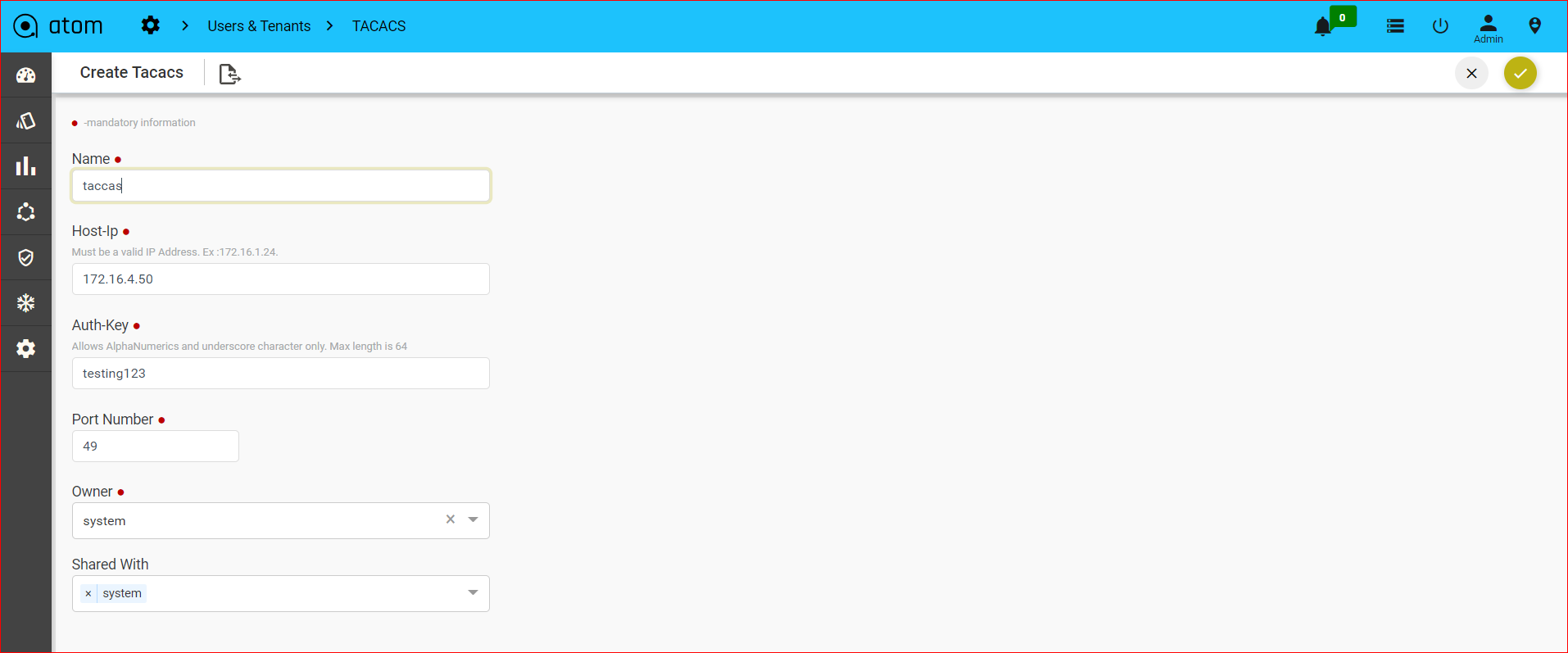

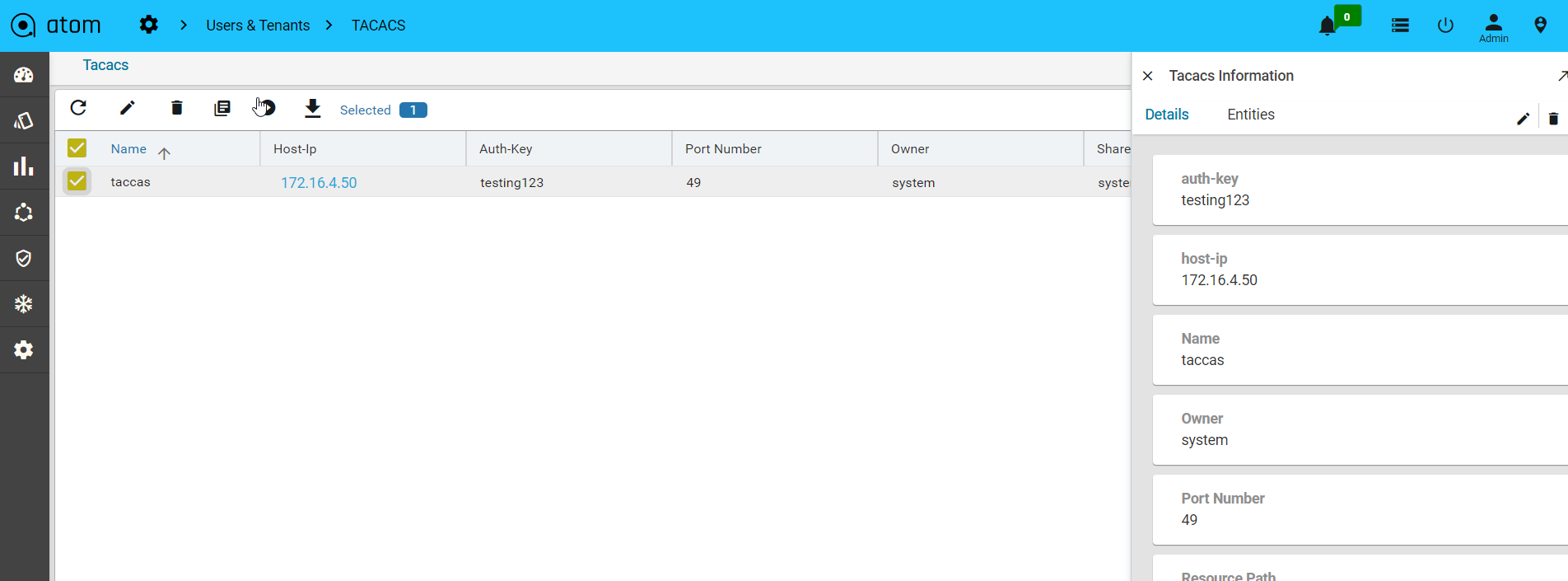

Integrating ATOM with Central Authentication Systems317

Managing Active Directory Users()317

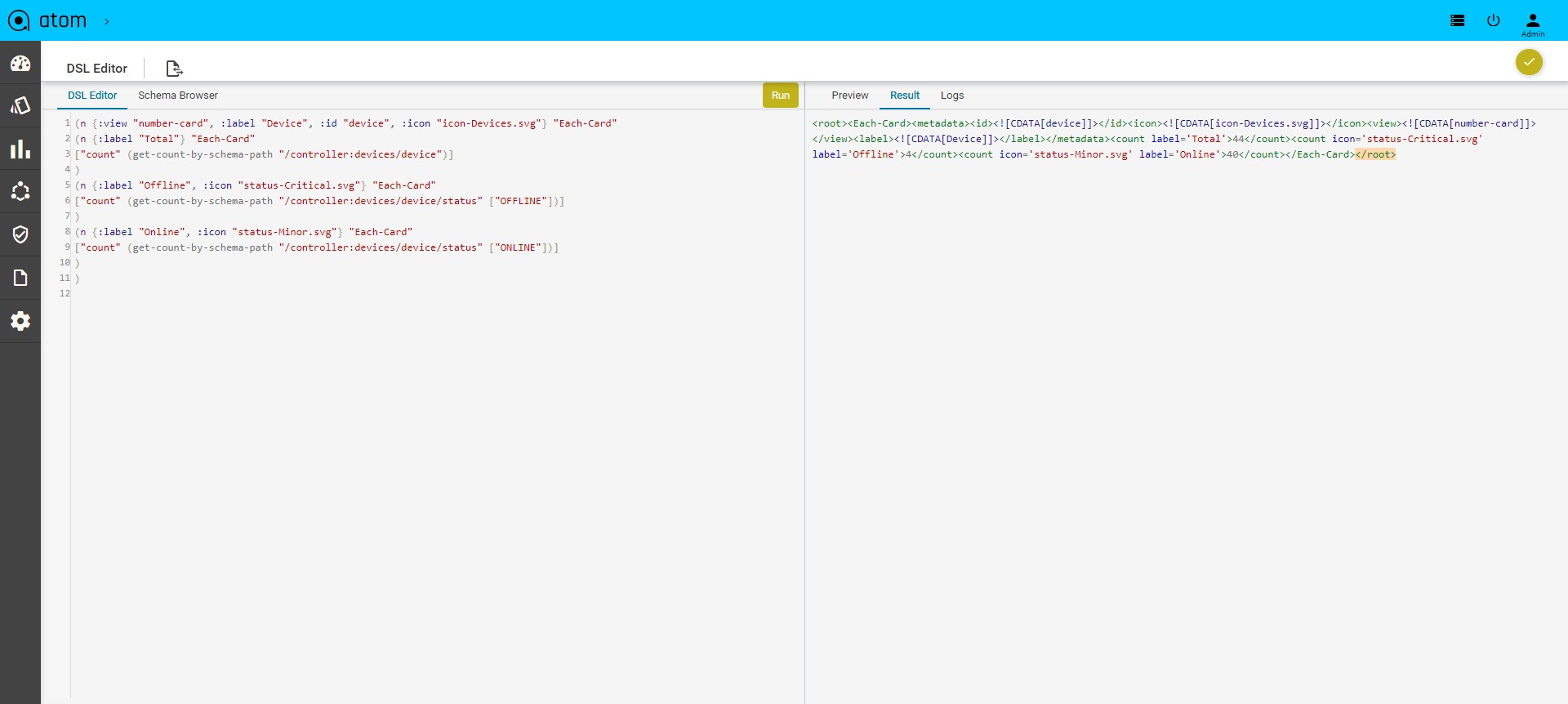

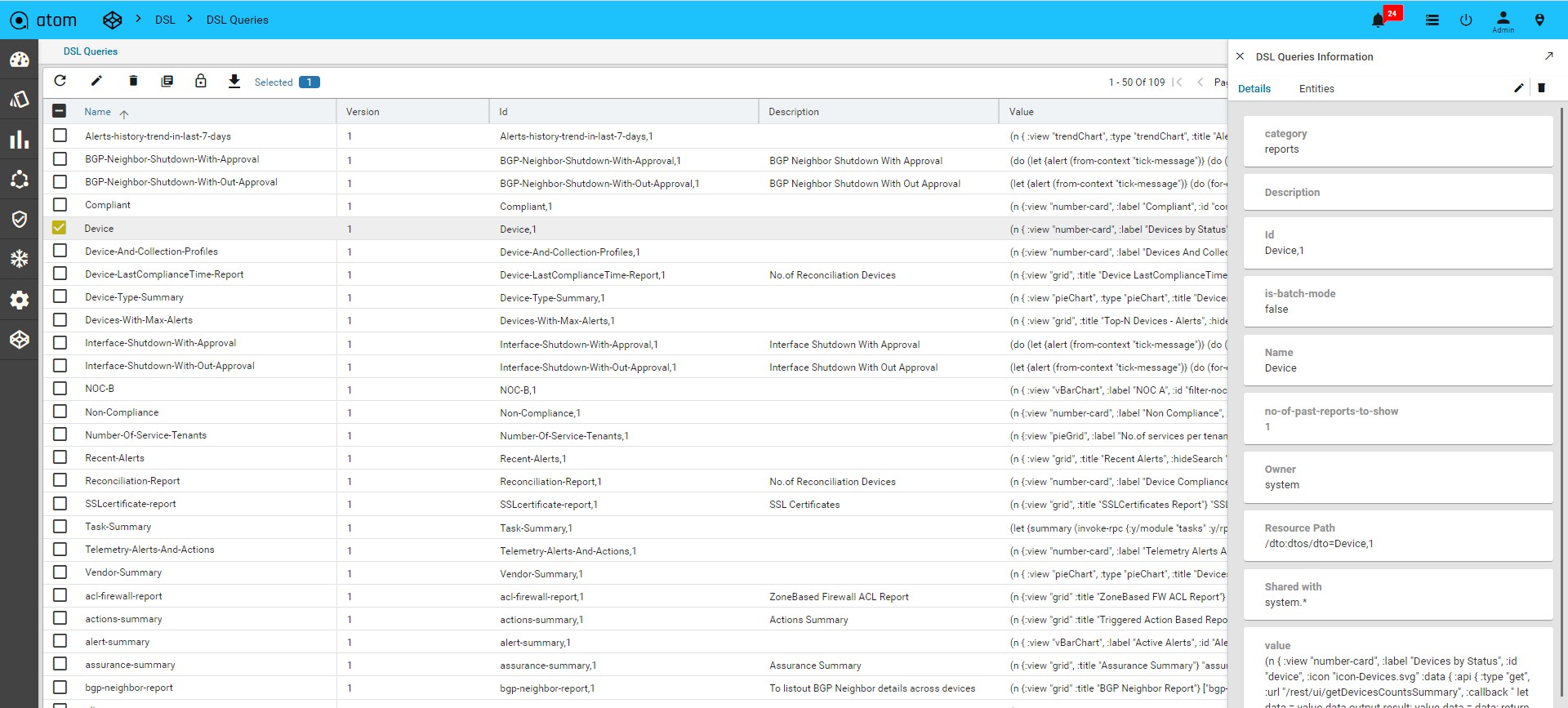

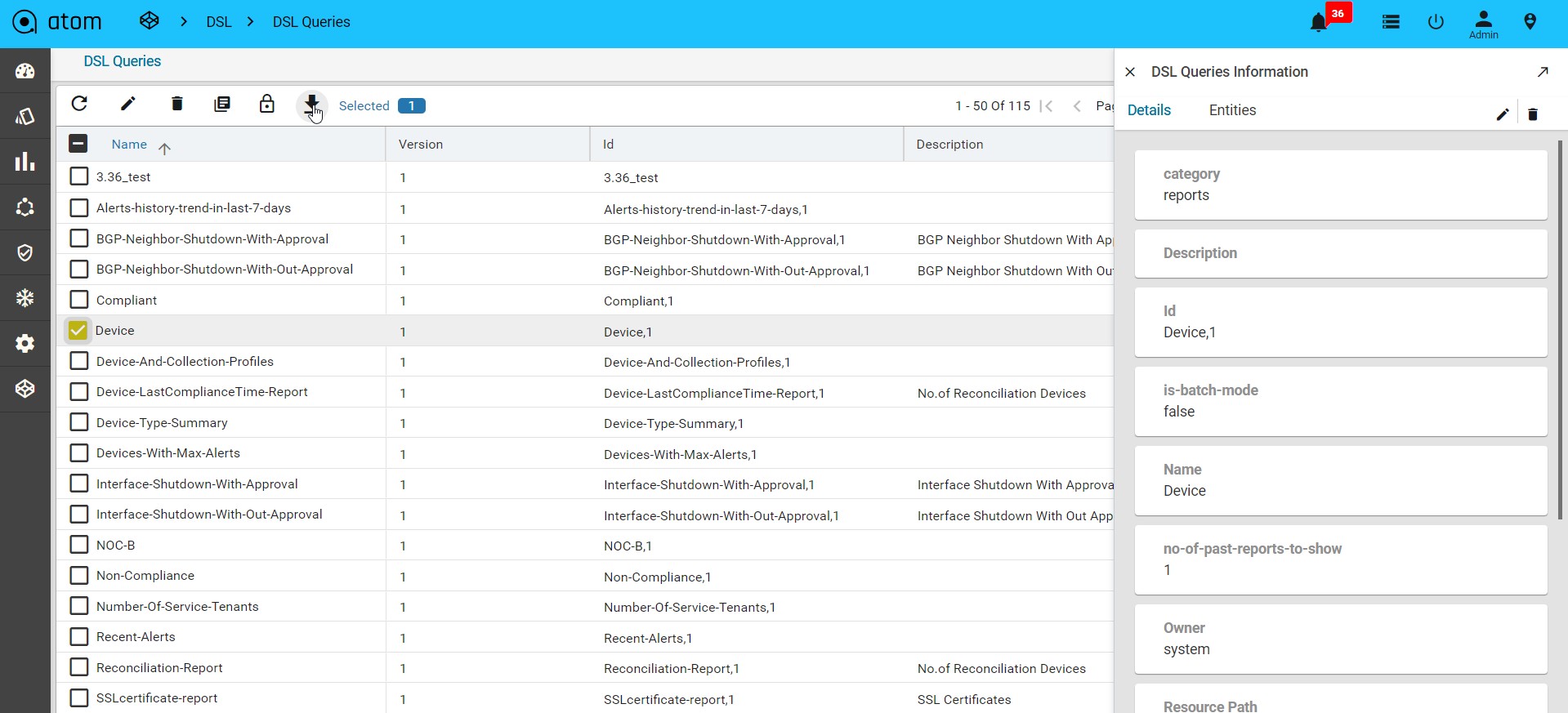

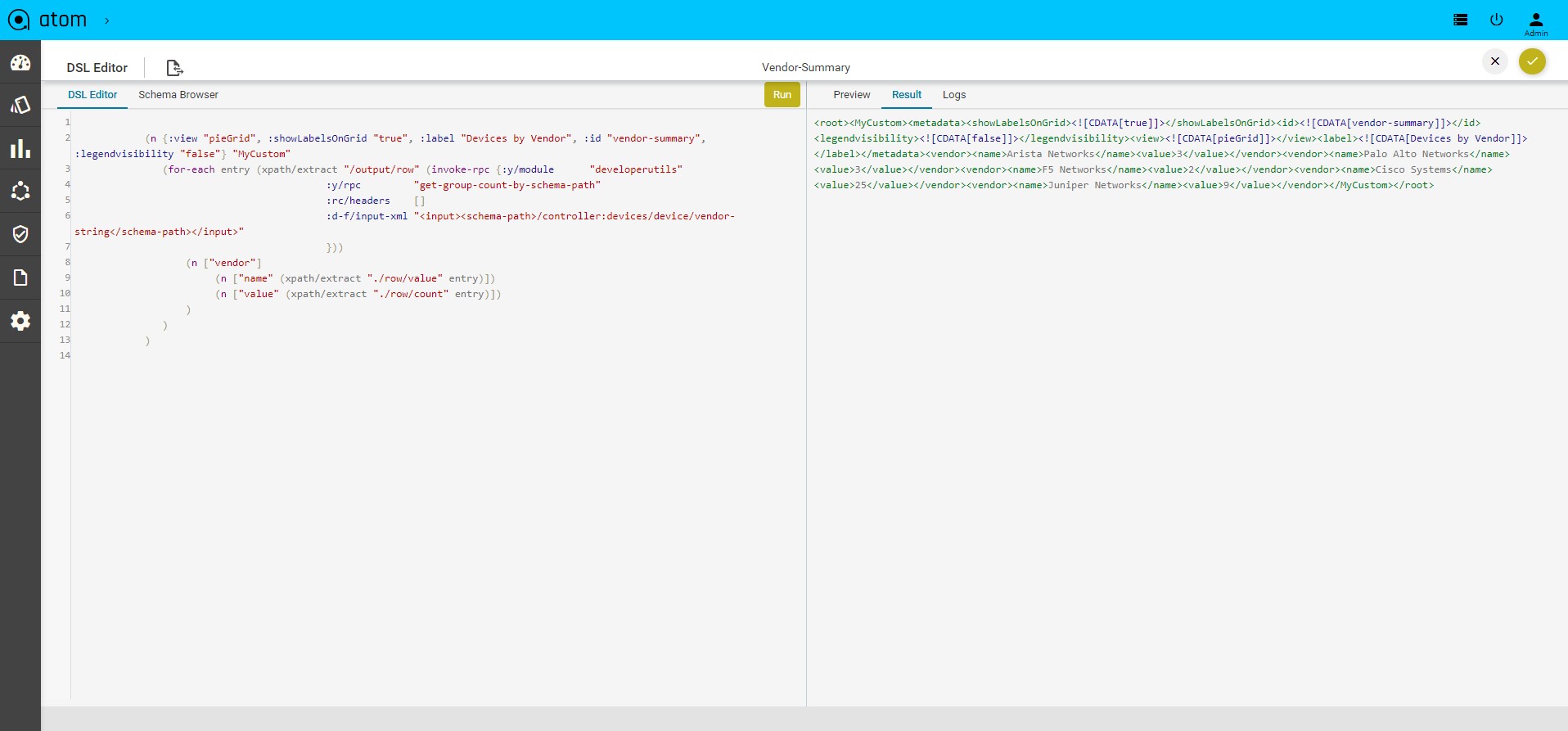

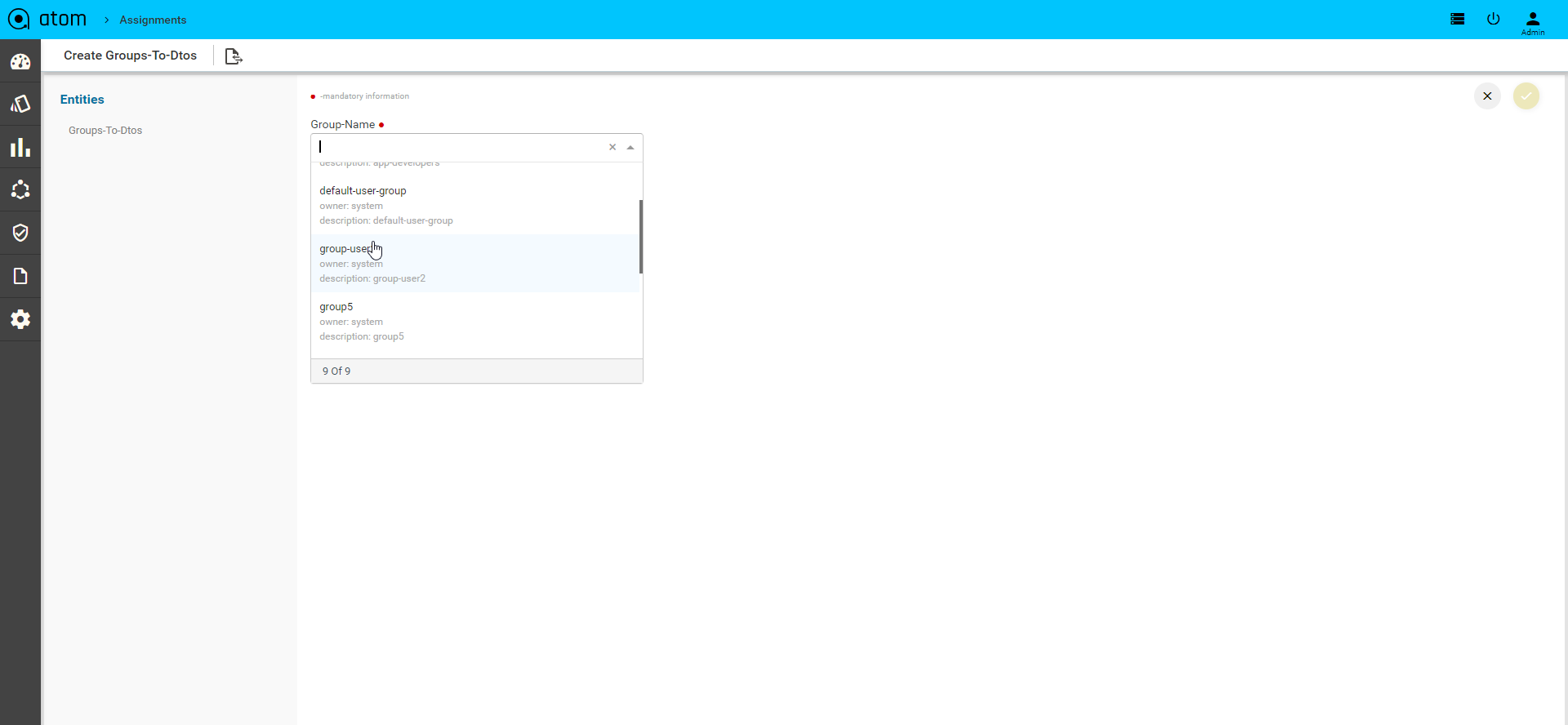

Customizing the Dashboard using DSL323

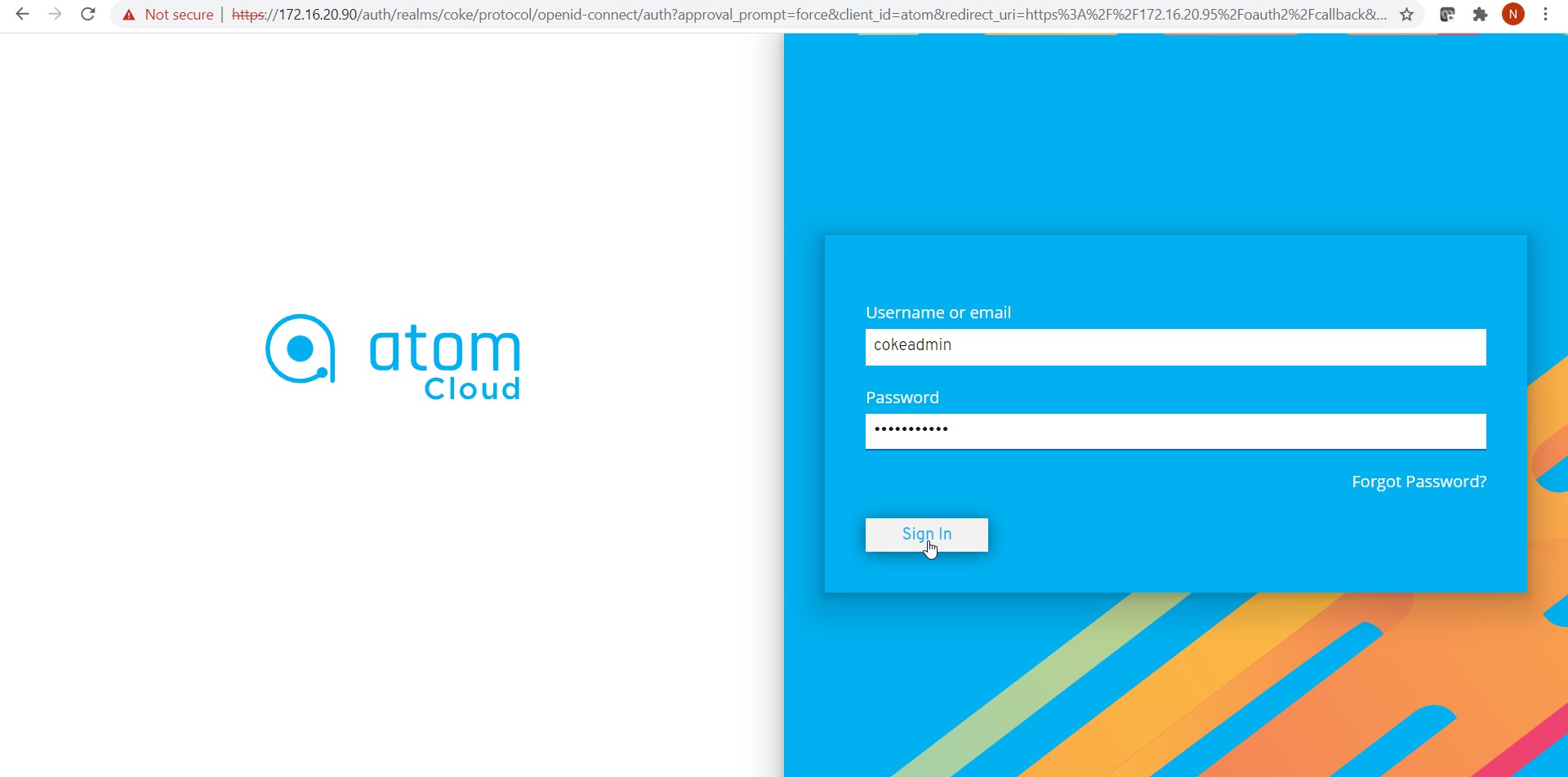

Getting Started with ATOM

Intended Audience

This document is intended for Network Administrators & Operators that are using ATOM to perform Network management, configuration management, services automation and MOPs.

References

- ATOM Deployment Guide – All aspects of ATOM Deployment including sizing and deployment process

- ATOM User Guide – Master [This Document]

- ATOM User Guide – Remote Agent Deployment Guide

- ATOM User Guide – Performance Management & Alerting

- ATOM User Guide – Network Configuration Compliance, Reporting & Remediation

- ATOM API Guide – Discusses all external interfaces and integration flows

- ATOM Platform Guide – Discusses Service model, Device model and Workflow development

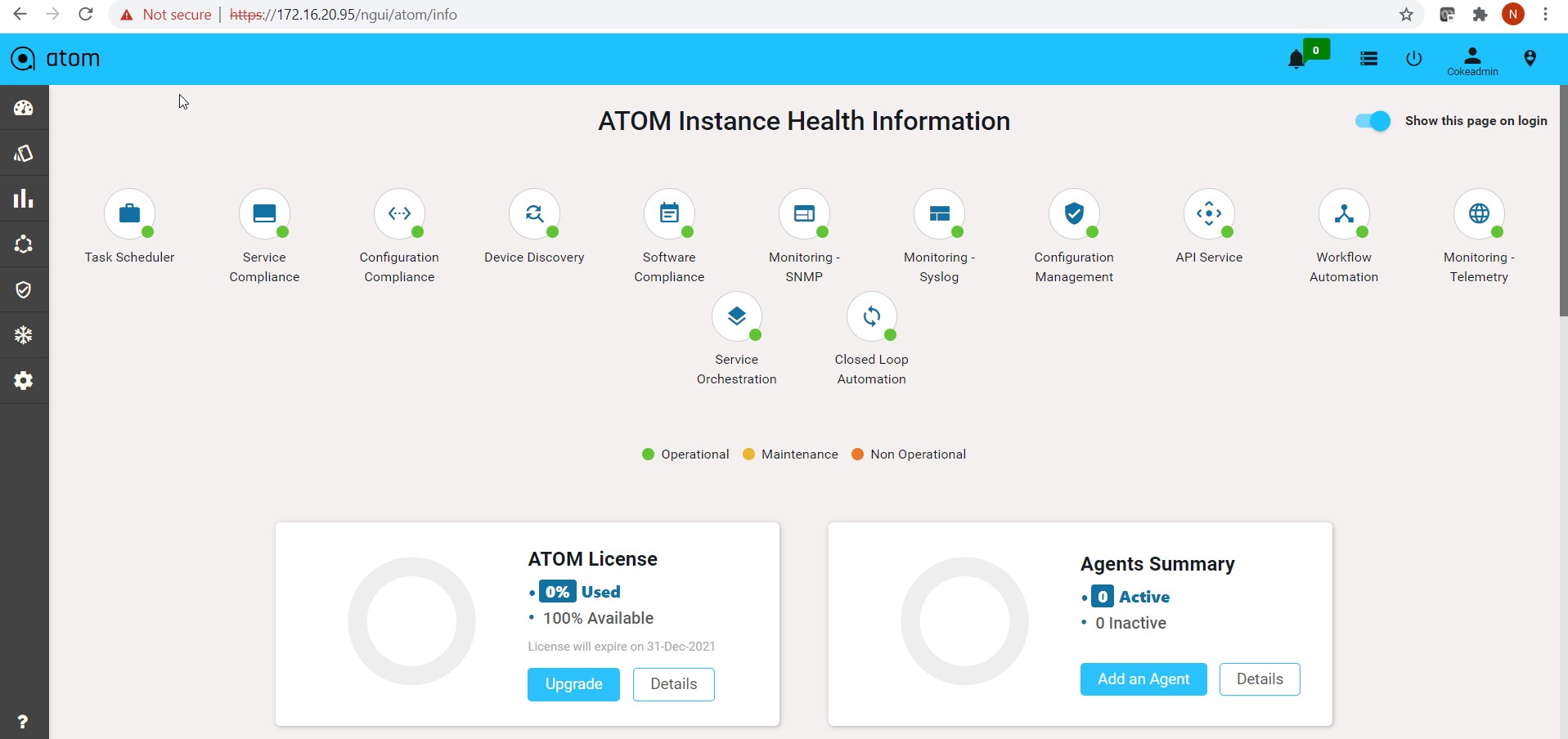

ATOM Solution Overview

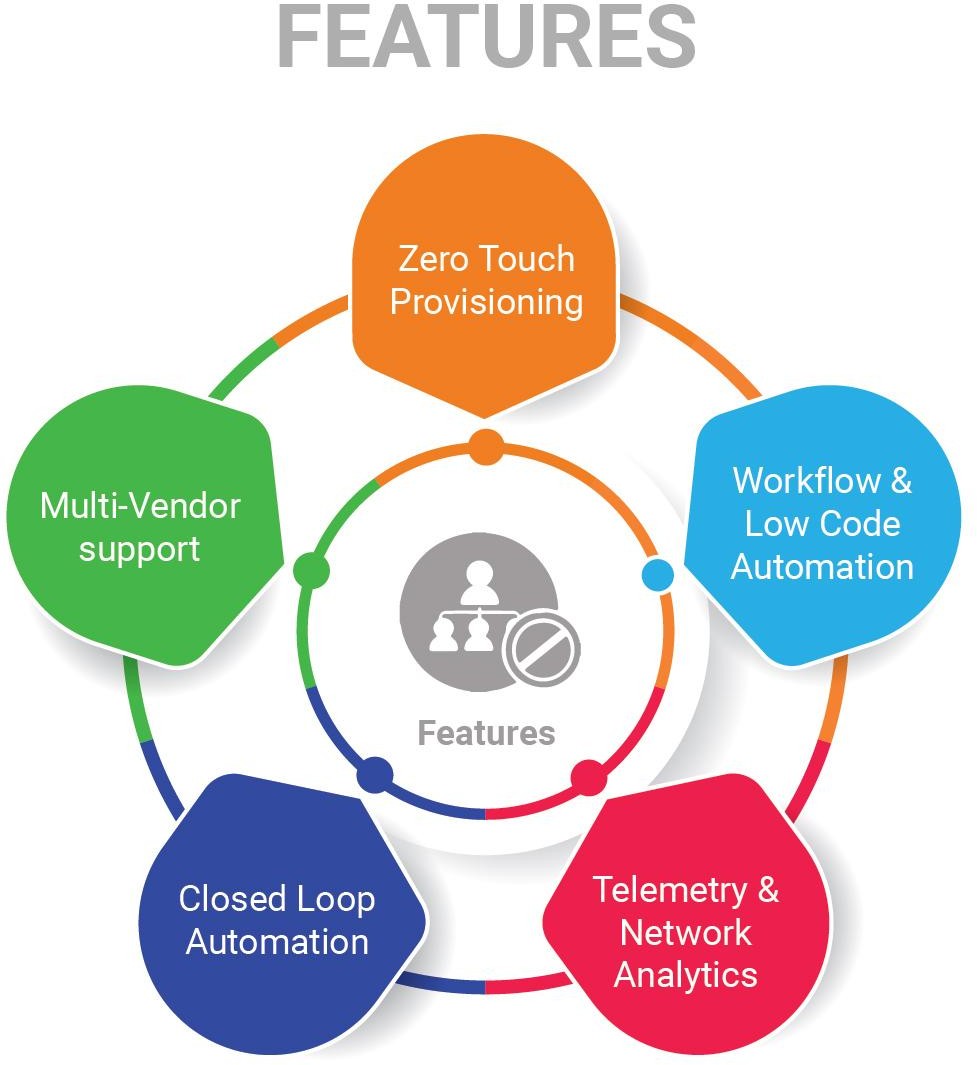

Following sections provide a brief overview of ATOM Features.

Configuration Management

ATOM provides Configuration management capabilities for a wide variety of devices. This includes configuration archival, scheduling, trigger driven configuration sync, configuration diff etc.,

Topology

ATOM provides topology discovery through CDP & LLDP. Topology can be displayed hierarchically using Resource Pools (Device Groups). Topology overlays Alarms and Performance information.

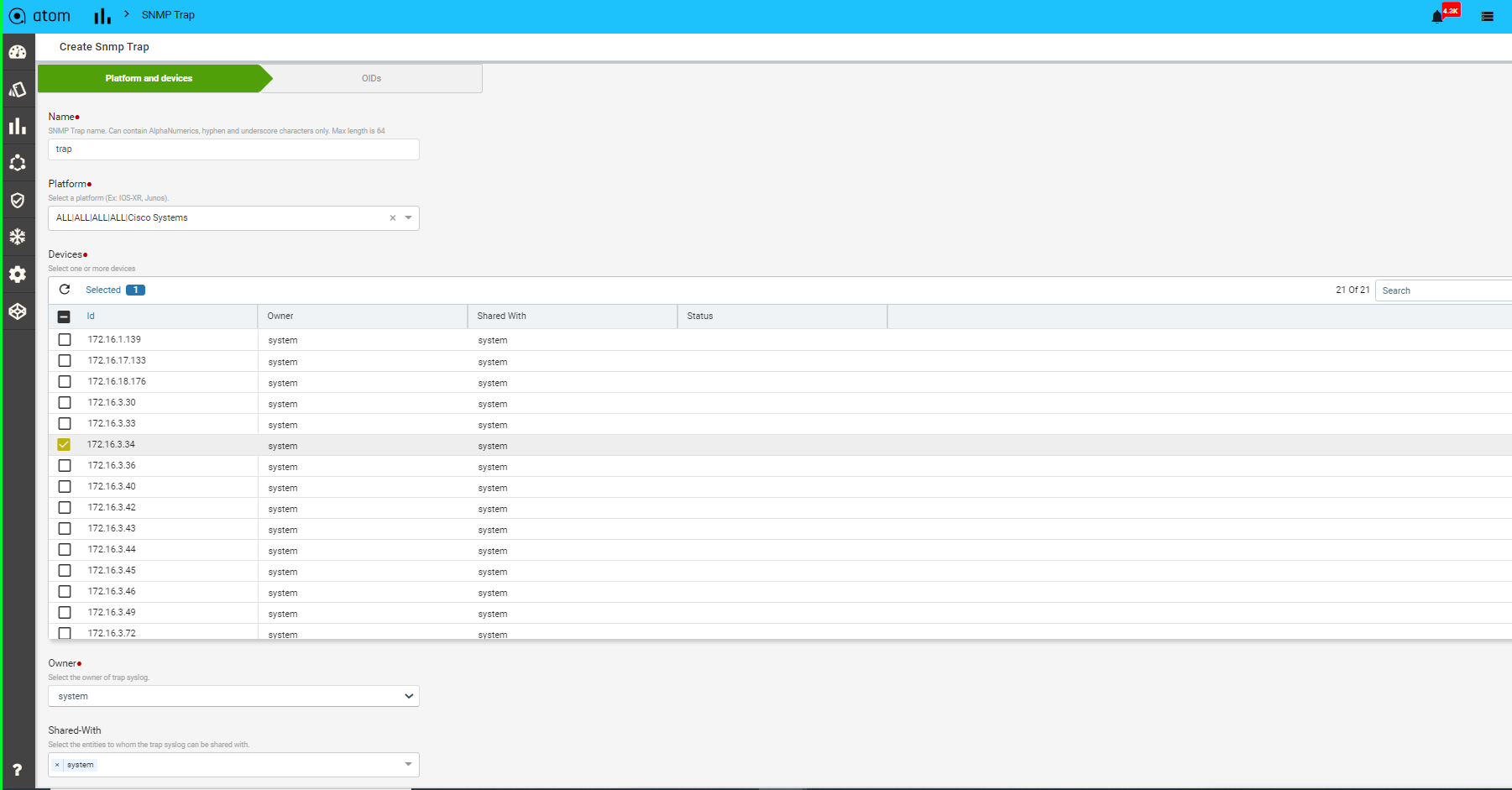

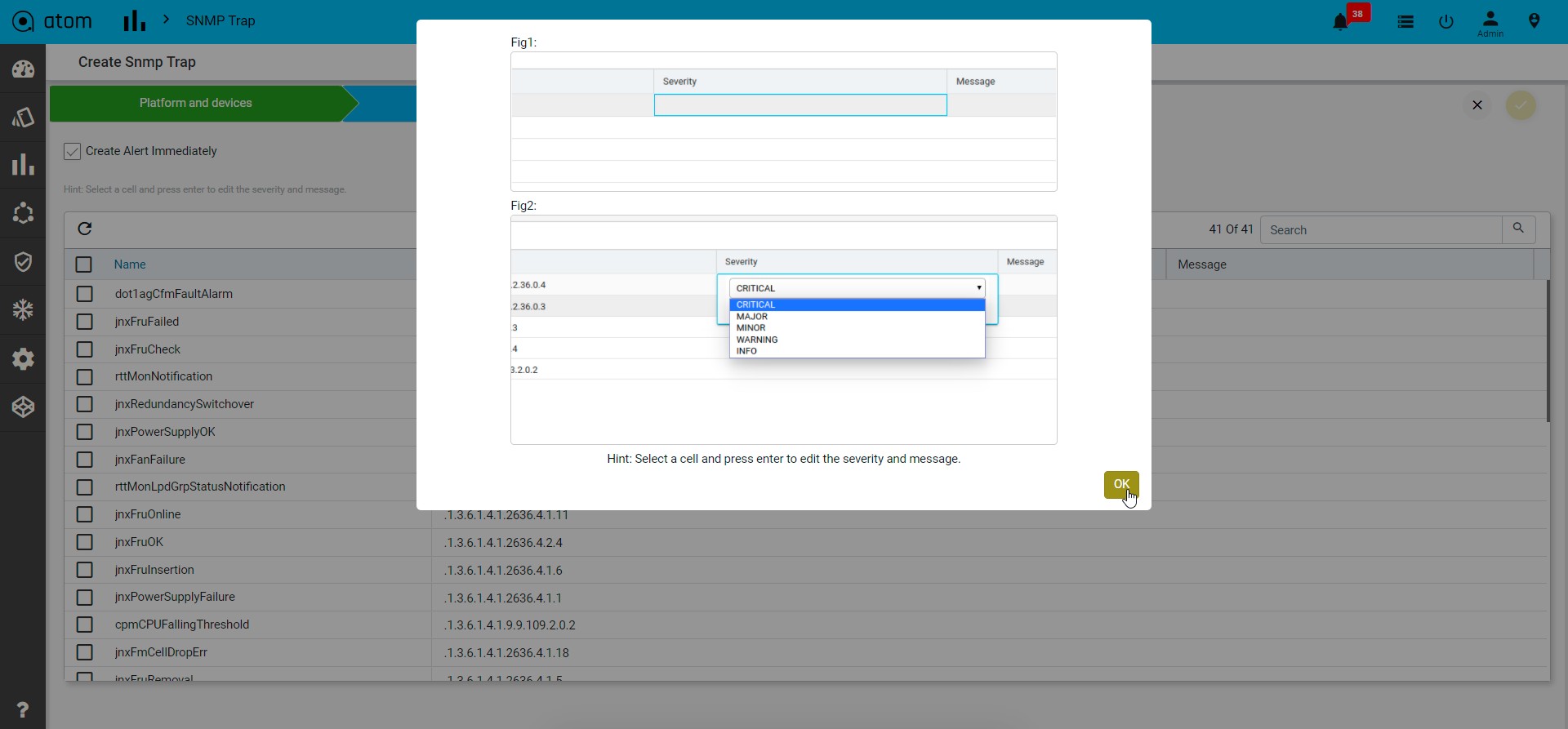

Collection & Reporting

ATOM supports collection of network operational and performance data through various protocols like SNMP, SNMP Trap, Syslog & Telemetry. Such information can be visualized in ATOM as reports or can be rendered on Grafana as Charts. Admin guide discusses Report customization in further detail.

Network Automation

ATOM provides Model driven Network automation for stateful services. Stateful services involve a Service model (YANG) and some business logic. Service model development is covered in ATOM Platform guide. Admin guide discusses how to deploy & operate a service.

Workflow & Low Code Automation

ATOM provides an intuitive graphical designer to design, deploy and execute simple or complicated network operations and procedures. It allows the administrator to configure pre-checks, post-checks and approval flow. Workflow creation flows will be covered in the ATOM Platform Guide. Admin guide discusses how to deploy & operate.

Telemetry & Network Analytics

In today’s economy, data is the new oil. Anuta’s ATOM helps organizations collect a massive amount of network data from thousands of devices and generate detailed in-depth insights that will help them deliver innovative applications and solutions to their customers. ATOM can collect network data from a variety of sources including model-driven telemetry, SNMP and Syslog. The diverse data format of each source is normalized to provide a single consistent view to the administrator. Grafana is packaged as part of ATOM to view historical data, observe patterns and predict future trends. Organizations can integrate their Big Data and AI platform with ATOM to generate business insights from the network element configuration and operational state.

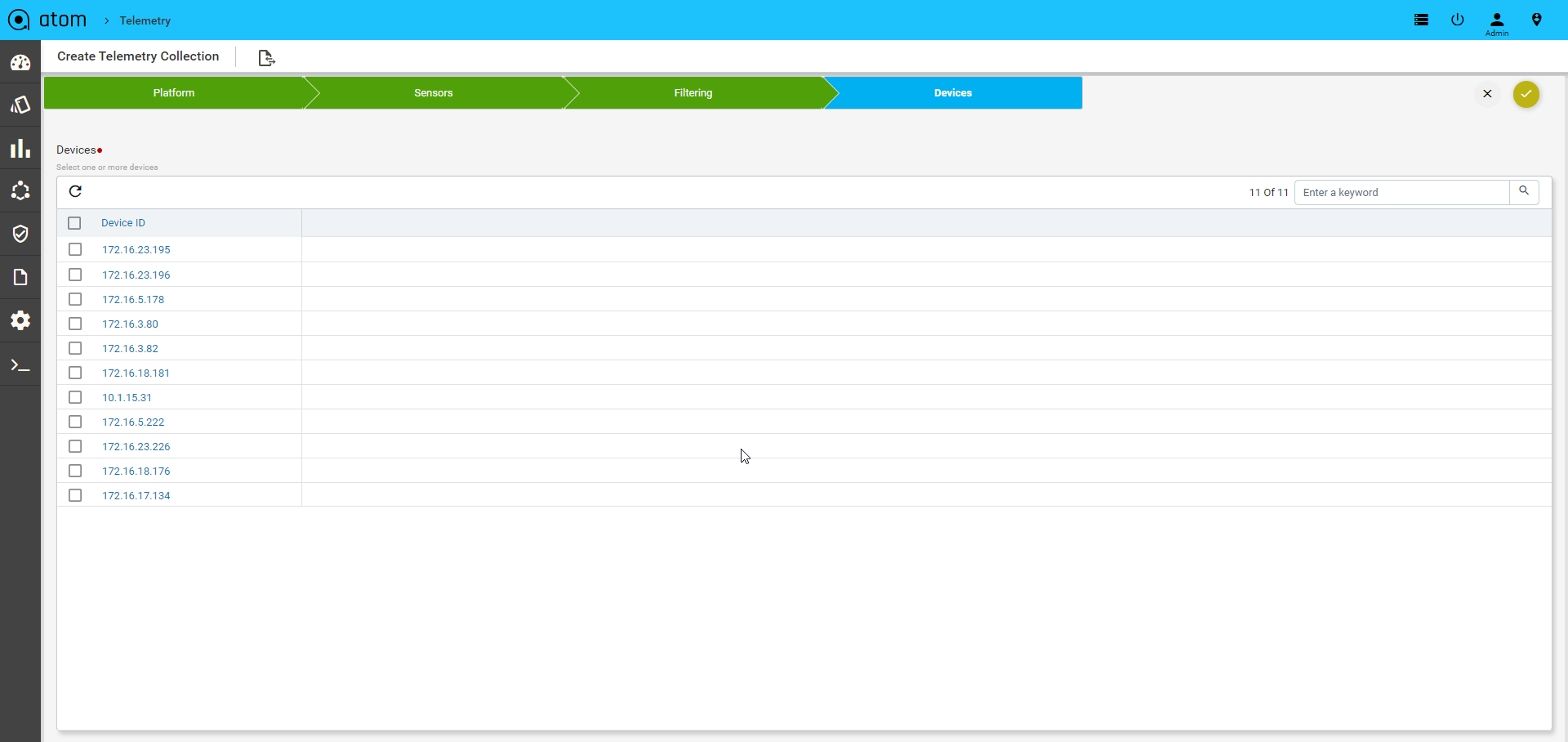

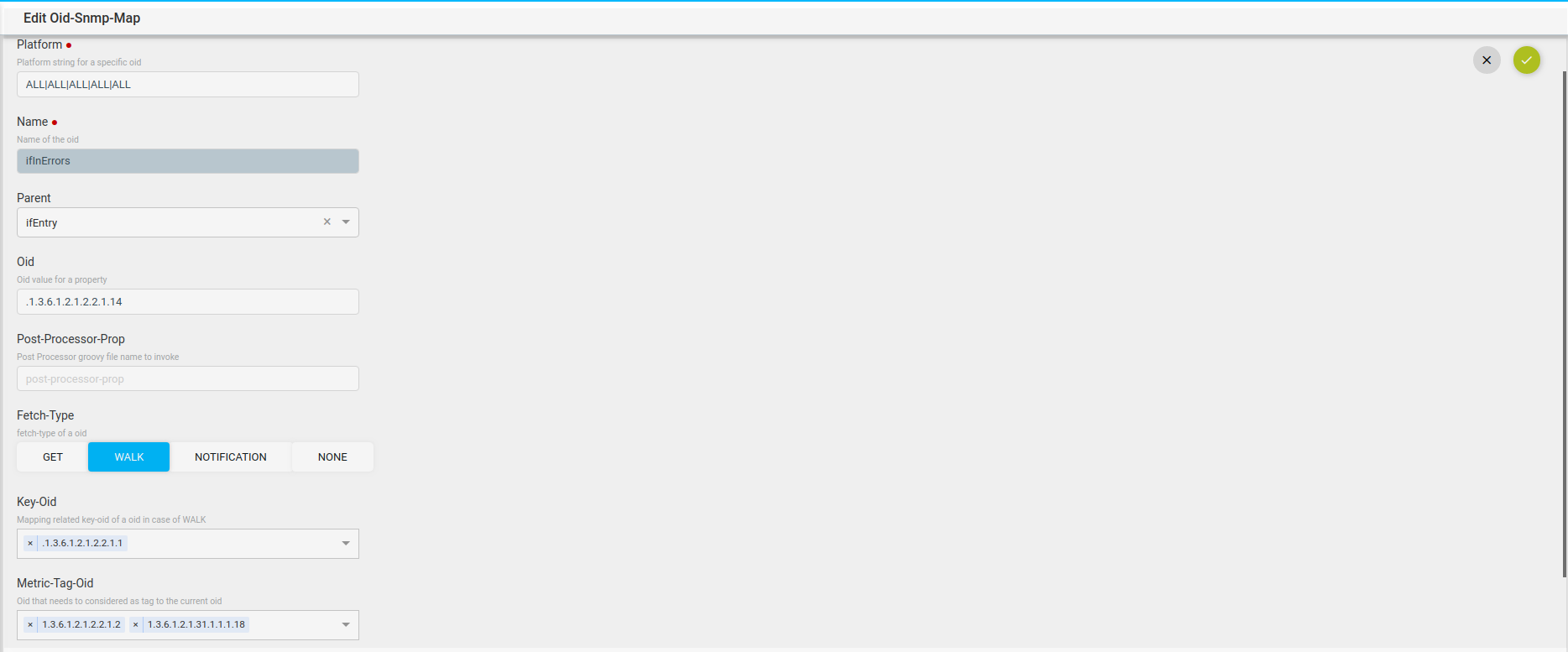

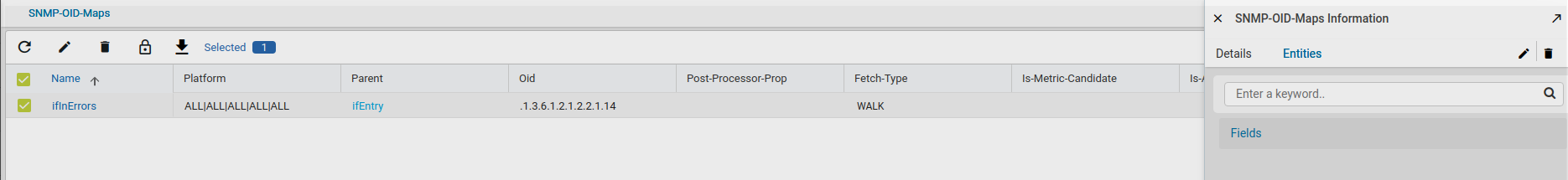

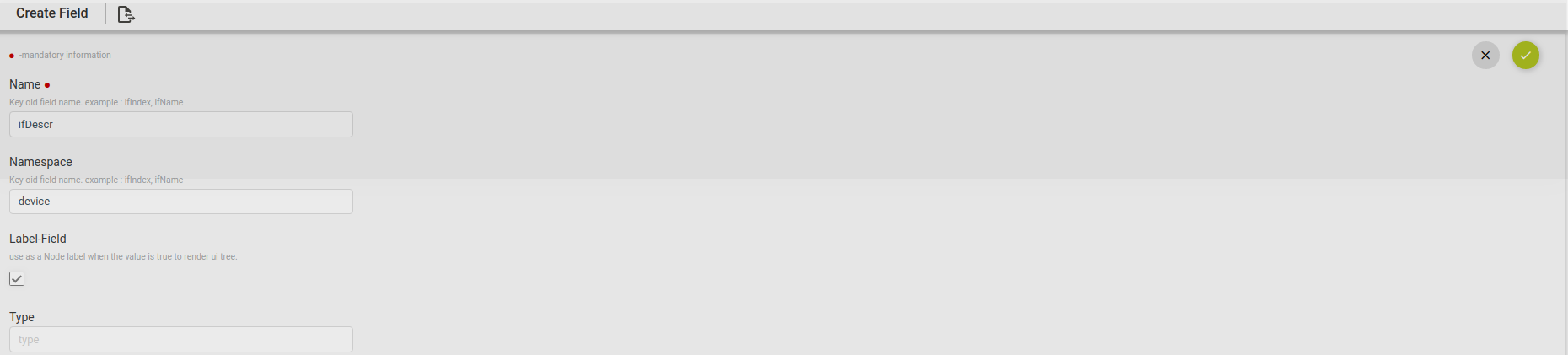

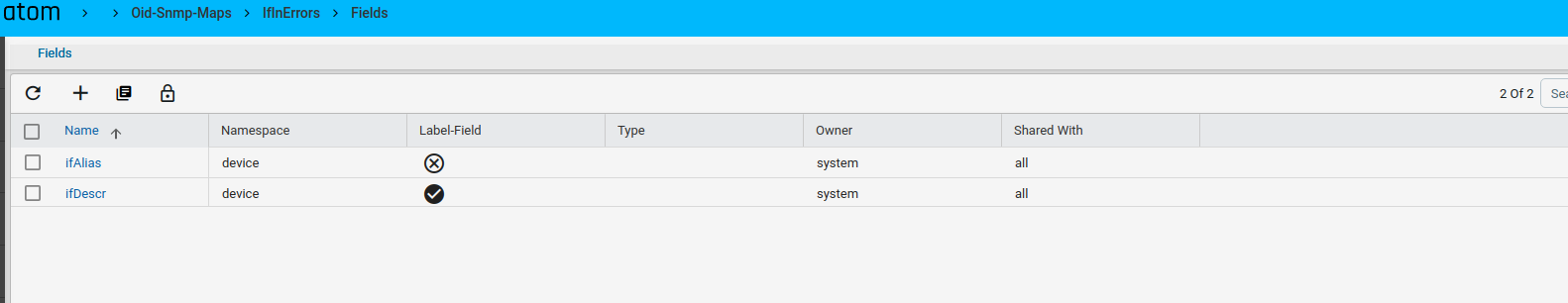

Procedure to Create Native Telemetry Collection

- Create a new Telemetry Collection

- Provide the name of collection

- Choose Junos as platform

- Select the transport as UDP which we will auto select the encoding as compact GPB with Dial Out Mode

- To configure resource filtering on device, select the filtering tab and choose the sensor name in dropdown & add regex pattern to configure

- Select ALL option, if we have same resource filter across sensors

- Once the telemetry collection is provisioned, users can’t edit the entry.

- Subscription is not required in this case.

Closed Loop Automation

Anuta ATOM allows administrators to define a baseline behavior of their network and remediation actions to be initiated on any violation of this behavior. ATOM collects a large amount of network data from multi-vendor infrastructure using Google Protobufs and stores it in a time series database. ATOM correlation engine constantly monitors and compares the collected data with the baseline behavior to detect any deviations. On any violation, the pre-defined remediation action is triggered thereby always maintaining network consistency.

The solution simplifies troubleshooting by providing the context of the entire network. Customers can define KPI metrics and corrective actions to automate SLA compliance.

Multi-Vendor support

Anuta ATOM has the most comprehensive vendor support. It supports thousands of devices spanning across 45+ vendors and automates all the use-cases including Data Center Automation, InterCloud,

Micro-Segmentation, Security as a Service, LBaaS, Campus/Access, Branch/WAN, IP/MPLS Edge, Virtual CPE, and NFV.

General Concepts

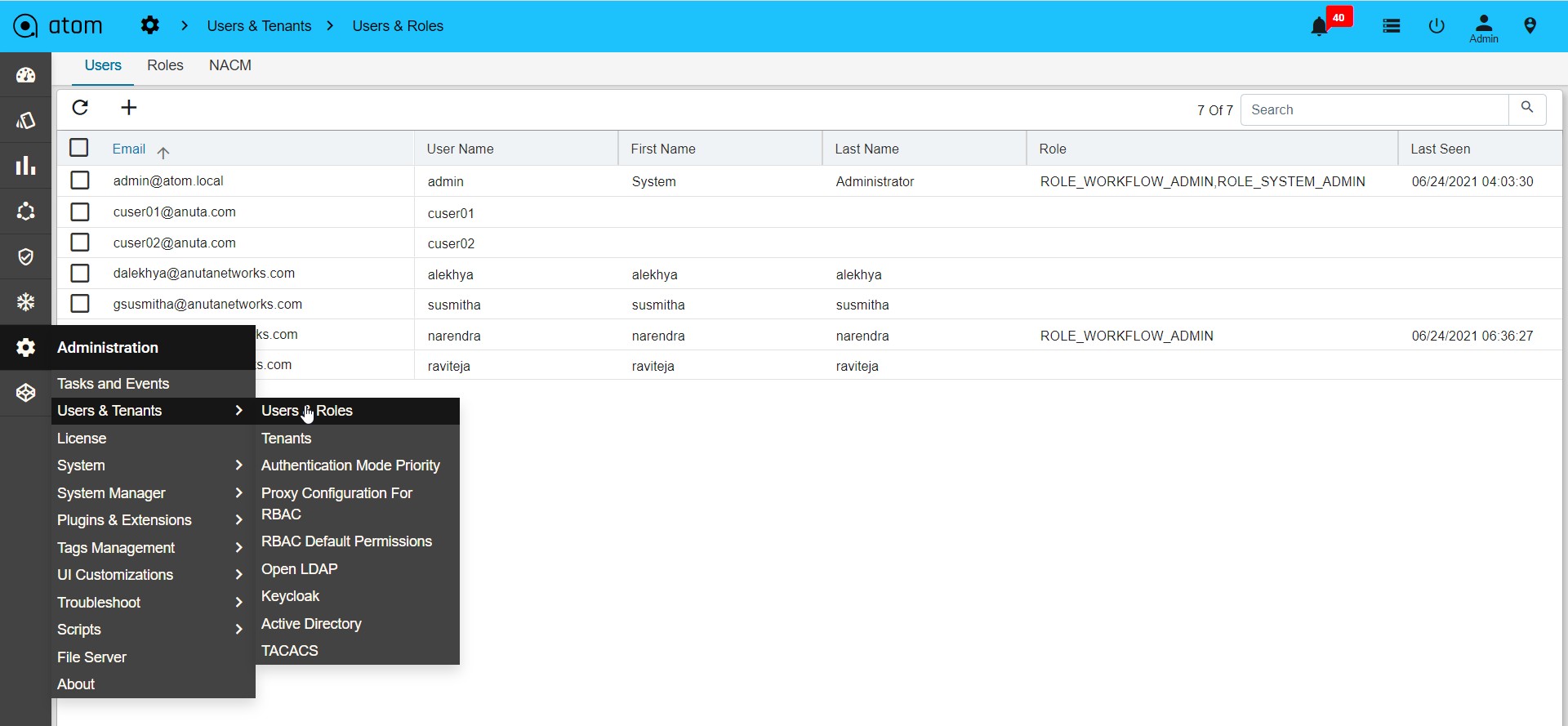

RBAC

Various ATOM Features and Levels of Access (Read, Create, Delete) are customized through RBAC. This is described in further Detail in User Management.

In case you are not able to access certain Feature or Policy / Data please contact your System Administrator.

Model Driven User Interface

Various ATOM Features are Model Driven or Driven by the Dynamic Pluggable Artifacts. Some of the following fall into this category:

- Device Packages

- Service Packages

- Workflow

- Reports

In case you do not find certain functionality expected in ATOM, please contact support@anutanetworks.com or your System Administrator.

Multi Tenancy

ATOM supports Multi-Tenancy across organizations and Sub-Tenancy within an Organization. This allows to vertically slice Any Data / Policies as per the business requirements of the Customer. Multi-Tenancy including Sharing, Wild Card usage to share across multiple

Sub-tenants, Users within a Sub-Tenant and more details are discussed in ATOM Multi Tenancy & Sub-Tenancy

In case you are not able to access certain Feature or Policy / Data please contact your System Administrator.

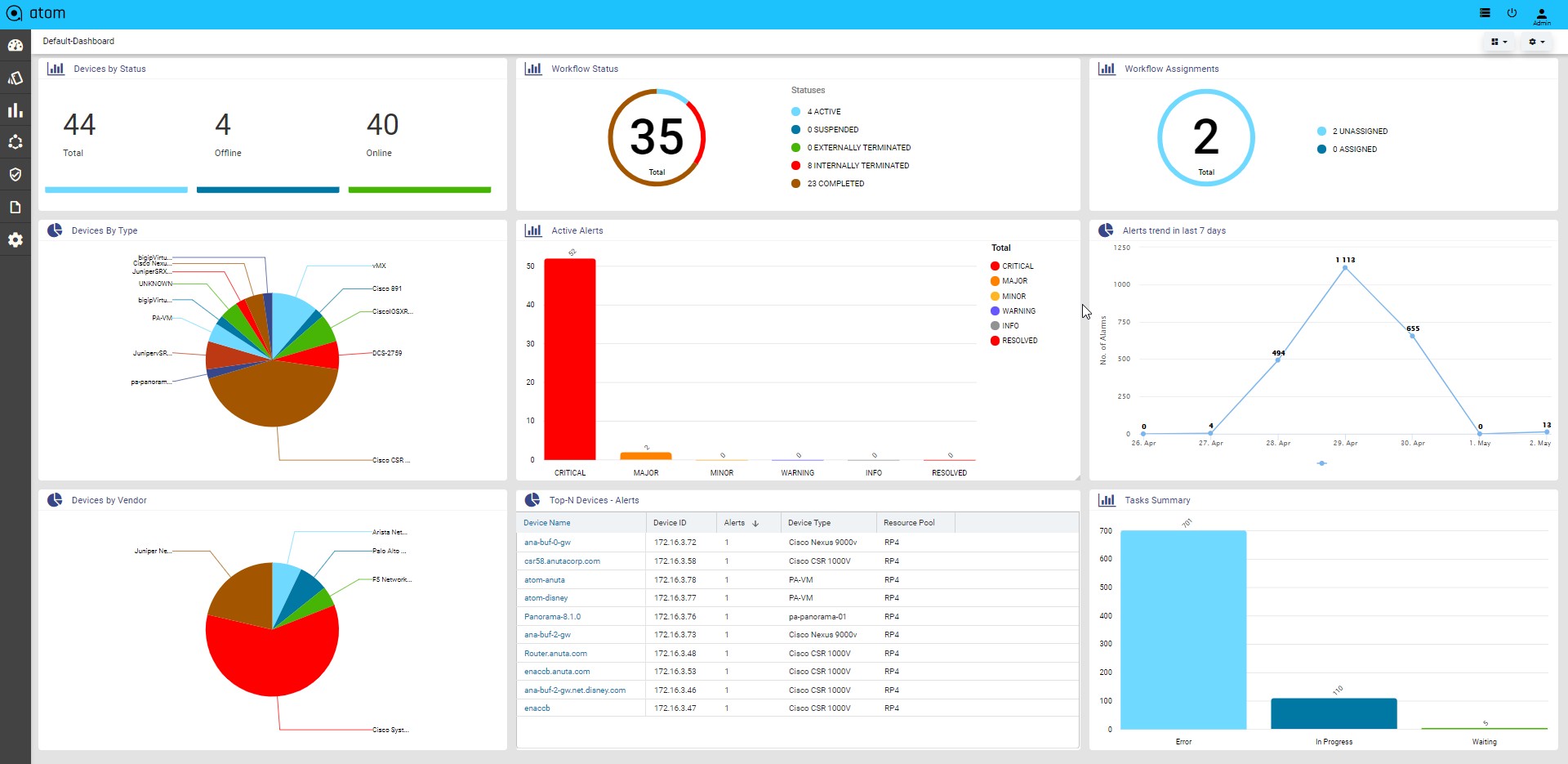

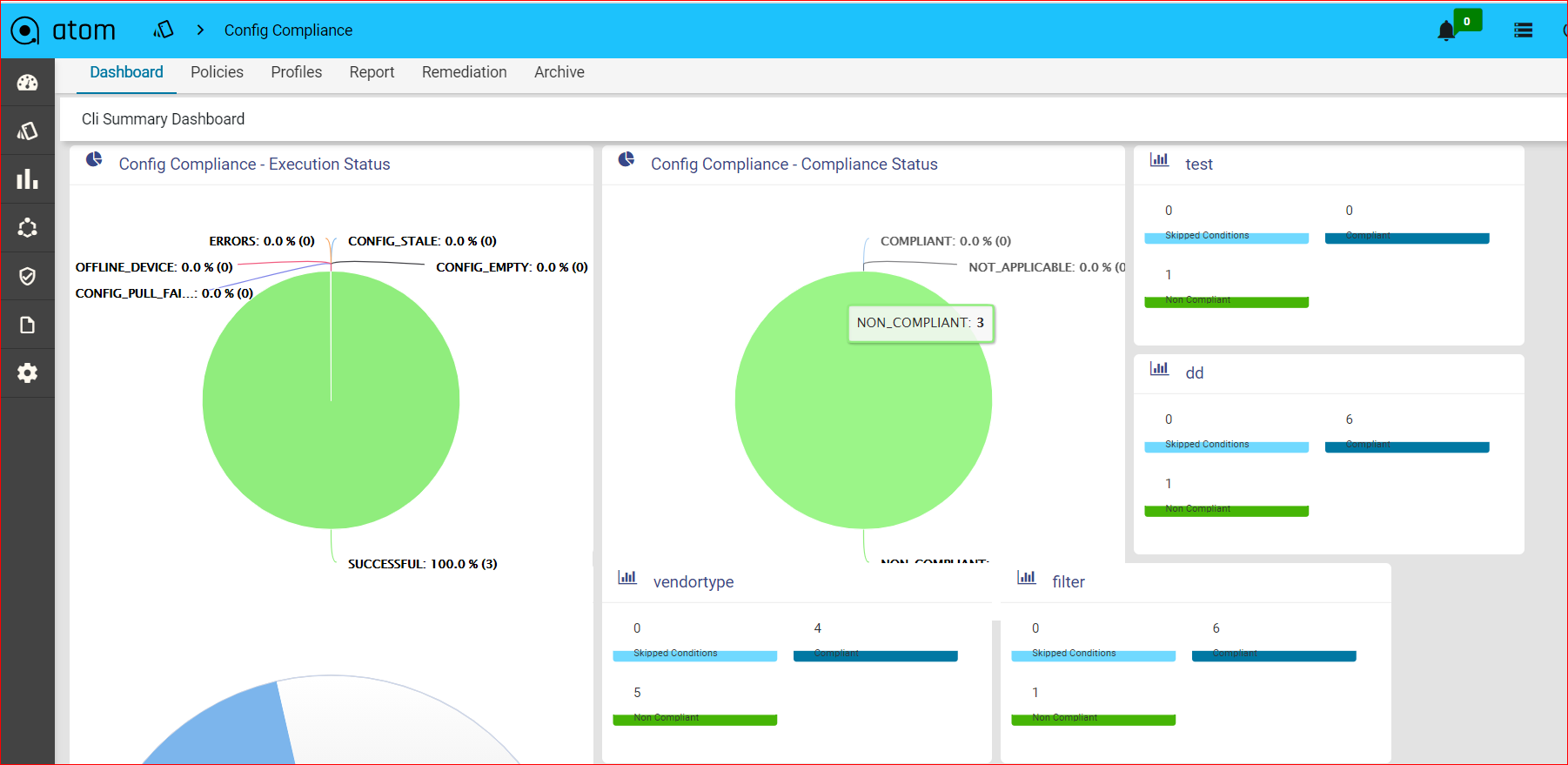

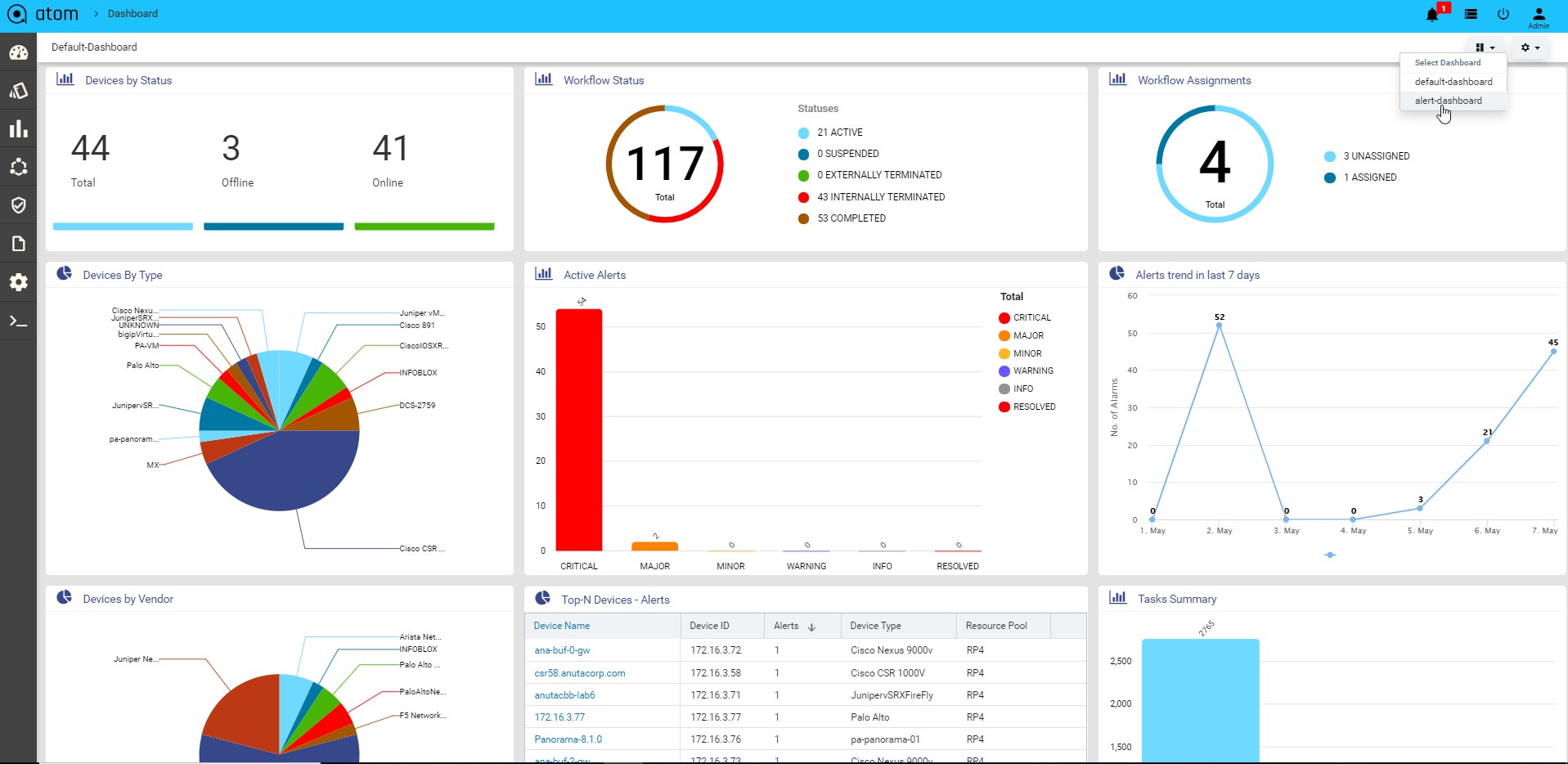

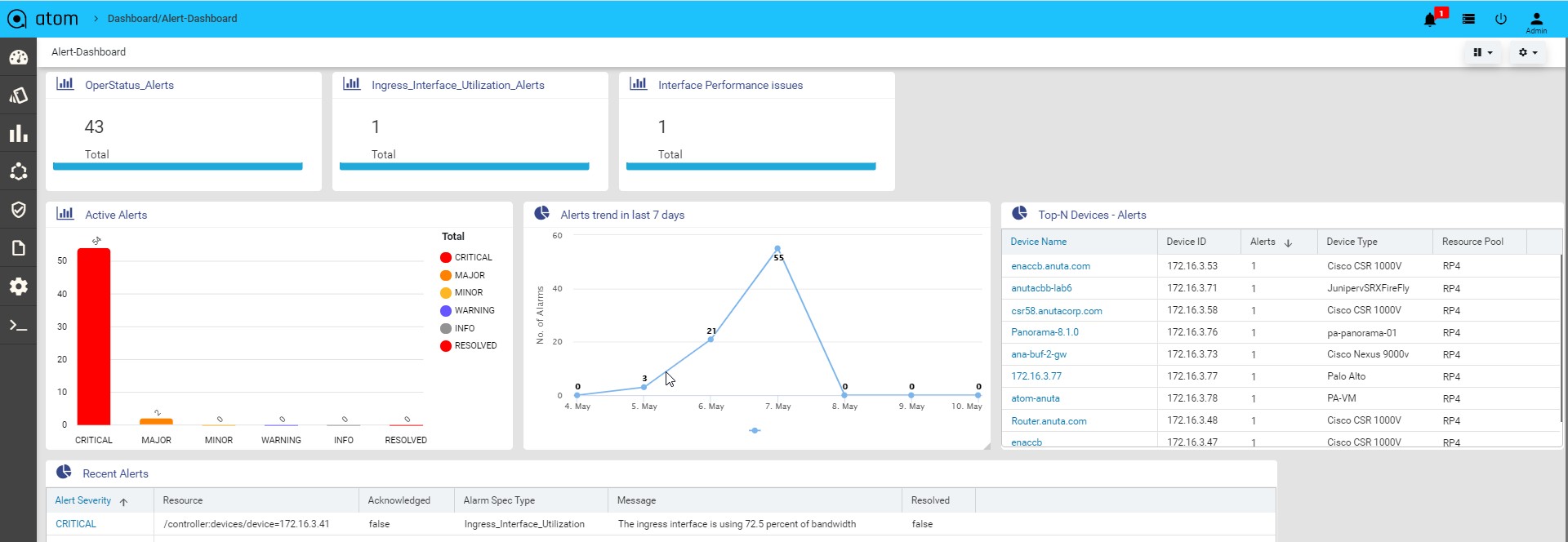

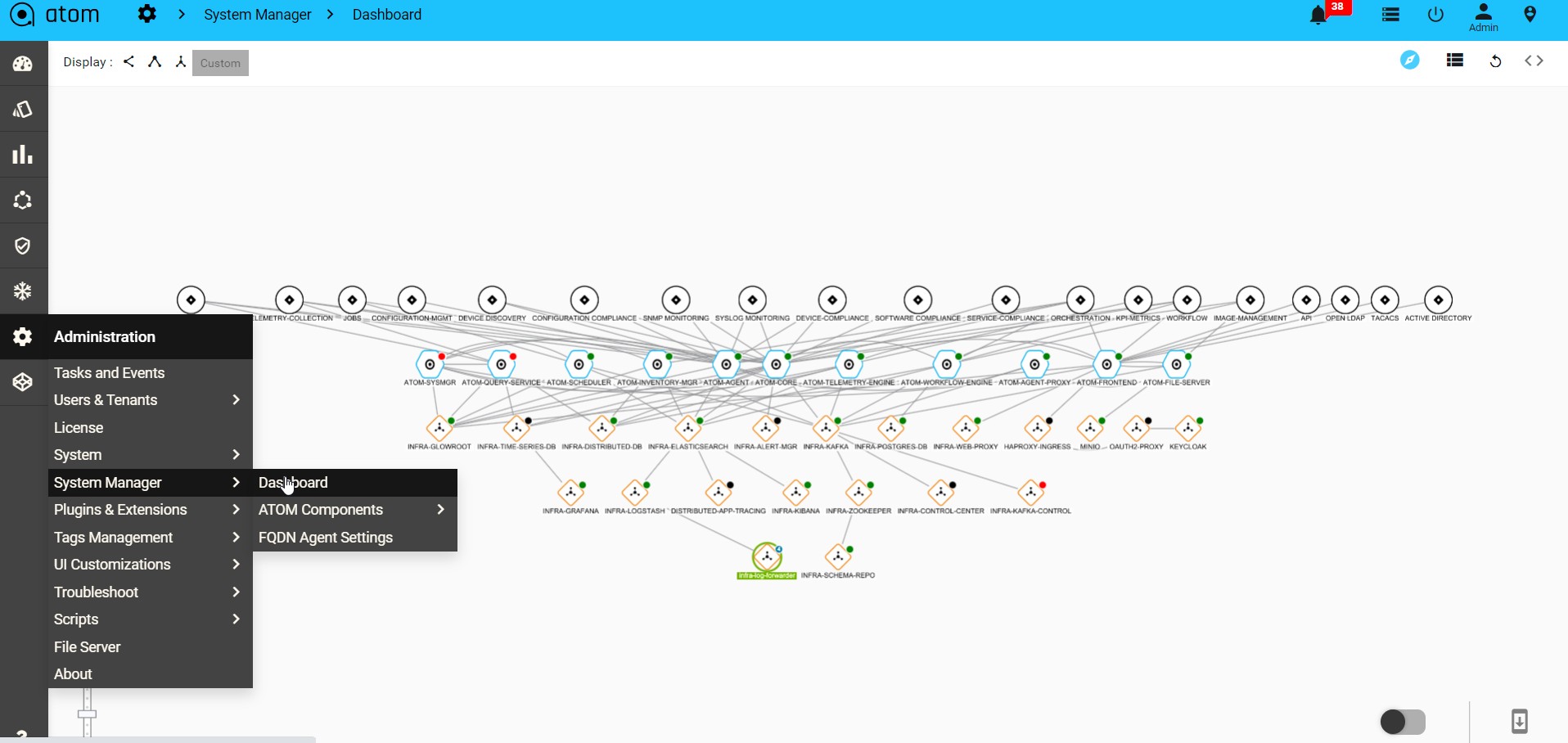

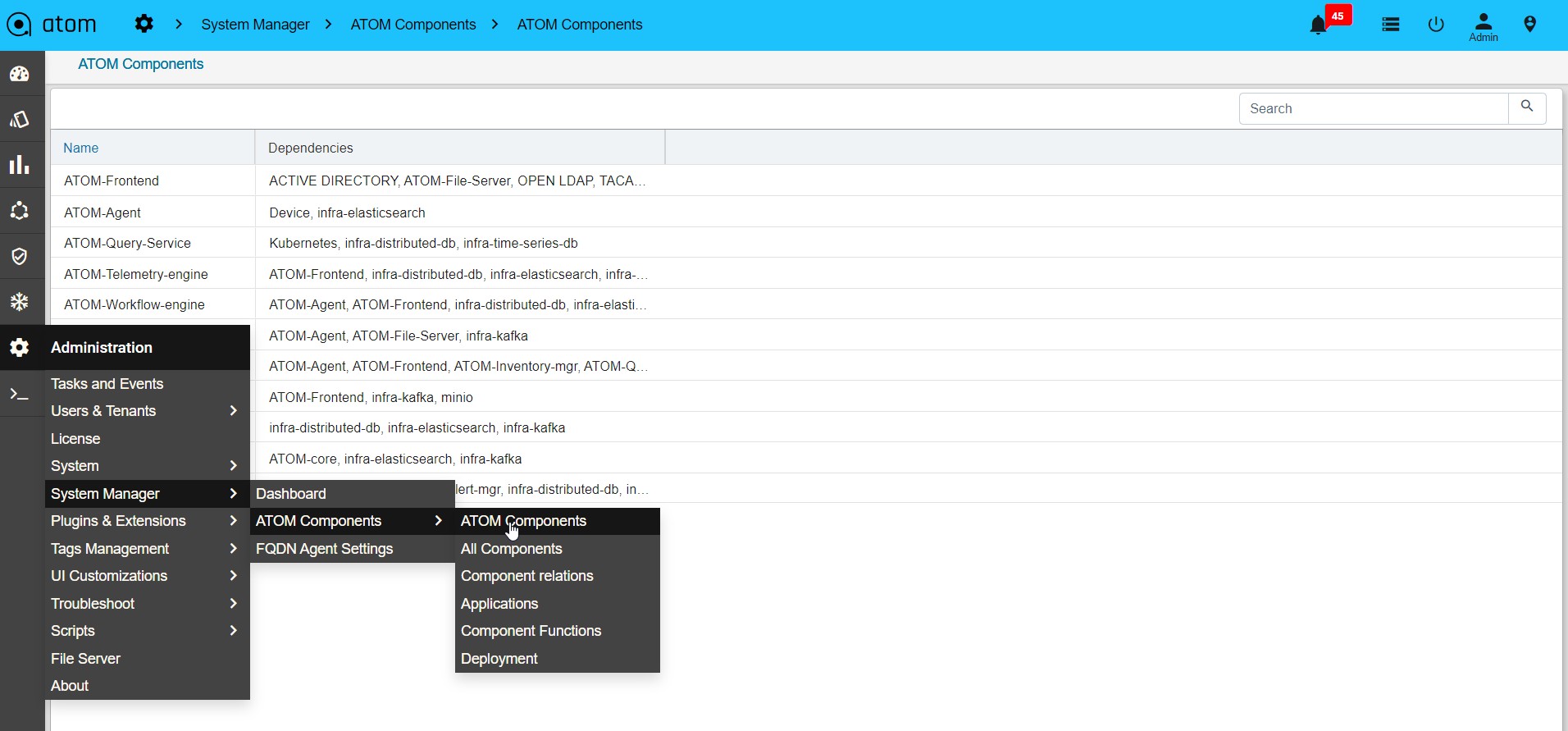

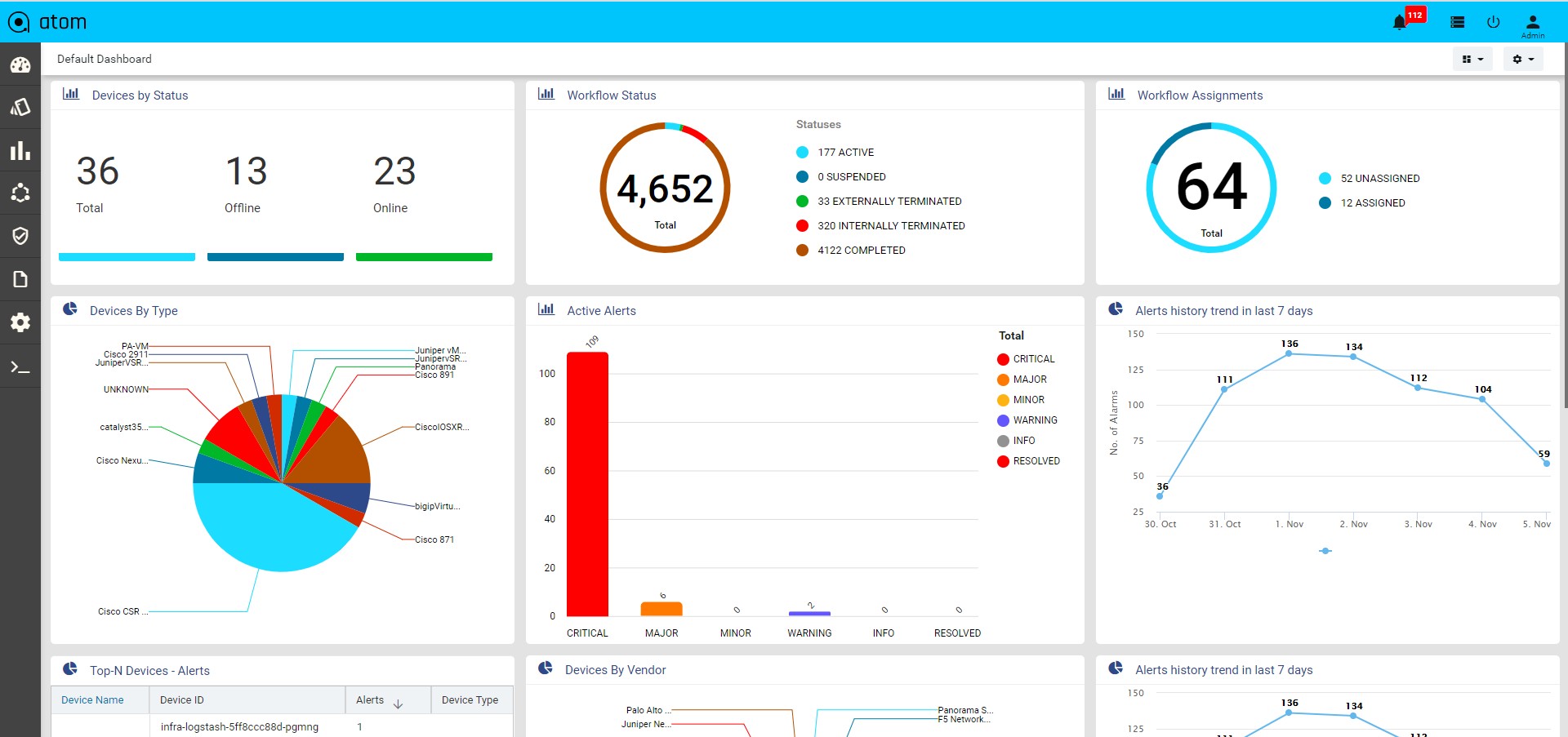

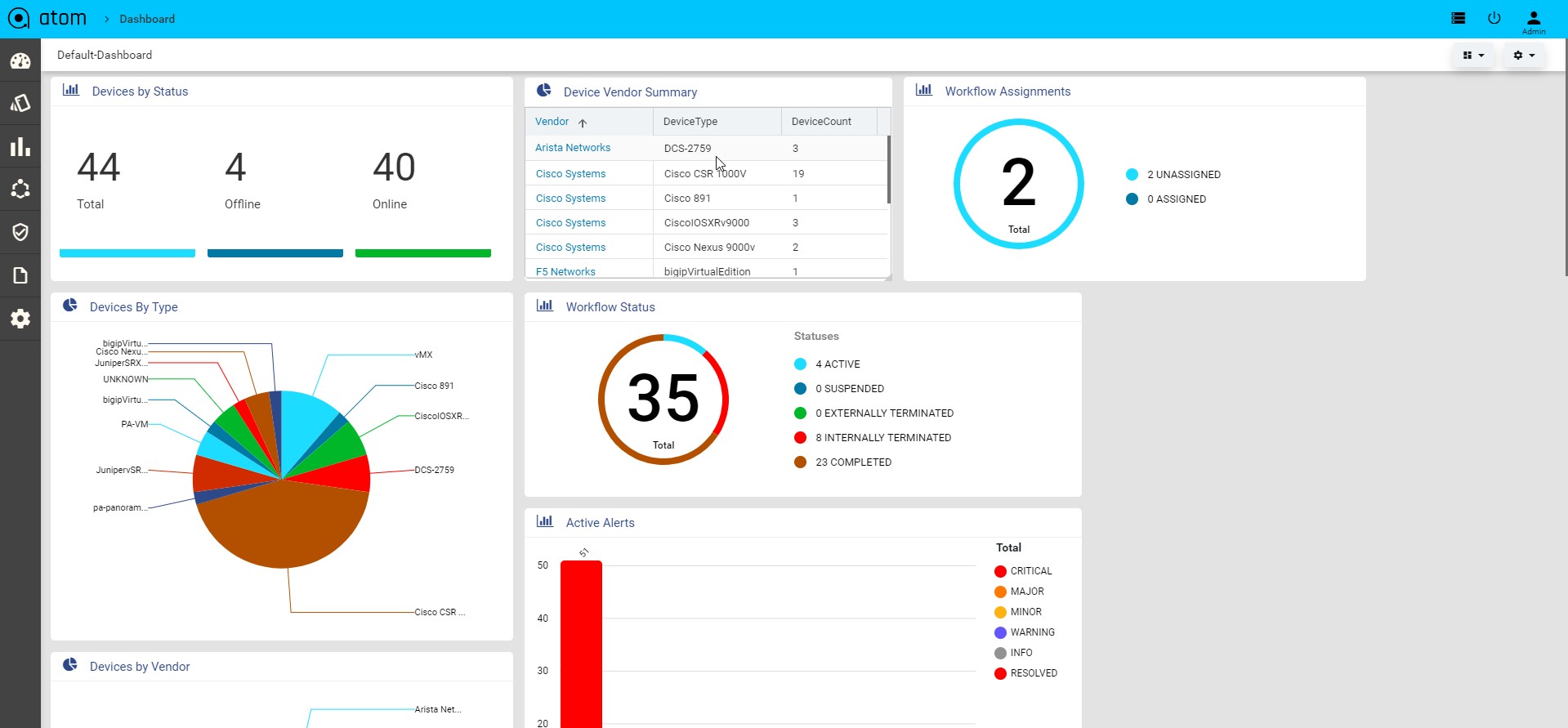

Viewing the Dashboard

Dashboard provides a simple, integrated, comprehensive view of the data associated with the resources managed by ATOM. Information about the devices, services, service approvals are available “at-a-glance” for the administrator.

Starting from the 7.x release, Dashboard, the landing page of ATOM, is organized into dashlets. A dashlet is an individual component that can be added to or removed from a dashboard. Each dashlet is a reusable unit of functionality, providing a summary of the feature or the function supported by ATOM and is rendered as a result of the custom queries written in DSL.

You can customize the look of the Dashboard, by adding the dashlets of your choice, and dragging and dropping (the extreme right corner of the dashlet) to the desired location on the dashboard.

Each dashlet contains the summary or the overview of the feature or the functionality supported by ATOM.

For example, the dashlet “Device” displays the summary of devices managed by ATOM. Some of the statistics that can be of interest in this dashlet could be as follows:

- Total number of devices

- Number of online devices

- Number of offline devices

These statistics can be gathered by ATOM and displayed in the corresponding dashlet depending on the DSL query written for each of them. You can save the layout containing the dashlets of your choice and set in a particular order.

Resource Management

ATOM Resource management involves device credential management, device onboarding through discovery or manual import, configuration archival, topology discovery & visualization, resource pools (device grouping), IP Address Management etc.,

Following table provides a quick summary of the activities that can be Resource Management activities.

| Credential Sets, Credential Maps and Devices | Resource Manager > Devices |

| Device Discovery | Resource Manager > Devices > Discovery |

| Visualize Topology | Resource Manager > Network > Topology |

| Create & Visualize Logical & Hierarchical Network Device Groups/Resource Pools | Resource Manager > Network > Resource Pools |

| Create physical locations | Resource Manager > Locations |

Device Management

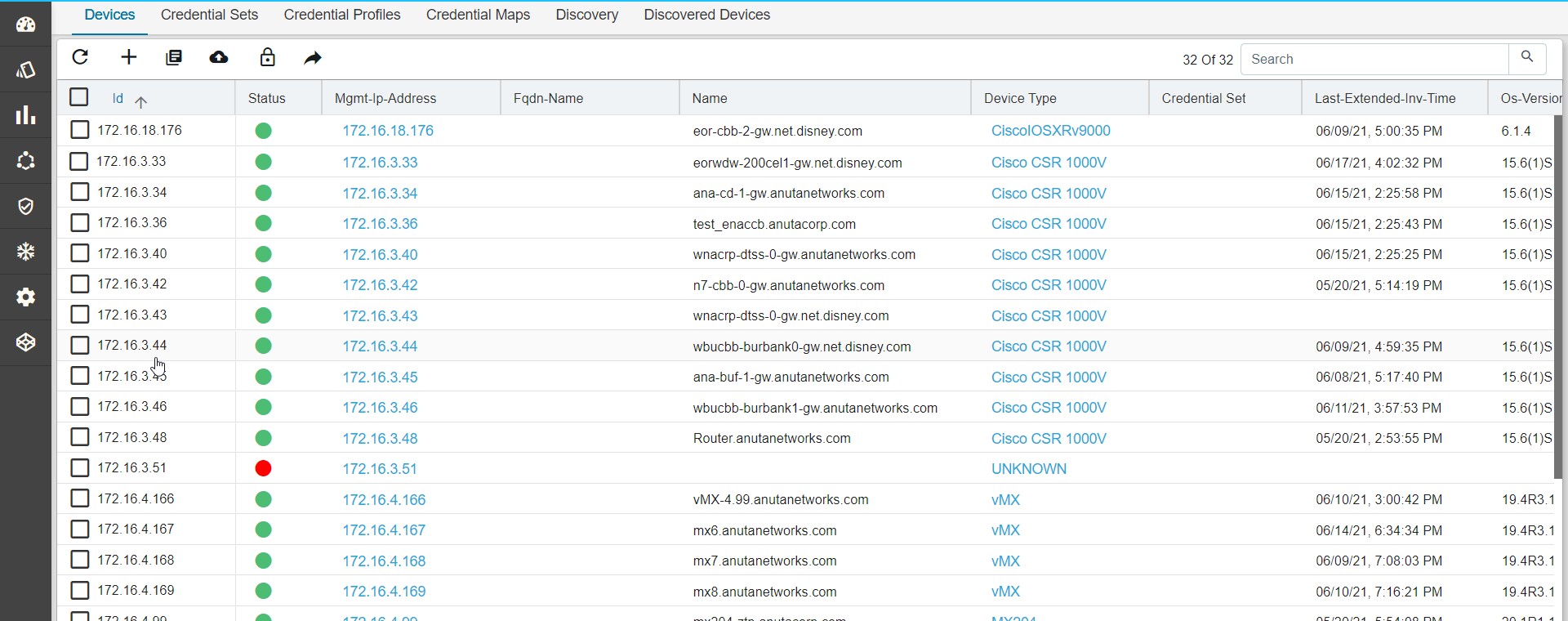

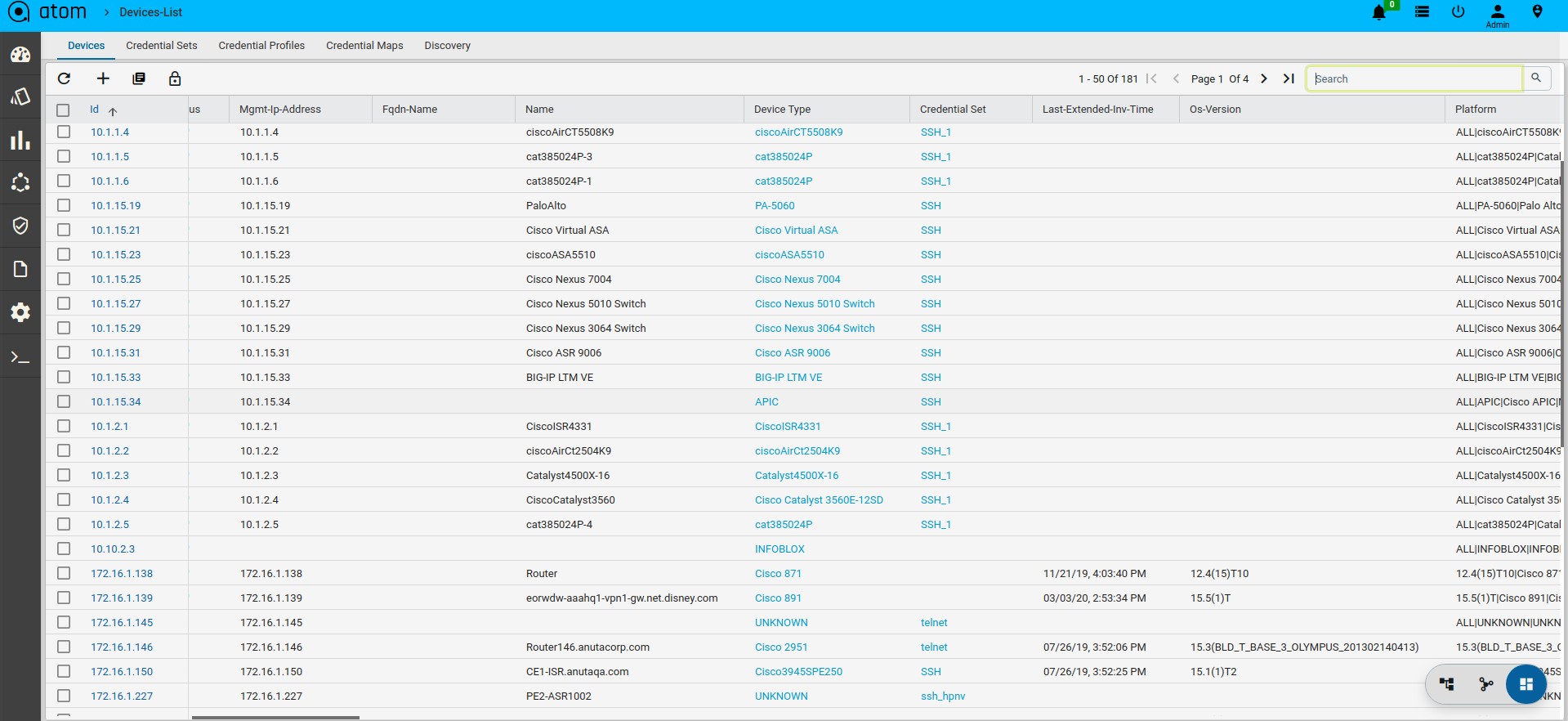

Device Management involves onboarding of devices and working with Device inventory, Configuration, Monitoring & Alerts. Devices can be added Manually, through an API or Automated Discovery using CDP/LLDP.

All Device Mgmt activities can be performed from Device Explorer & Grid View. Following are the three main views for a Device.

- Grid View – Grid layout of all Devices & and action on a device(s)

- Tree View – Device Group based tree view of devices that provides a much easier way to toggle between devices and inspect various device characteristics.

- Topology View – Devices can be visualized in a Topology view

- Device Detail View – On Clicking a Device from Tree View or Grid View a detailed view of the device is presented. This is same as the view when a device is selected from the Tree view

Grid, Tree view & Topology Views can be toggled using the view selector button available at the bottom right hand side corner of the page.

Credential Management

ATOM provides multiple functions like Provisioning, Inventory Collection etc. Function like Provisioning can be various ways – Payload (CLI vs YANG or Other) over a Given Transport (SSH, Telnet, HTTP(S), etc.,). For example, based on the use case ATOM Workflow Engine can use various Payload + Transport mechanisms to perform Provisioning actions. ATOM helps accomplish this using:

- Credential Sets – Define the Transport/Connectivity & Authentication to the devices

- Credential Profile – Maps Credential Sets to various functions in ATOM This addresses various scenarios, some as follows:

- Reuse of same SNMP Credentials across the entire Network, while retaining Device/Vendor Specific Transport for Provisioning.

- Inventory Collection Via SNMP for a Given Vendor/Device vs Telemetry for another

Credential Sets

Following section provides guidance on how to configure device credentials in ATOM.

- Navigate to Resource Manager > Devices > Grid View(Icon) > Credential Sets

- Create/Edit a Credential Set

- Name: Enter a string that will be used to identify the Credential Set

- Description: Enter a description w.r.t the created Credential Set(Optional)

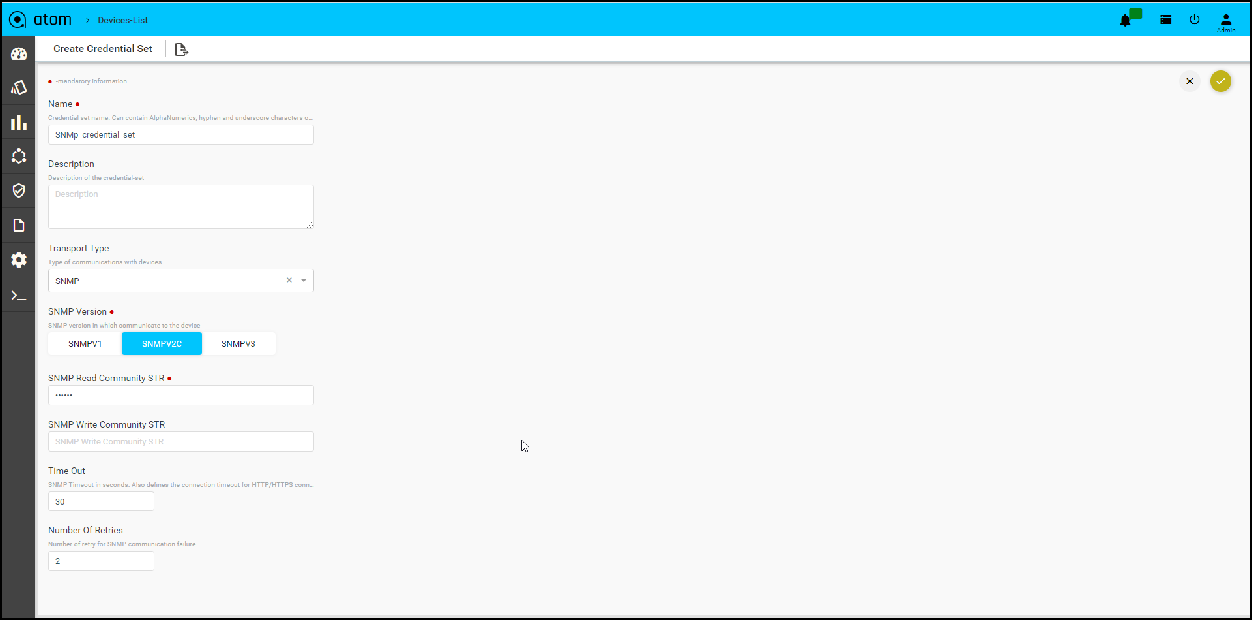

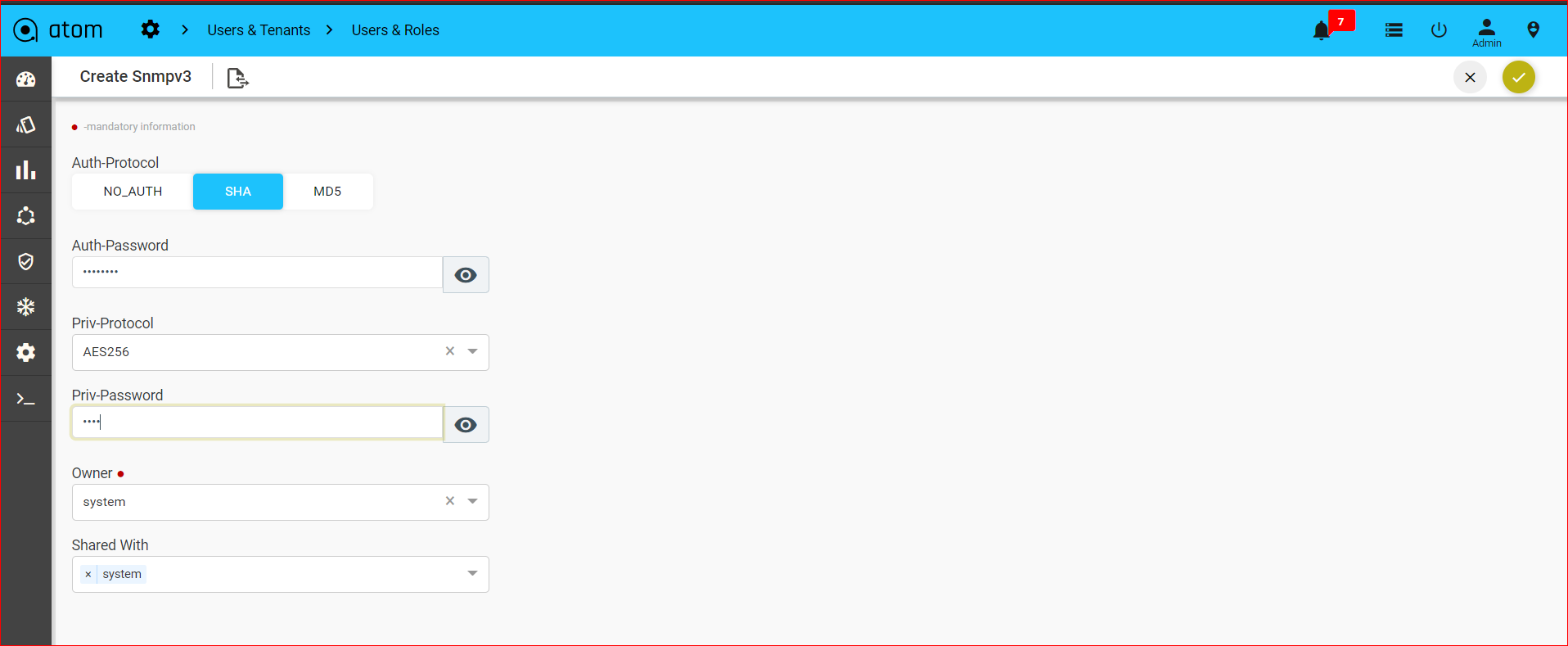

SNMP Transport credentials:

Select Transport type as “SNMP” can view below option

-

- SNMP version: Select the version of SNMP that should be used for device communication

- SNMP Read Community String: Enter the string that is used by the device to authenticate ATOM before it can retrieve the configuration from the device

- SNMP Write Community String: Enter the string that is used by the device to authenticate ATOM while writing configuration to the device

- Timeout: Enter the time taken for the response from the device in seconds.

- Number Of Retries: Enter the number of times the SNMP request is sent when a timeout occurs.

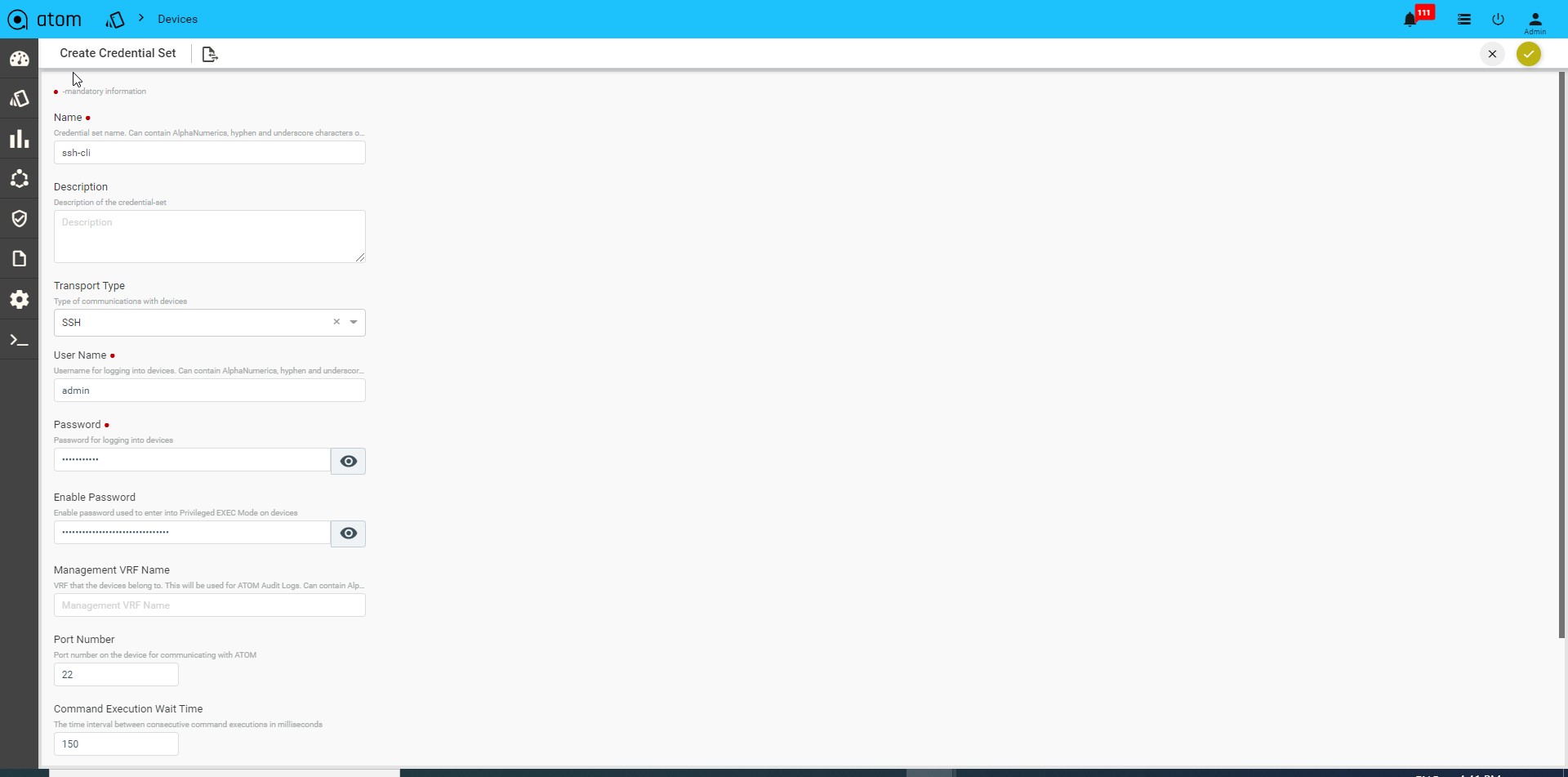

CLI Device(SSH/TELNET) Transport Credentials: Select Transport type as “SSH/TELNET”

-

- User name: Enter a string that should be used to login to the device

- Password: Enter a string that used be a password for logging into the device

- Enable Password: Enter a password to enter into the privilege exec mode of the device.

- Mgmt-VRF-Name: Enter the name of the management VRF configured on the device. This will be used by ATOM to retrieve the audit logs from the device.

-

- Port Number: Enter the number of the port on the device that should be used for communication with ATOM

- Command Execution Wait Time: Enter the number (in millisecs) that ATOM should wait for the consecutive commands to be executed on the device. Enter any number between 10 to 30000.

- CLI Configure Command TimeOut: Enter the time (in seconds) that ATOM should wait for the command line prompt on the device to appear. Enter any between 1 to 1200.

- Max Connections: Enter the number of max connections that can be opened for a given device at any time.

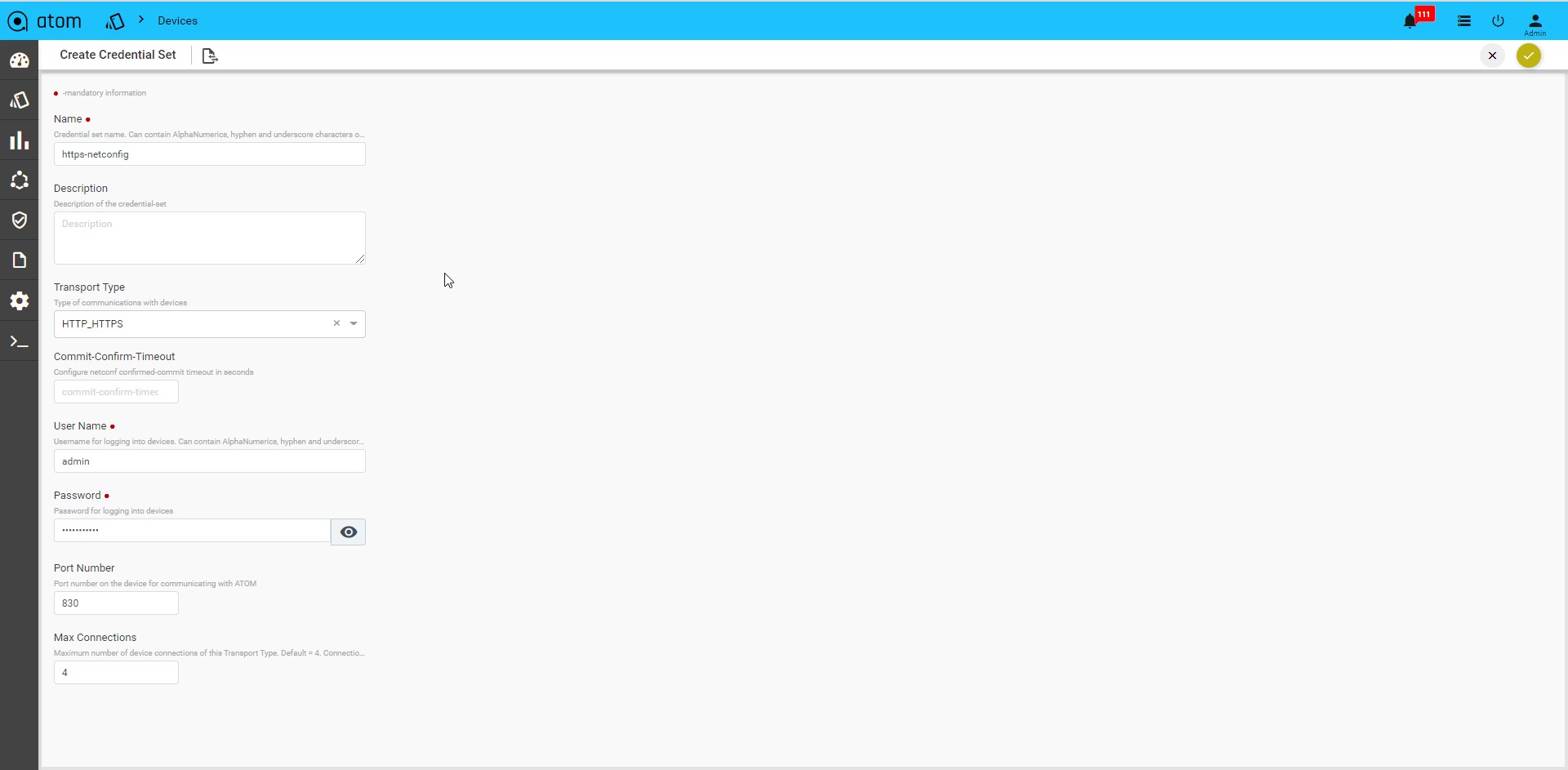

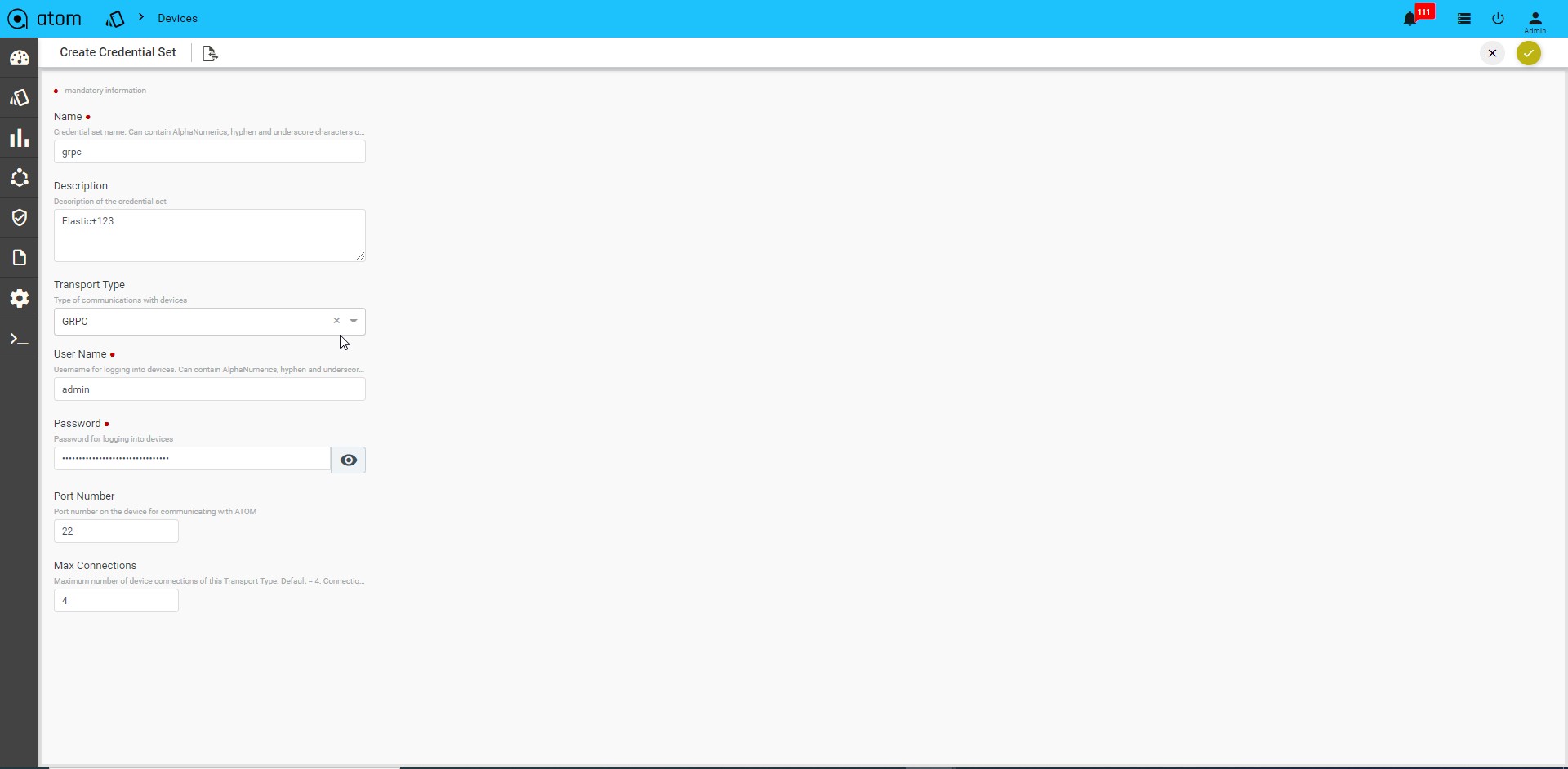

API Device Transport Credential:

Select Transport type as “HTTP_HTTPS / GRPC”

-

- User name: Enter a string that should be used to login to the device

- Password: Enter a string that used be a password for logging into the device

- Port Number: Enter the number of the port on the device that should be used for communication with ATOM.

- Max Connections: Enter the number of max connections that can be opened for a given device at any time.

GRPC Transport credential:

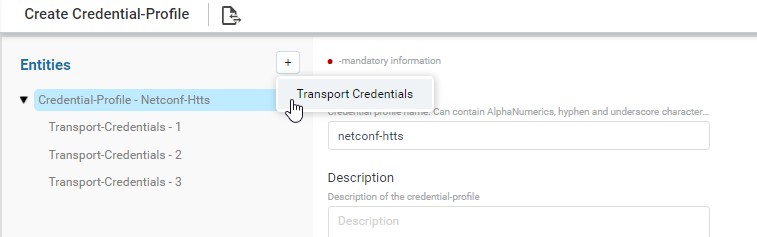

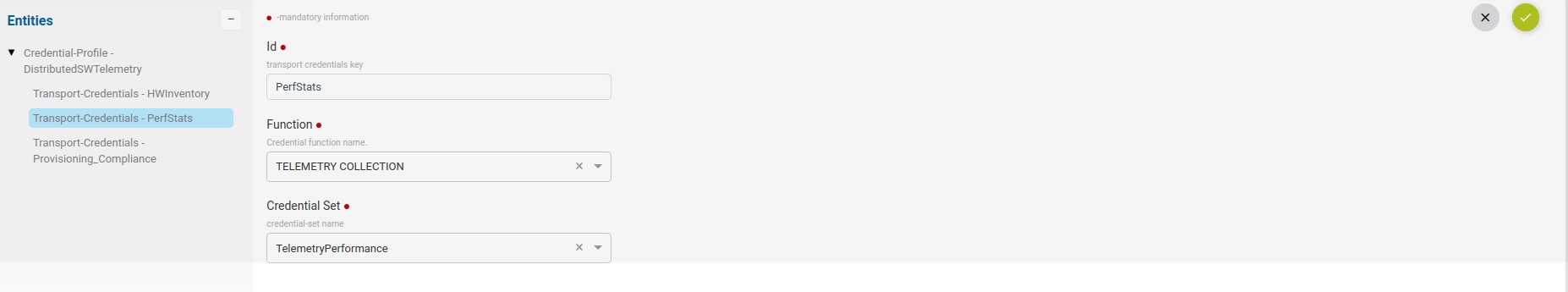

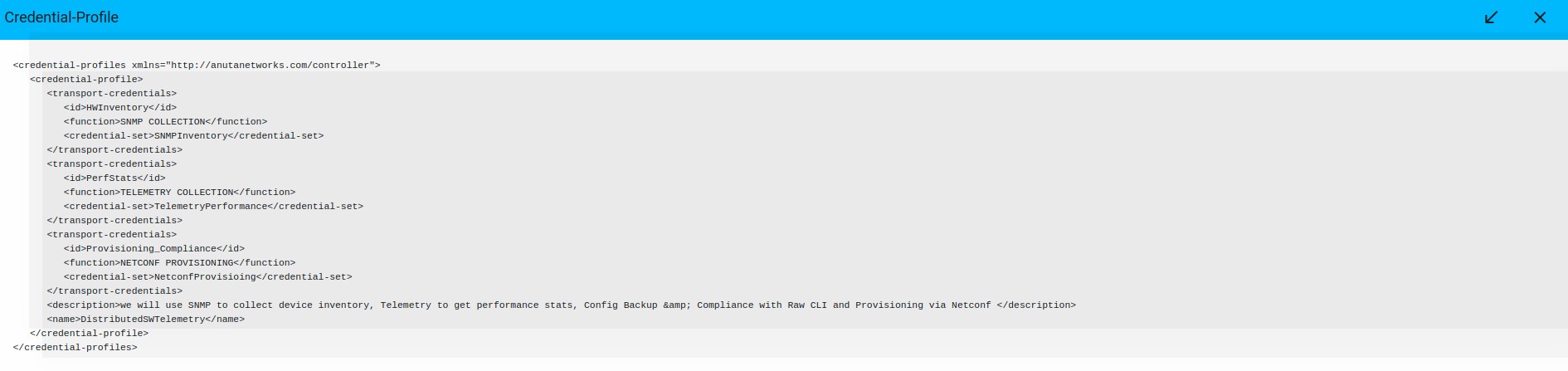

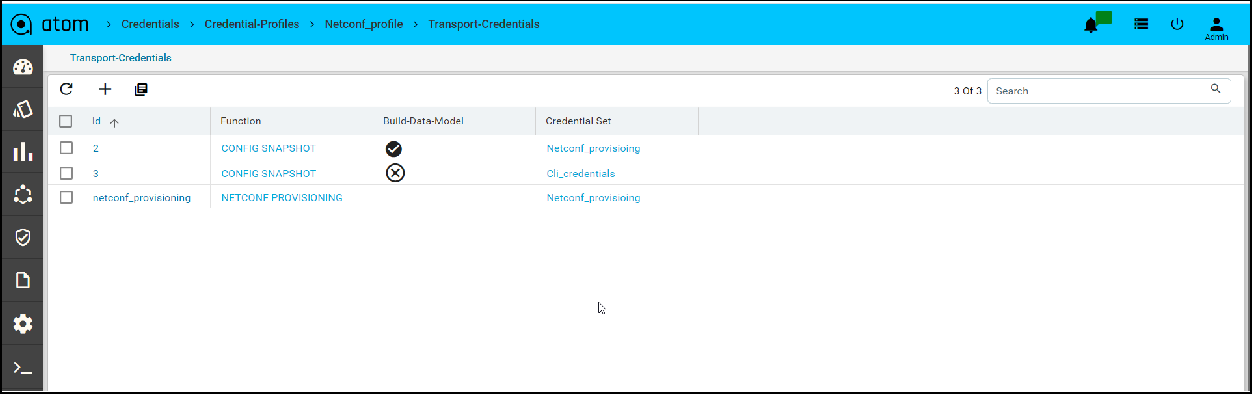

Credential Profile

By default, ATOM has the following out of the box functions:

- Config Provisioning

- SNMP

- Telemetry

- HTTP provisioning

- NETCONF provisioning

Navigate to Resource Manager > Devices > Grid View(Icon) > Credential Profile

- Here, provide the name of credential profile, description and add the transport credentials by choosing the appropriate functions.

- Below is the snapshot to attach the credential set with function.

Credential profile payload in XML:

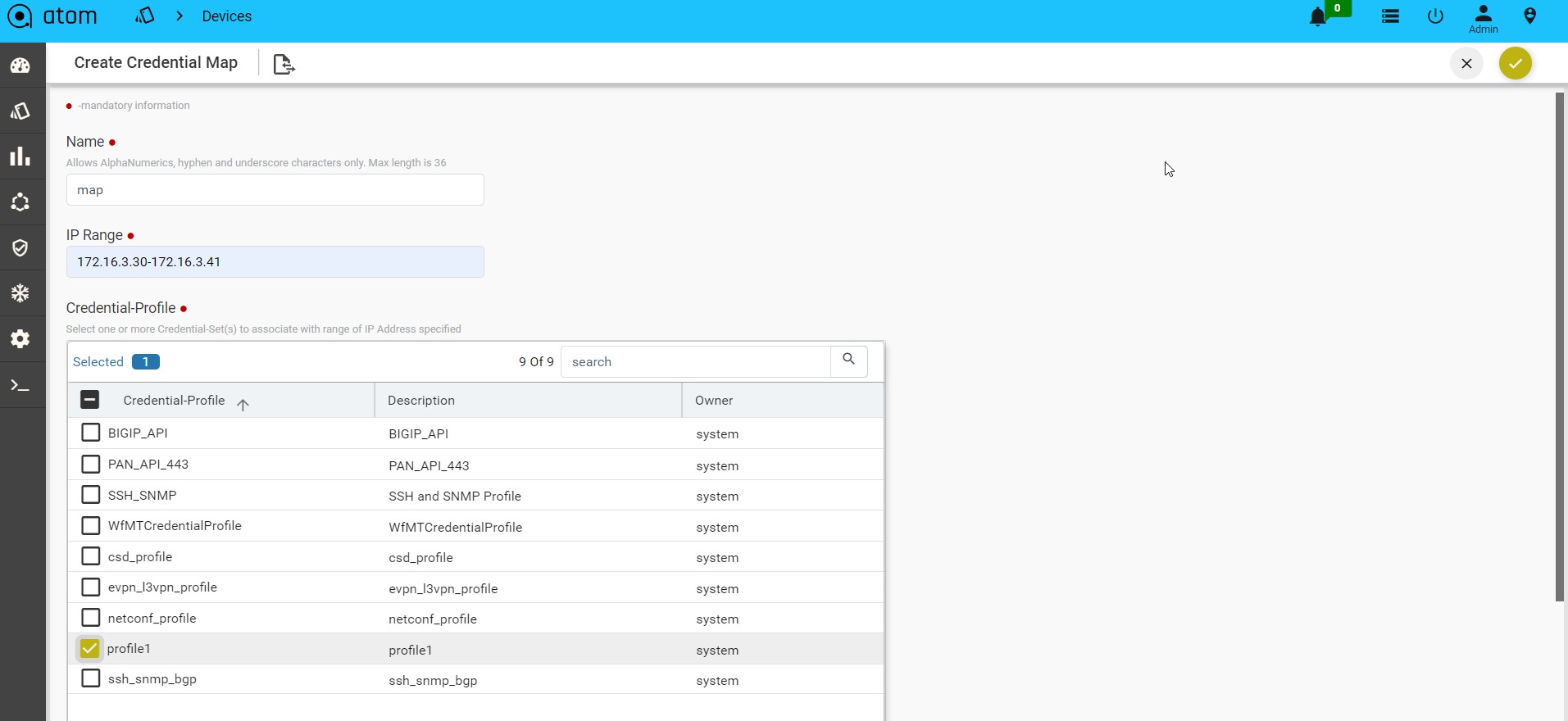

Credential Maps

Credential Map allows users to map multiple Credentials Profiles to an IP-Address range. This addresses the following use cases:

- Device Discovery – When ATOM needs to Perform Discovery using SNMP Sweep or CDP/LLDP. Since devices are yet to be onboarded, explicit assignment is not available.

- Credential profile is mandatory when onboarding a device.

When ATOM needs credentials for a device and explicit Device to Credential Profile is not available, ATOM will cycle through the IP Address range and use the first credential profile that works. The successful Credential Profile is mapped to the device. This process is repeated

whenever ATOM is unsuccessful communicating with the device using the current assigned credential profile.

To create a Credential Map:

- Navigate to Resource Manager > Devices > Grid View(Icon) > Credential Maps

- Create/Edit Create Credential Map:

- Name: Enter a name for the Credential Map

- Start-IP-address: Enter an IP address in the range from which ATOM starts the sweep for locating the devices.

- End-IP-address: Enter an IP address in the range beyond which ATOM will not continue the sweep for locating the devices.

-

- Credential Profile: Select one or more Credential Profiles shown.

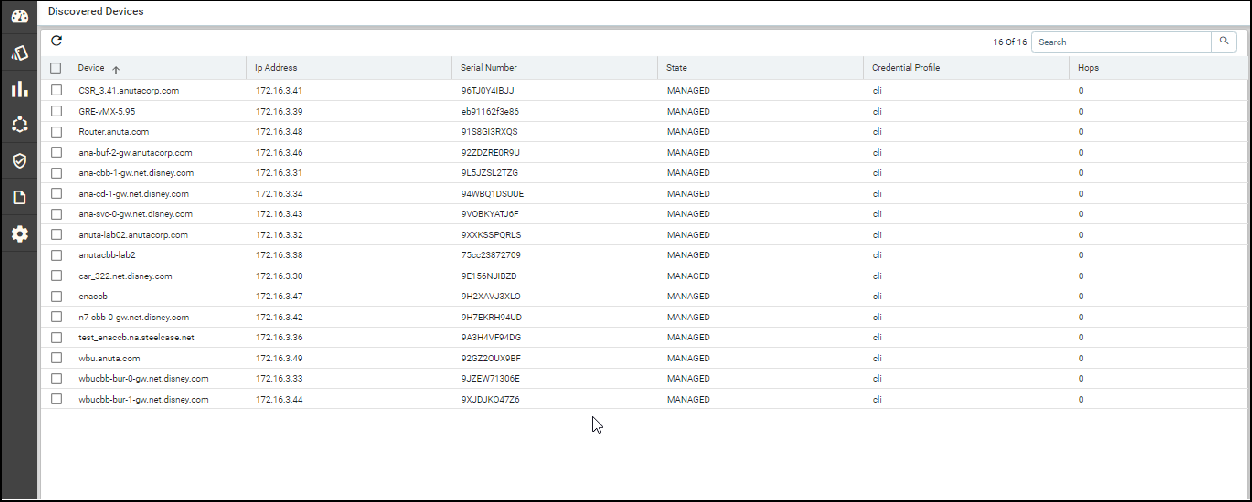

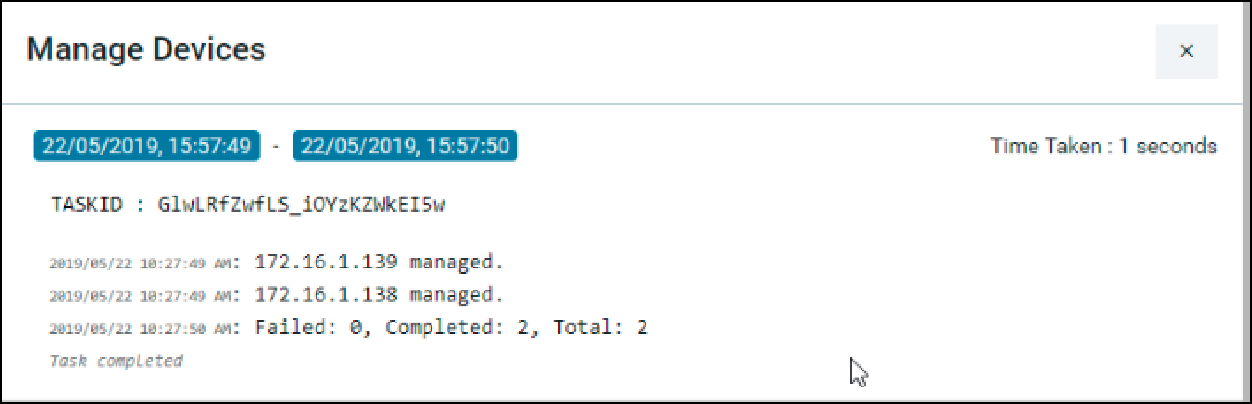

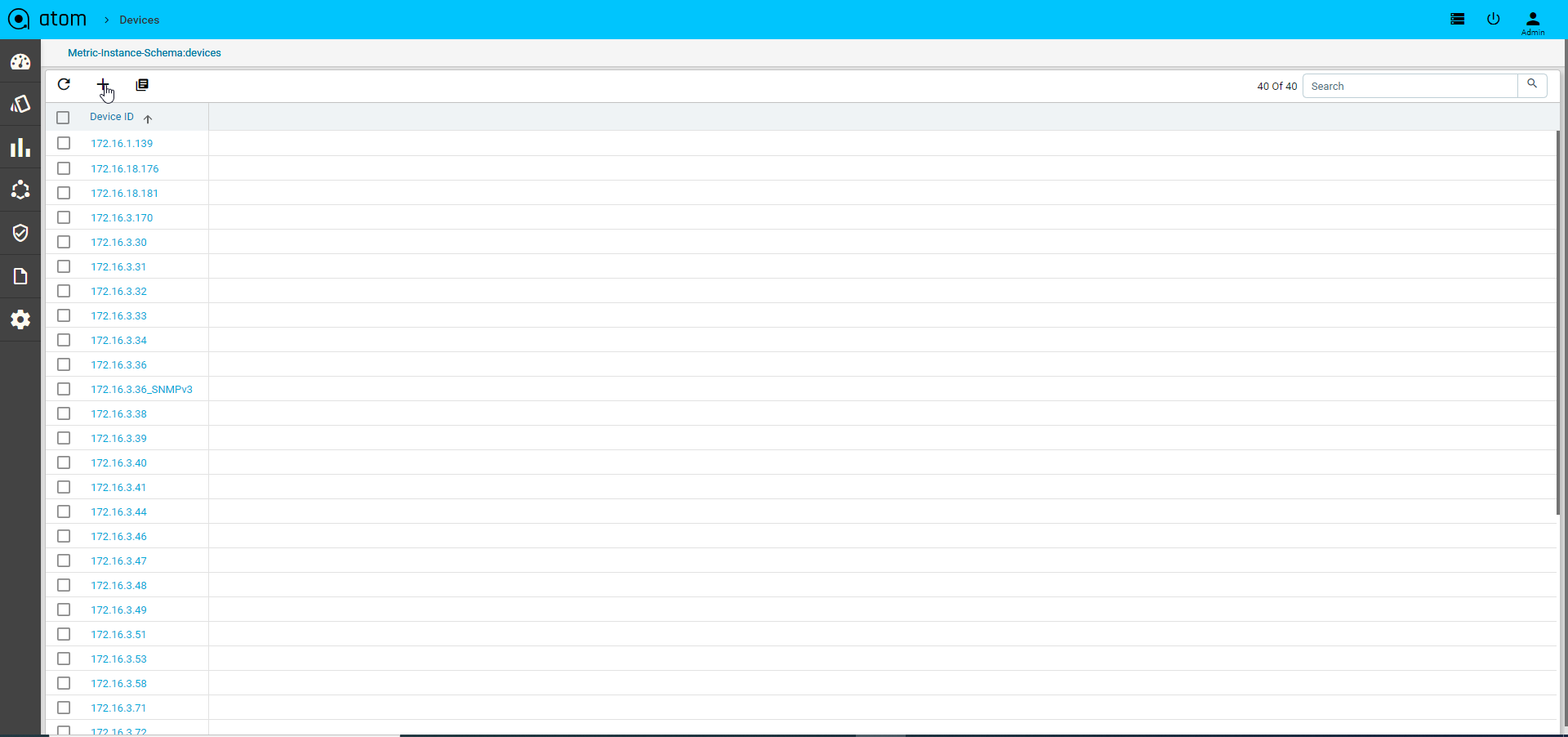

Device Onboarding

Devices can be onboarded into ATOM using an API, Manually through User Interface of Discovery using CDP/LLDP.

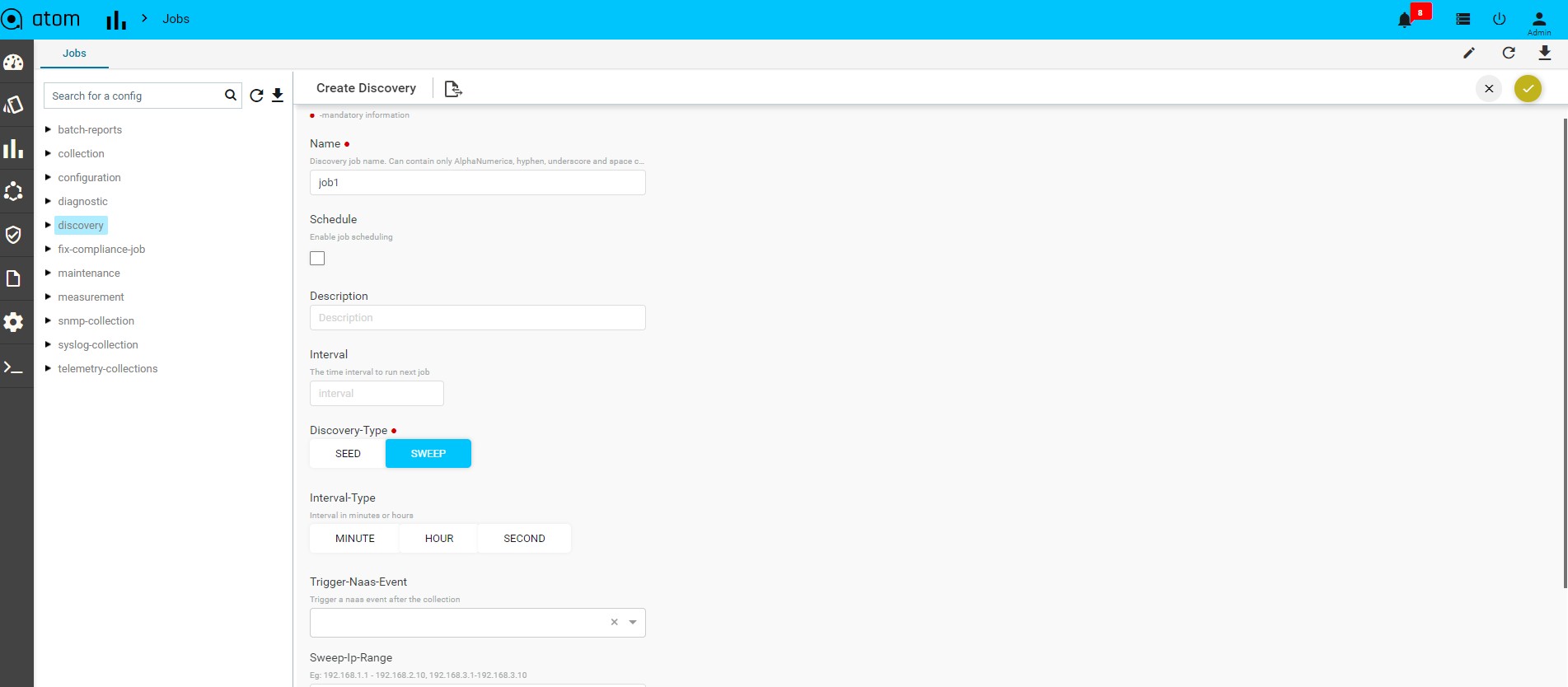

Discovering Devices:

Devices discovery is covered in section – Device Discovery Adding Device Manually:

We may have scenarios where device discovery is not viable. Some reasons below:

- Lack of support for Layer 2 discovery support on the device

- Operational/Administrative reason to not use LLDP/CDP

- SNMP Sweep discovery is not suitable – IP Address Range are not well defined, contiguous or some other reasons

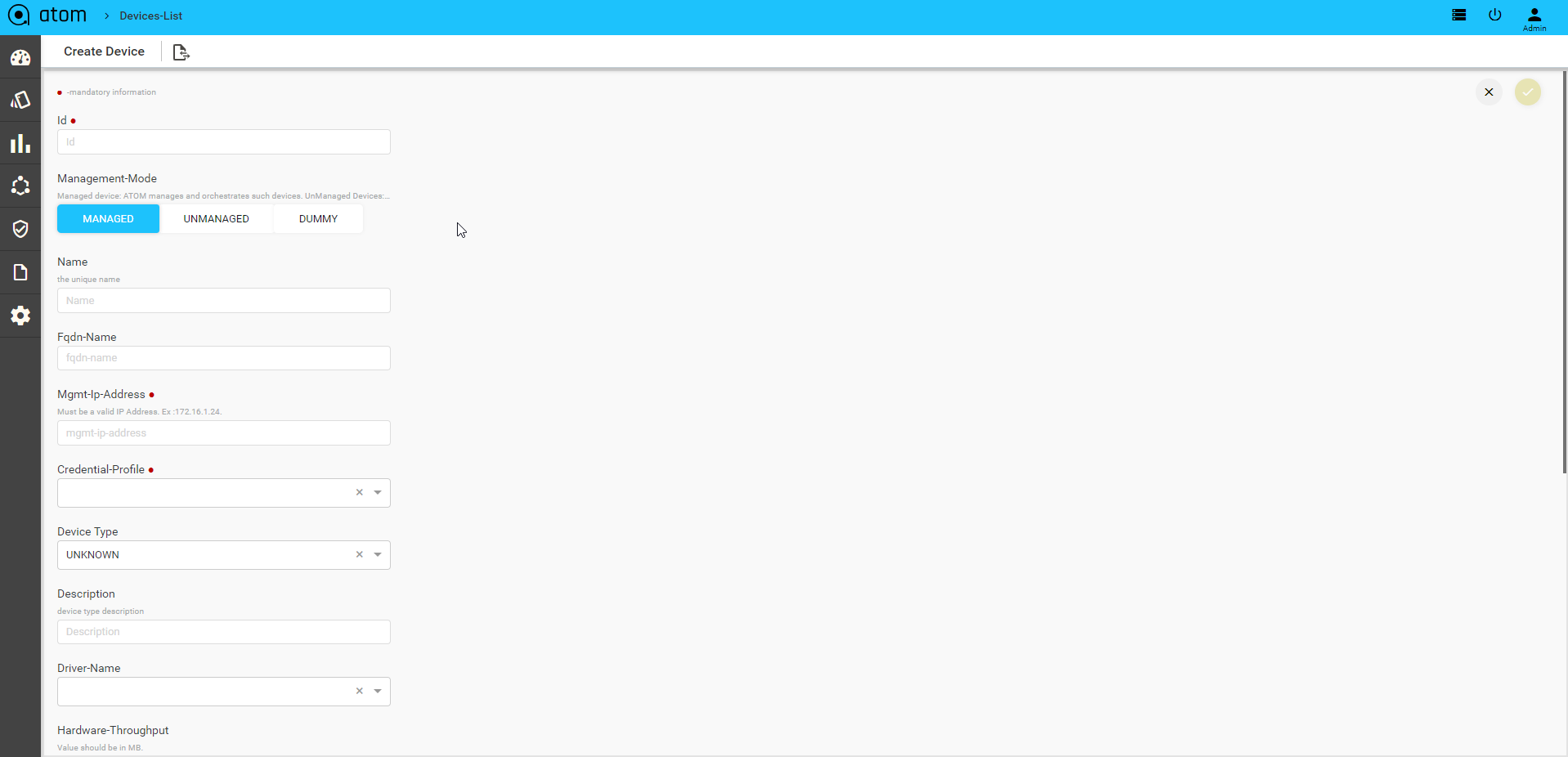

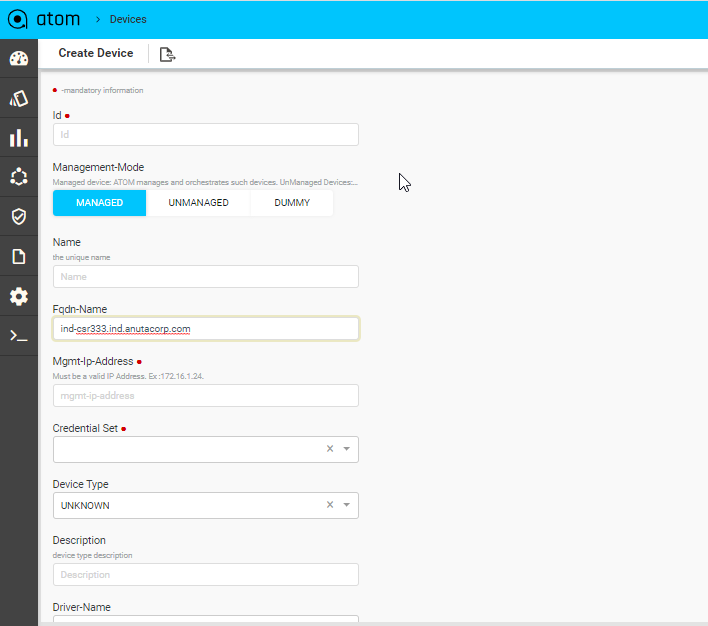

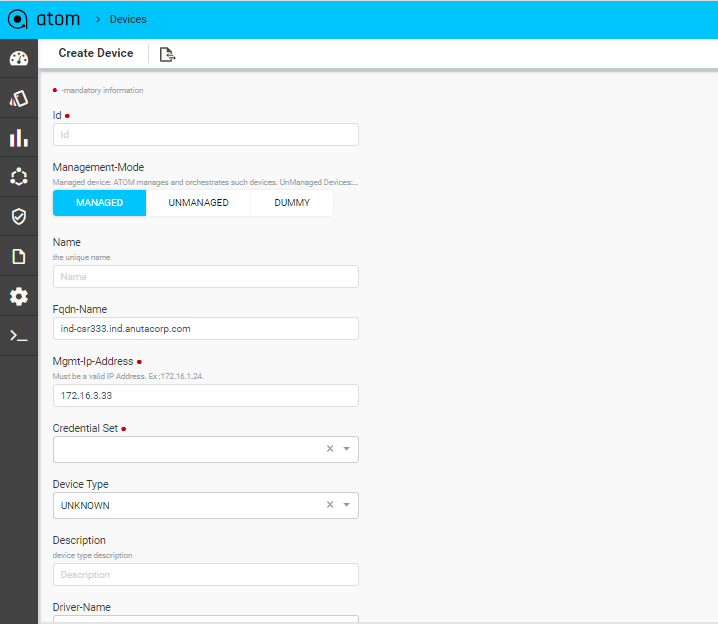

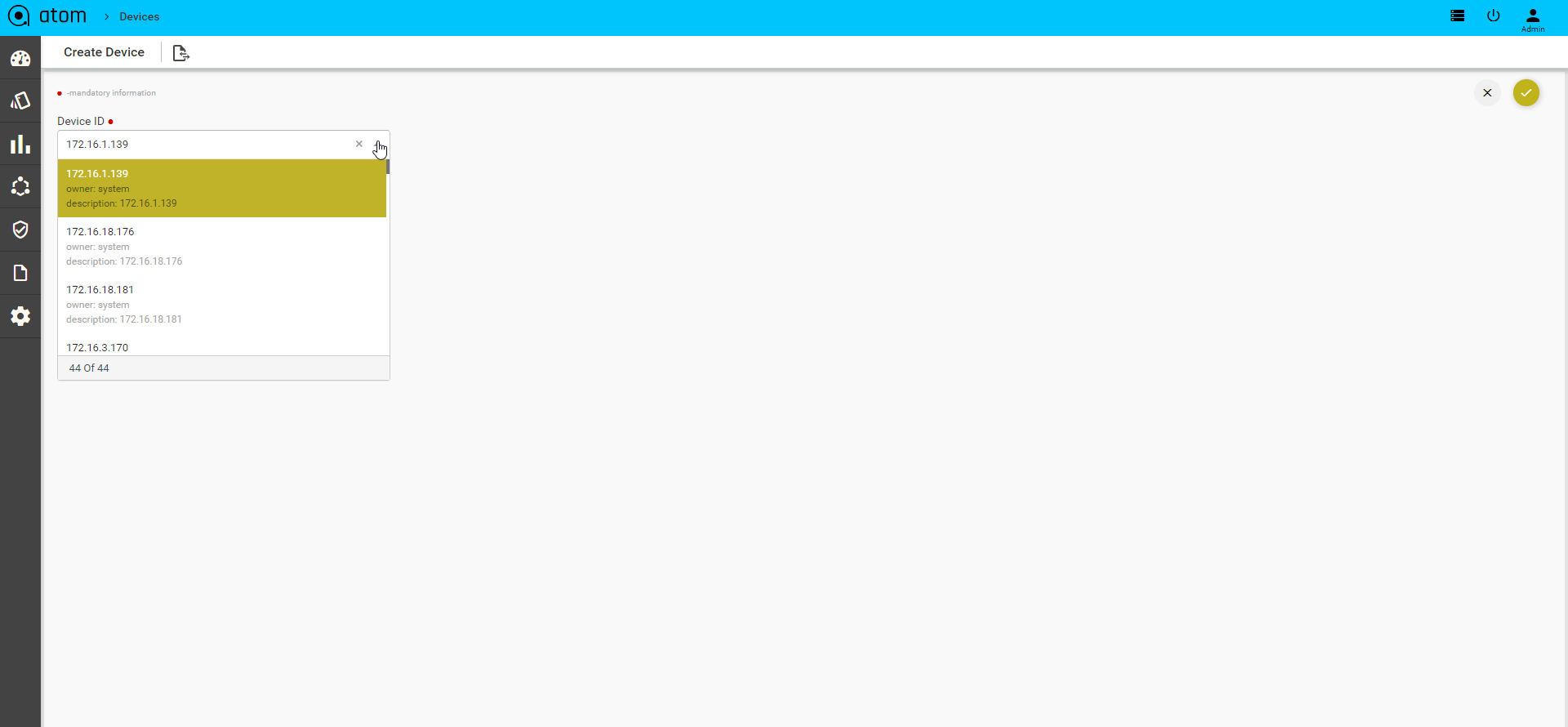

Before you begin, it’s mandatory to define Credential Sets & Credential Profiles. To Add/Edit a Device:

- Navigate to Resource Manager > Devices (Grid View)

- Add – Select Add action

- IP address: Enter the IP address of the device

- Credential Profile: Select the Credential Profile of the device

- Driver name: Driver can be selected for API devices.

- Latitude & Longitude: is a measurement on a globe or map of location north or south of the Equator on devices

- Modify – Select Device & Select Edit action

- Delete – Select one/more device(s) and Select Delete Action

Upon device addition, ATOM will perform the following:

Added Devices are shown in Devices grid and Device status will be shown in Green if device is SNMP reachable and ATOM is able to work with the device successfully.

Device Views

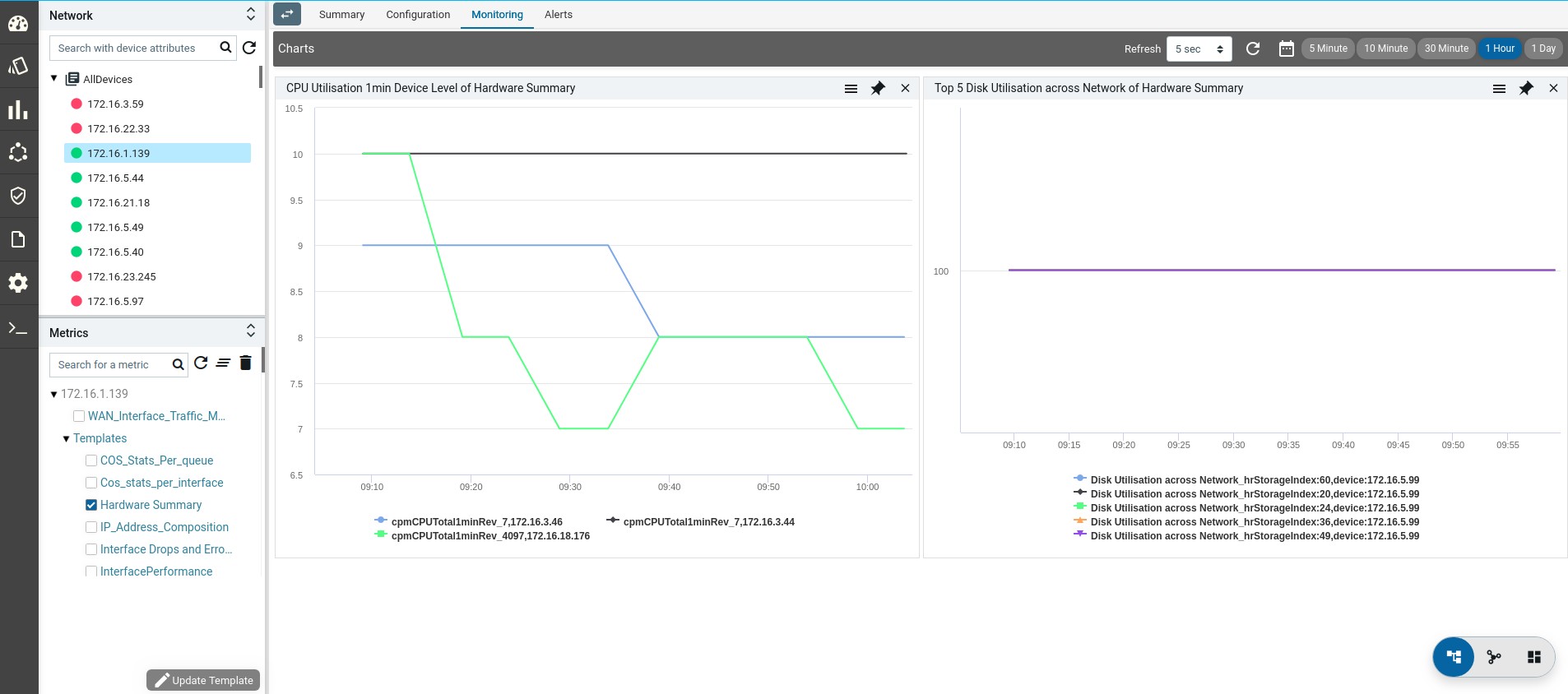

ATOM has 3 views for the devices – Tree (Device Explorer), Topology and Grid.

- Tree View:

- Topology View

- Grid View:

Device Explorer

Device explorer view will provide the devices, its associated config and observability elements in logical hierarchy. This view contains the available device-groups and its associated devices . By default, all the devices are part of AllDevices Group.

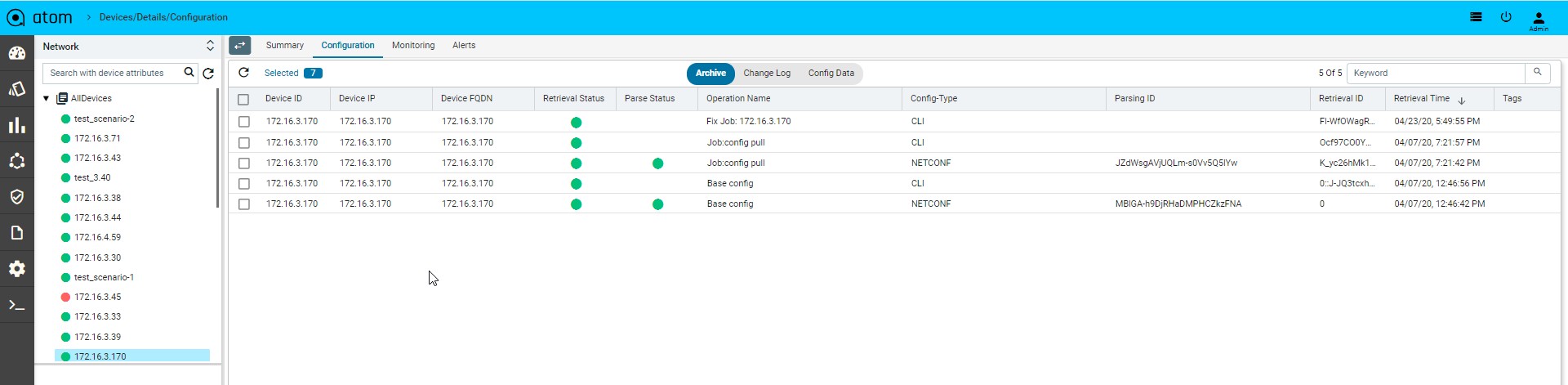

Device group will have all the corresponding device details Each group and node will have the following sections:

- Summary : It provides the device platform, version, serial number, current operating OS, Device hardware health, Interface summary, Config compliance violations and Active alerts and recent activity.

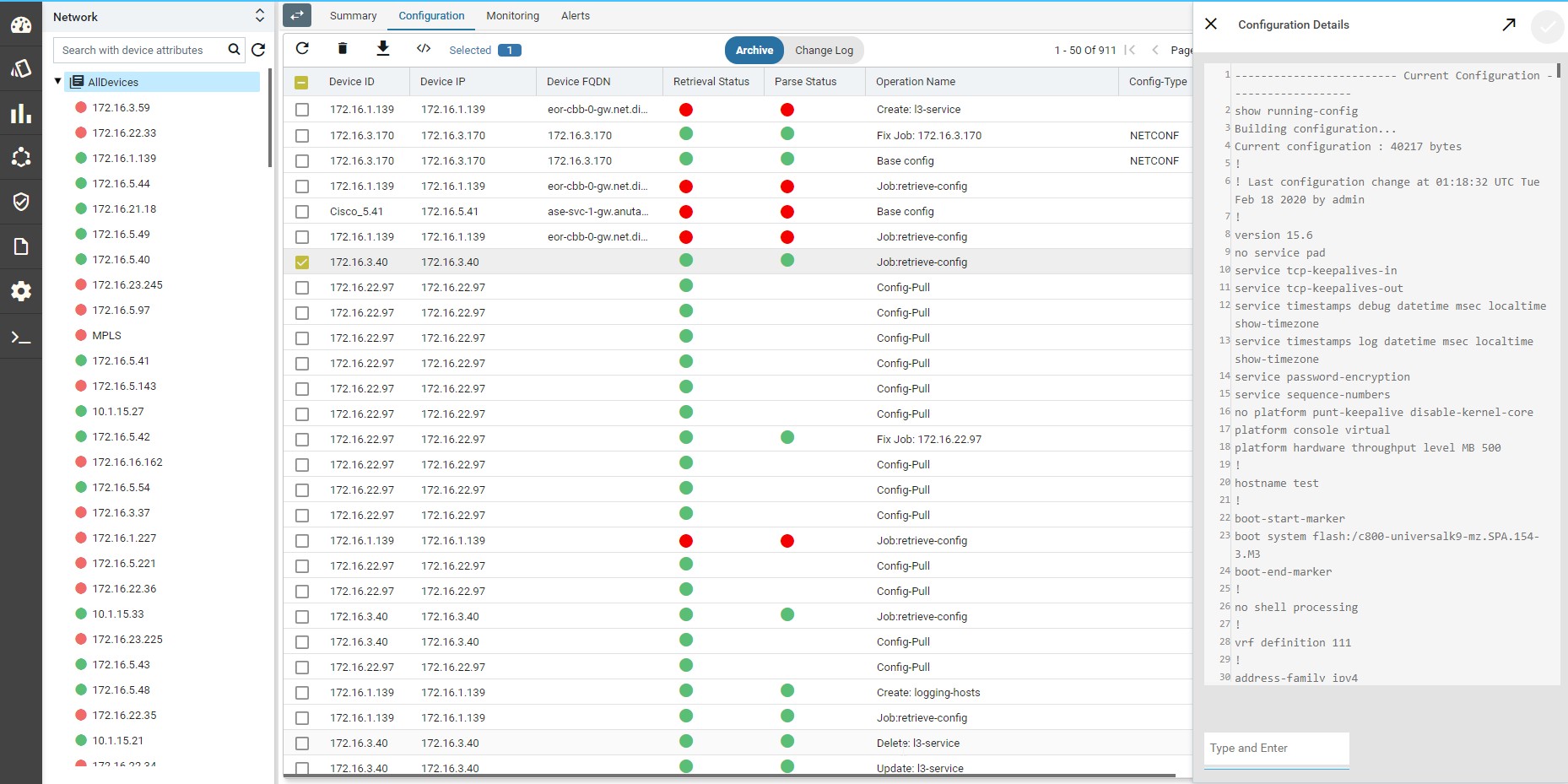

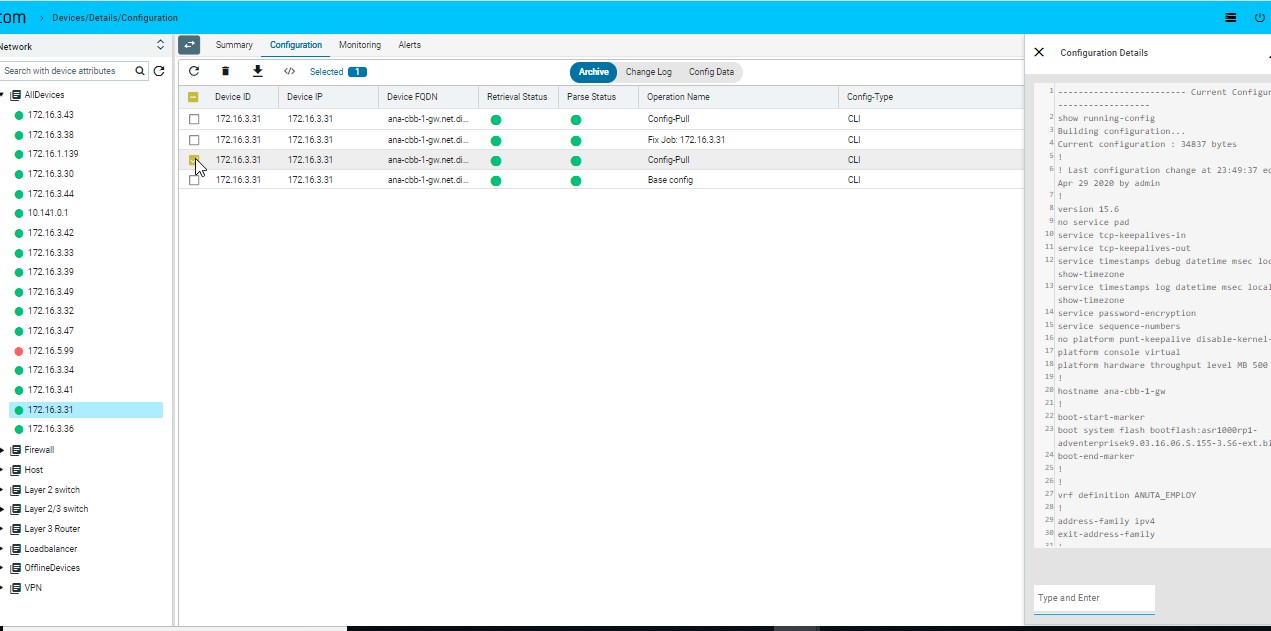

- Configuration : it provides the entire summary of config related operations.

- Config Archive : It shows the each config retrieval, type, retrieval & parsing status.

- Changelog : provides the summary of change in configuration such as number of lines added, deleted or modified and at what time & corresponding changes.

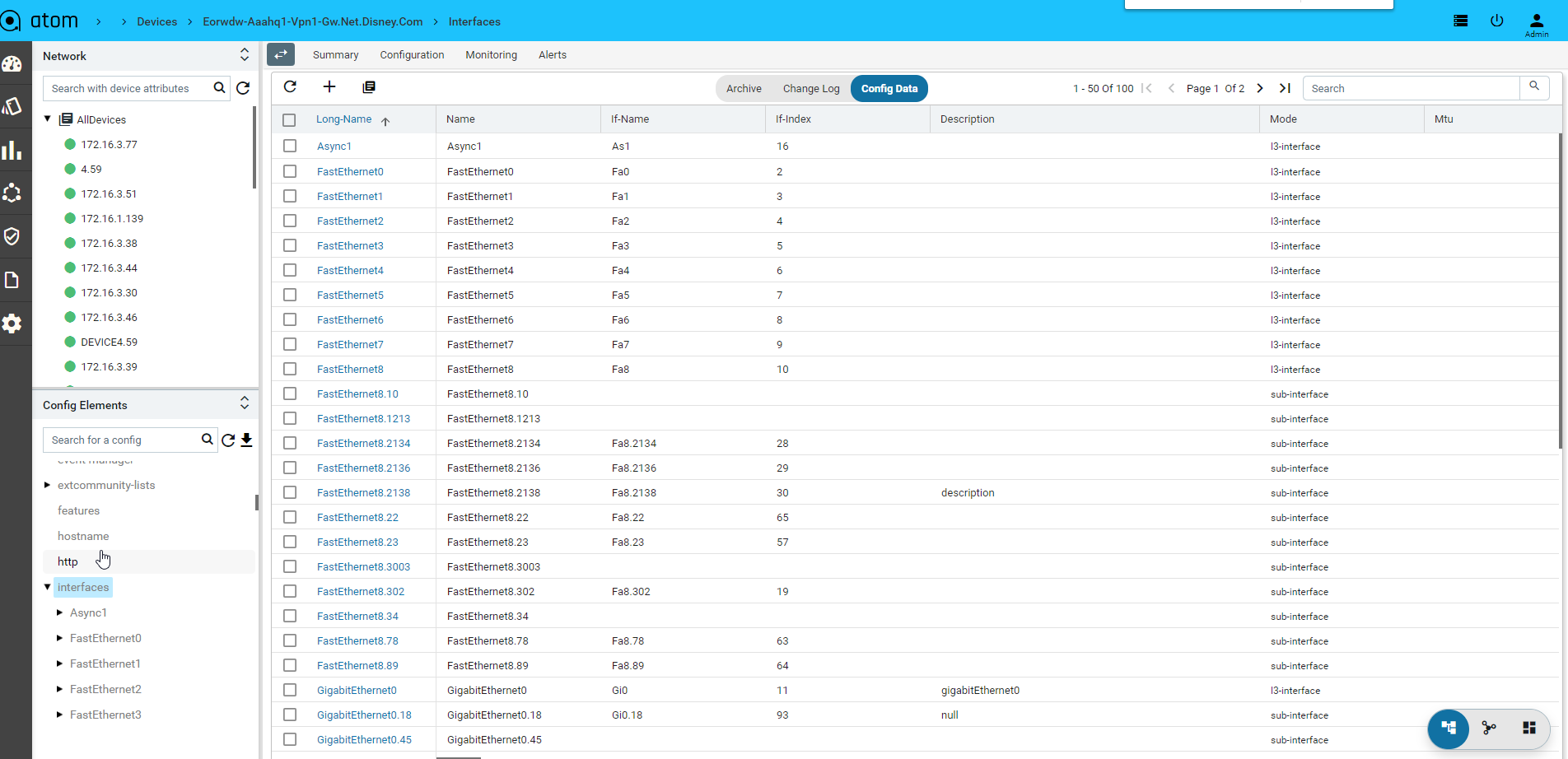

- Config Data : it will provide the entire config tree through YANG models parsing. This is not applicable for any device group as they can have heterogeneous models based on the grouping criteria & provisioning interface such as ATOM abstract device models, OC or Native models.

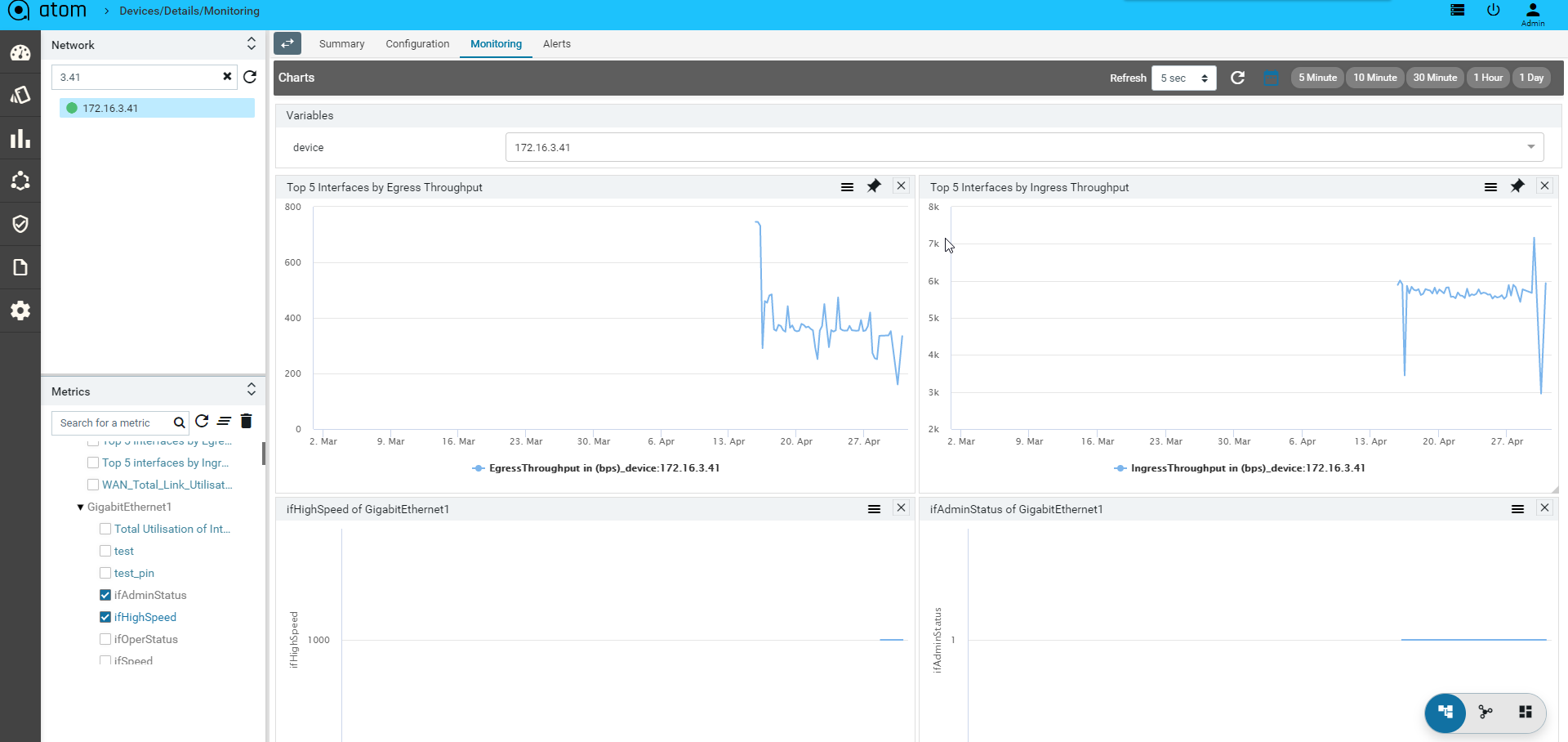

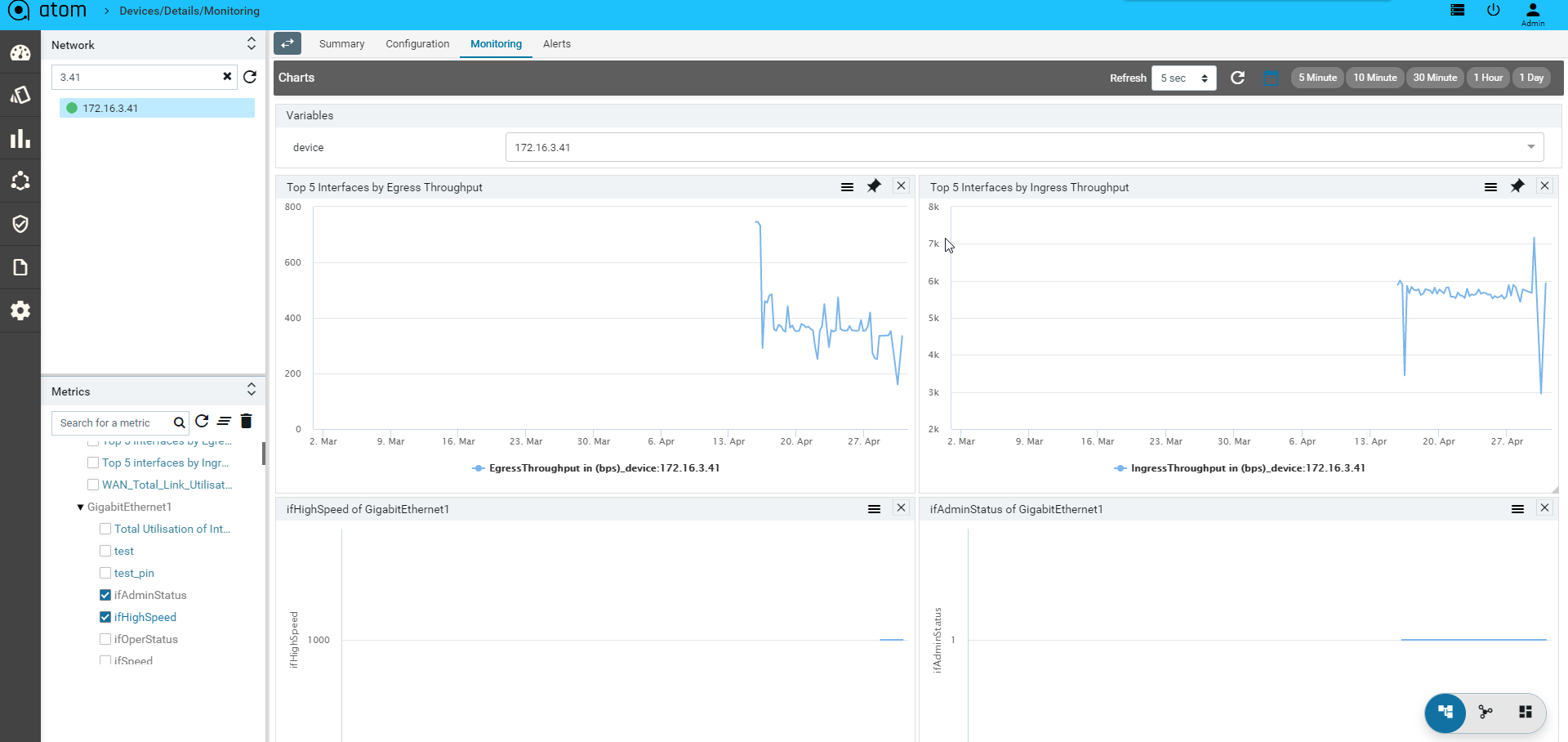

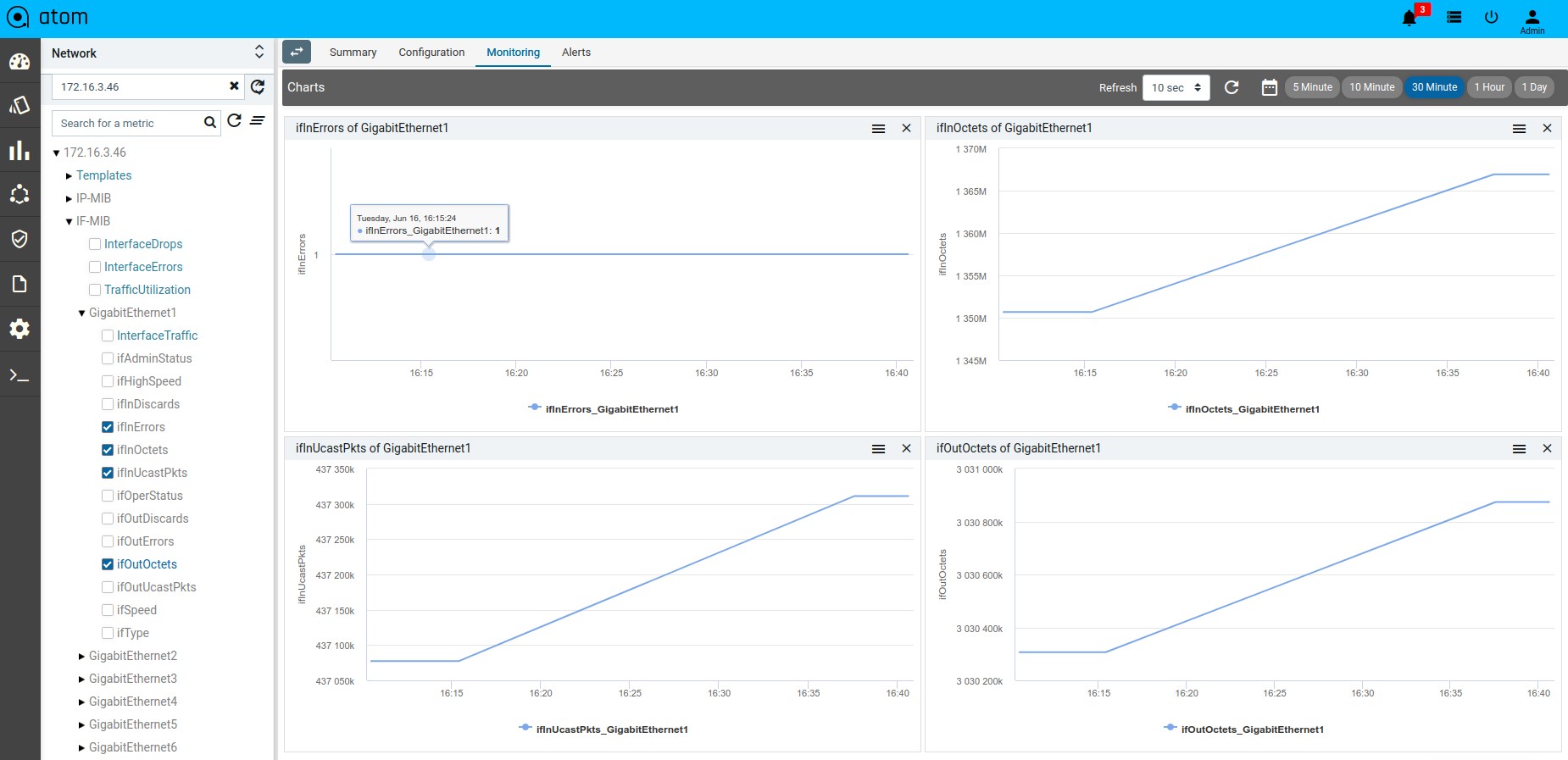

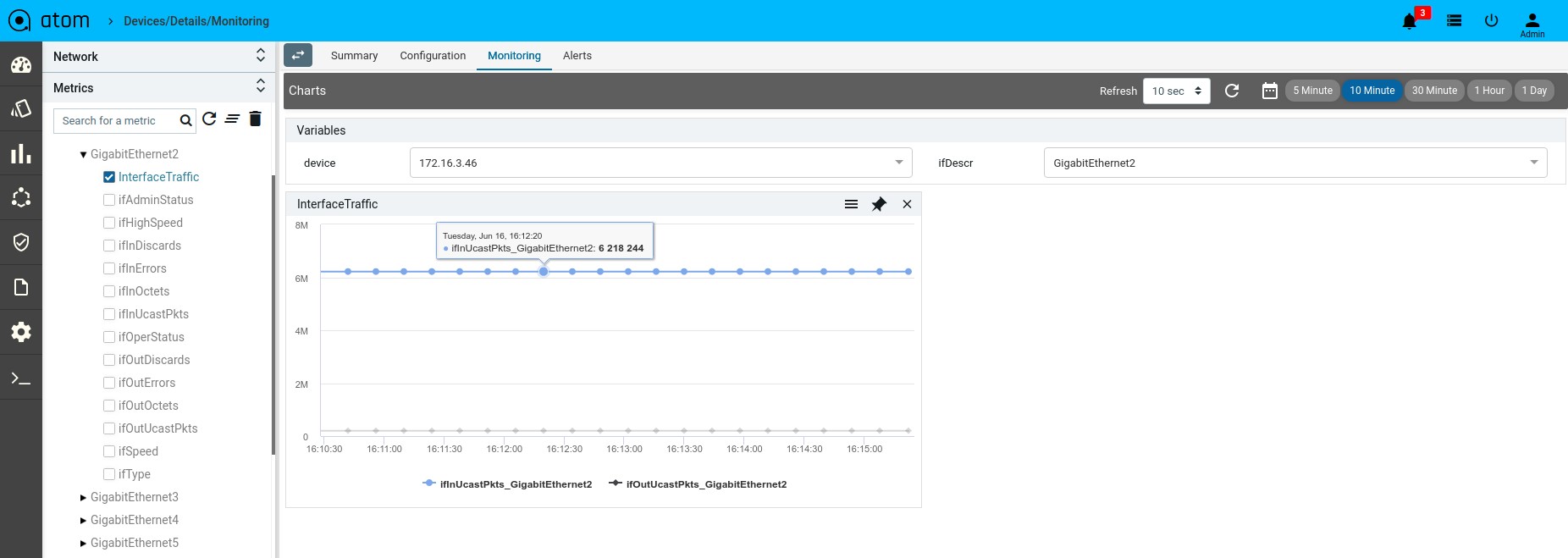

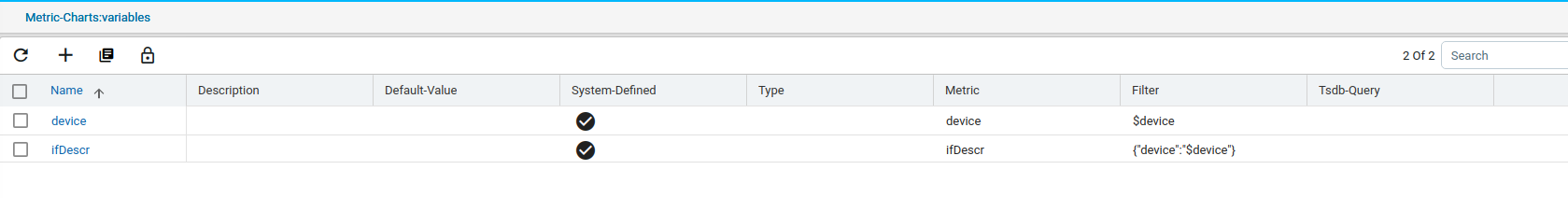

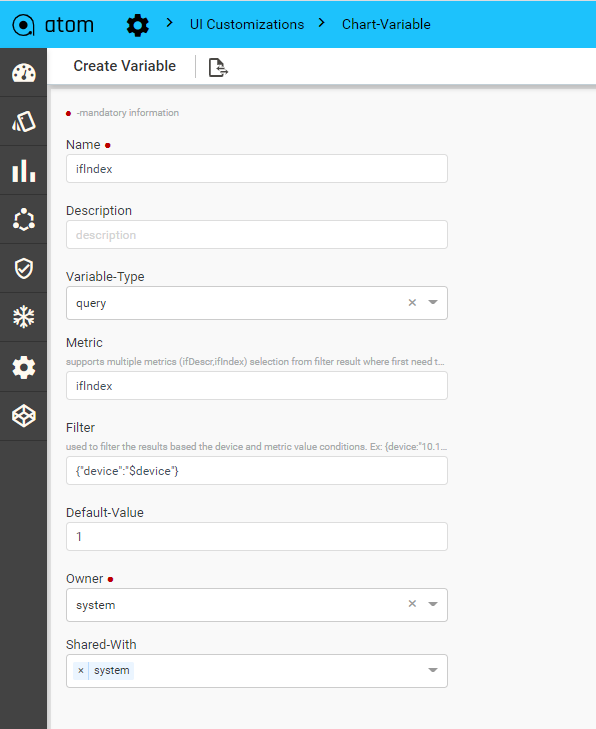

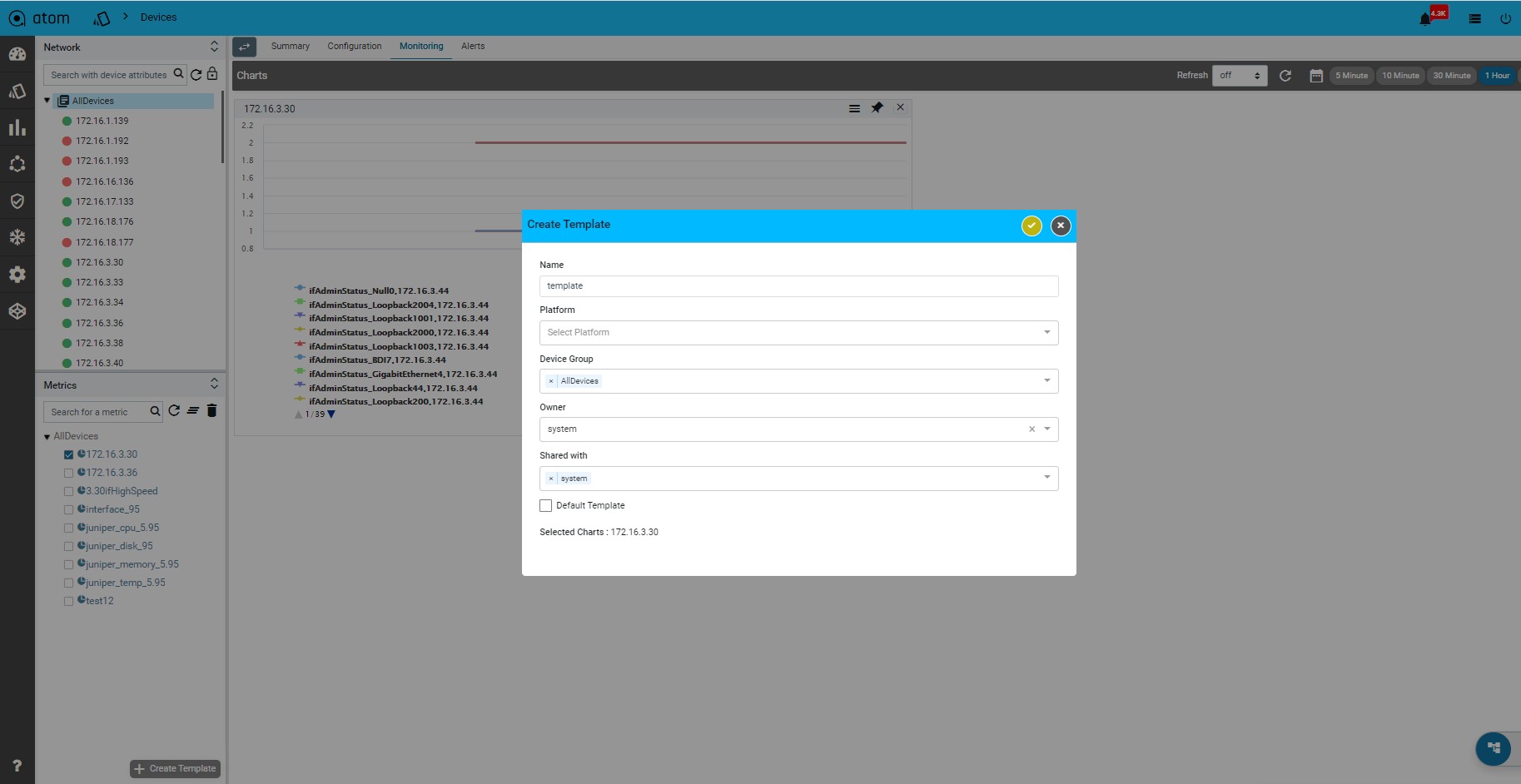

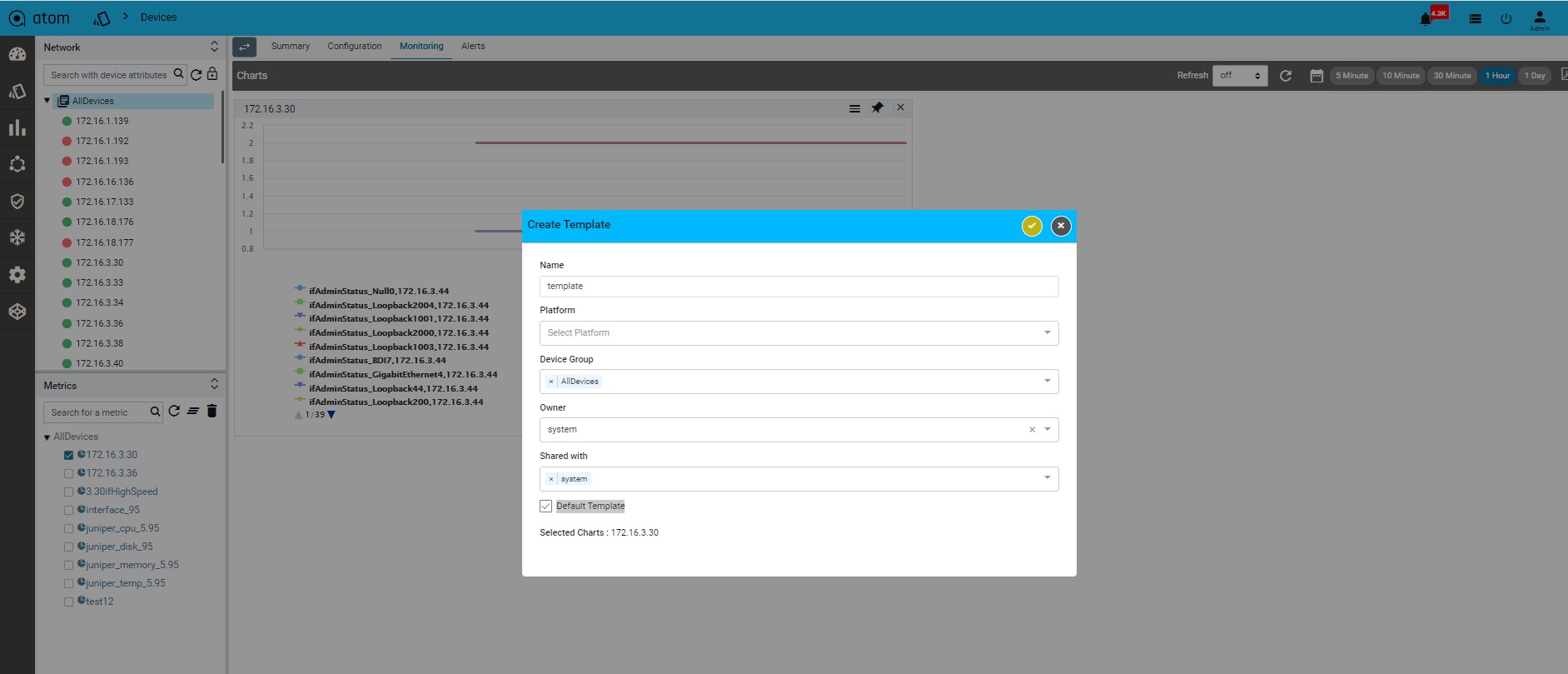

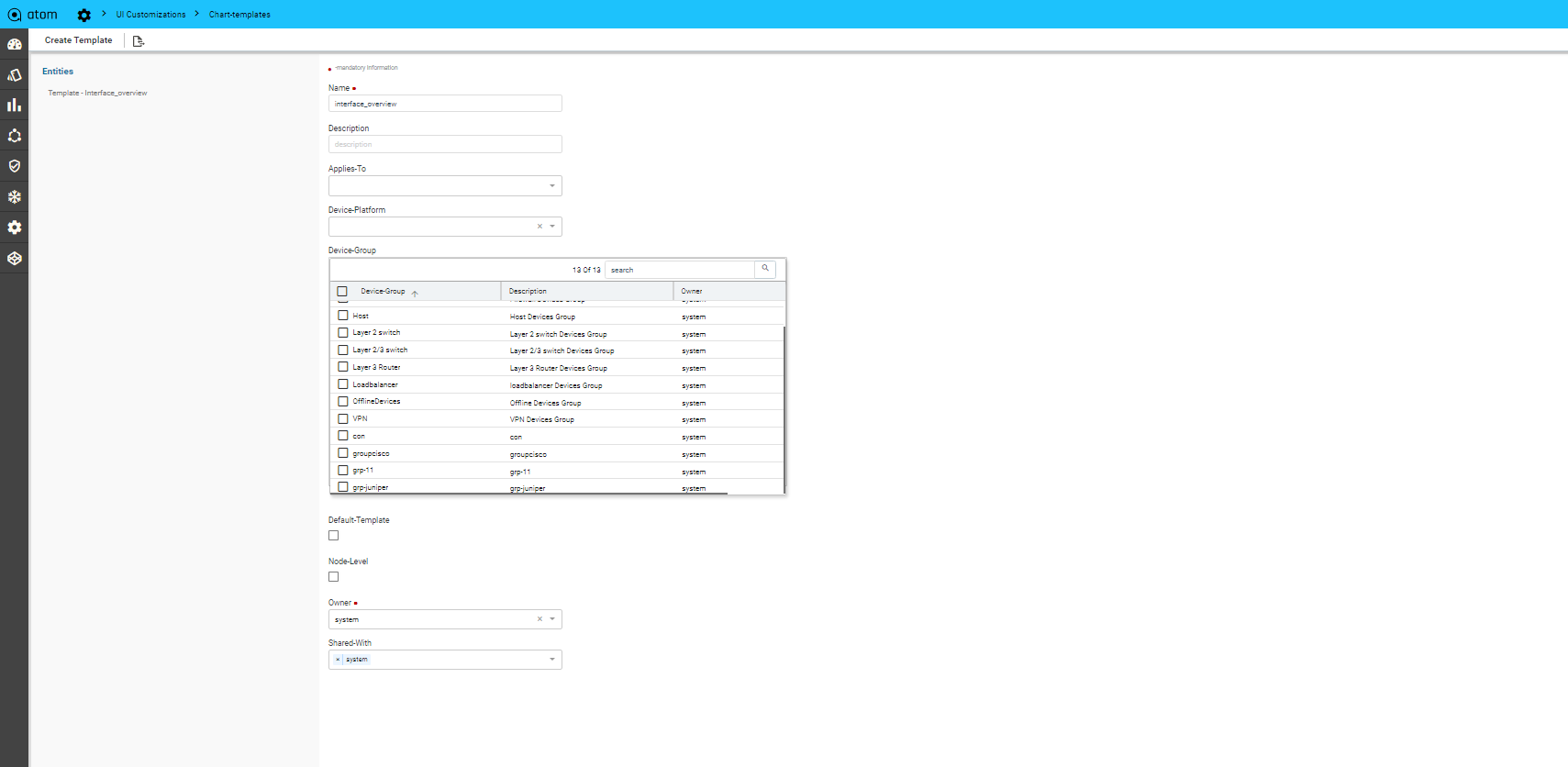

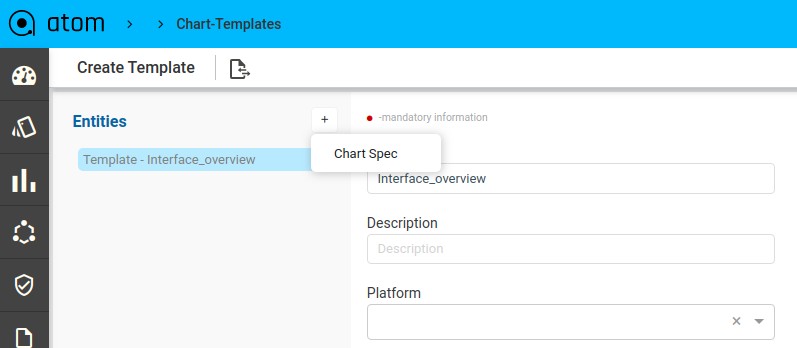

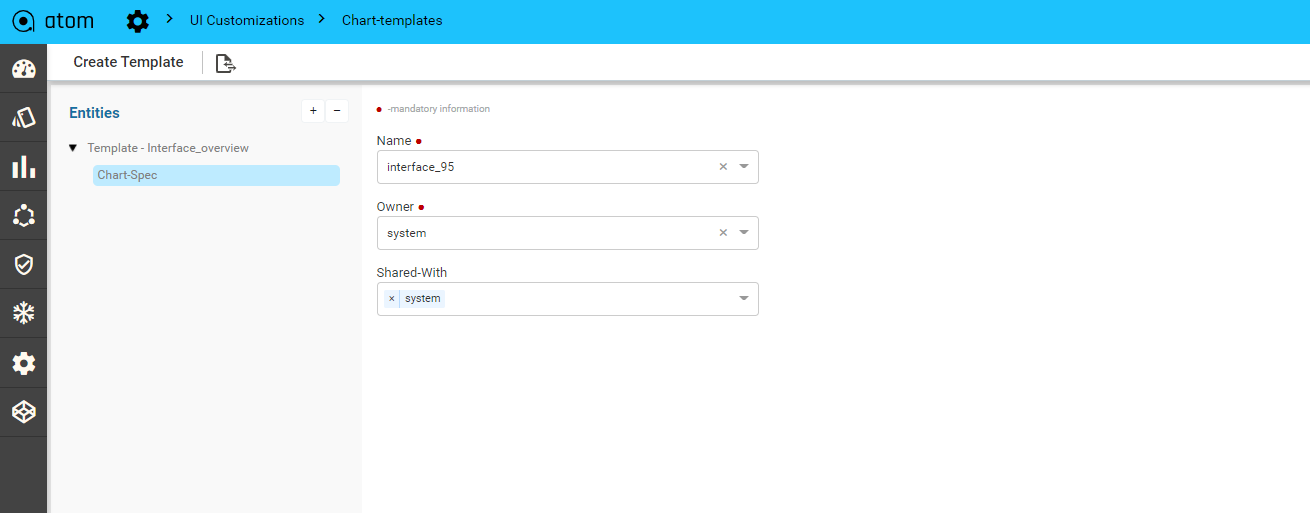

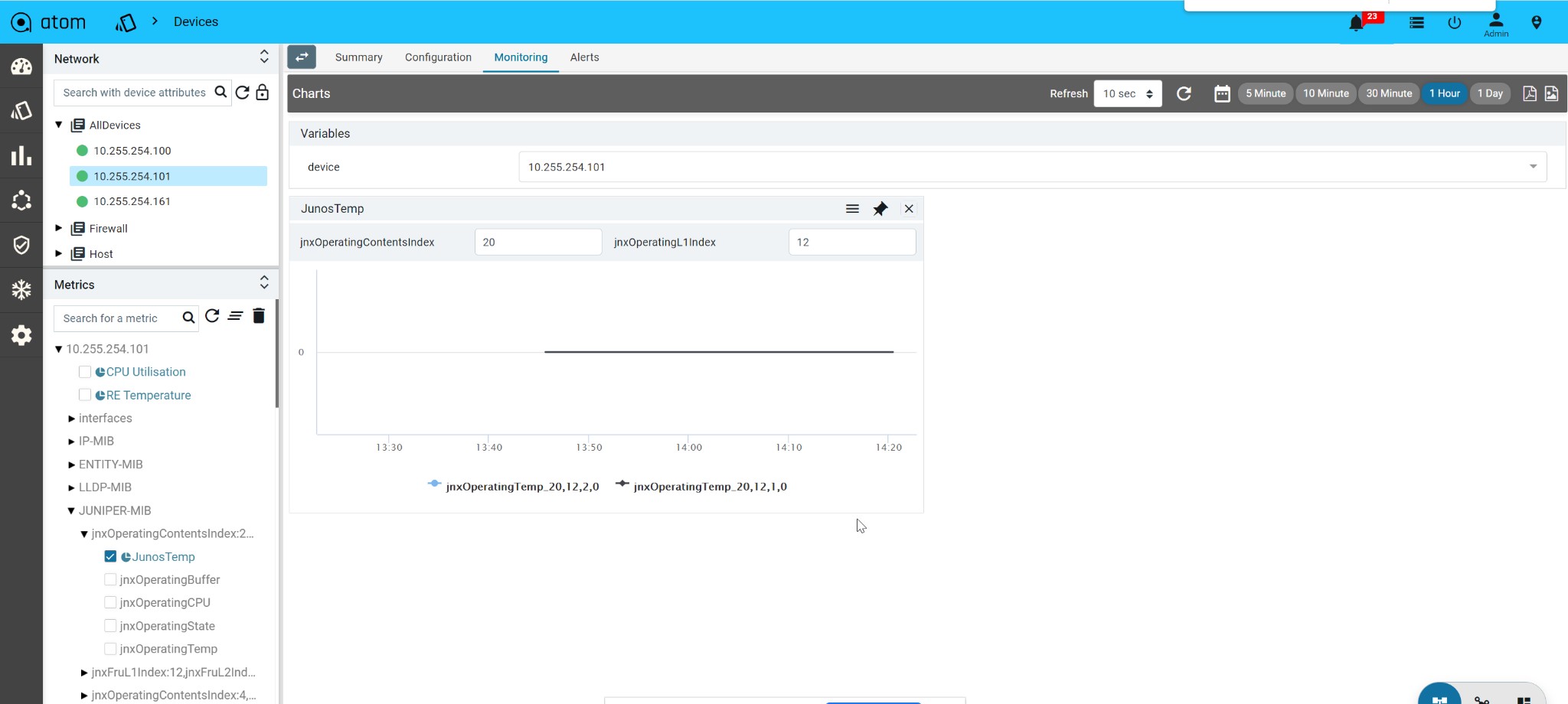

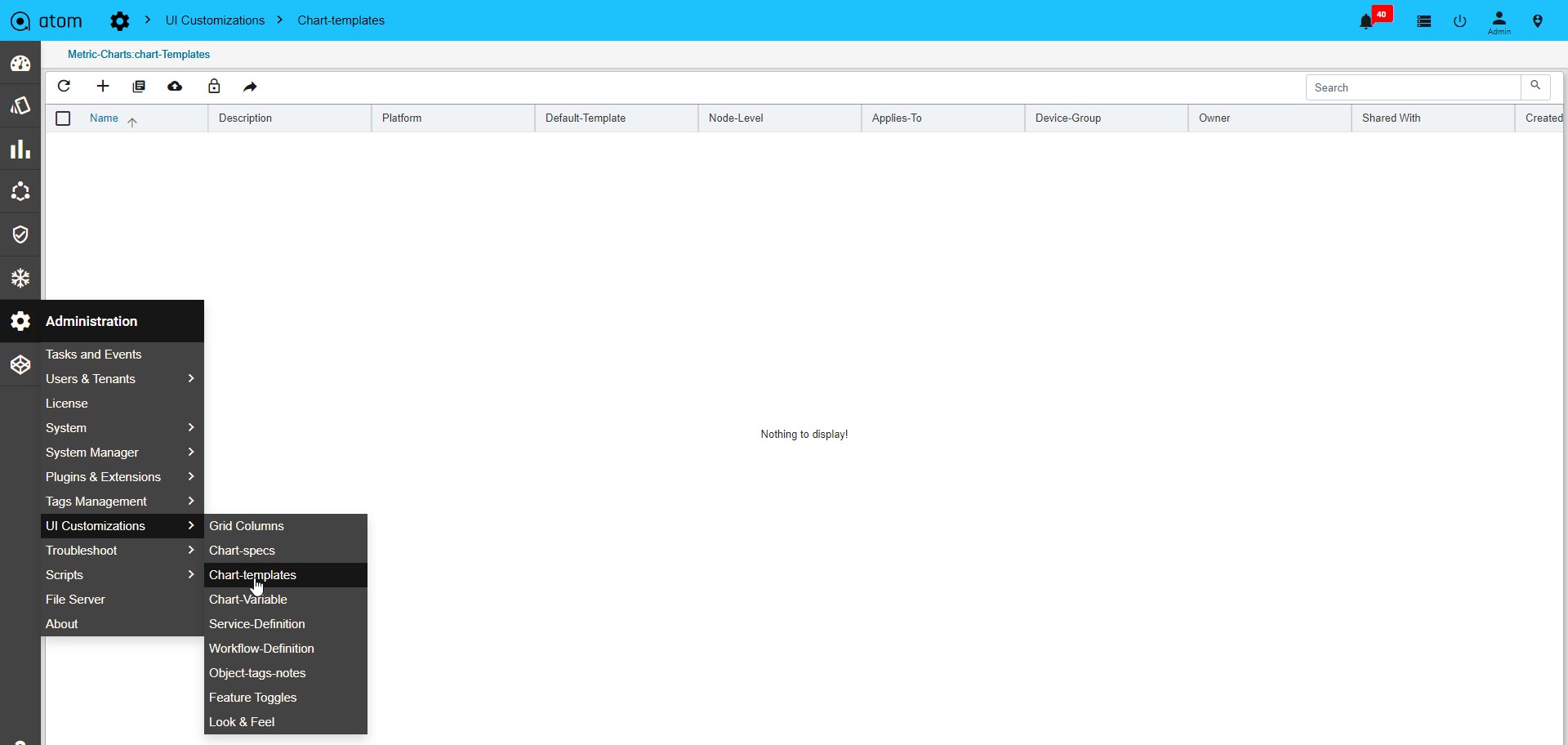

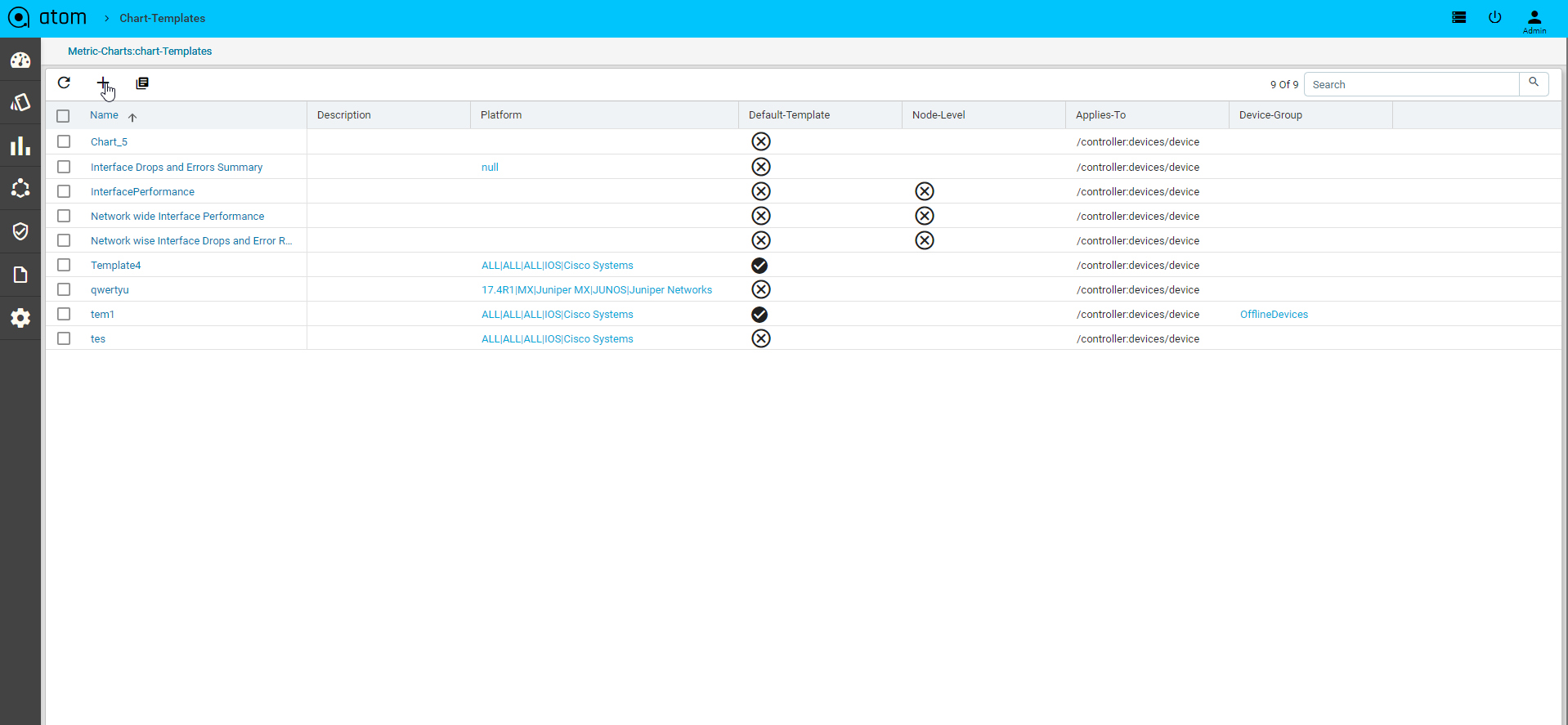

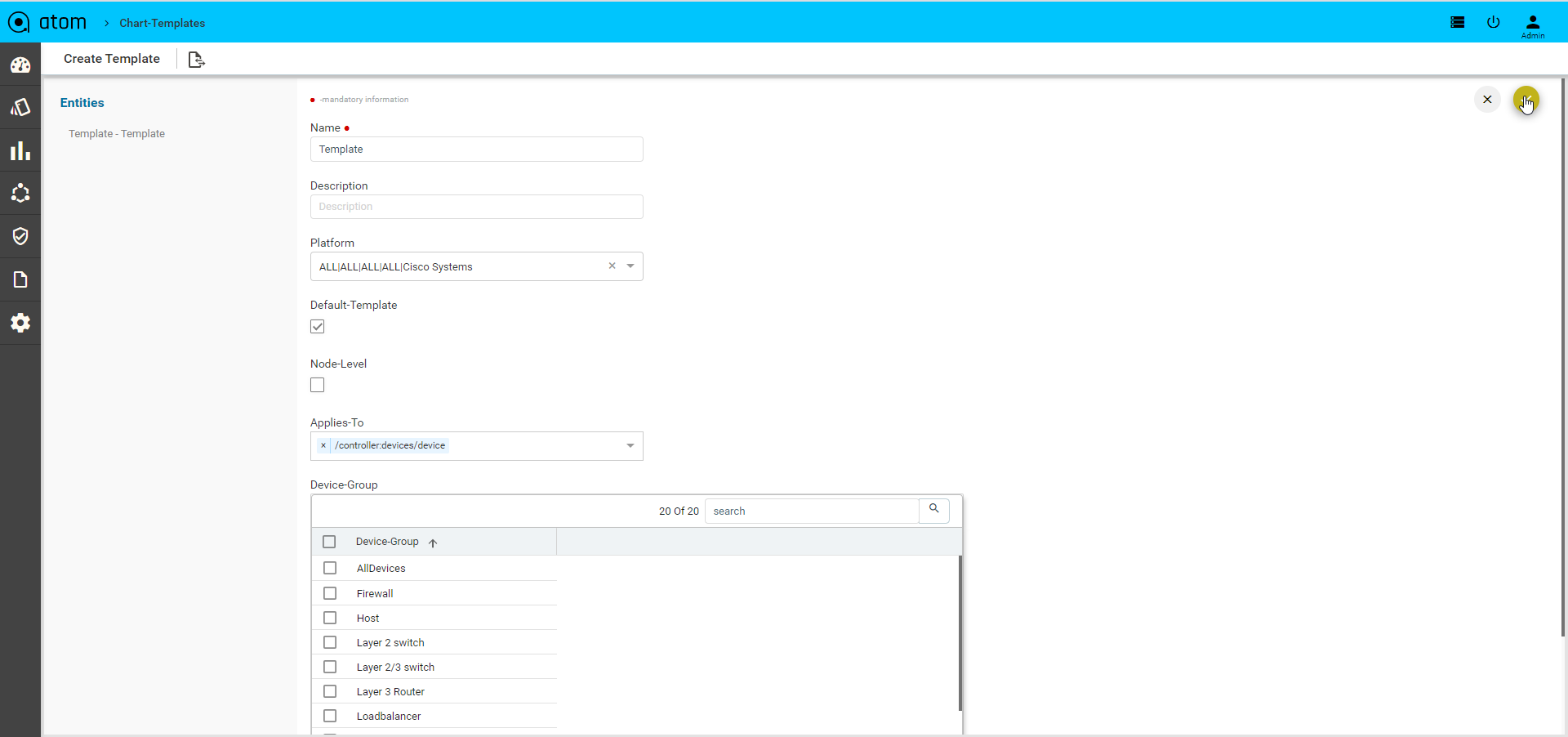

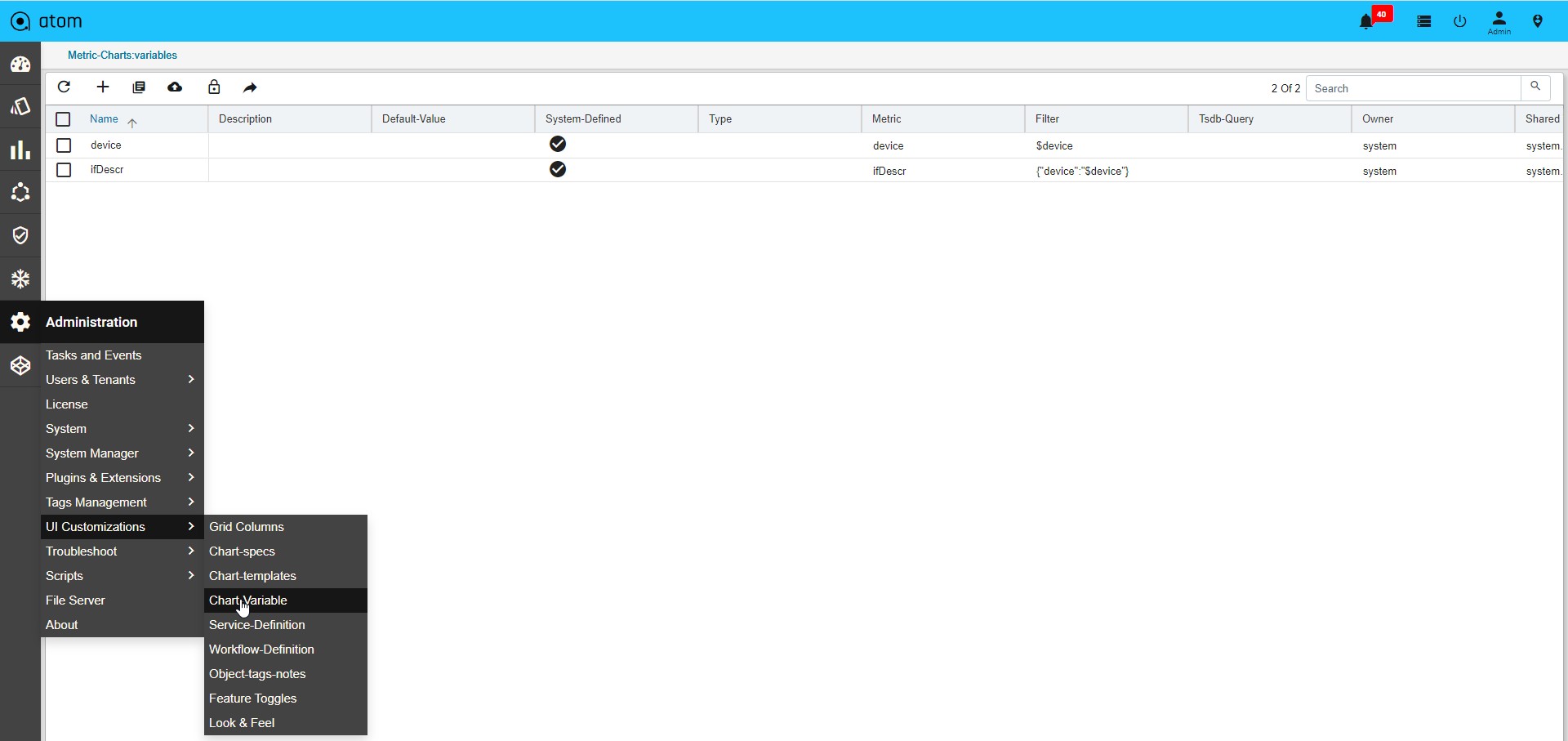

- Monitoring : It contains all possible templates & charts through inheritance from its group or node level. It will show the default template by default as its monitoring summary. Refer Monitoring Guide for more details.

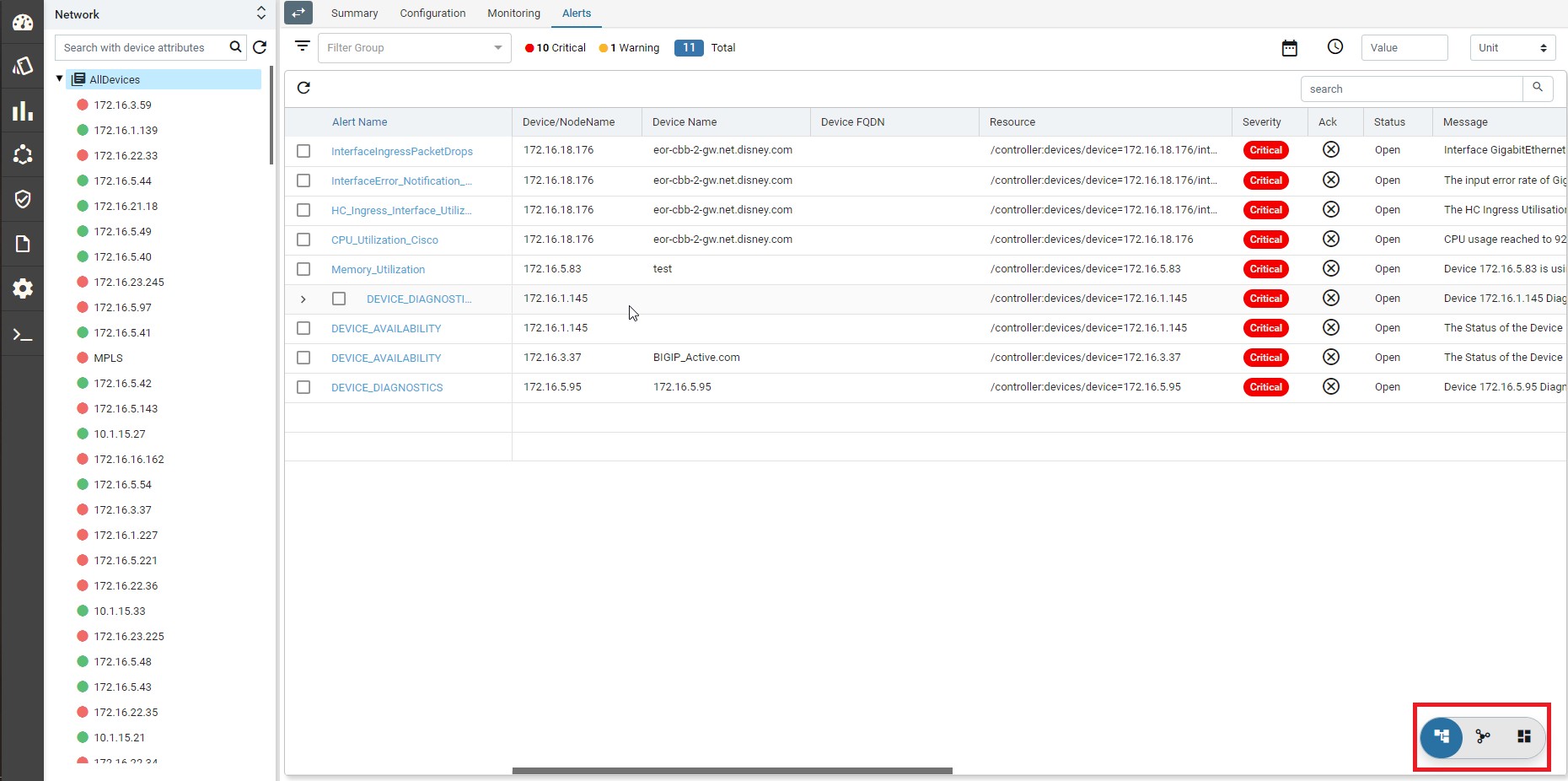

- Alerts : It will show the all active alerts and its history by default. Alert filter view is also available to search & prioritise the alerts. Refer Alerting Guide for more details.

Each device-group view will have a Summary dashboard which can be customizable.

Device Actions

ATOM supports common actions on Device. These actions can be performed from Device Grid view on one or more devices or from within the Device specific view and will be discussed in Device Summary section.

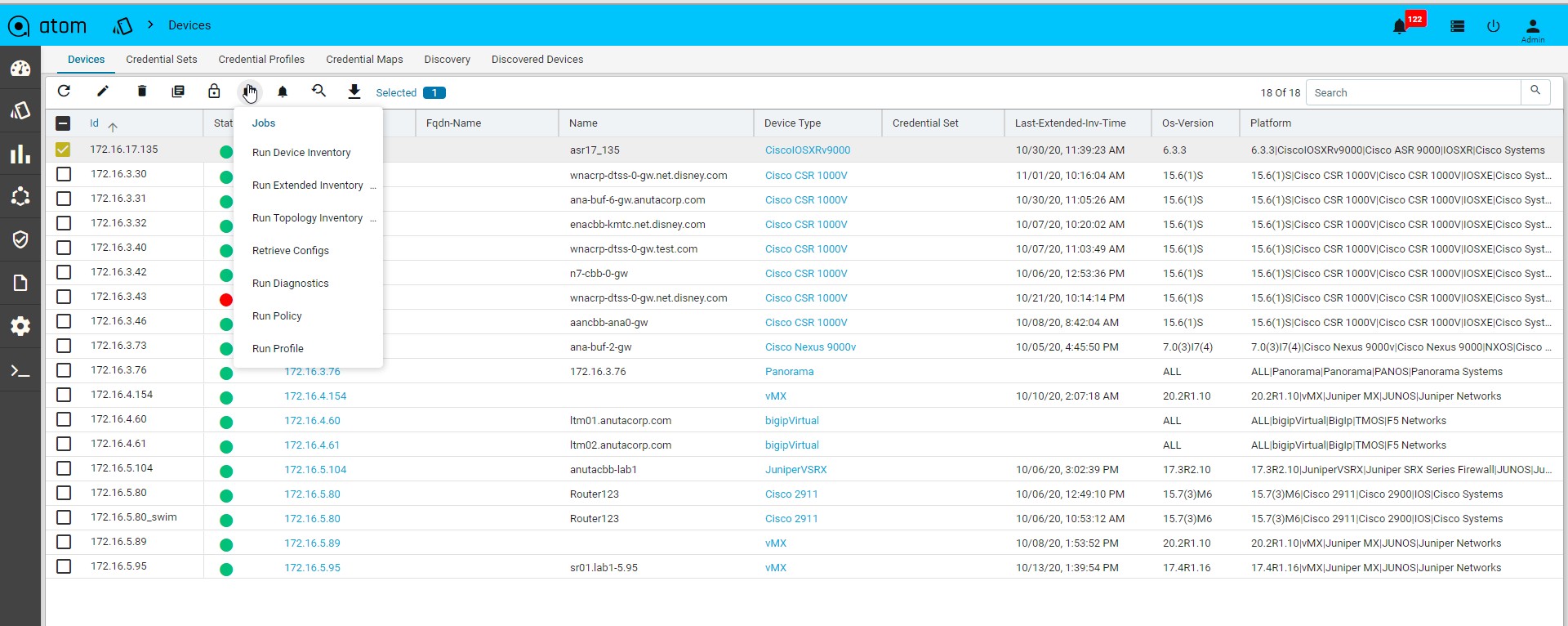

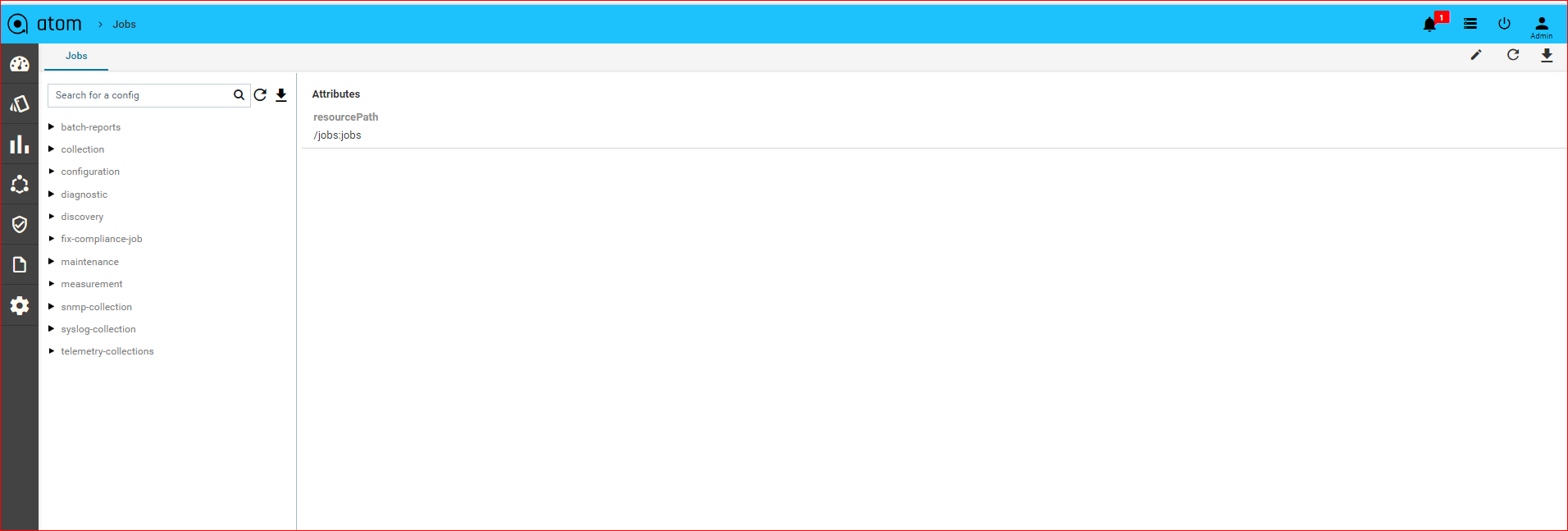

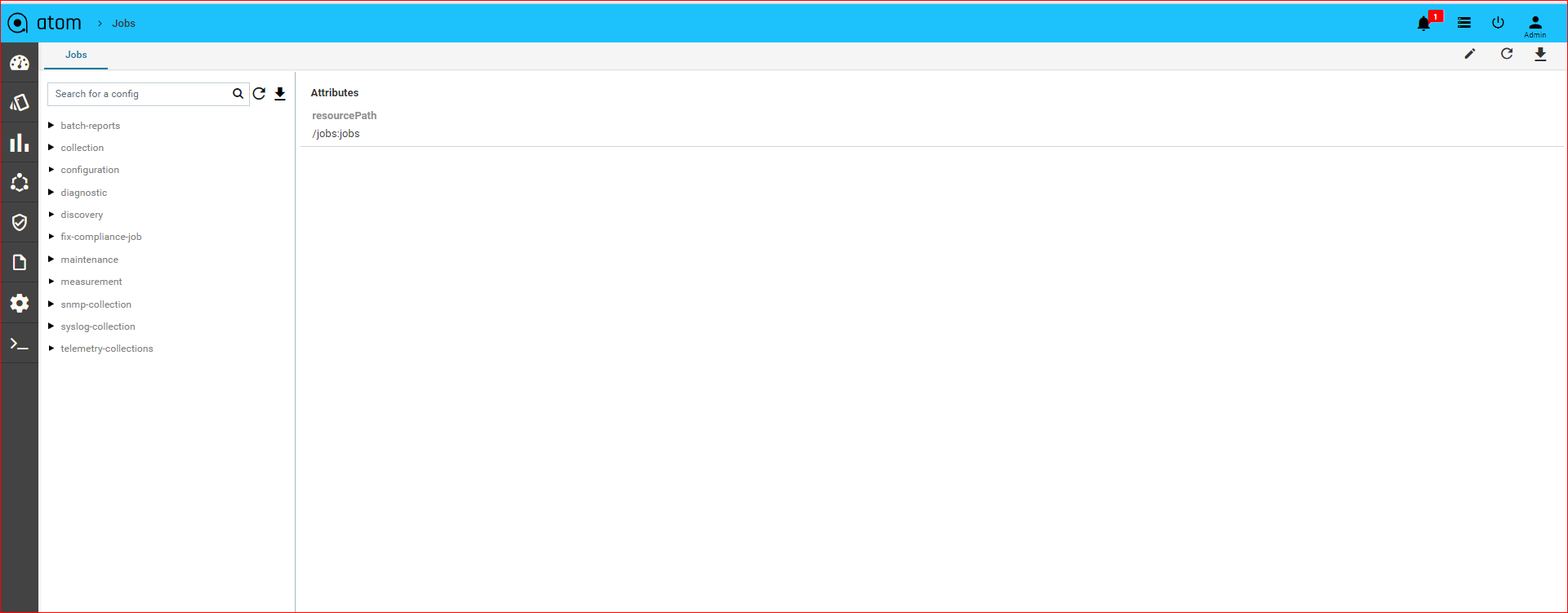

Jobs & Subscriptions

Various Collection & Diagnostics jobs can be invoked.

- Navigate to Devices > select one or more devices

- Click on the Jobs and select the job to run

- Jobs action -> Run Device Inventory

- Jobs action -> Run Extended Inventory

- Jobs action -> Run Topology Inventory

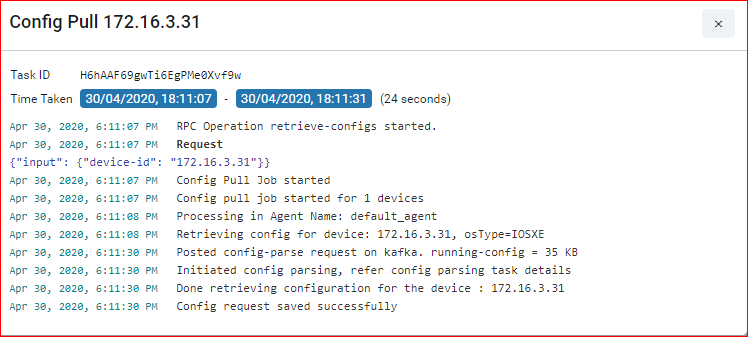

- Jobs action -> Retrieve Configs

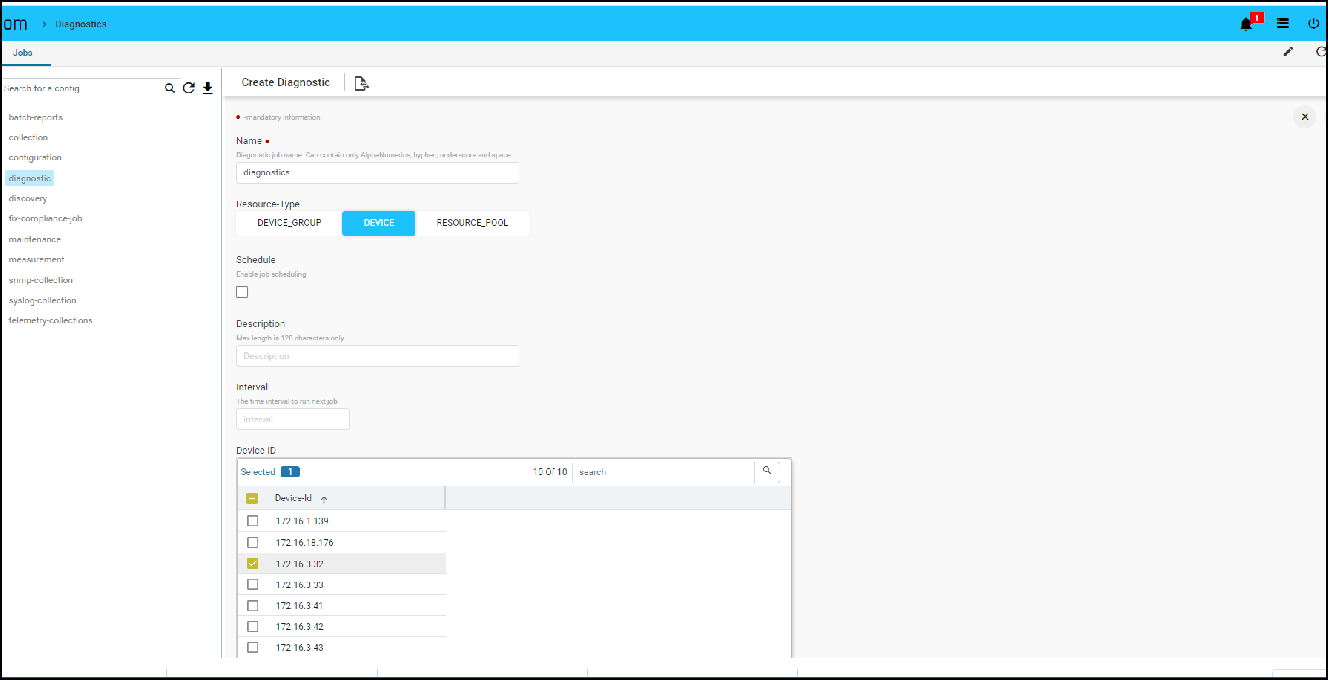

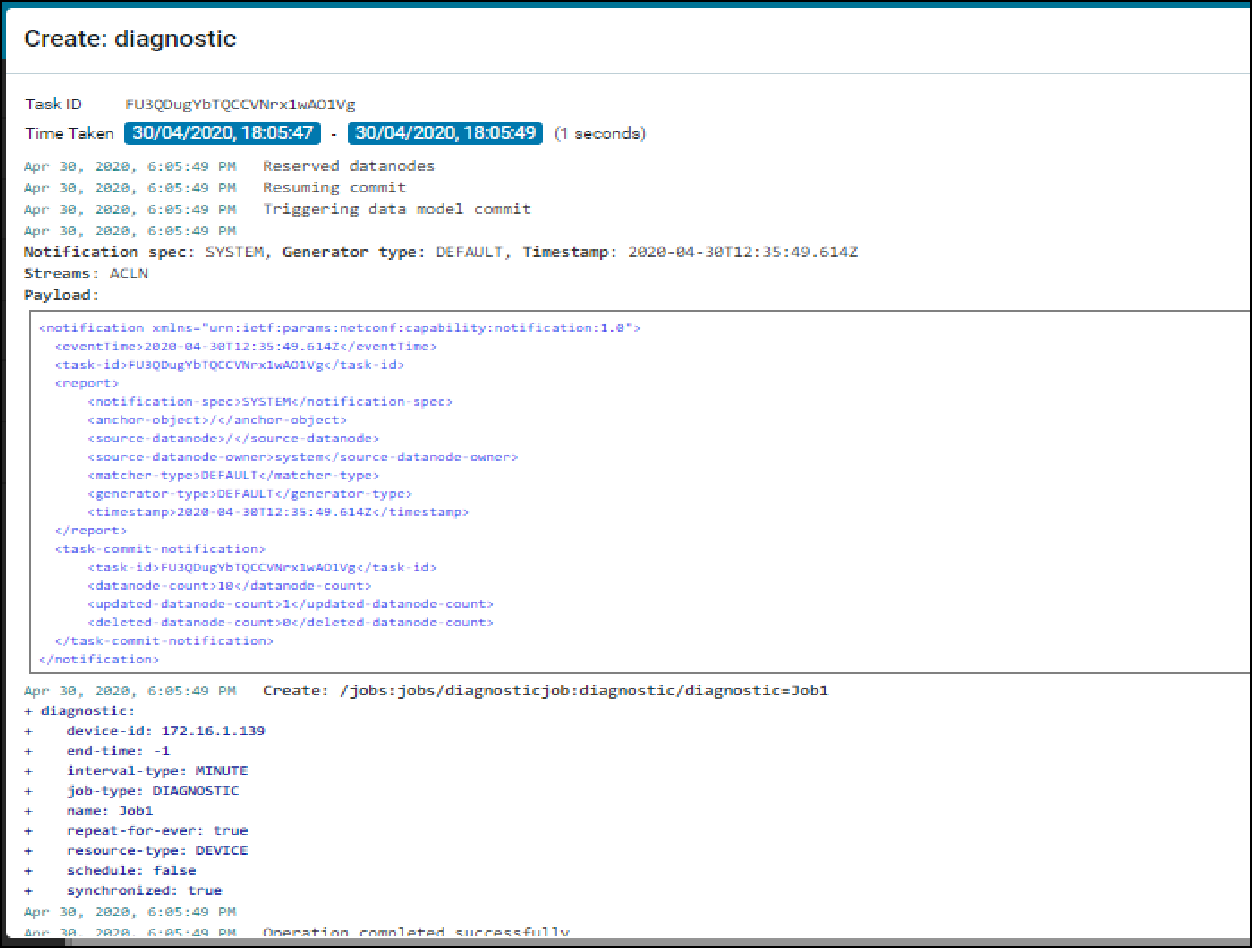

- Jobs action -> Run Diagnostics

- Jobs action -> Run Policy

- Jobs action -> Run Profile

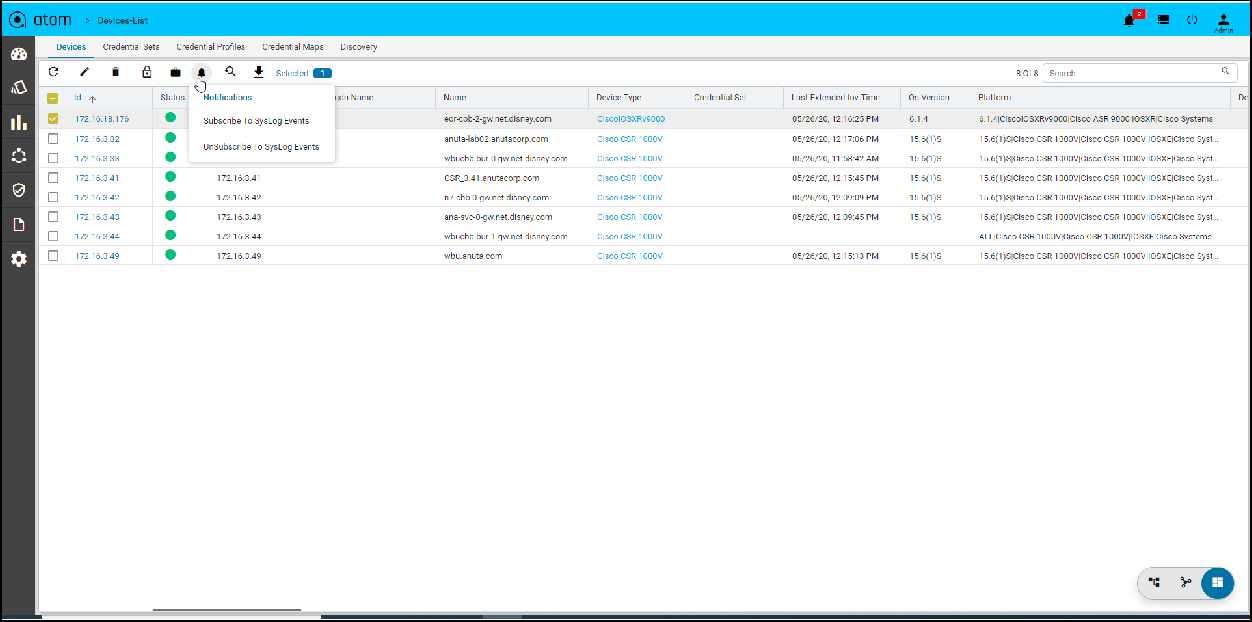

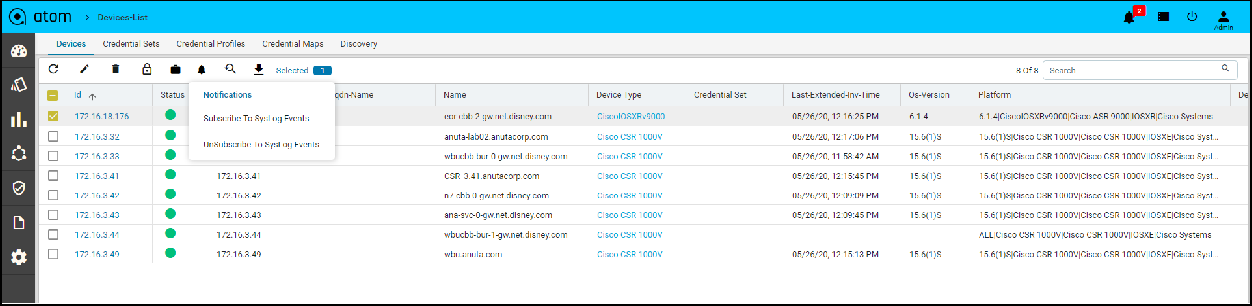

- Click on the Subscriptions to configure Syslog Subscription on the Devices

- This will result in ATOM being configured as a Syslog receiver and is a configuration change on the device.

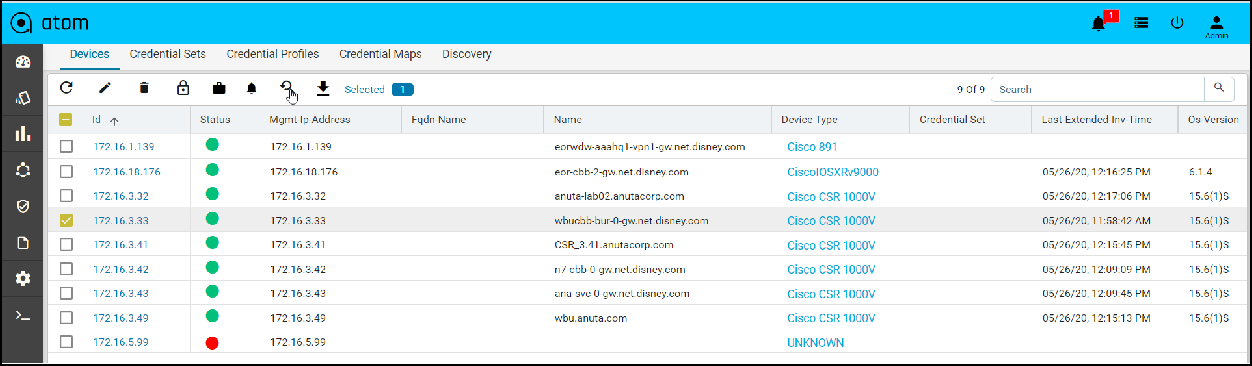

Exporting Device Information:

You can export the device information of the devices either in the XML or JSON format.

- Navigate to Resource Manager > Devices > Grid View(Icon) > Devices

- Select one or more devices

- Click the View/Download button and select either the XML or JSON

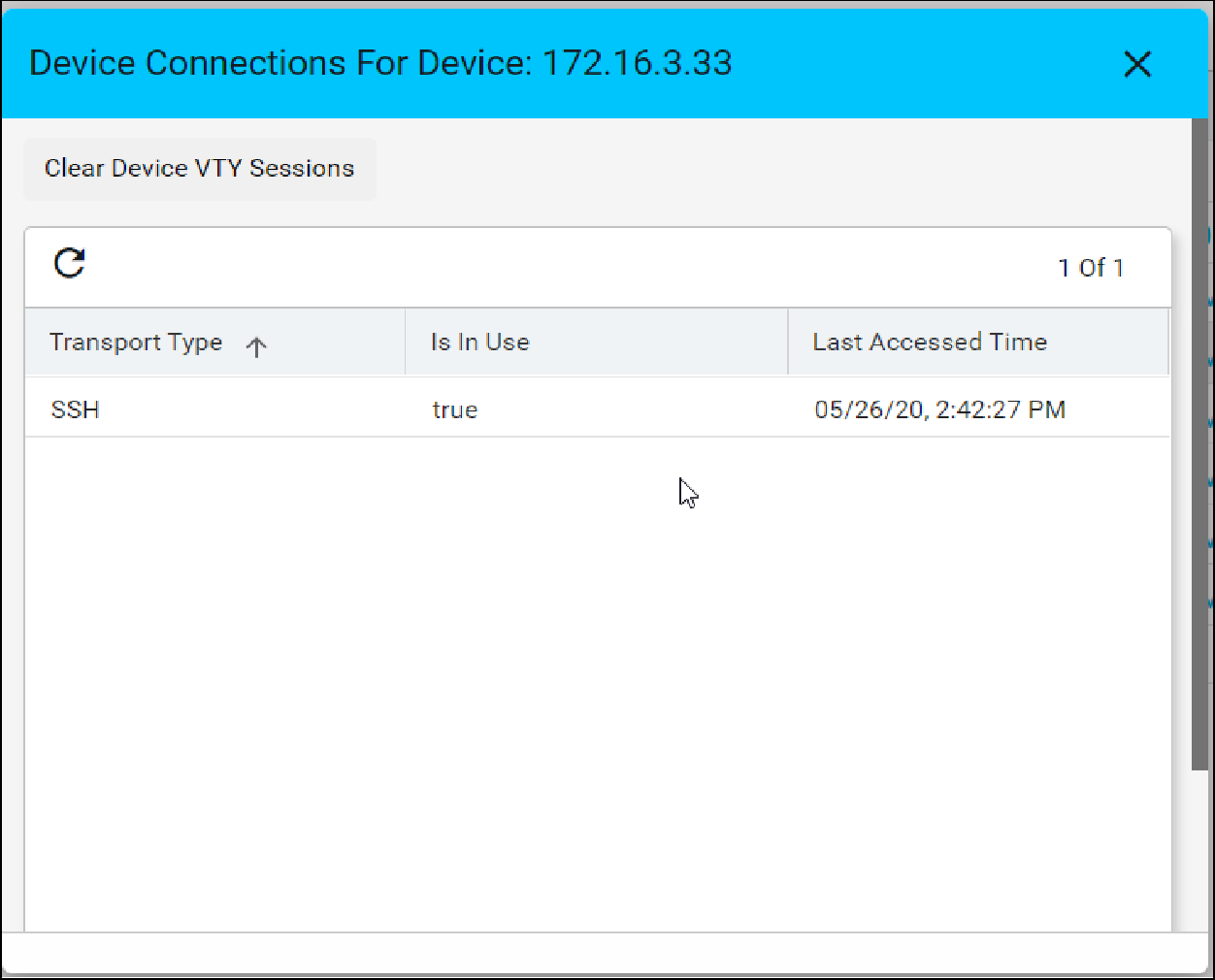

VTY Sessions

This is used to view the active vty sessions.

- Navigate to Resource Manager > Devices > Grid View(Icon) > Devices

- Select any device

- Click the VTY Sessions button

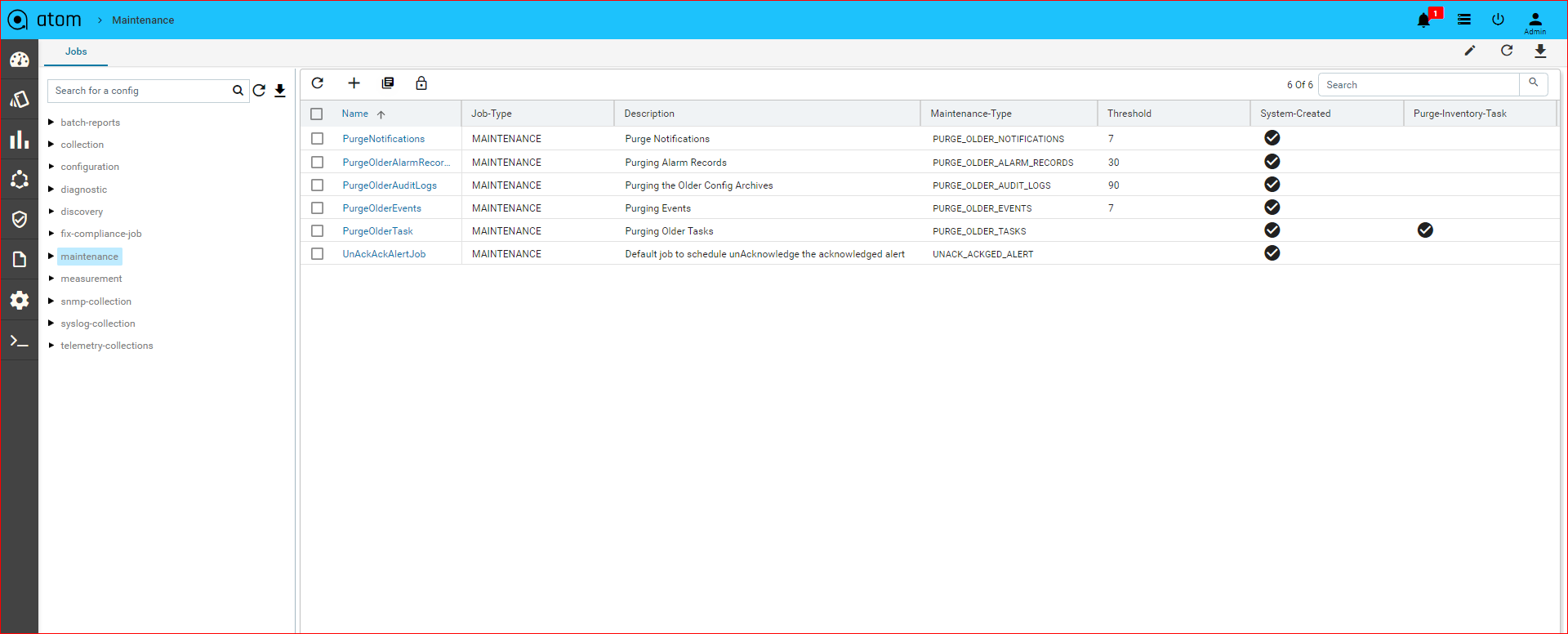

Default Jobs

Below are the jobs which run during the device onboarding process in the mentioned order.

-

- Device Inventory : It gathers the Platform, OS Version through SNMP and gets the Device to ONLINE. If the platform is not found in ATOM then check Platform guide on

- Device Extended Inventory : It collects the Serial Number, Interface performance, health, availability etc.,

- Device Diagnostics : ATOM will perform the reachability check through Ping, SNMP and Telnet/SSH if they are applicable.

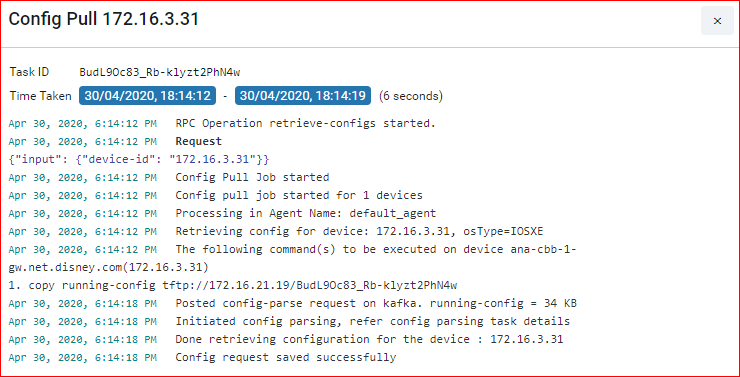

- Base Config Pull or Config Retrieval : It will retrieve the configuration and persist in the database. Configuration will be collected if the credential function is set to Config SNAPSHOT or any of the PROVISIONING functions. Build data model flag is used to parse the configuration into YANG entities from the specified config source snapshot.

Below is the example, to backup cli and netconf xml config and parse the xml version.

Config Type column will show us the source of config retrieval.

All the above operations can be customized for any platform as required and scheduled similar to other collection jobs.

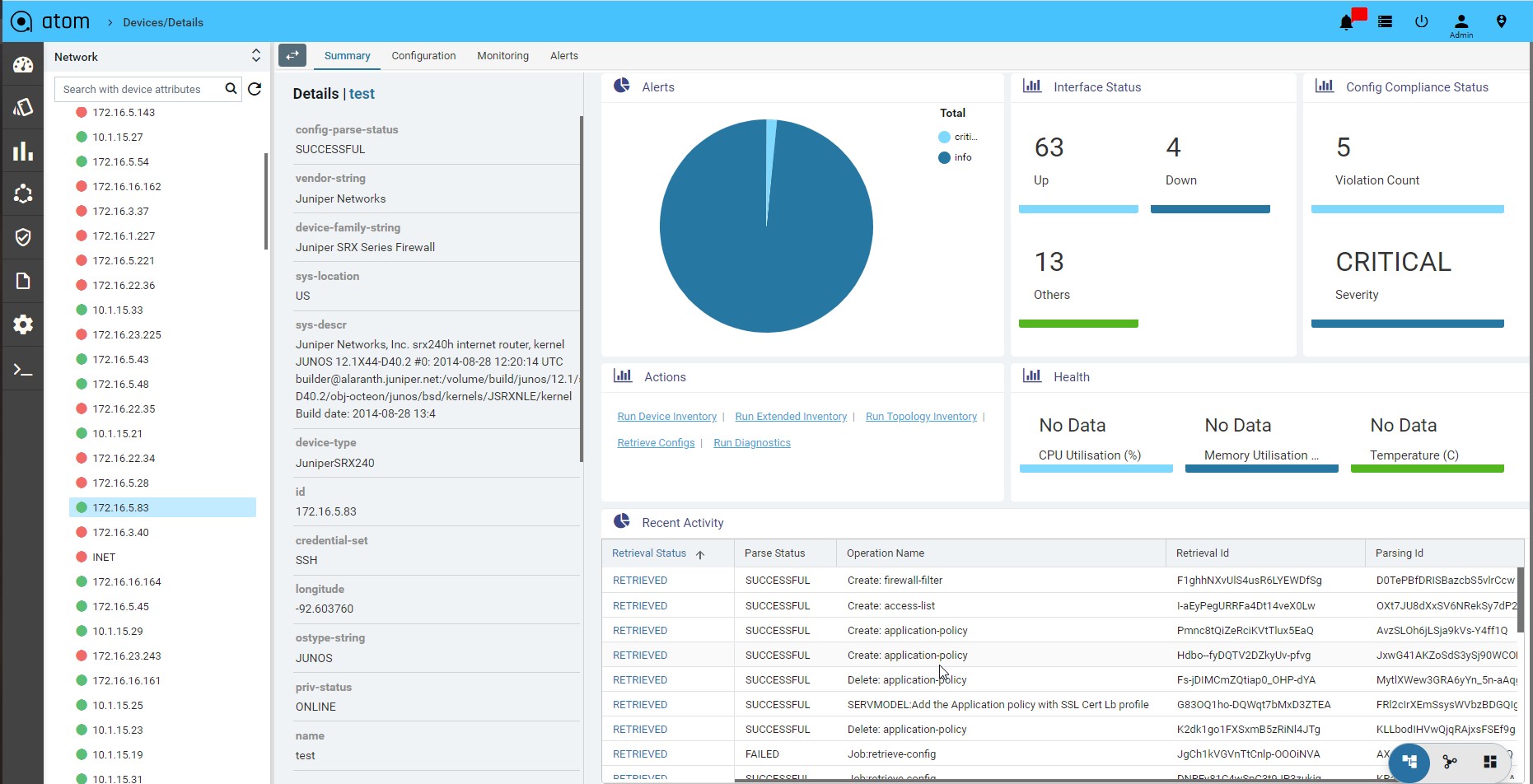

Device Summary

Device summary view provides a quick snapshot of important device attributes including Alarm summary, interface summary, recent configuration change history and health.

Device Summary also provides access to most popular device actions and quick links to frequently used activities.

- Navigate to Devices > select a device

- Click on the Device > Summary> to view the details associated with each attribute.

Configuration Management

Configuration Archive

ATOM Collects Network or Server Configuration through API, NETCONF or CLI over Telnet or SSH. ATOM Provides the following Configuration Management relations functions:

- Fetch, archive, and deploy device configurations

- Build Stateful Configuration Model for:

- Devices that support YANG Over NETCONF

- Devices where Device YANG Model is mapped to Concrete API or CLI

- Stateful Configuration – Support Create, Update, Delete

- Stateful Configuration – Configuration Drift & Transactions

- Compare Startup vs Running

- Compare Running vs Latest Archived

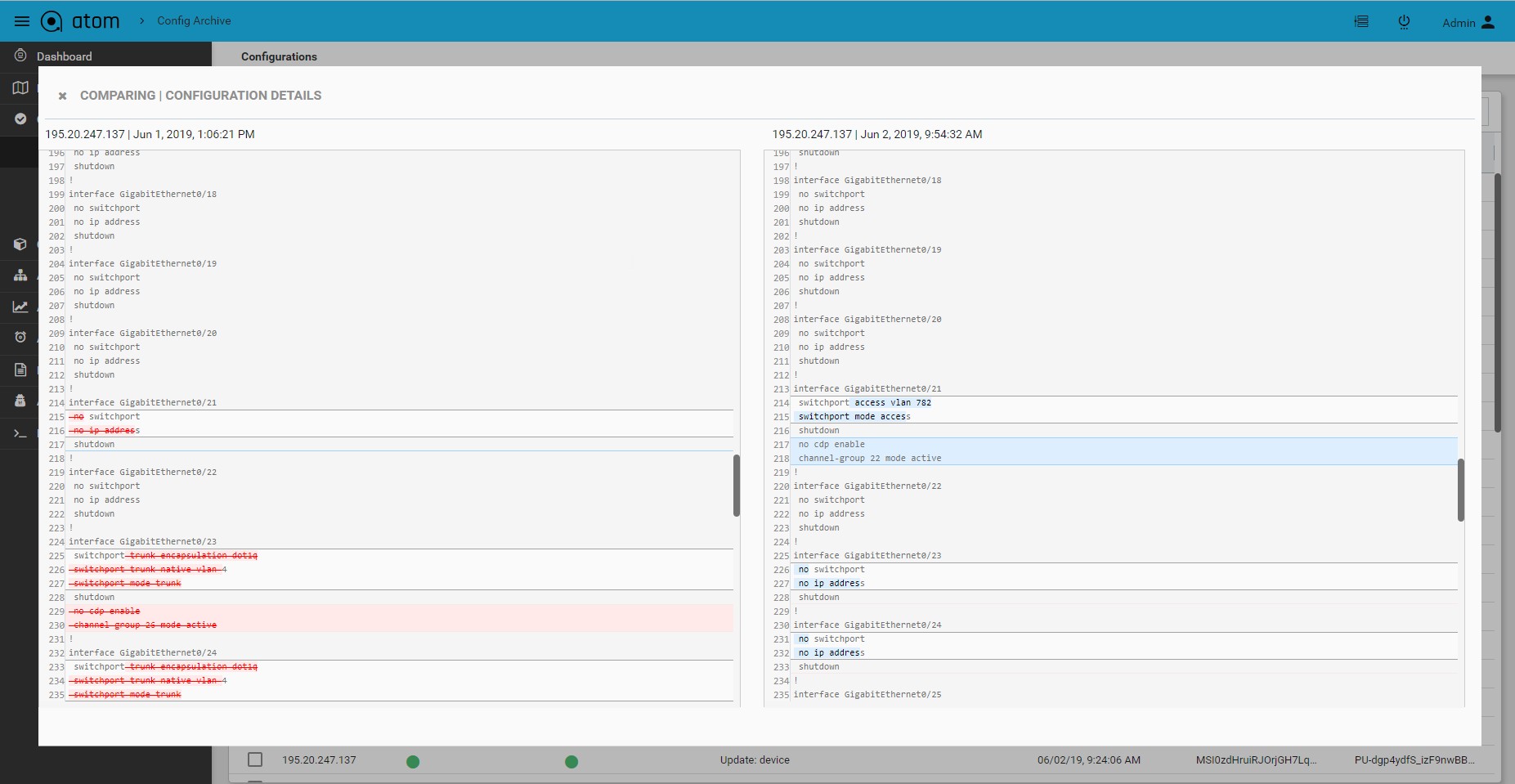

- Compare Two Versions of the Same Device

- Compare Two Versions of Different Devices

- Base Config vs Latest Version of Multiple Devices

- Search and generate reports on archived data

- Compare and label configurations, compare configurations with a baseline, and check for compliance.

- You can use the Baseline template to compare with other device configurations and generate a report that lists all the devices that are non-compliant with the Baseline template.

- You can easily deploy the Baseline template to the same category of devices in the network with dynamic inputs.

- You can import or export a Baseline template/Config archives.

- Set Up Event-Triggered Archiving

- Synchronize Running and Startup Device Configurations

- Deploy an External Configuration File to a Device

- Roll Back a Device’s Configuration To an Archived Version

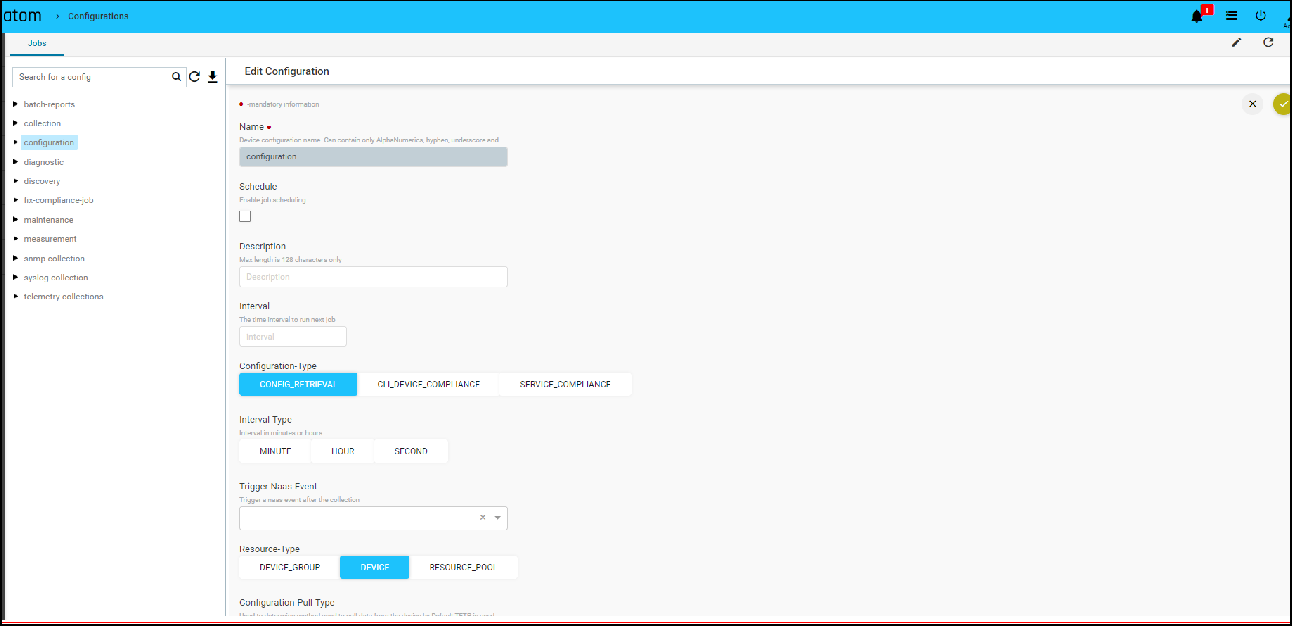

ATOM Collects Device configuration periodically as configured in Jobs->Configuration or upon a config change event from the device. To trigger configuration collection through config change notification, ATOM should be configured to receive config change notification through SNMP Trap or Syslog.

- To view Device(s) Configuration – Navigate to Devices > select a device(s)

- Click on the “Configuration > Archive” Tab

- Select an Entry in the Grid

- In Details view – CLI/XML Configuration is displayed

Configuration Diff:

Configuration differences across various revisions can be viewed by selecting two versions from the Configuration archive grid.

- To view Device(s) Configuration – Navigate to Devices > select a device(s)

- Click on the “Configuration > Archive” Tab

- Search configuration grid using tags or other attributes

- Select two configuration revisions

- Click on “Compare” to launch configuration diff view

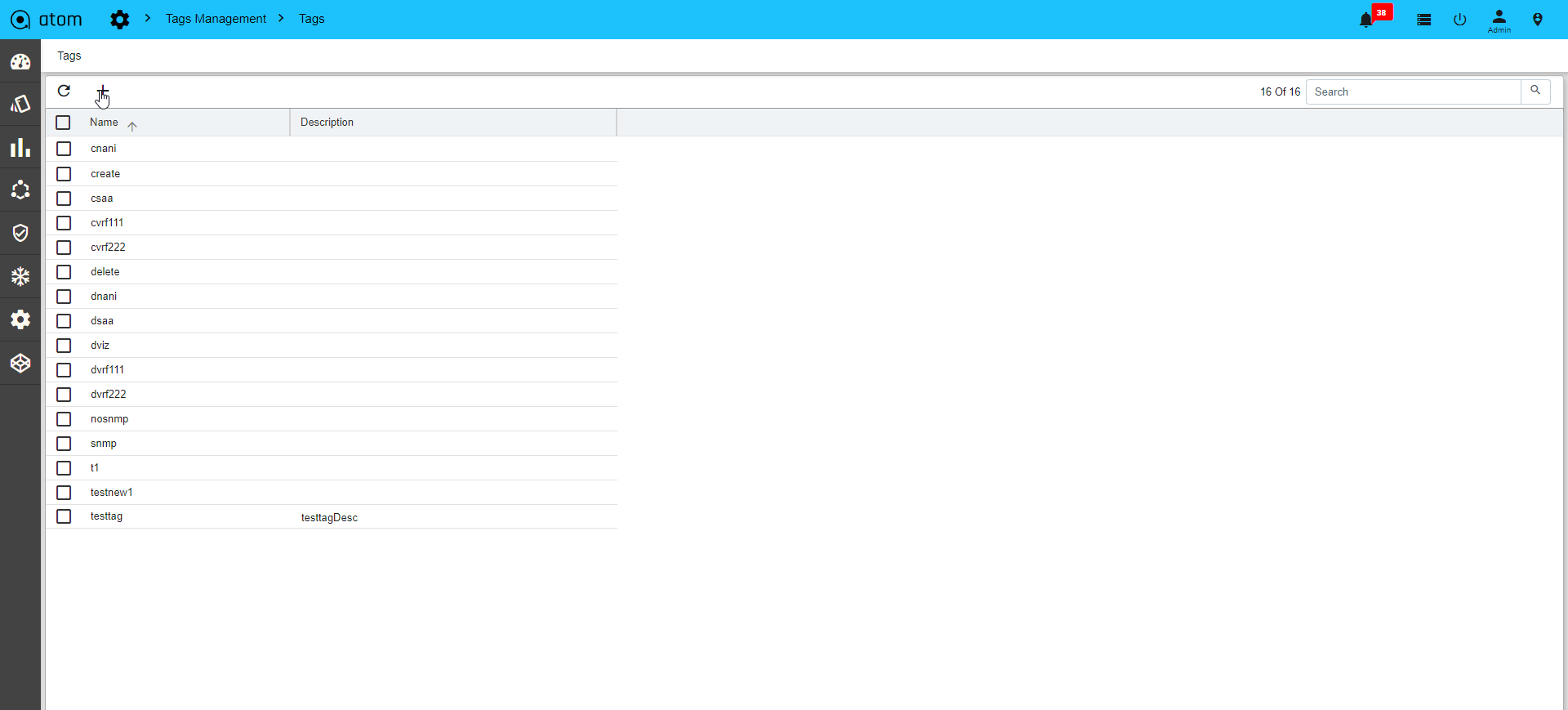

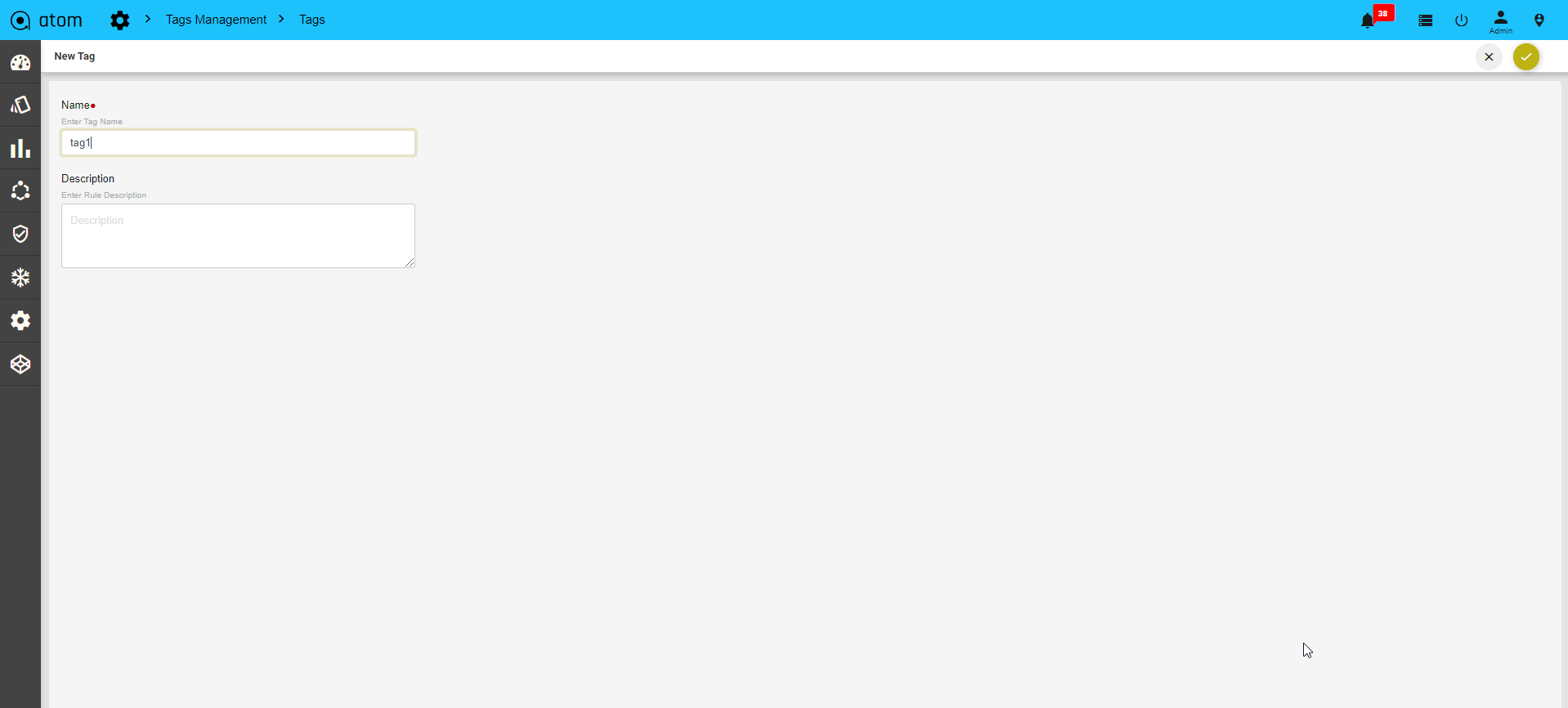

Configuration Tagging:

Configuration version can be tagged using user provided flags or tags. This can be used for filtering and comparison of configuration revisions.

- To view Device(s) Configuration – Navigate to Devices > select a device(s)

- Click on the “Configuration > Archive” Tab

- Select an entry from the configuration revision grid

- Click on “Update Tags”

- Enter one or more tags in the lower right of the configuration details view

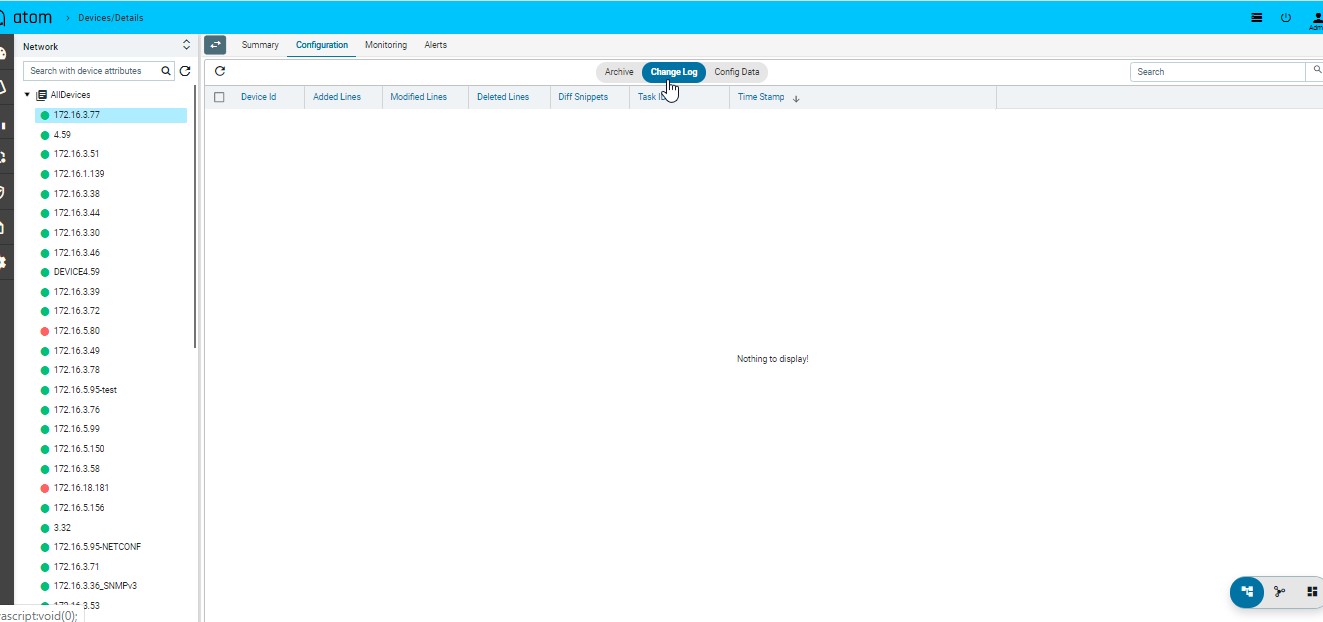

Configuration Change Log

Configuration archive provides full comparison of device configuration changes across revisions. ATOM provides another view to see only config modifications only.

This can be enabled from Admin Settings.

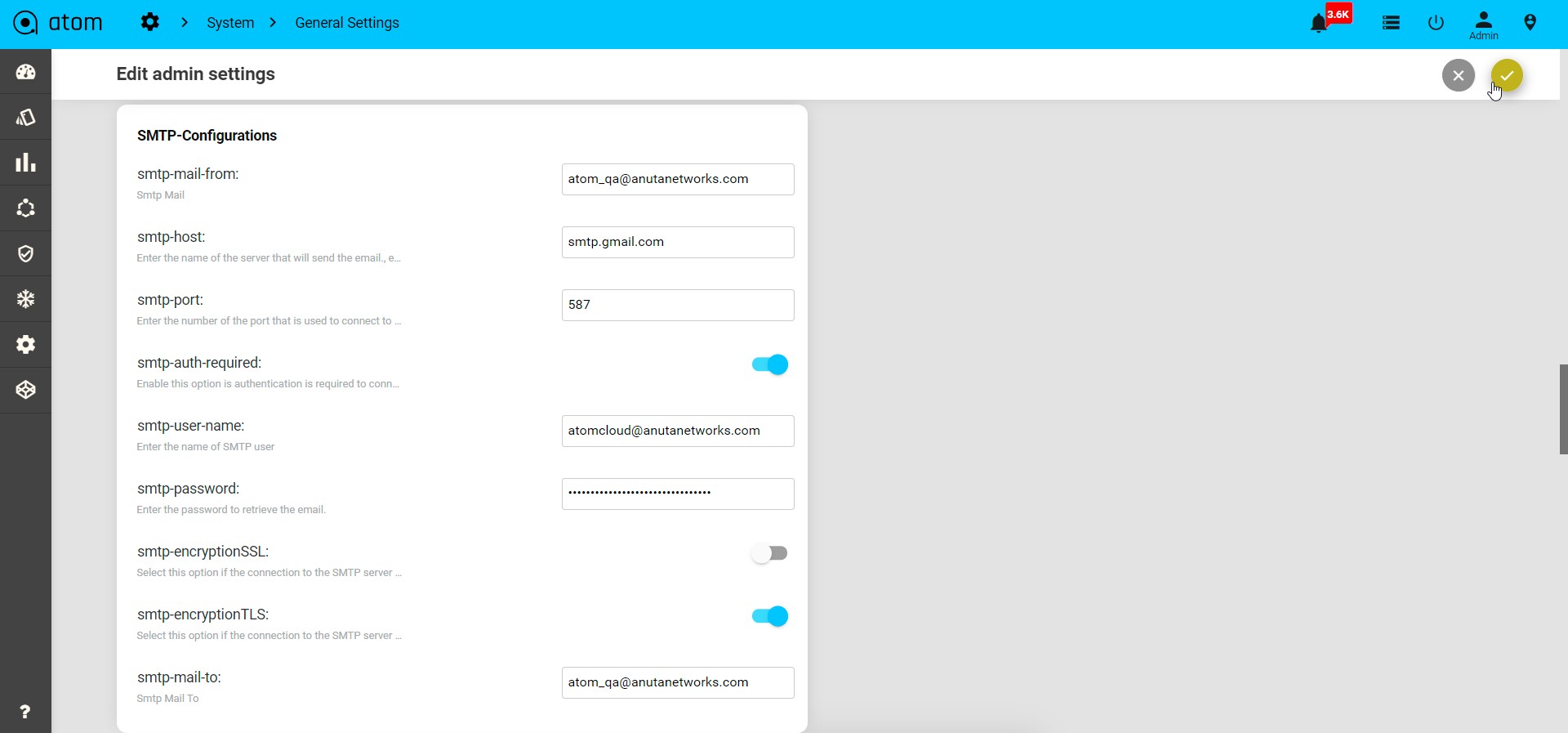

- Administrations > System> General settings> Admin settings

- Edit “Admin Settings”

- Set “generate-config-inventory-event” to true Config change history for devices can be tracked as follows:

- Navigate to Devices (Tree View) > select device(s)

- Click on the “Configuration” Tab

- Click on the “Change Log” Tab

Configuration Change Management – Create/Update/Delete

Configuration archive discussed in the “Configuration Management” section provides a Read-Only view of Device CLI configuration. Additionally, ATOM provides Model driven configuration for create, update & delete. This includes the following:

- Discovery of Device configuration

- Show a tree view of the configuration

- Create/Edit/Delete of Device configuration Configuration Editing can be done from “Config Data” view:

- To view Device Configuration – Navigate to Devices > select a device

- Click on the Configuration> Config Data Tab

- From the Tree view select a node and possible operations are shown on the right hand side

Note: Create/Edit/Delete from here will send configuration instructions to the device. ATOM should be set to interactive mode from the Administration page.

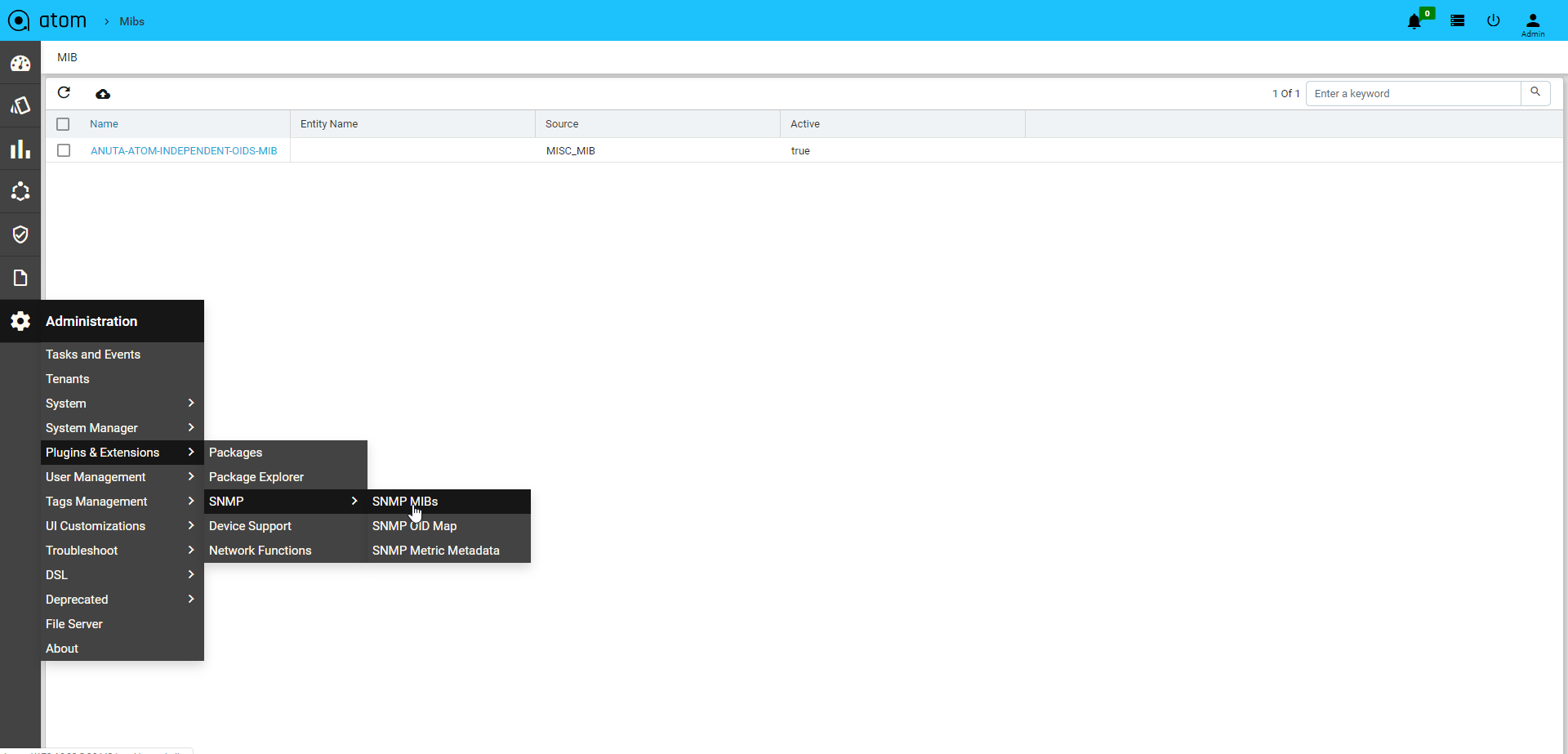

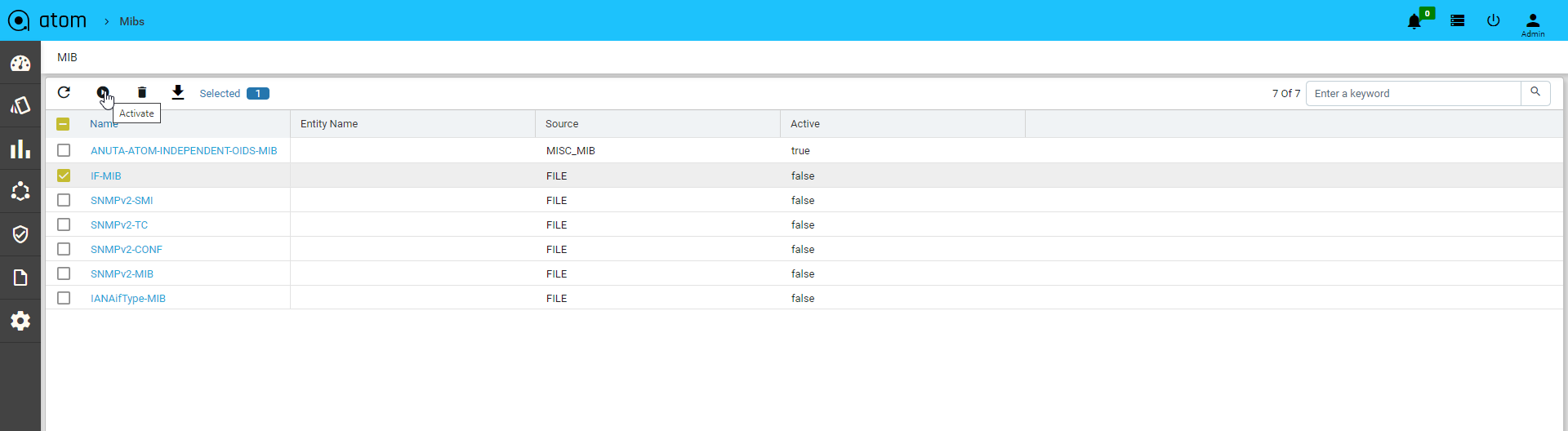

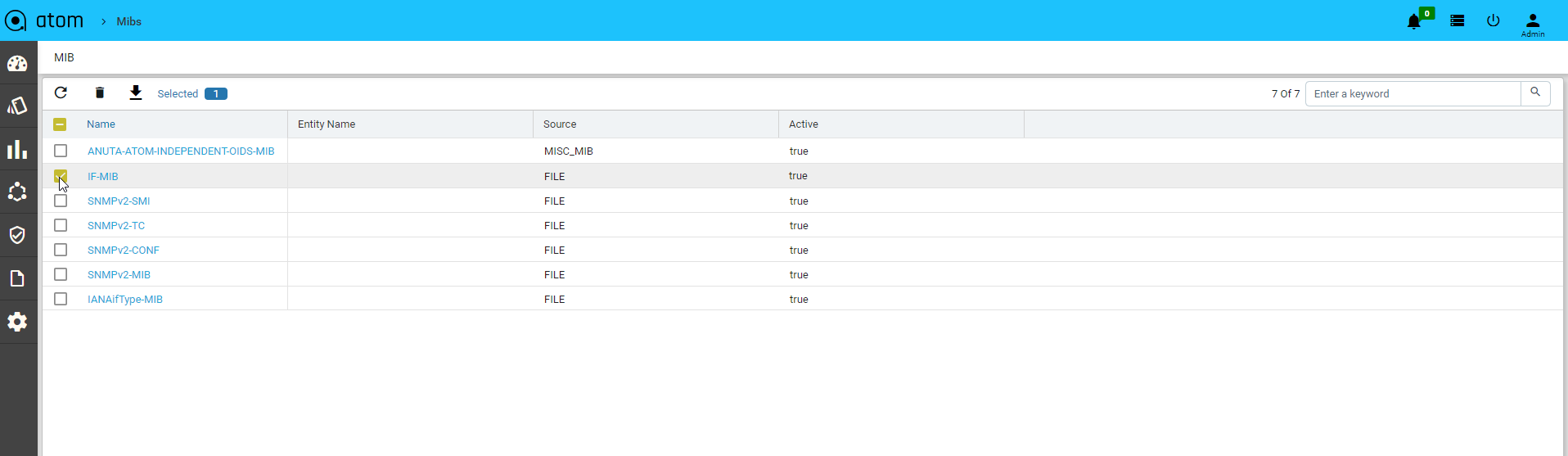

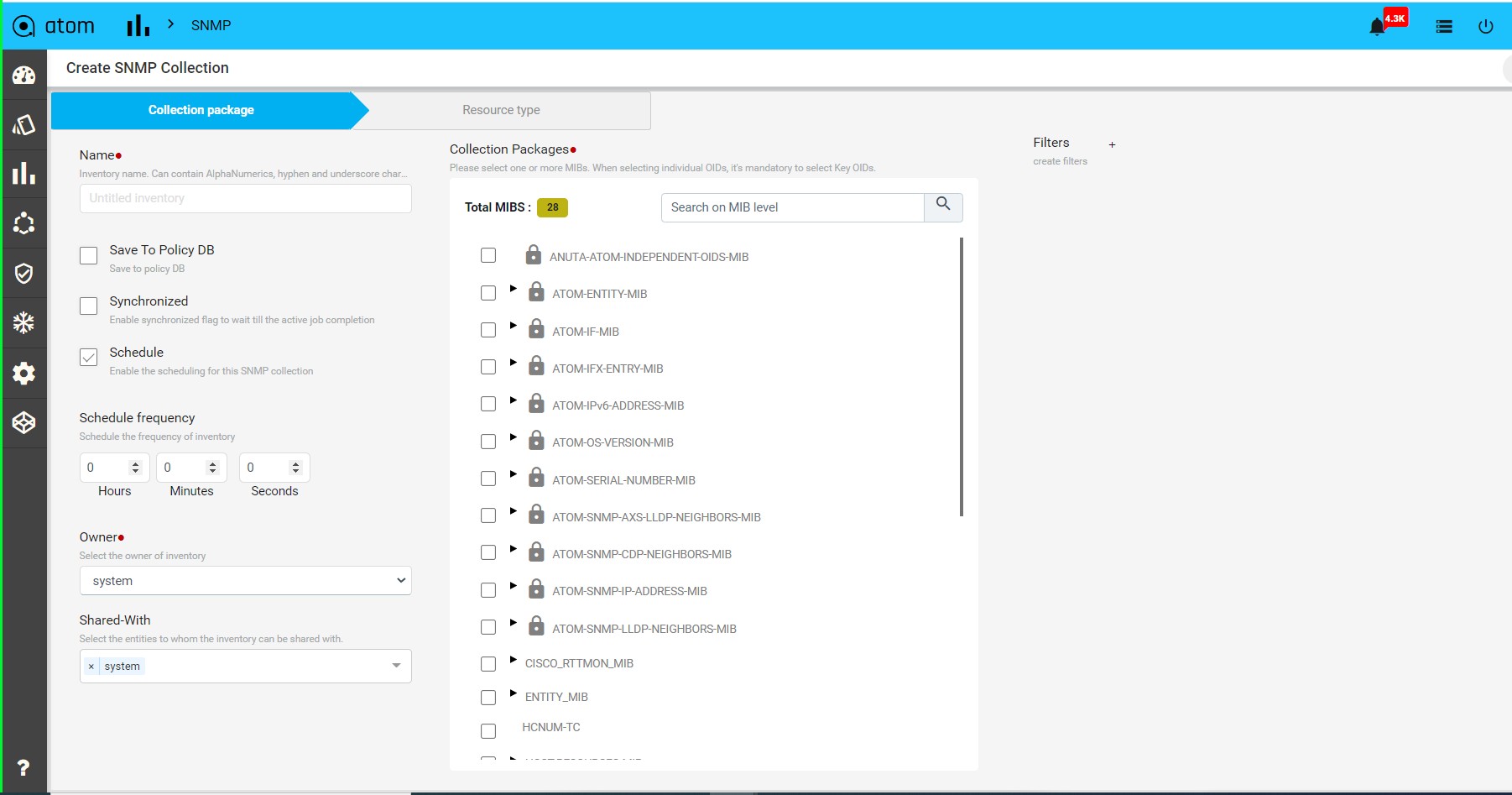

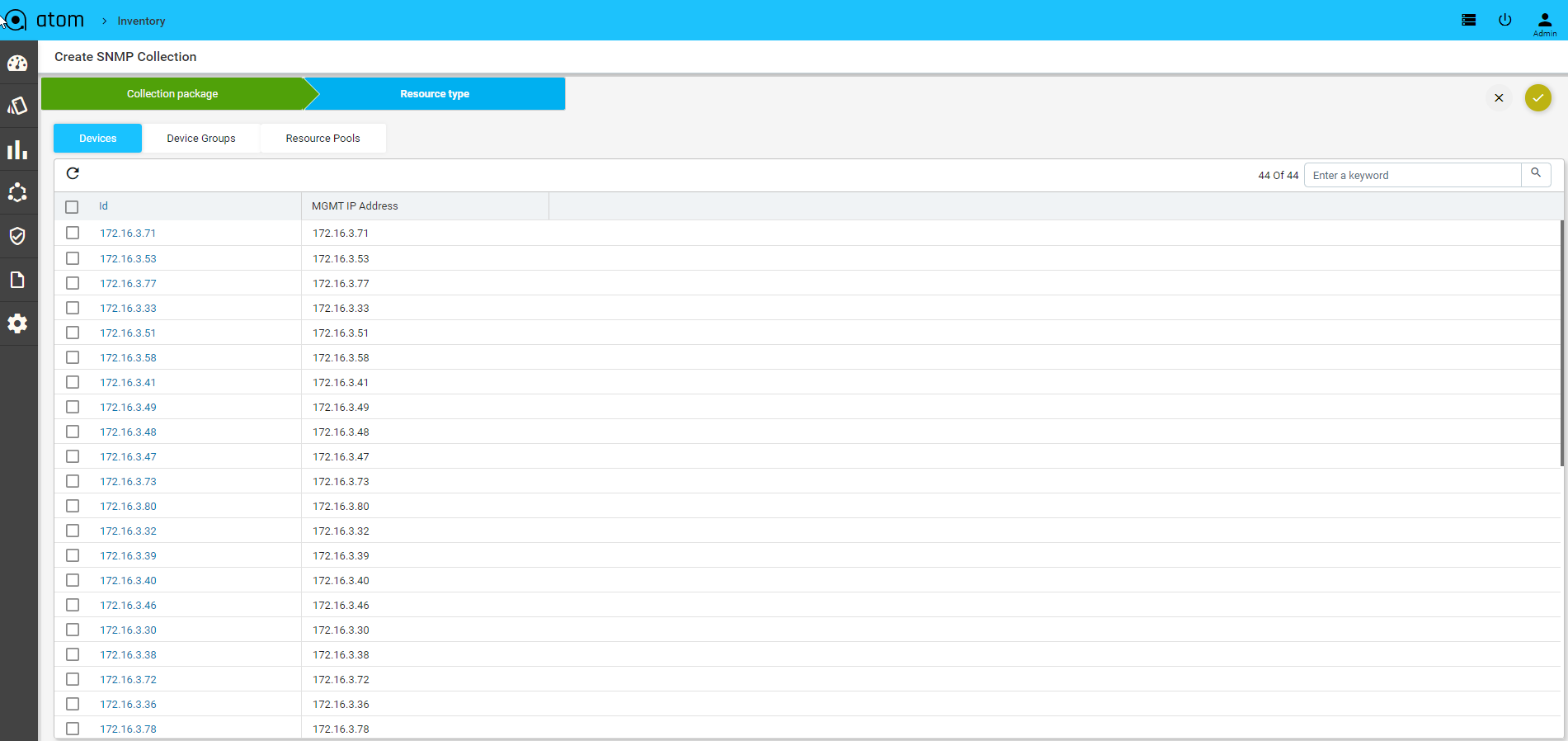

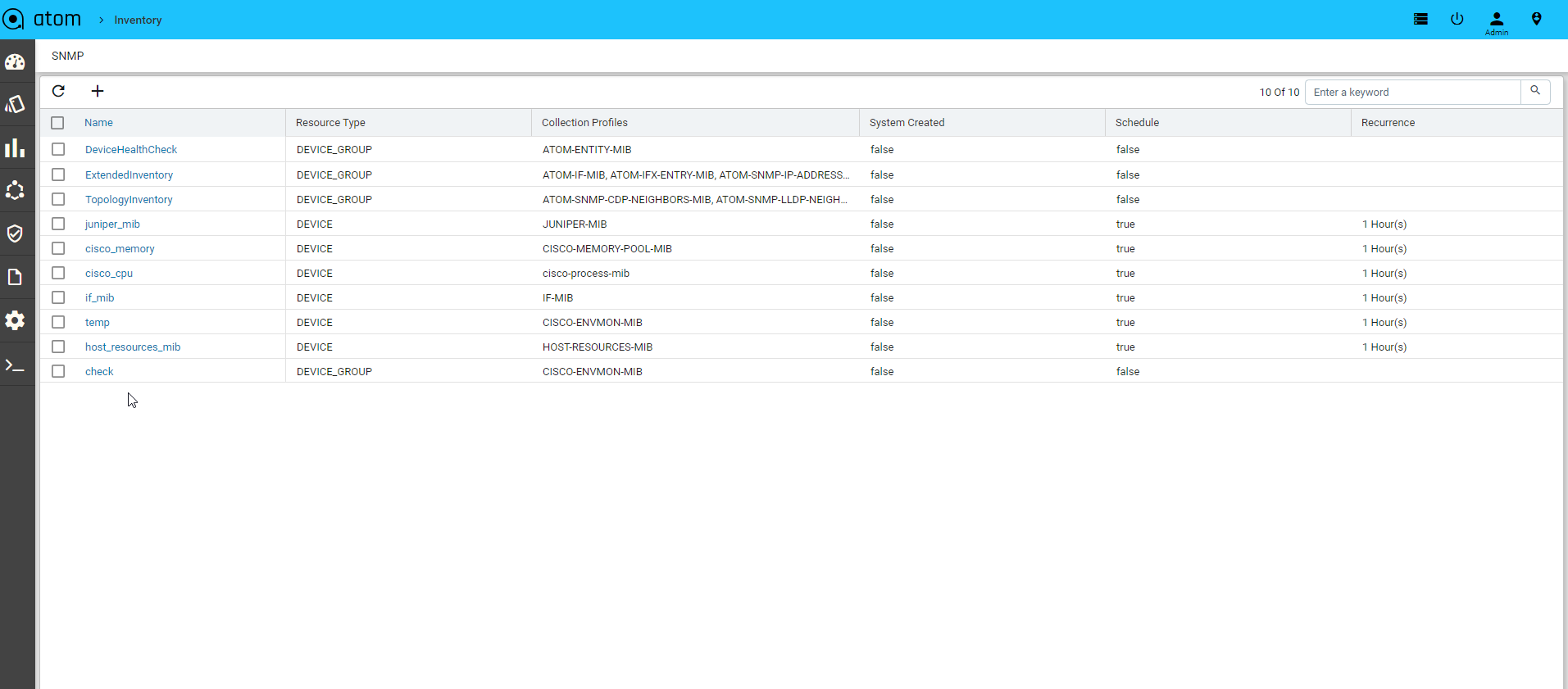

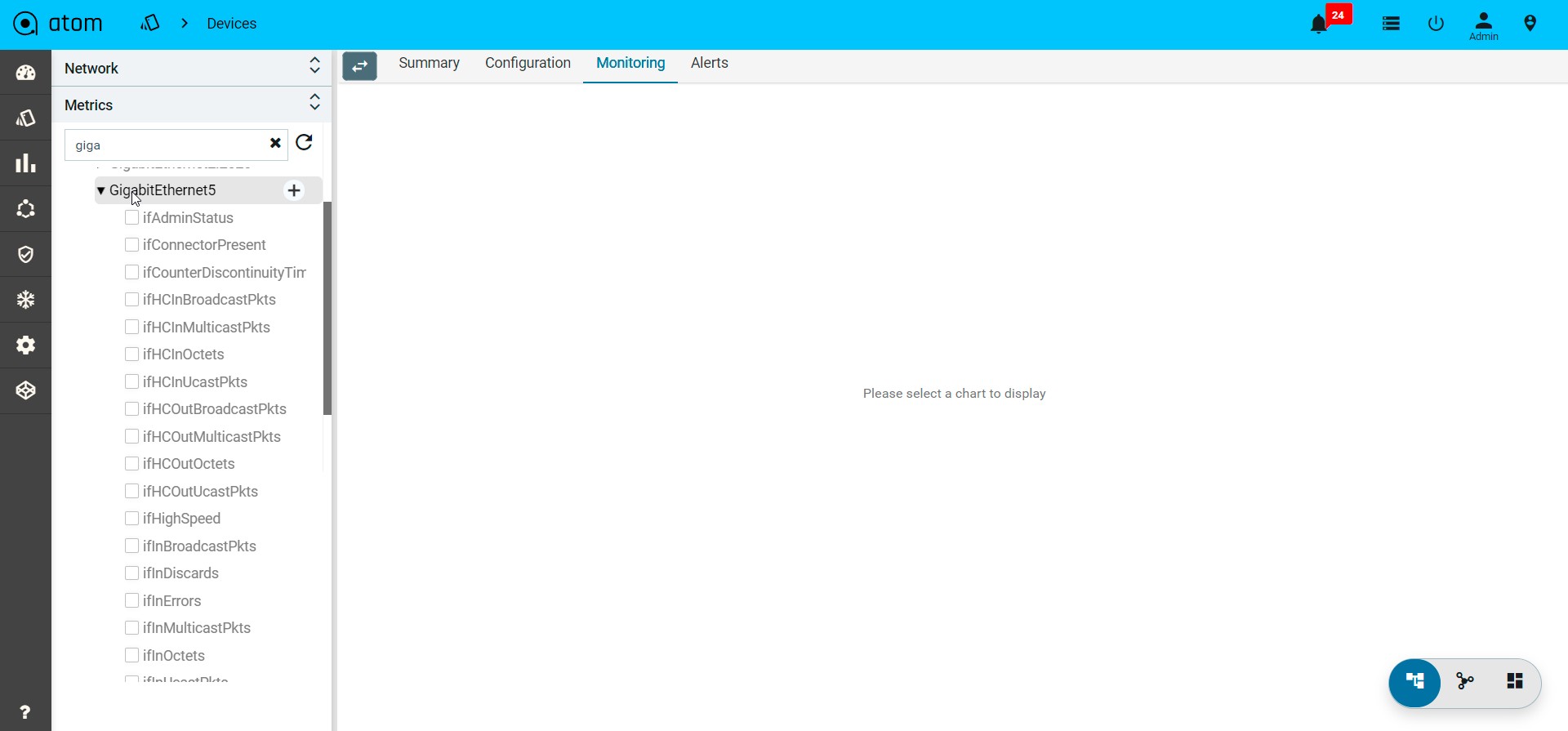

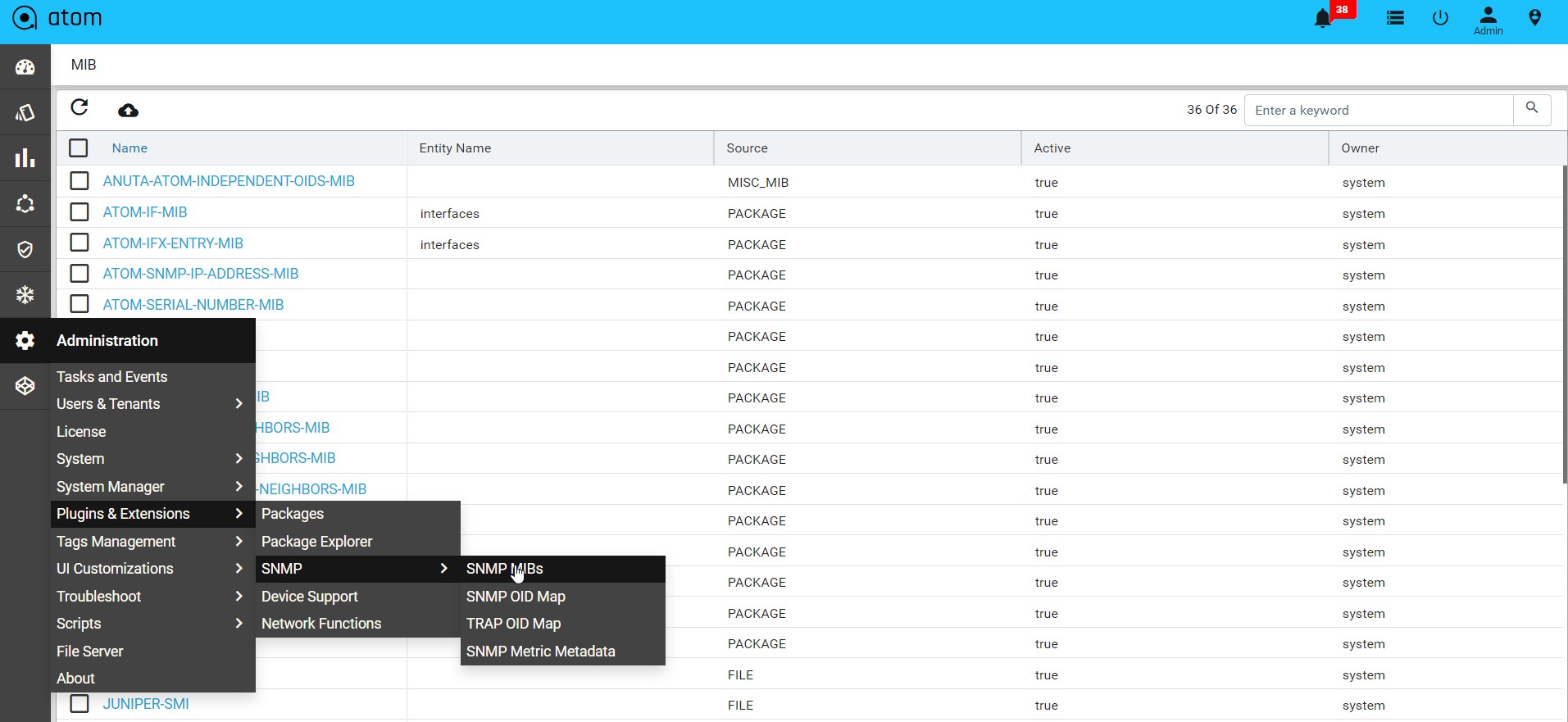

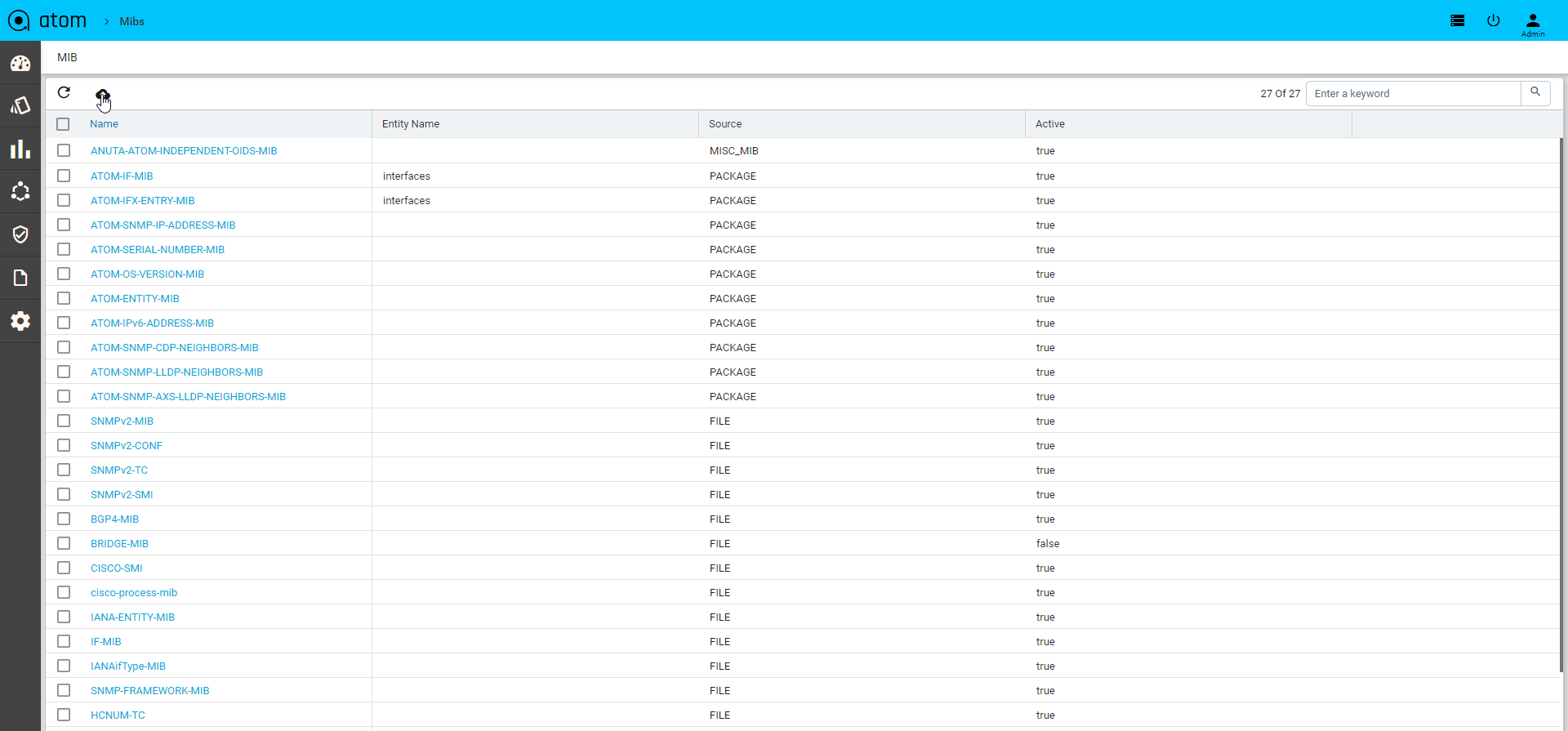

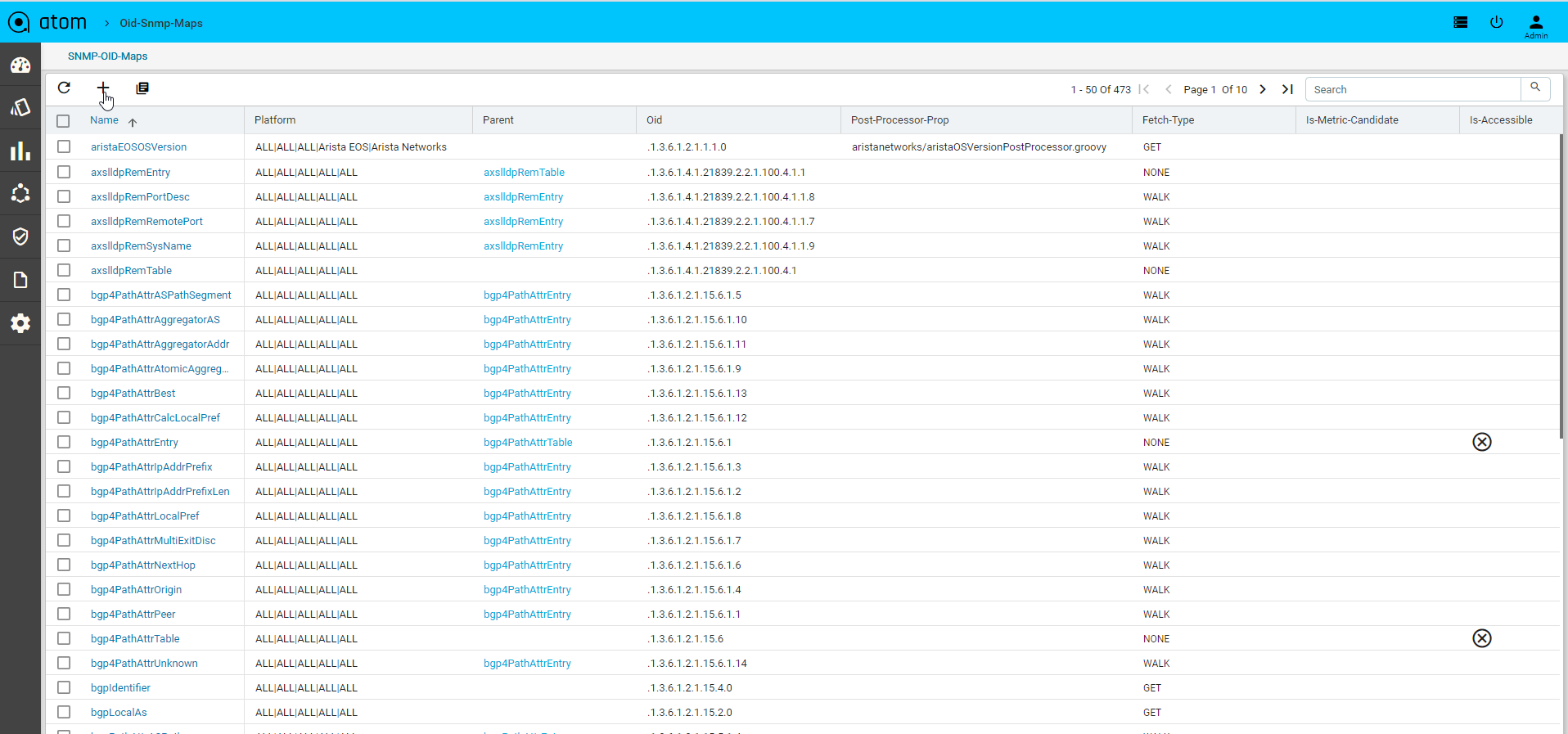

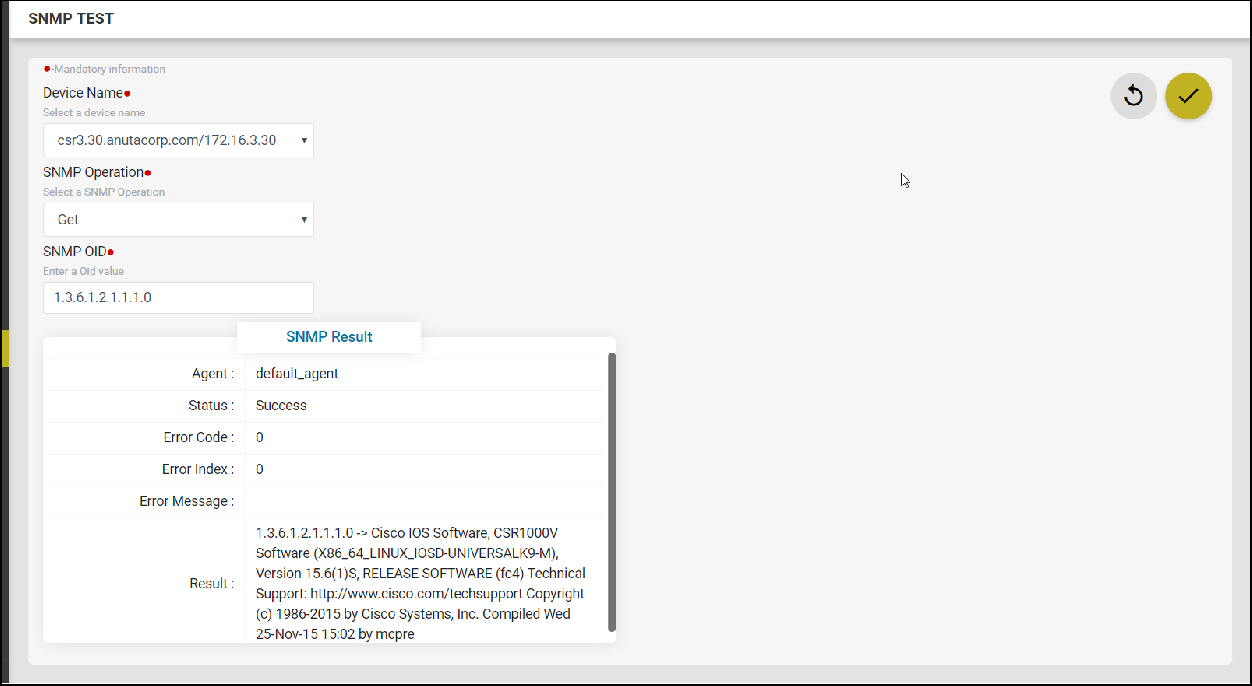

Device Inventory (SNMP)

All Device inventory collected through SNMP Collection job is shown in Entities view. Following provides guidance on

- To view Device Configuration – Navigate to Devices > select a device

- Click on the “Monitoring” Tab

- Collected data will be shown under MIB-name

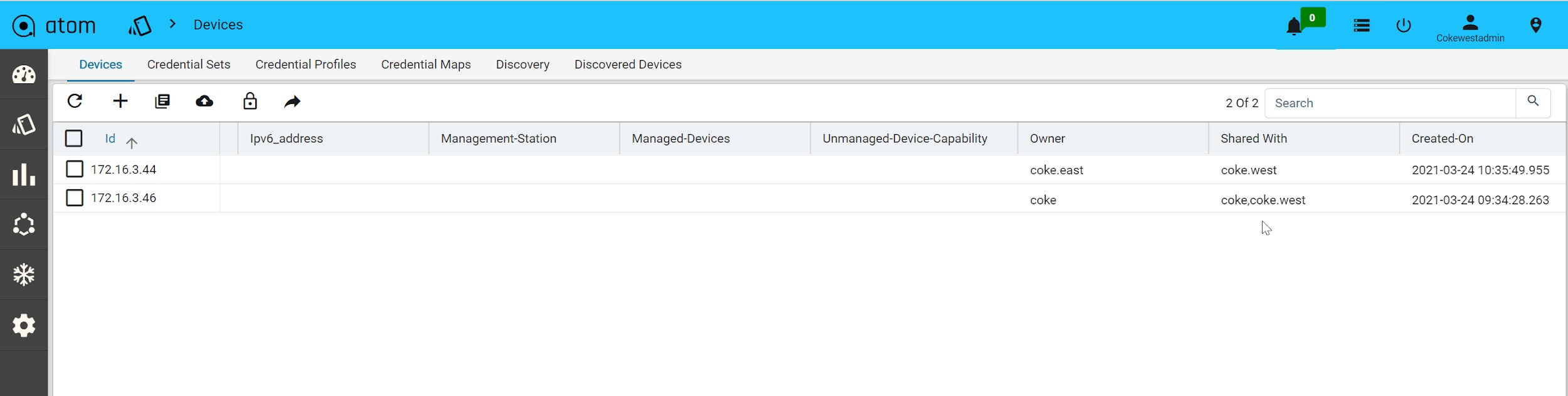

Adding Unmanaged devices

Some devices, with feature capabilities such as L2 only, L2 and L3 both, L3 only, can be manually added to the Devices table. Such devices are not managed by ATOM and it does neither generate configurations nor push any configurations on them. Multiple unmanaged devices can be on-boarded into the resource pool and each such device can be used during service instantiation.

To add an Unmanaged device, do the following:

- Navigate to Resources > Devices > Add Device

- In the Create device screen, select the Unmanaged option Enter values in the following fields:

- Host Name: Enter a name for the device

- Device Capability: Select one or more capabilities from the available list.

For example, if you want the device to behave as a L3 device, choose L3Router

from the list.

-

- Device Type: Select the category of the device that it belongs to. 3. Add network connections between the null device and it’s peer device as follows:

- Source Interface: Select the interface, on the null device, from which the network connection should originate.

- Peer Device: Select the device, managed by ATOM, as the peer device.

- Peer Interface: Select the interface on the peer device where the network connection should terminate.

Adding Dummy devices

In some scenarios, you may have to create devices for which configurations are created as a part of a service but are not pushed to any actual device. These logical entities are termed Dummy Devices and they do not have any real world counterparts with a pingable IP address.

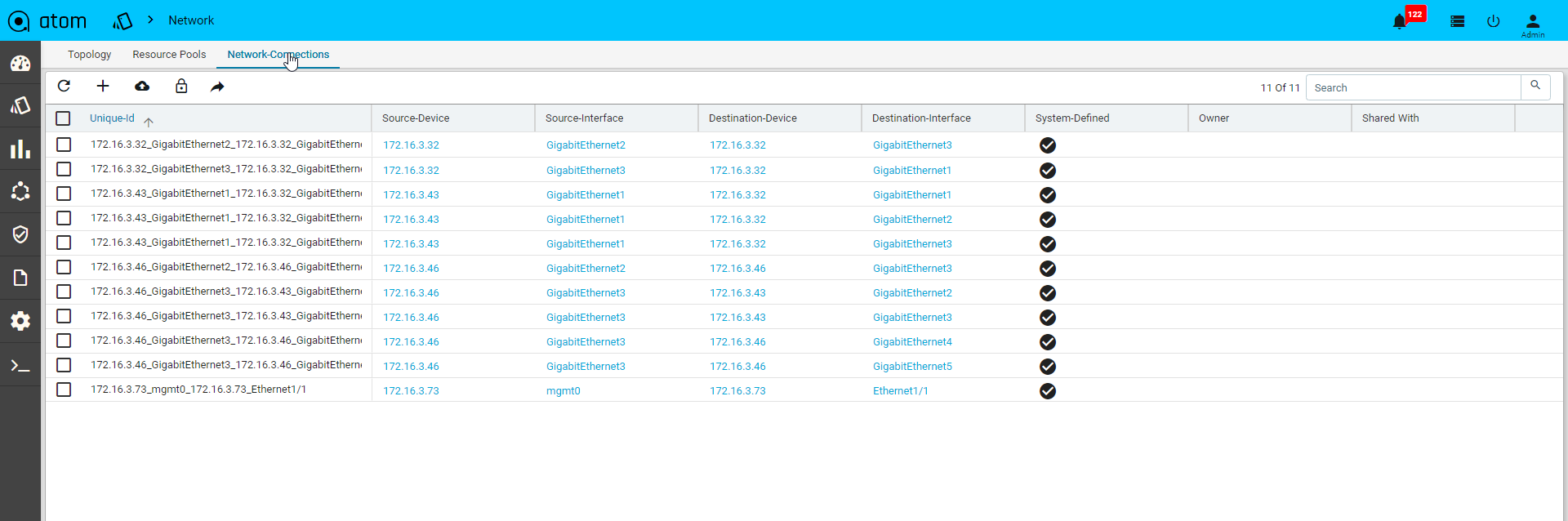

Network Topology

Network Connections

Network connectivity is discovered between devices using Layer 2 discovery protocols – CDP & LLDP. In cases where CDP/LLDP is not supported or enabled on the device, Network connections can be added Manually using Network connections .

NOTE: Network connections should be added manually between the devices that have LACP port channels configured on them.

To add a Network Connection, do the following:

- Go to Resource Manager > Network

- Click Network Connections and click Add.

- In the Create Network Connection screen, enter the values in the following fields:

- Unique ID: This is a system-generated ID for a network connection.

- Source Device: Select a Device (origin of the network connection)

- Source Interface : Enter a name for the interface on the source device

- Destination Device: Select a Device (the end of the network connection)

- Destination Interface: Enter a name for the interface on the destination device. A Network Connection is established between the interfaces of the source and the destination devices.

Network Topology

All the devices for each of which network connections are available are displayed in the topology view.

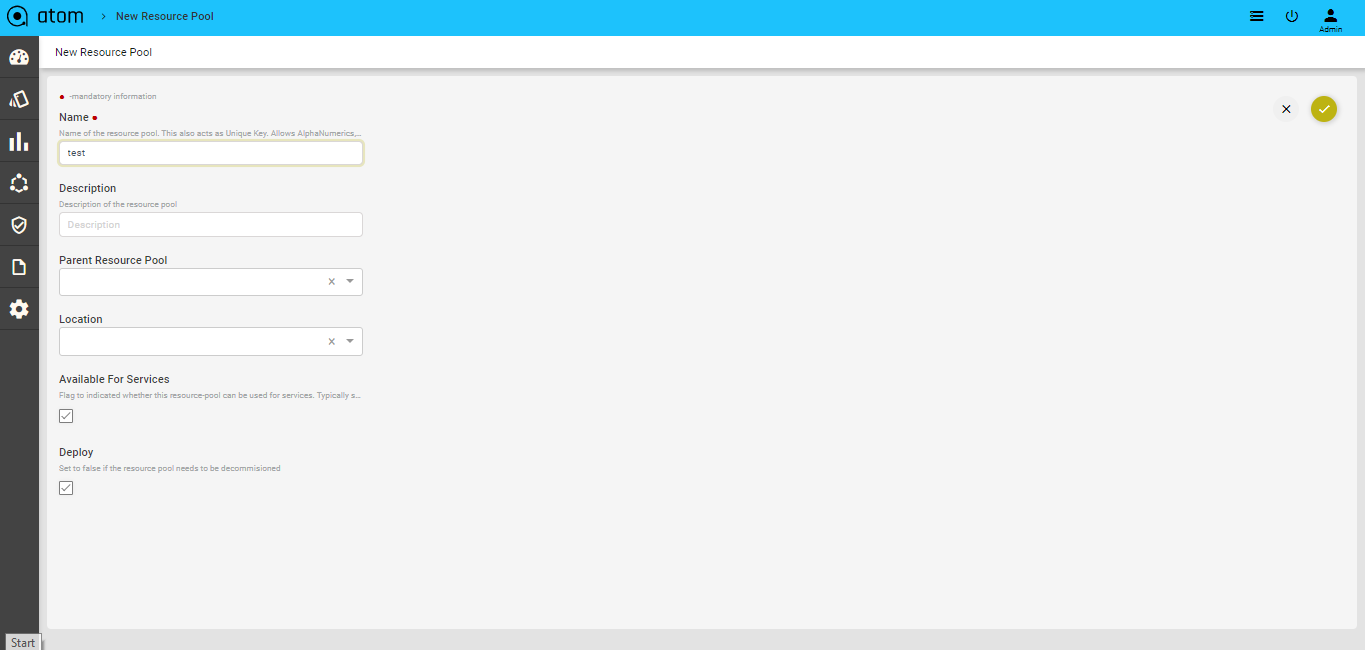

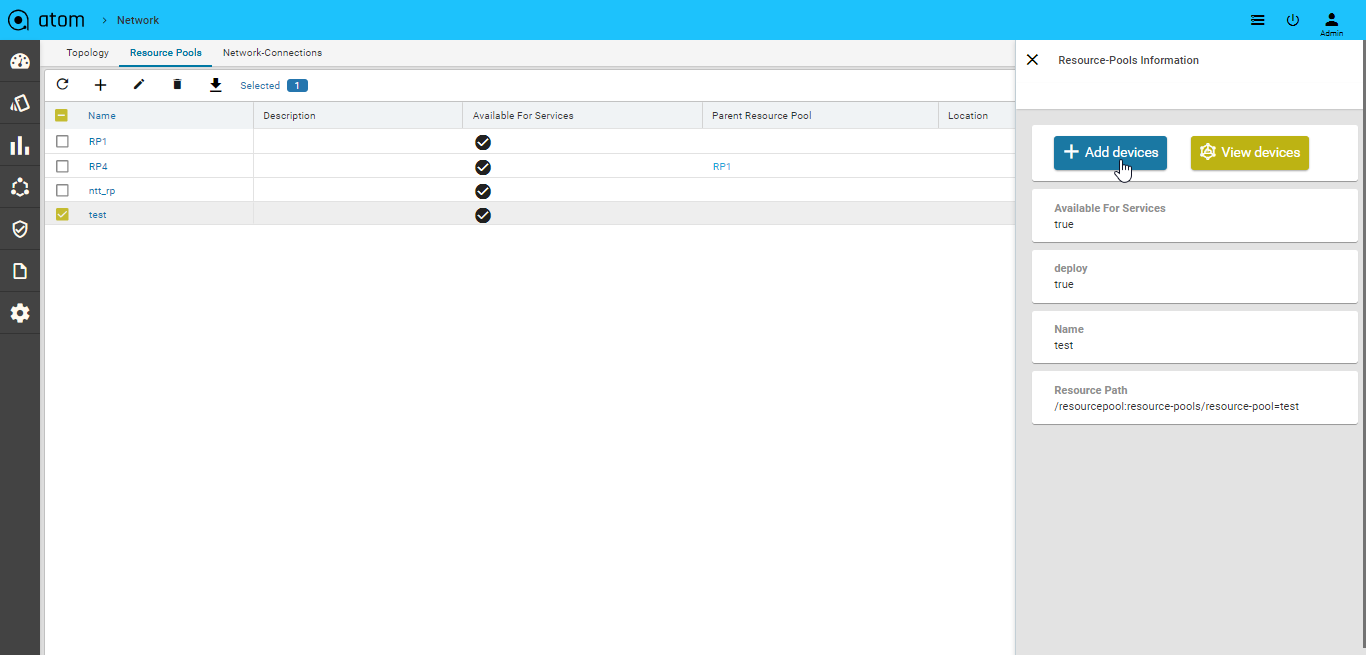

Resource Pools

A resource pool is a logical abstraction for flexible management of resources managed by ATOM. A resource pool can contain child resource pools and you can create a hierarchy of shared resources. The resource pools at a higher level are called parent resource pools. Users can create child resource pools of the parent resource pool or of any user-created child resource pool. Each child resource pool owns some of the parent’s resources and can, in turn, have a hierarchy of child resource pools to represent successively smaller units of resources.

Resource pools allow you to delegate control over the resources of a host and by creating multiple resource pools as direct children of the host, you can delegate control of the resource pools to tenants or users within the organizations.

Using resource pools can yield the following benefits to the administrator:

- Flexible hierarchical organization

- Isolation between pools, sharing within pools

- Access control and delegation Creating a Resource Pool

- Navigate to Resource Manager > Network > Resource Pools

- In the right pane, click the Add Resource Pool button to create a Resource Pool

- In the Create Resource Pool, enter values in the fields are displayed: .

- Name: Enter a name for the resource pool

- Description: Enter some descriptive text for the created resource pool

- Available for Services: Select this option if the resource pool can be used for creating services.

- Parent Resource Pool: Select a resource pool that should act as the parent for this resource pool that is being created.

- Location: Select the name of the site or the geographical location where this resource pool should be created. See the section, “Locations” for more information about creating Locations and Location types.

- Deploy: Select this option if the resource pool should be deployed or used in services.

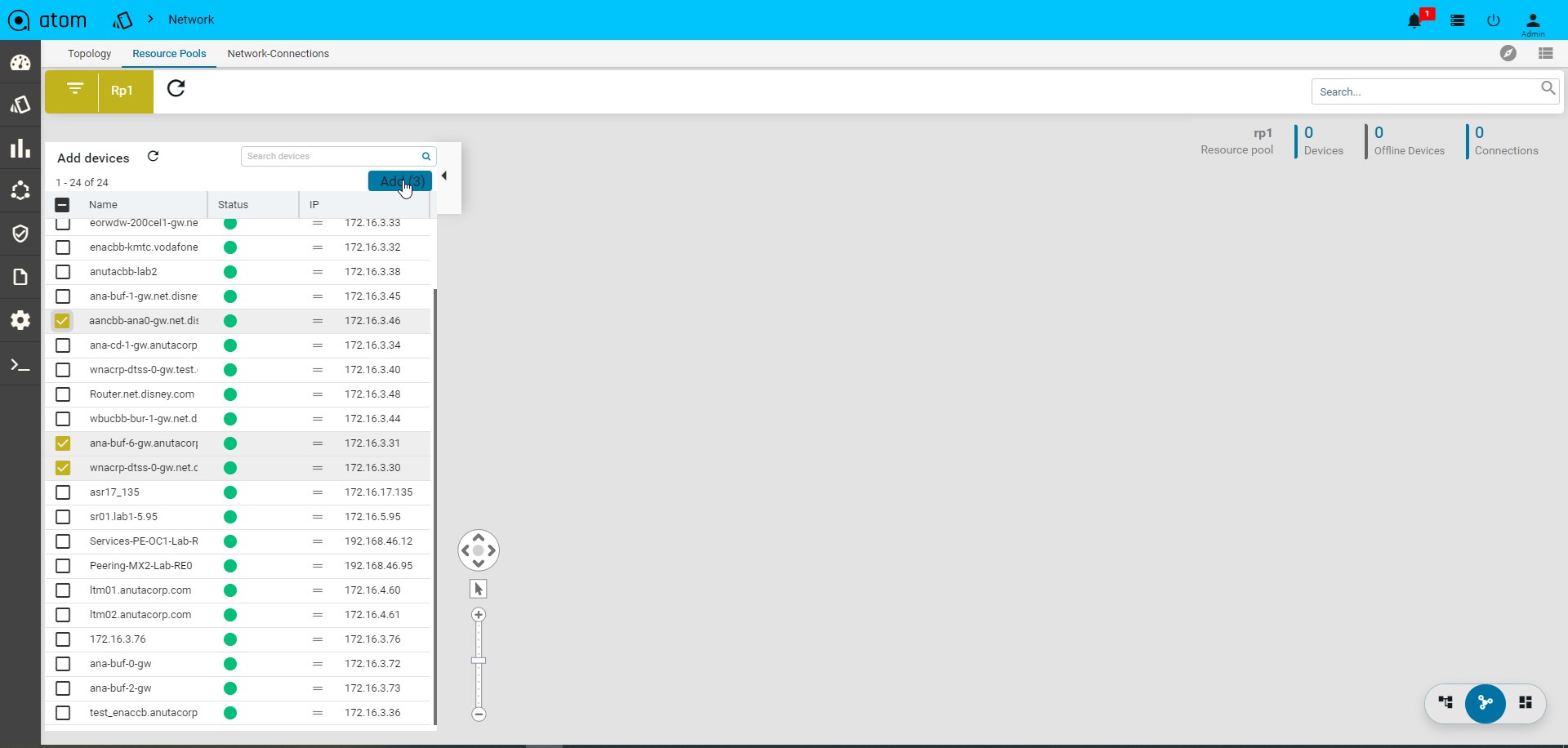

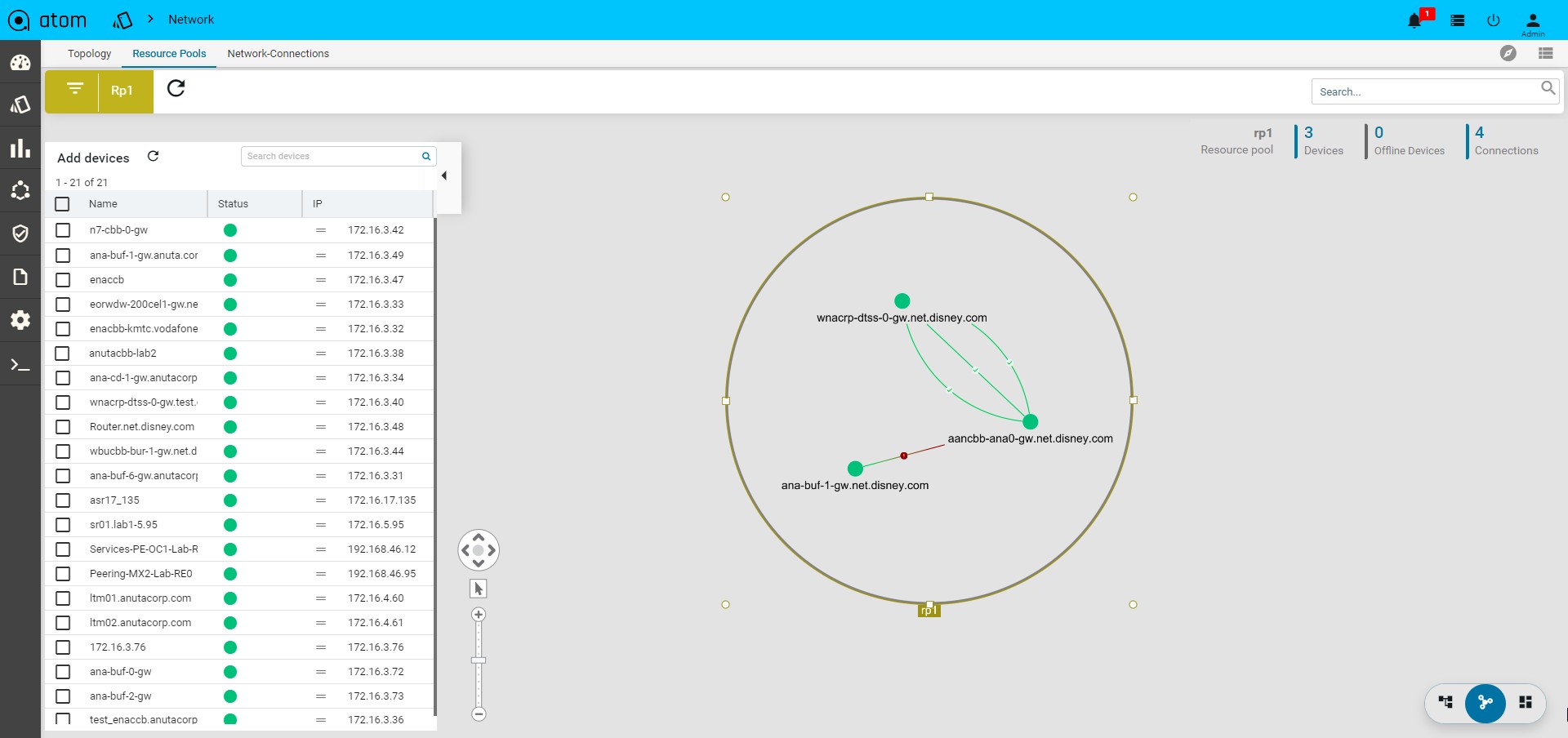

Adding Devices to a Resource Pool

- Click the created resource pool to add the required devices to it.

Select Resource pool > Add Devices

- All the devices available in ATOM are displayed in the left pane.

- Click Add to include the required devices in the resource pool

- Select the device from the Drag and Drop the devices pane to the right pane All the selected devices are now part of the resource pool created earlier.

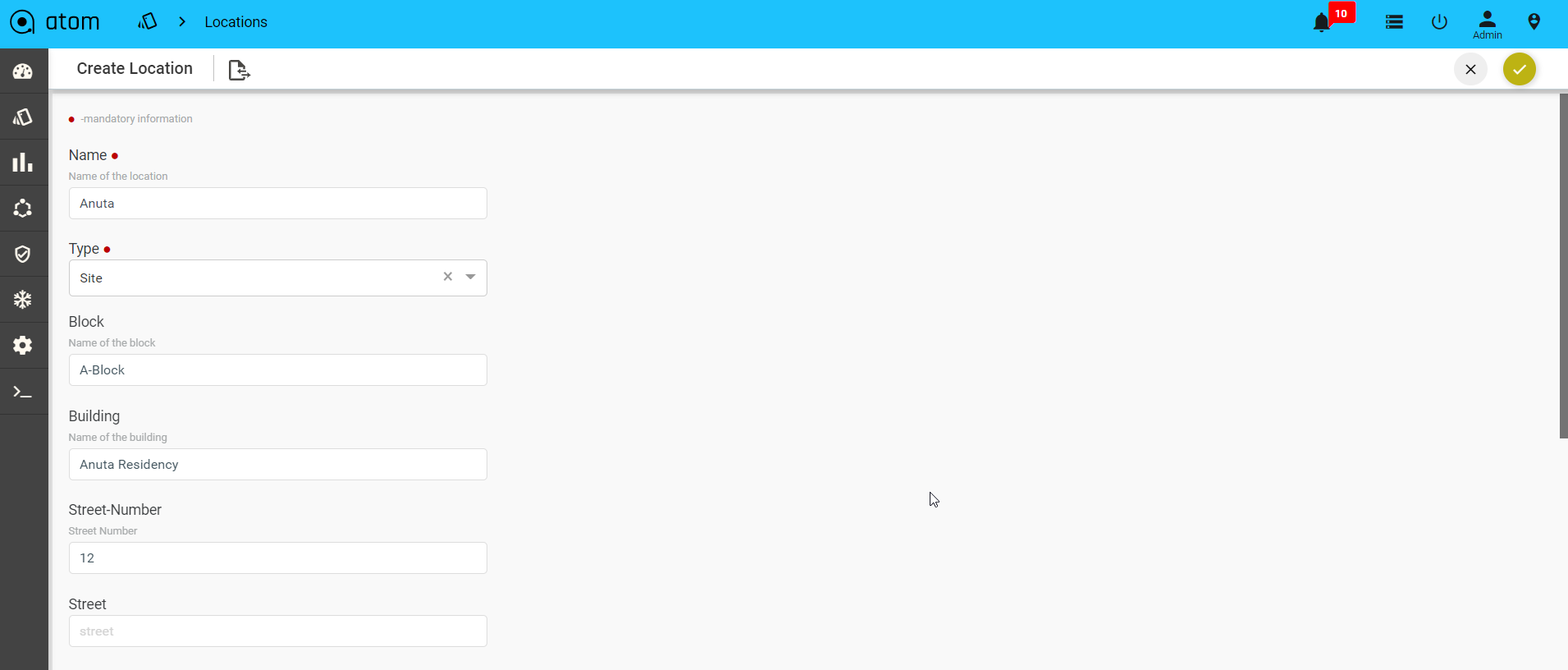

Locations

Devices & Resources Pools can be attached to a Physical Location. Location tagging allows devices and resource pools to be visualized on a Geographical Map in topology view.

- To Create/Edit a Location – Resource Manager > Locations > Resources-Location >click

Add Location

- In the Create Location screen, enter values in the fields described:

- Name: Add the name of for the data center or the site that you want to create.

- Type: Select from the pre-defined location types from the drop-down menu. (preferably select Site)

- Block: Enter the name of the block where the location is situated

- Building: Enter the name of the Building

- Street Number: Enter the number of the street where the Building is located

- Latitude: Enter the latitude of the site.

- Longitude: Enter the longitude of the site.

- Street: Enter the street name where the building is located.

- Country: Select a country from a pre populated list available in ATOM

- City: Select a specific city contained in the chosen country.

- State: Enter the name of the State or province to which the city belongs.

- Zip Code: Enter the zip code of the City where the Site is located.

- Parent Location: Select one of the predefined locations (of the type, Region or Country) defined earlier.

For assigning the created Location to a Resource pool, refer to section, “Creating a Resource Pool”.

After the successful allocation of the Resource Pool to the given Location, you can view it on the map. Select the created Resource Pool and click View on Map

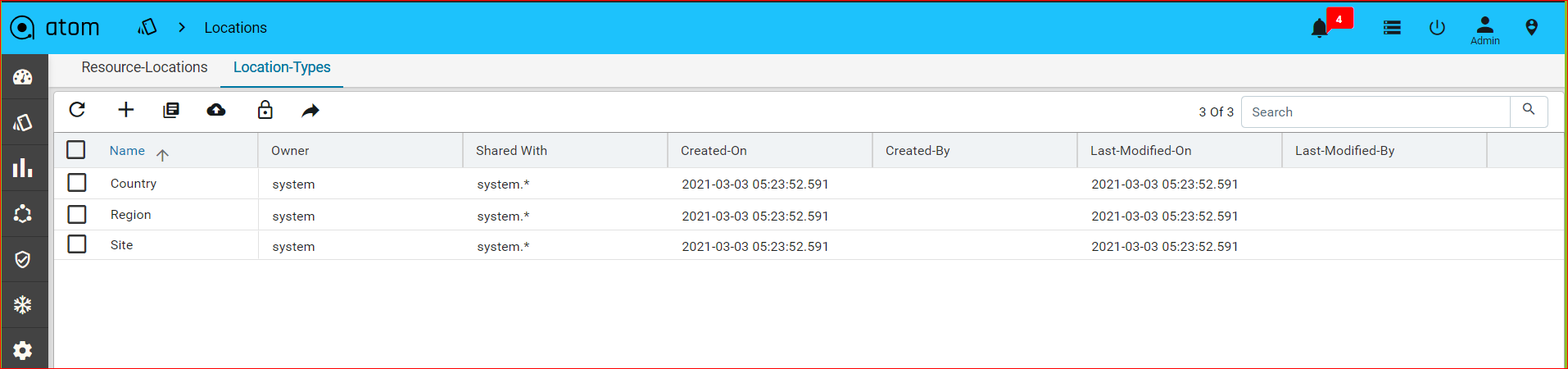

Location Types

Add the types of the location that should be associated with a Location. Navigate to Resource Manager > Locations > Location Types.

The default location types available in ATOM are Region, Country, and Data Center

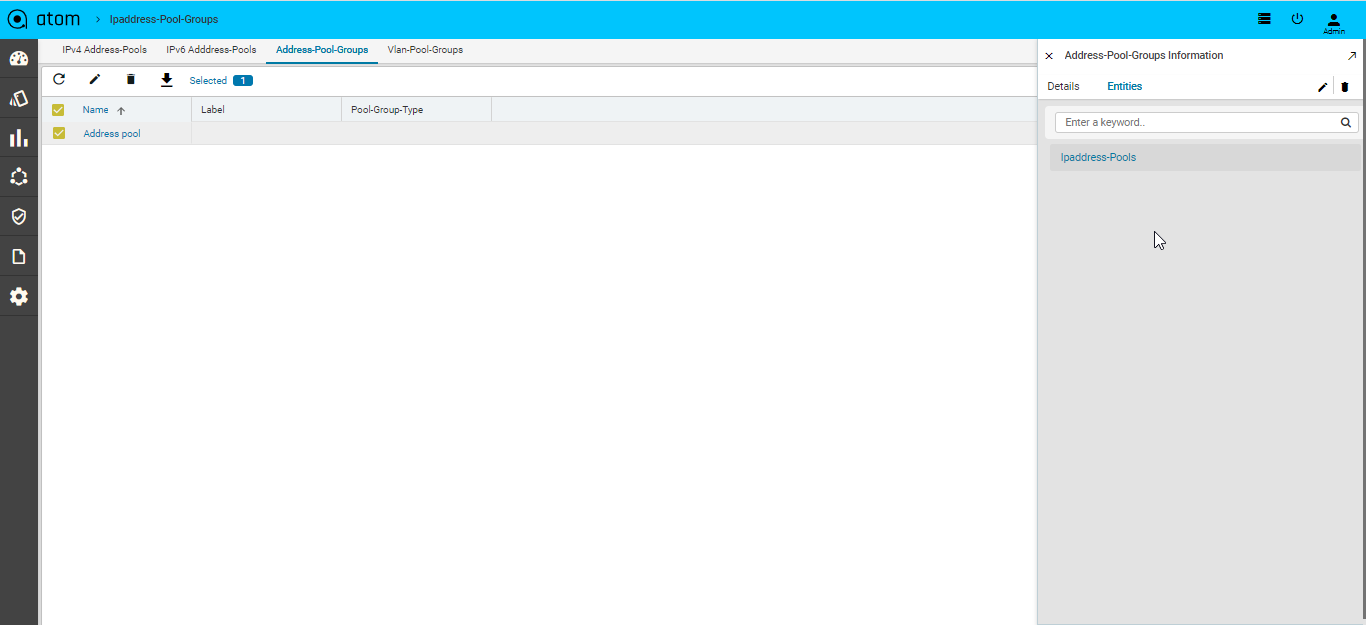

IPAM

IP Address Pool Group

For effective management of IP addresses, you can arrange IP addresses as an ordered collection and use them while instantiating a service.

- Navigate to Resource Manager > IPAM > IP Address Pool Groups

- Click Add IP Address Pool Group in the right pane

- In the Create IP Address Pool Group screen, enter values in the fields:

- Name: Enter the name of the IP address pool group

- Label: Enter the name of the label that describes the IP address pool group

- Click Add to add IP Address Pools to be included in the IP Address Pool Group

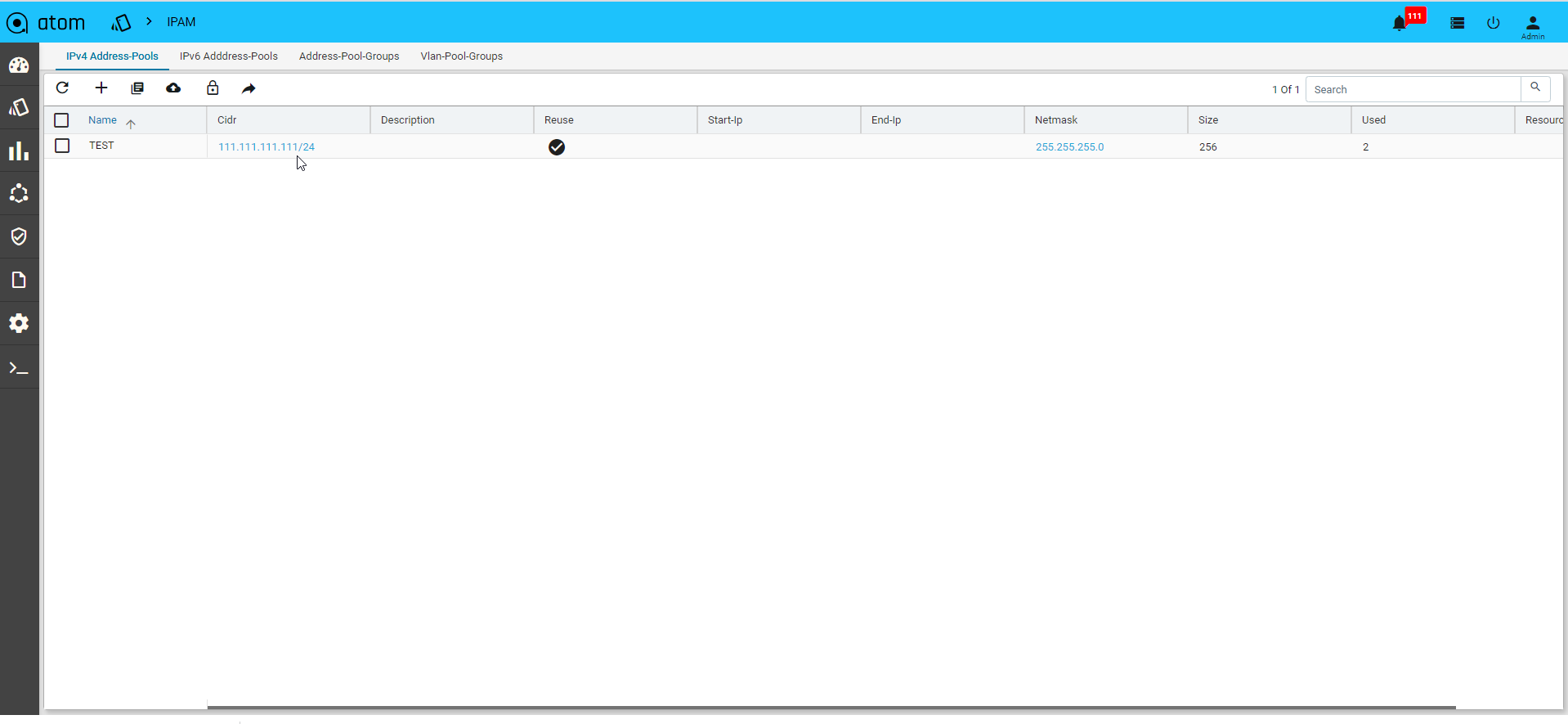

IP Address Pools

A range of IP addresses can be assigned to a pool and associated with a resourcepool.All these IP addresses will be used during the instantiation of the service.

- Navigate to Resource Manager > IPAM > IP4- Address Pools

- Click Add IP Address Pool on the right pane and enter values in the following fields:

- Name: Enter a unique name for the IP address pool

- CIDR: Enter the CIDR (IP address followed by a slash and number)

- Description: Enter the description for the created IP Address Pool

- Reuse: Select this option if the IP addresses contained in this pool should be reused across different services.

- Start IP:Enter the start IP address of the range of IP addresses

- End IP: Enter the last IP address in the IP address range.

- Resource Pool: Select the Resource pool to which these IP addresses should be assigned. All the services that are created in these Resource Pools will use these IP addresses.

Creating IP address entries

IP Address entries are the IP Address Pools that have been reserved for a service.

-

-

- Click IP address pool > Action > IP address entries

-

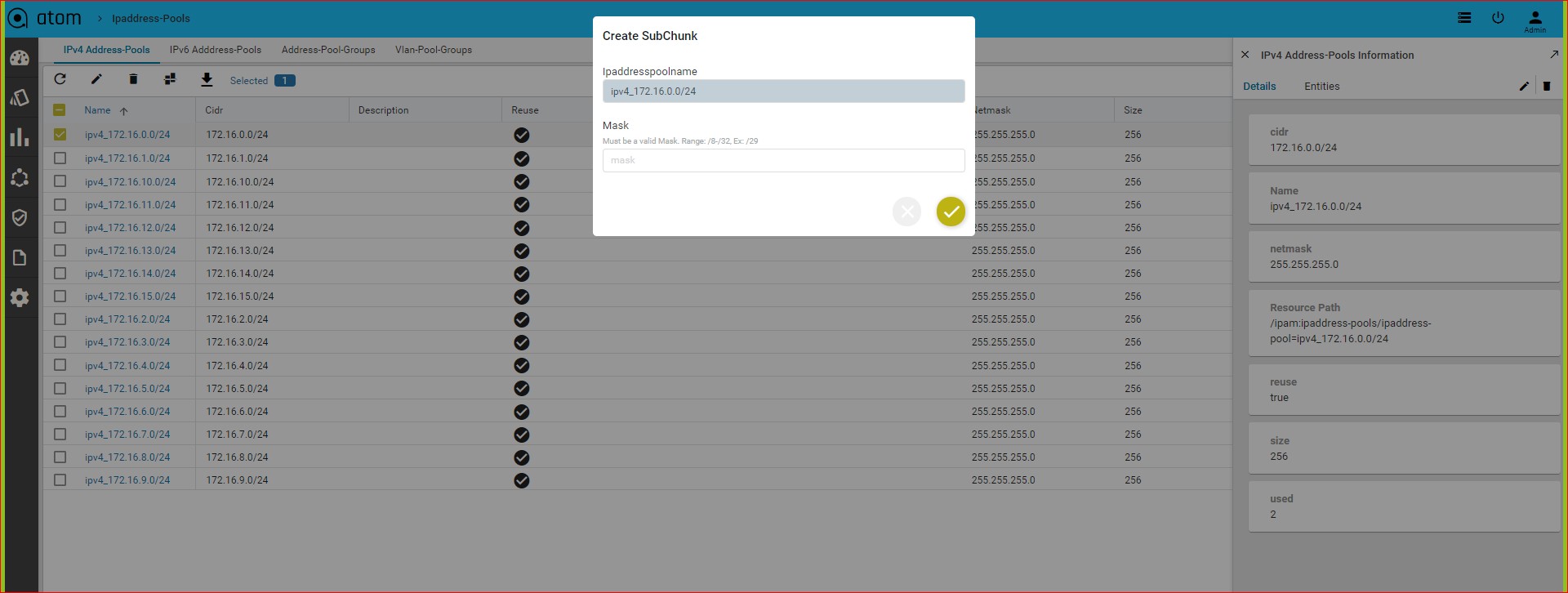

IPV4

Creating Sub Chunks of the IP Address Pools:

The network contained in an IP address pool can be divided into two or more networks within it. The resulting sub chunks can be used for different services to be configured on a resource pool tied with the parent IP address pool.

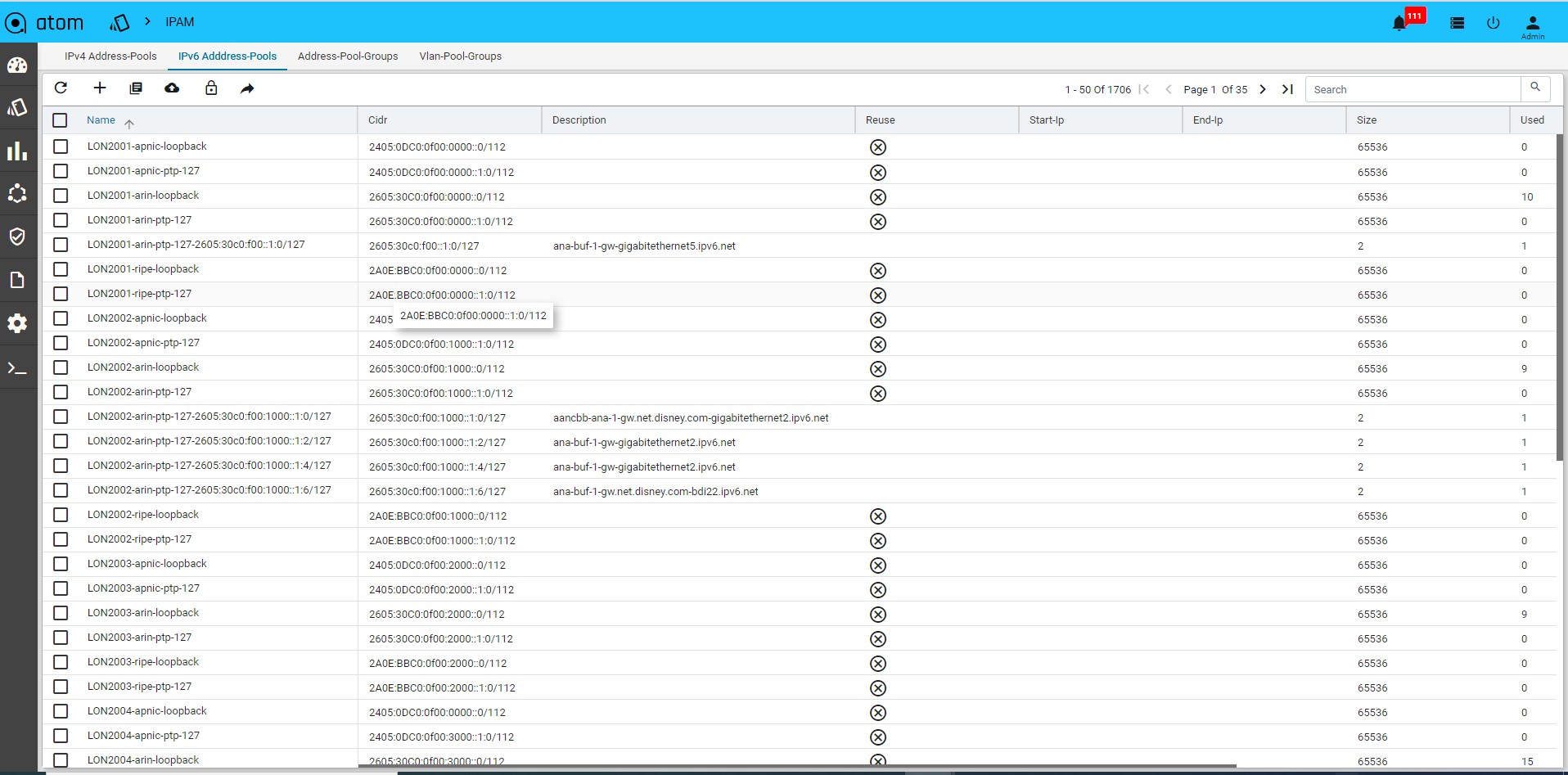

IPV6:

Resource Manager > IPAM > IP6- Address Pools

Address-pool-Groups

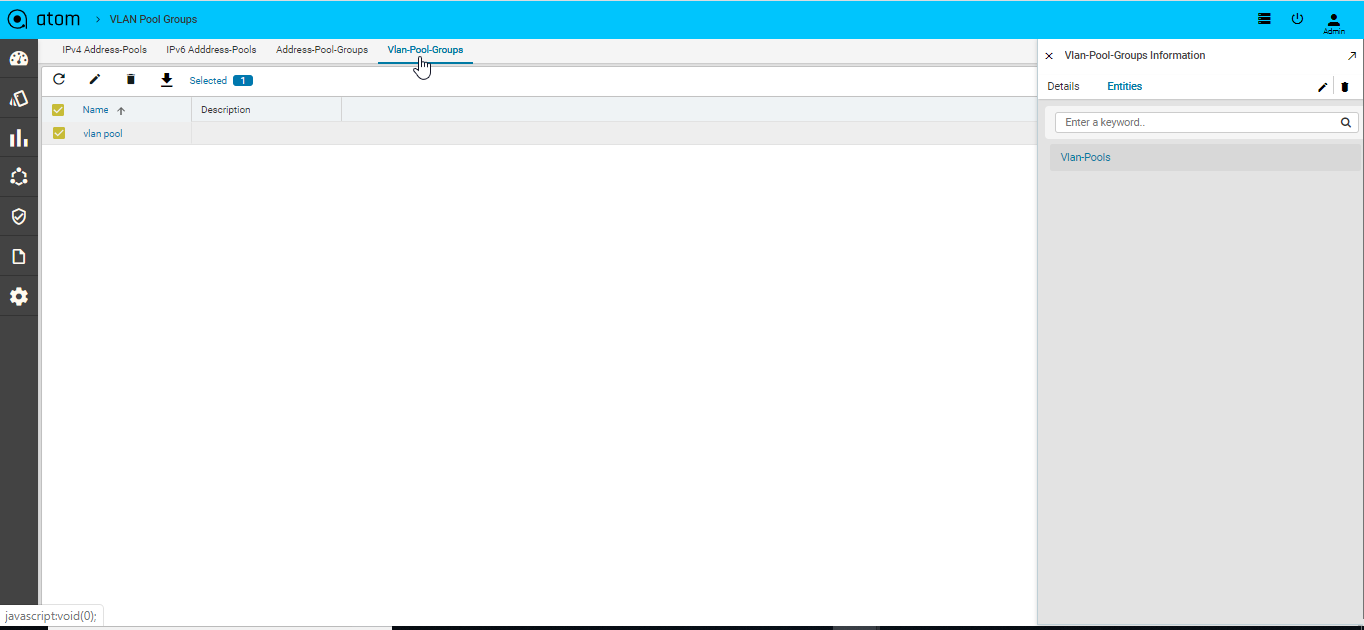

VLAN Groups

You can define VLAN groups and VLAN pools and define them as resource boundaries for a tenant in such a way that these VLAN Pools can be used during service instantiation on a resource pool.

Adding VLAN Groups

- Navigate to Resources > IPAM > VLAN Groups

- In the right pane, click Add VLAN Pool Group

- In the Create VLAN Pool Group screen, enter values in the following fields:

- Name: Enter a name for VLAN Group

- Description:

- Click Actions > vlan pools > vlan pool to create VLAN pools in the VLAN group:

- Enter values in the following fields:

- Start VLAN: Enter a number from the valid VLAN range. (1-4096)

- End VLAN: Enter a number from the valid range (1-4096)

- Click Add to add the required resource pools to the VLAN Pools

- Click the vlan pool > click Actions to add allocated VLAN.

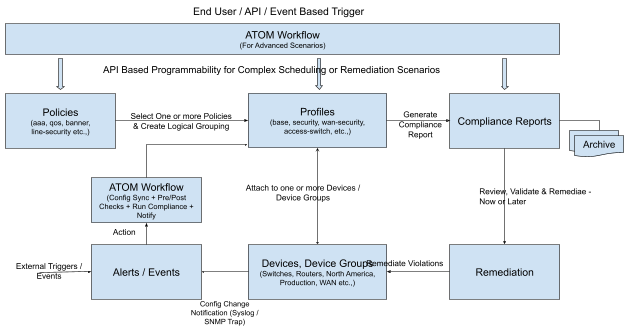

Configuration Compliance

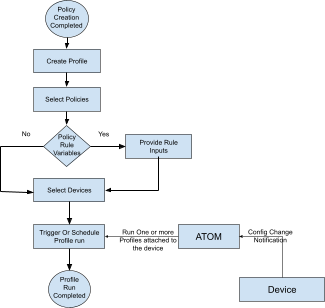

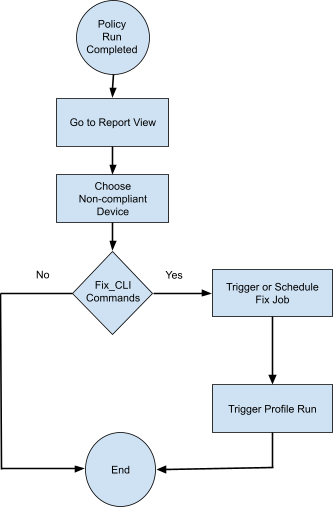

Configuration Compliance feature allows users to Define & Enforce Configuration Compliance Standards. This is realized within ATOM using the following primitives.

- Policies – Define Configuration standards & Remediation Policy by Device Family, Device Type, OS Type etc.,

- Profiles – Group multiple policies and apply configuration standards on one or more devices

- Reports – Comprehensive compliance reporting view at device level

Remediation – Fix Policy Violations on one or more devices Following diagram summarizes the overall flow.

Remediation – Fix Policy Violations on one or more devices Following diagram summarizes the overall flow.

Configuration Compliance Can address the following scenarios:

- Check If a particular configuration is present

- Check If a particular configuration contains a given pattern / should NOT contain a given pattern.

- Check If a particular configuration contains stale/unwanted configuration.

- Check for User defined parameterized values (Dynamic inputs) in configuration.

- Arithmetic checks to enforce thresholds on resource usage and capacity planning. Example:- Per device Max 100 vrfs to be configured or 20 Vlans to be enabled or 10 bgp sessions per vrf

- Group parameterized values to apply the policy. Example valid Values: ‘Any’, ‘AnyEthernet’, ‘FastEthernet0/.*’ etc.

- Regex and Jinja2 Parsers In & Between conditions

- Apply filters on configuration to categorize config blocks. Ex:- Access vs trunk based on link speed, Ports description satisfying regex classifiers,Ports which are admin up and contain IP.

- Inventory checks for NETCONF/YANG parameters using XPath based expressions.

- Parse as Blocks to split the entire running configuration into blocks and search for the condition match criteria value within each block.

- Custom Block split definitions based on the start and end expressions you provide in the Block Start Expression and Block End Expression text boxes.

- Evaluate each block against a set of conditions with individual actions/severities by using the Condition Scope as Previously Matched Blocks to parse.

- Raise single violations for condition violation by any block or multiple violations per block of violation with individual remediation actions defined.

ATOM supports Configuration Compliance for the following Vendors:

-

- Cisco Systems

- Juniper Networks

- Fortinet

- Force10 Networks

- Brocade

- PaloAlto Networks

- Riverbed Technology

- F5 Networks

Policies

Compliance policy allows configuration standards to be defined in CLI format and YANG format(x-path or xml). Following provides a high level overview of a Policy:

-

- Policy is a collection of Rules

- A Rule contains one or more Conditions

- Condition describes

- Expected Configuration. Configuration can be parameterized through Rule Variables.

- Action to be taken on a condition evaluation includes CLI commands or Netconf XML RPC format to be used to remediate a violation.

-

- A Rule can be attached to one or More device platforms – Vendor, OS Type, Device Family, Device Type and OS Version

Use Cases

| # | Configuration Standard Style | Example | Reference |

|

1 |

Static Configuration |

Example: All Devices in Target Network should Contain a specific Domain Name

Expected Configuration: ip domain-name anutacorp.com Fix Configuration: <<If missing, configure the above command>> |

Scenario1 |

| XPath Expression | Xpath Expression:

Cisco-IOS-XR-native:native/ip/domain/name=`anutacor p.com’ |

Scenario6 | |

|

XML Template Payload |

Template Payload:

<native xmlns=”https://cisco.com/ns/yang/Cisco-IOS-XE-native“ > <ip> <domain> <name>net.disney.com</name> </domain> </ip> </native> |

Scenario11 |

|

|

2 |

Dynamic Configuration with User provided values |

Example: Devices in Target Network should have a specific Loopback interface – Loopback0 or Loopback1 based on user input.

Expected Configuration: interface {{ interface_name }} Fix Configuration: <<If missing, Configure the specific Loopback interfaces>> |

Scenario3 |

| X-path | Xpath Expression: | Scenario9 |

| Expression | Cisco-IOS-XE-native:native/interface/Loopback/name=’ | ||

| 0′ and | |||

| Cisco-IOS-XE-native:native/interface/Loopback[name=0 | |||

| ]/ip/address/primary/address='{{ lo0_ipv4addr }}’ and | |||

| Cisco-IOS-XE-native:native/interface/Loopback[name=0 | |||

| ]/ip/address/primary/mask=’255.255.255.255′ and | |||

| Cisco-IOS-XE-native:native/interface/Loopback[name=0 | |||

| ]/ipv6/address/prefix-list/prefix='{{ lo0_ipv6addr }}’ | |||

|

XML Template Payload |

Template Payload:

<native xmlns=”http://cisco.com/ns/yang/Cisco-IOS-XE-native” > <interface> <Loopback> <ip> <address> <primary> <address>10.100.99.98</address> <mask>255.255.255.255</mask> </primary> </address> </ip> <ipv6> <address> <prefix-list> <prefix>2605:30C0::3B/128</prefix> </prefix-list> </address> </ipv6> <name>0</name> </Loopback> </interface> </native> |

Scenario13 |

|

| Configuration | Example: All the VTY lines should have specific | ||

| with Patterns, | exec-timeout and session-timeout configured. | ||

| 3 | Wildcards,

etc.that |

Expected Configuration: | Scenario4 |

| require Regular

expressions |

line vty (.*)

session-timeout 10 |

| exec-timeout 10 0

Fix Configuration: <<If missing, Configure the timeouts under all matching VTY lines>> |

|||

|

4 |

Configuration with sub-modes |

Example: The physical Interface should not be shut down and show be in auto-negotiation mode

Expected Configuration: interface {{ interface_name }} no shutdown negotiation auto Fix Configuration: <<If missing, Configure the above commands for one or more interfaces>> |

Scenario3 |

|

5 |

Removing unwanted extra configuration |

Example: Finding the Devices having extra ntp-server addresses configured and removing those other than expected server addresses.

Expected Configuration: ntp-server 10.1.1.1 Fix Configuration: << Configure above ntp-server if not found. Remove any ntp server other than 10.1.1.1 >> |

Scenario2 |

| Example: Finding the devices in the network which | |||

| doesn’t contain the OSPF router-id configured as per | |||

| loopback0 ip address. | |||

|

6 |

Advanced: Presence of an entity value from one block in another | Expected Configuration:

interface Loopback0 ip address 45.45.45.5 255.255.255.255 ! router ospf 100 router-id 45.45.45.5 |

Scenario5 |

| Fix Configuration:

router ospf 100 |

| router-id 45.45.45.5 |

Scenario 1: IP Domain Name

Scenario: Network Devices must have domain name configured. In this example we are looking for the domain name as anutacorp.com across all devices in the lab.

Platform:

Cisco IOS-XE

Expected Configuration:

ip domain-name anutacorp.com

Fix-CLI Configuration:

ip domain-name anutacorp.com

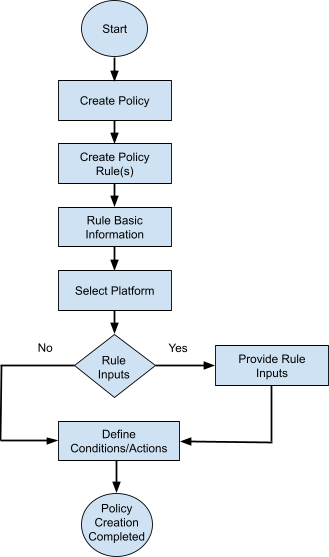

Follow the steps below to configure Compliance Policy for the above scenario.

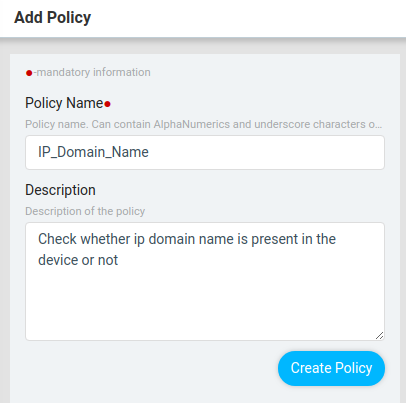

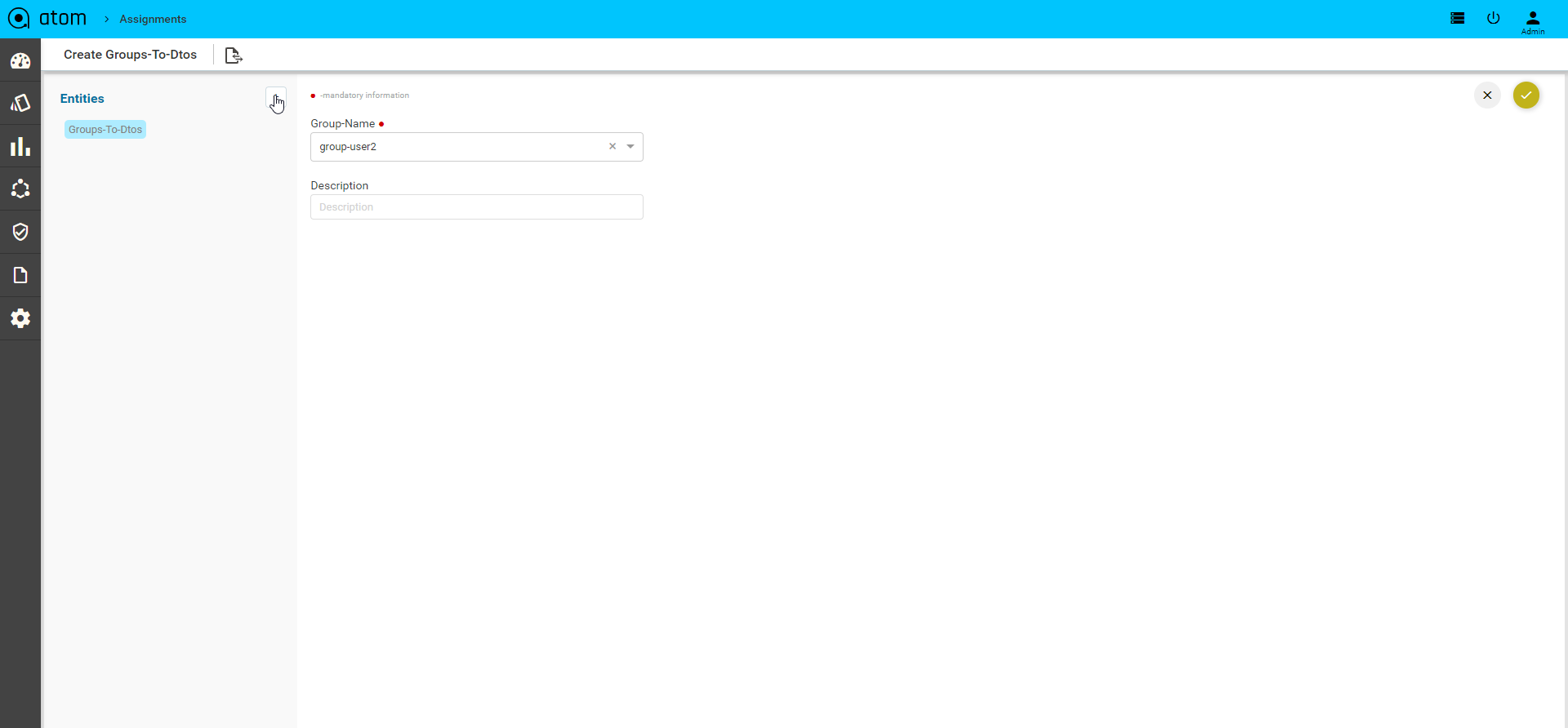

- Configure Policy

Steps:

-

- Navigate to Resource Manager > Config Compliance -> Policies

- Click ‘+’ to create new Policy and provide the following information

- Policy Name

- Description

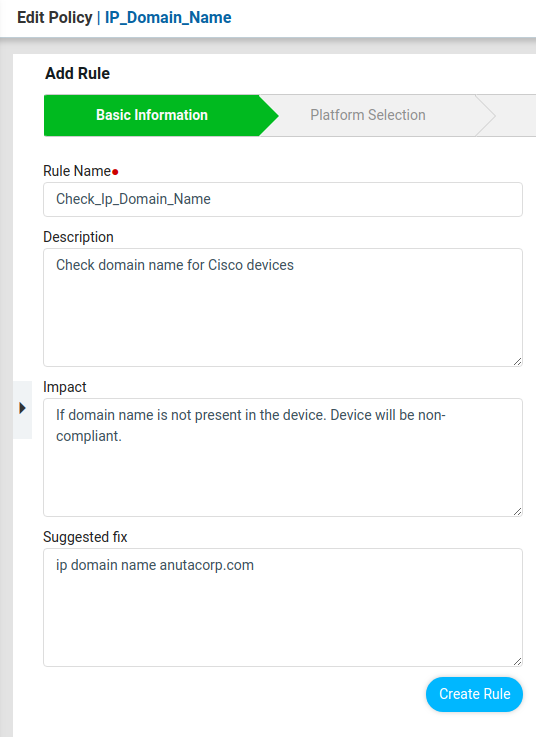

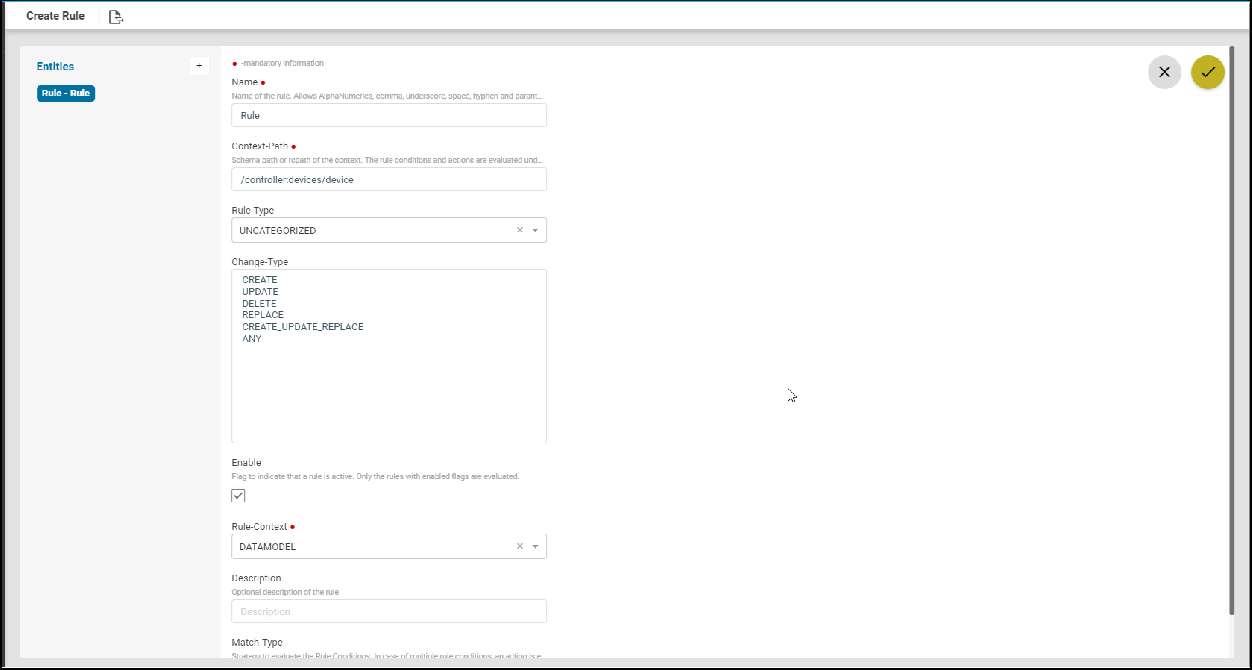

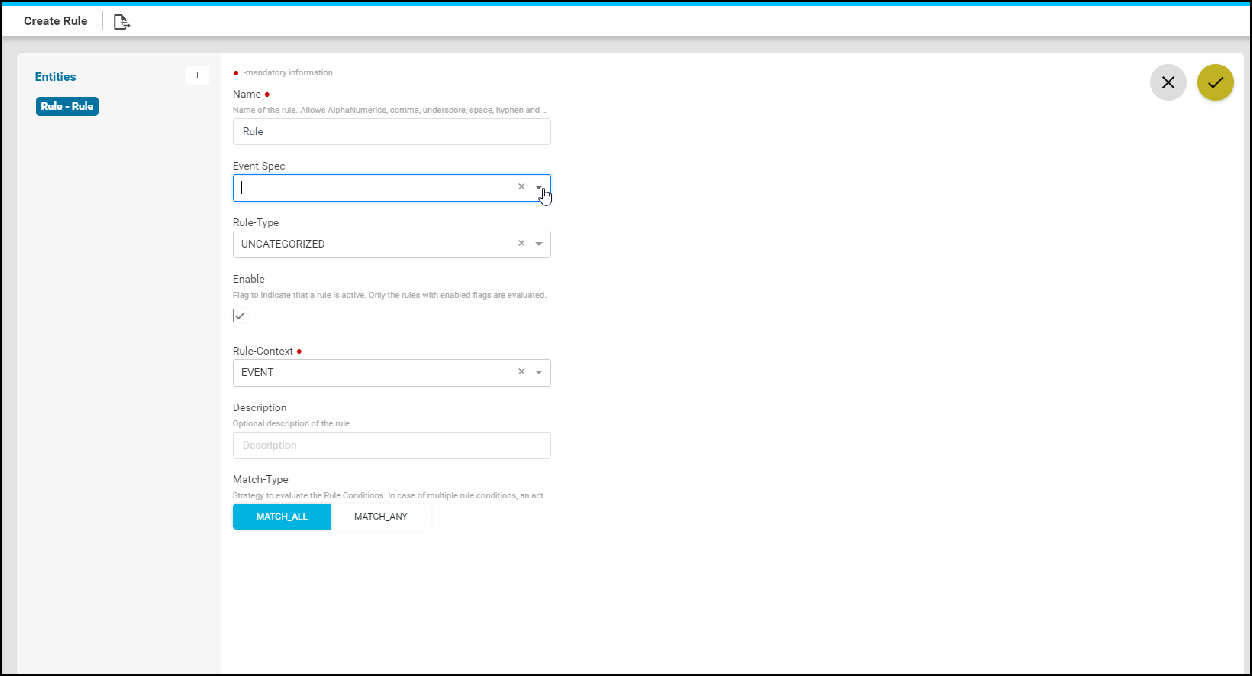

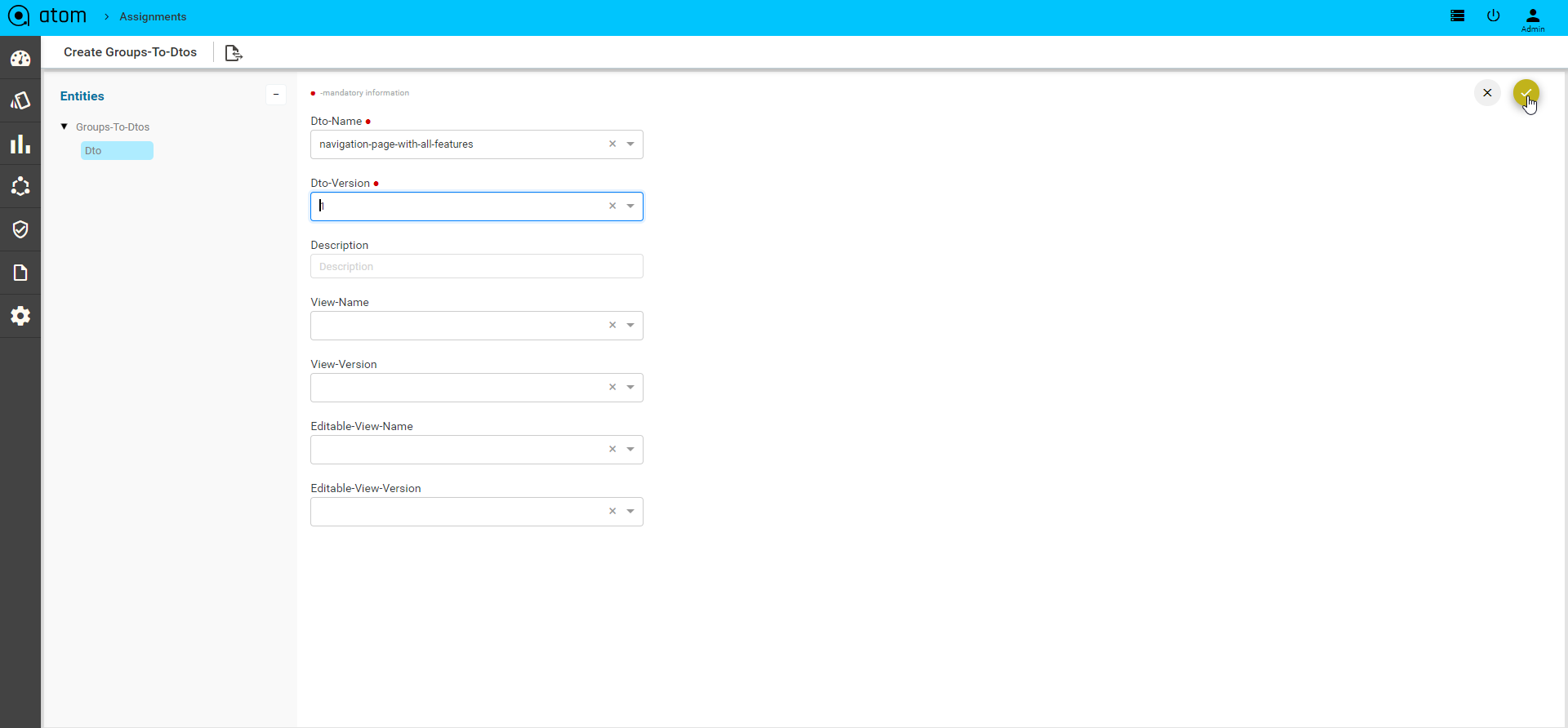

- Configure Rules

One or more rules can be configured to express configuration standards. Based on the complexity of the scenario, configuration standards can be broken up into more than one condition.

Steps:

-

- Navigate to Resource Manager > Config Compliance -> Policies

- Create/Select a Policy

- Click ‘+’ to create new Rule

- ATOM opens up new wizard as shown below

-

- Rule has four components

- Basic Information

- Platform Selection

- Rule Variables

- Conditions & Actions

- Rule has four components

Basic Information

Provide basic information as described below. Information provided here is for documentation purposes only.

Rule Name: Provide any Name

Description: Brief explanation of the configuration evaluation that the rule is going to perform.

Impact: If the device configuration does not meet the rule or rules in the policy, type it in the Impact field.

Suggested Fix: Using which non-compliance can be corrected and device returns to a state of Compliance.

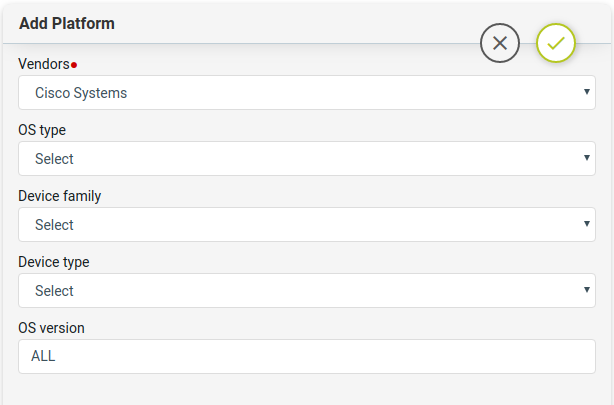

Platform Selection

Rules contain configuration standards expressed in CLI Configuration format. Configuration standard can be at Vendor level, Device Type, Device Platform, OS Type or OS Version.

Steps:

-

- Navigate to Config Manager > Config Compliance -> Policies -> Rules

- Create/Select a Rule & Provide the following information

- Vendor

- OS type

- Device Family

- Device Type

- OS Version

Note: Platform Selection will be used during Policy execution. Devices that don’t match the above criteria are skipped.

Note: It’s not common to have more than one Platform

Rule Variables

Rule variables allow configuration to be parameterized.

Steps:

-

- Navigate to Resource Manager > Config Compliance -> Policies -> Rules

- Create/Select a Rule Variables

- Key – Provide unique name to identify rule variable

- Description – Describe rule-input configuration

- Default Value – Default value. Can be overridden during Policy execution time

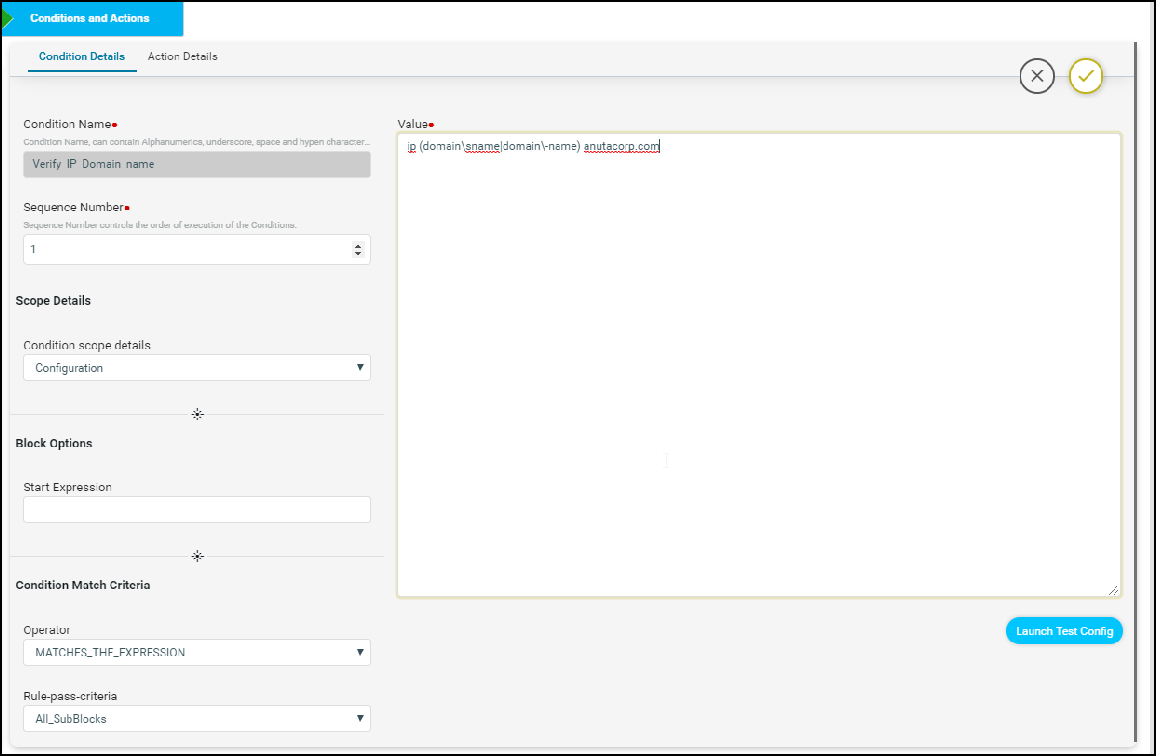

Conditions and Actions

Expected configuration & actions to be taken when violations are detected are specified in the

Conditions & Actions section.

Based on the complexity of the scenario, configuration standards can be broken up into more than one condition.

Steps:

-

- Navigate to Resource Manager > Config Compliance -> Policies -> Rules

- Create/Select Conditions & Actions

- Condition Details – Described Below

- Action Details – Described Below

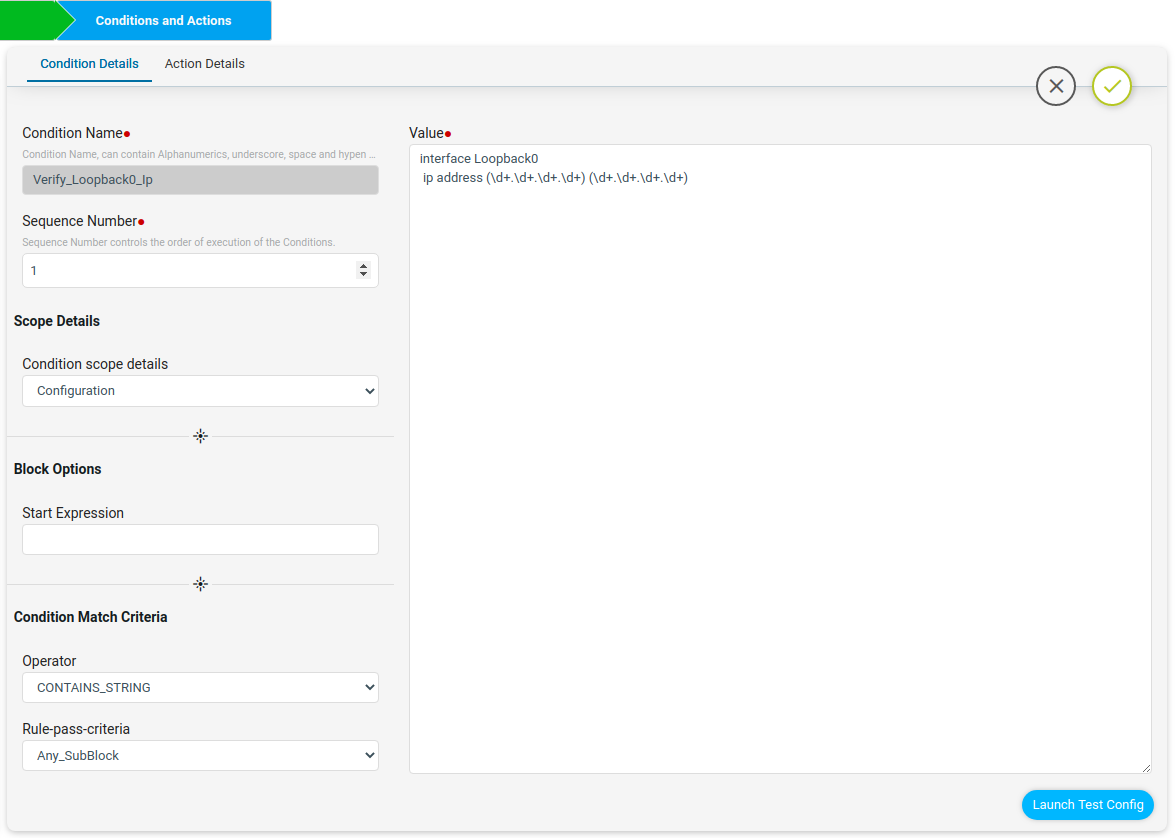

Condition Details

Condition section provides users to specify the expected configuration and various options on how to match the expected configuration including option to identify sub mode configuration blocks.

Condition Name: Name of the Condition

Sequence Number : Order of the condition execution.

Scope Details

Condition scope details: Scope could be either full configuration copy or configuration matched in prior condition.

- Configuration – Full Configuration

- Previously_Matched_Blocks – Subset of configuration matched by prior condition

Block Options

Start Expression – Regular expression indicating the start of the sub-block.

End Expression – Regular expression indicating the end of the sub-block.

Condition Match Criteria Operator:

MATCHES_THE_EXPRESSION – Checks whether the condition value exactly matches with device configuration or not.

DOESNOT_MATCHES_THE_EXPRESSION – Checks whether the condition value does not match with the device configuration or not.

CONTAINS_STRING – Checks whether the device configuration contains condition value config or not.

Rule-Pass-Criteria:

All_SubBlocks – Checks whether the condition value matches in all the blocks or not. Any_SubBlock – Checks whether the condition value matches in any of the blocks or not.

Value: Value field accepts Configuration Standard as CLI Configuration. Following types of configuration can be provided:

-

- Static Configuration

- Dynamic/Parameterized Configuration

- Configuration with Regular Expressions

- Configuration coupled with Jinja2 Templating

Note: For some Vendor Configurations like Cisco IOS-Style, whitespace in command prefix is mandatory to identify commands at sub-mode level.

For Scenario 1 – Provide value as ip domain-name anutacorp.com to search for a given domain name in the running configuration.

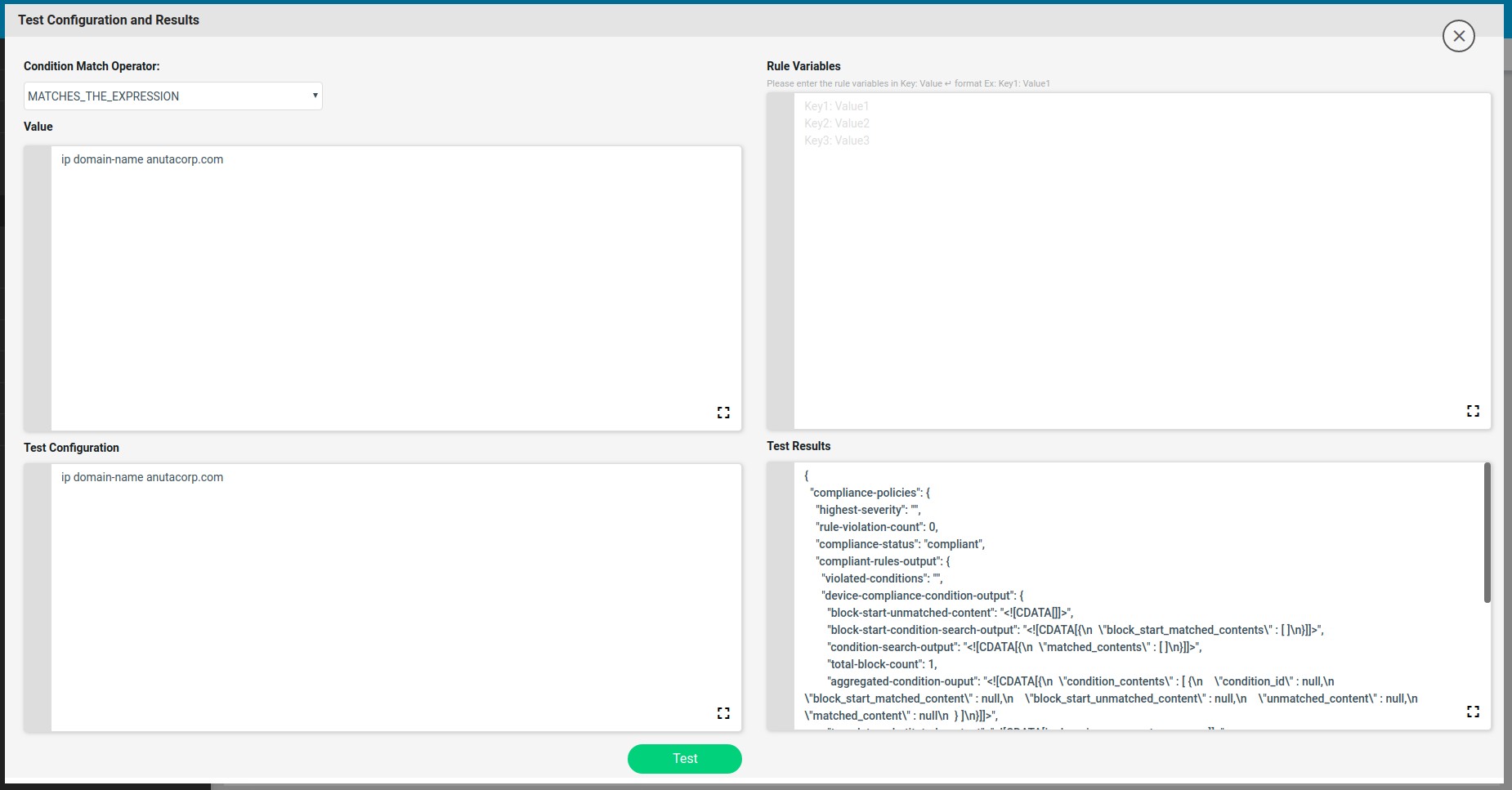

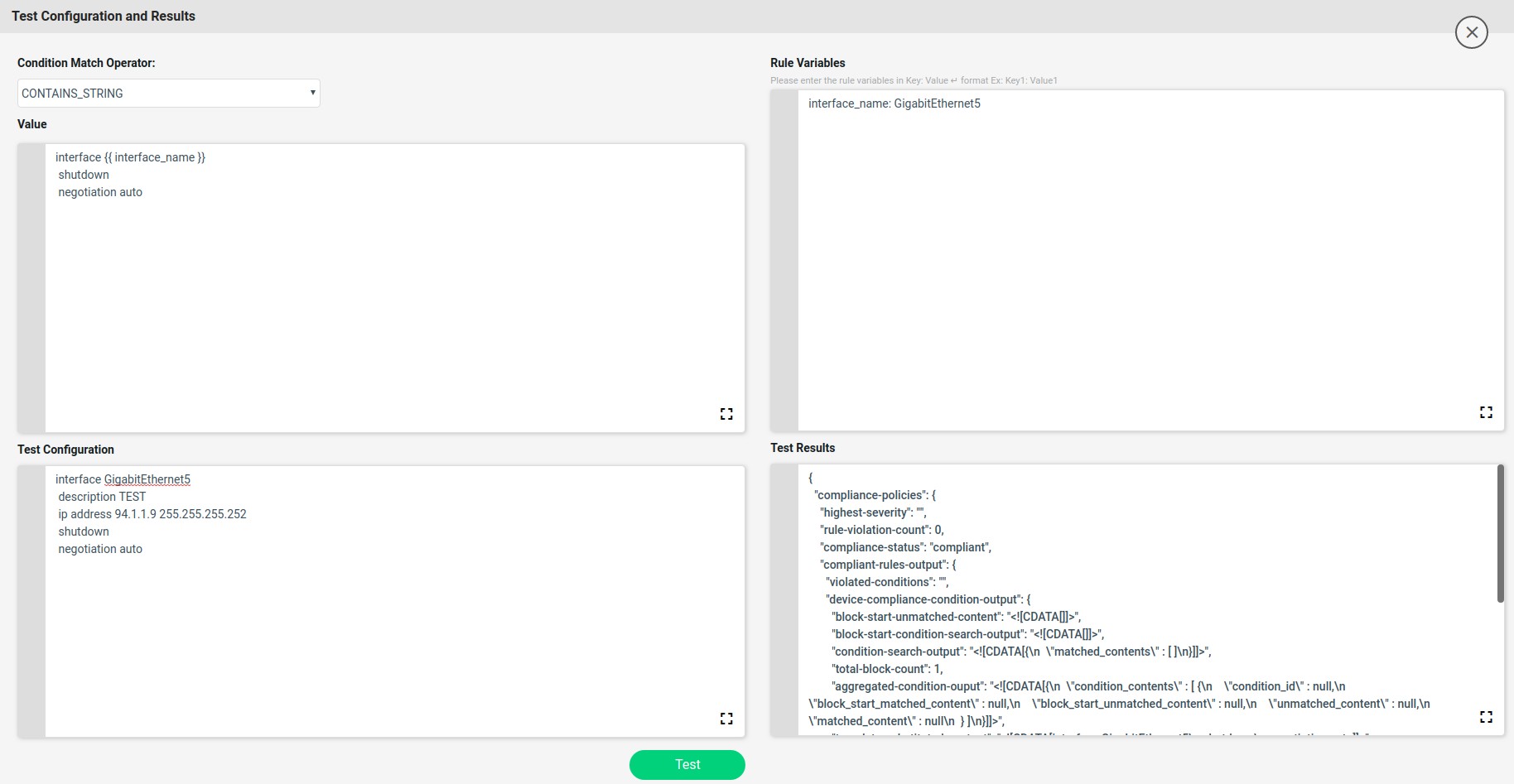

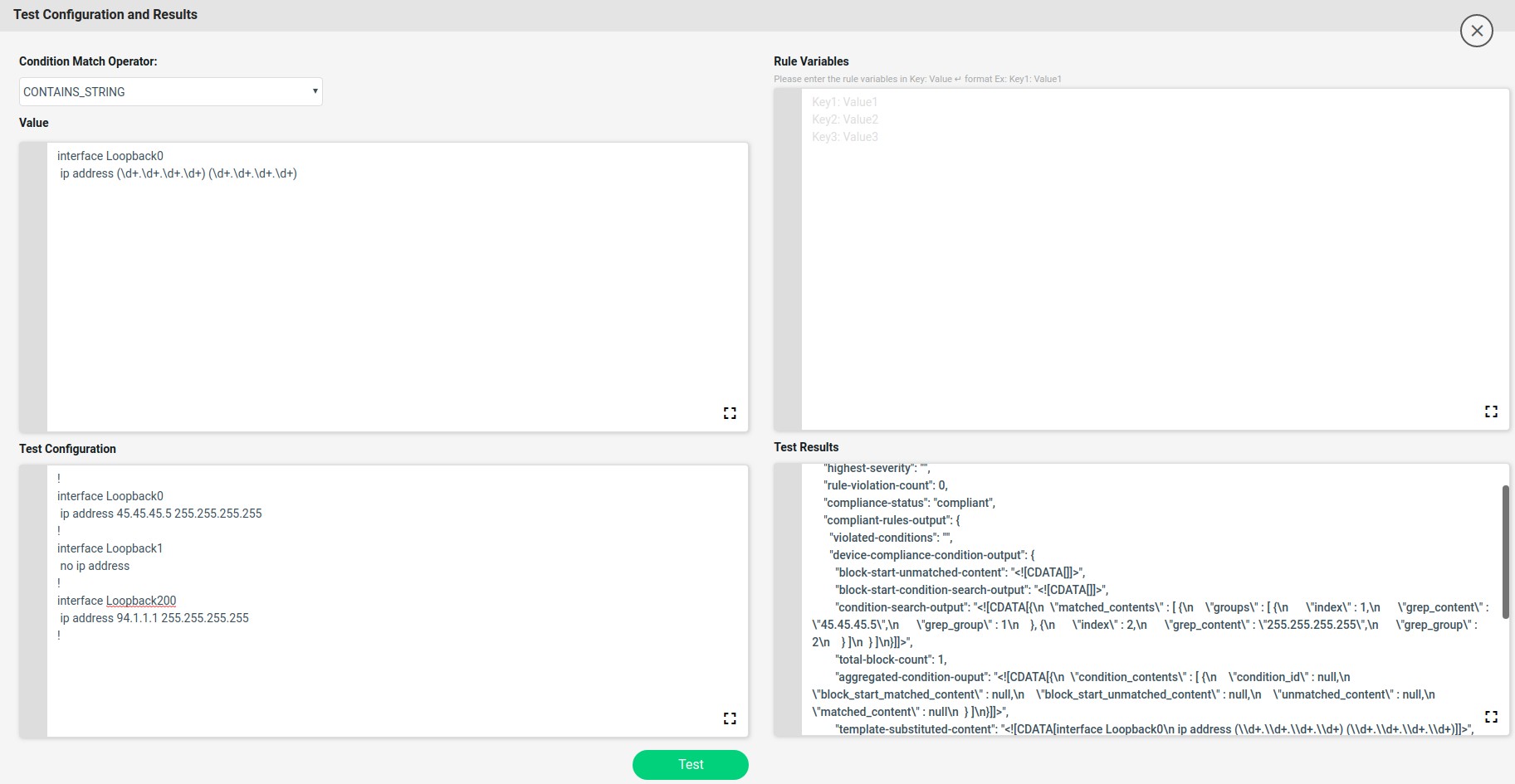

Test Config

Based on the complexity of the configuration standard, Value may be complex and may need to build up iteratively. Test Config utility helps the CLI configuration condition to be validated against Test Configuration.

Steps:

-

- Navigate to Resource Manager > Config Compliance -> Policies -> Rules –>

- Create/Select Conditions & Actions

- Click “Launch Test Config” will launch a form to Test Condition

Condition Match Operator: MATCHES_THE_EXPRESSION DOESNOT_MATCHES_THE_EXPRESSION CONTAINS_STRING

Value: Sample configuration to be tested. Value will be shown from the Condition Details. Value can be further refined

Note: Any Edits to Value will reflect in the Condition Details -> Value and Vice-versa.

Test Configuration: Sample device configuration

Rule Variables: The rule variables created in the rule will be shown here with default values. Values can be modified.

Note: Any Edits to Values will not be reflected in the Rule Variable default values provided in Rule Variables Section.

Test Results: Based on Condition Match Operator test results will be shown on the right hand side

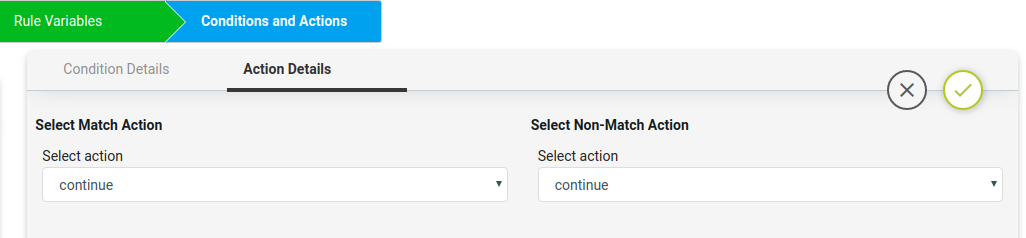

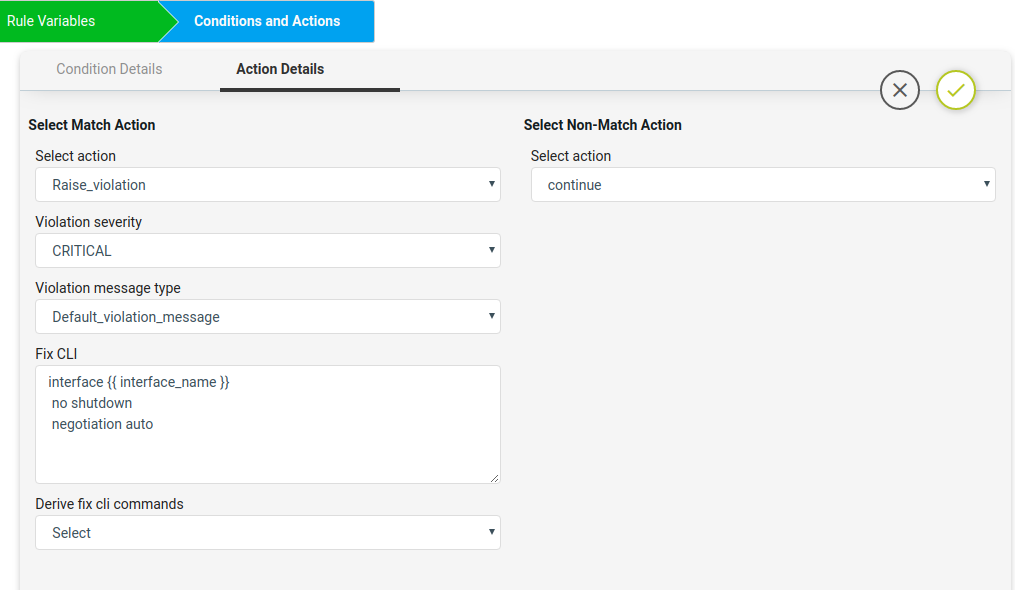

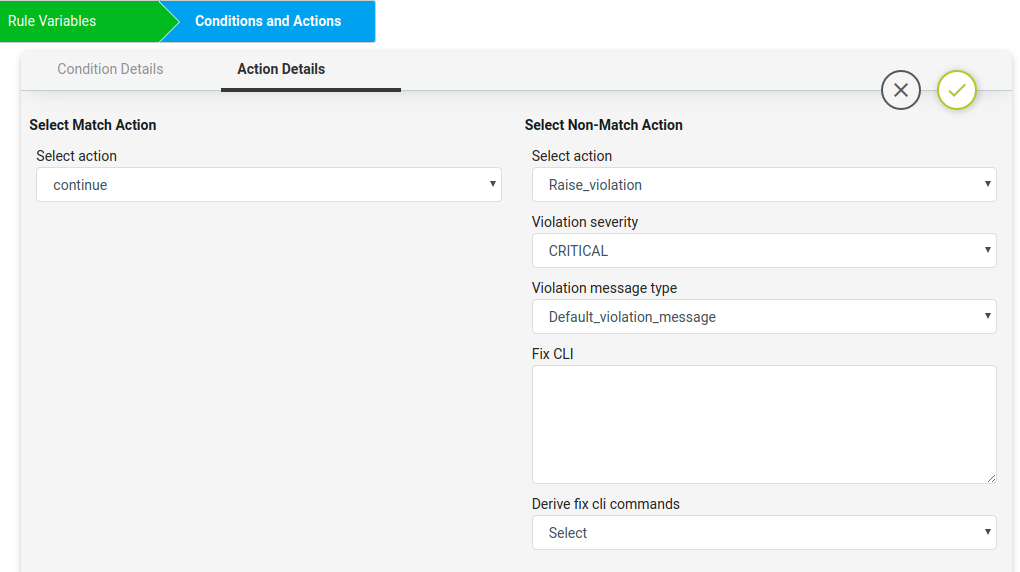

Action Details

Action can be taken after Condition evaluation. Condition can result in either a “Match” or “Non-Match”. Depending on the scenario one or both criteria may apply.

Select Match Action – This option is applicable when Condition evaluates to a Match

Select action:

Continue – continue execution to next condition Donot_raise_violation – skip execution and don’t raise violation

Raise_violation_and_continue – raise violation and continue execution to next conditions Raise_violation – raise violation and skip execution

For Scenario 1, no action needs to be taken during a match condition, so select continue

as action.

Violation severity: LOW MEDIUM HIGH CRITICAL

Violation message type:

Default_violation_message User_defined_violation_message

Derive fix cli commands:

Use_unmatched_block – unmatched config from the block Use_matched_block – matched config from the block Use_complete_block – total block config

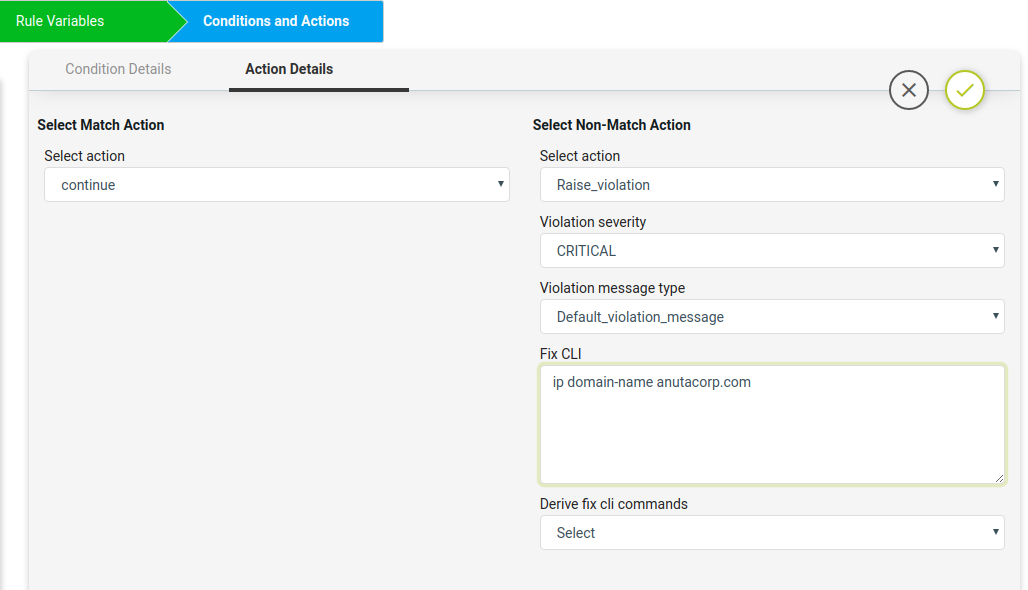

Select Non-Match Action Select action:

Continue

Don’t raise violation

Raise violation and continue Raise violation

For Scenario 1, Action is required when Condition is not matched. Select Raise violation and continue.

Violation severity: LOW MEDIUM

HIGH CRITICAL

Violation message type:

Default_violation_message User_defined_violation_message

Fix CLI: Provide the CLI Configuration to be used for remediation. Fix CLI can be either provided here or derived.

Option – 1 – Explicit Remediation / Fix CLI

For Scenario 1, Provide “ip domain-name anutacorp.com” in Fix CLI.

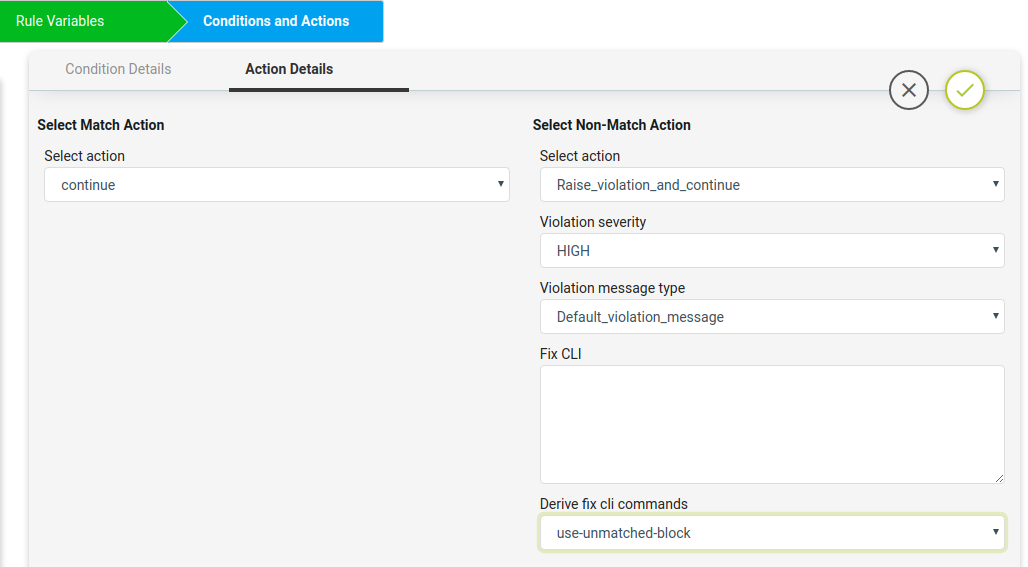

Option – 2 – Remediation Commands can be derived from Condition evaluation.

Derive fix cli commands:

Options below:

Use_unmatched_block Use_matched_block Use_complete_block

For Scenario 1, Select “Use_unmatched_block”. Since this is non-match Action, unmatched_block will be Condition Details->Value and can be used as Fix CLI.

Scenario 2: NTP Server configuration check

Scenario:

- All devices in the network should contain the designated ntp server.

- Remove all other ntp servers

- In this example

- Expected ntp-server = 10.0.0.1

Platform:

Cisco IOS-XE

Expected Configuration:

ntp server 10.0.0.1

Fix-CLI Configuration:

ntp server 10.0.0.1

<<Remove Any Other ntp server other than 10.0.0.1>>

This use case uses regular expressions and contains two conditions.

- Condition-1 – Check for expected config & if not found remediate using Fix CLI.

Fix-cli Configuration :

ntp server 10.0.0.1

- Condition 2- Check for unwanted ntp-servers and remove them.

Fix-cli Configuration :

no ntp server 10.0.0.2 //Derived no ntp server 10.0.0.3 //Derived

Steps:

-

- Navigate to Resource Manager > Config Compliance -> Policies

- Click ‘+’ to create new Policy and provide the following information

- Policy Name – NTP_Common_Peer_Configuration

- Description

- Select the Policy and Click ‘+’ to create new Rule

- Rule Name – Check_NTP_Common_Peer_Configuration

- Navigate to Config Manager > Config Compliance -> Policies -> Rules

- Select a Rule & Provide the following information

- Vendor – Cisco Systems

- OS type – IOSXE

- Device Family – ALL

- Device Type – ALL

- OS Version – ALL

- Rule variables are not required for this scenario.

- Now fill the Conditions and Actions

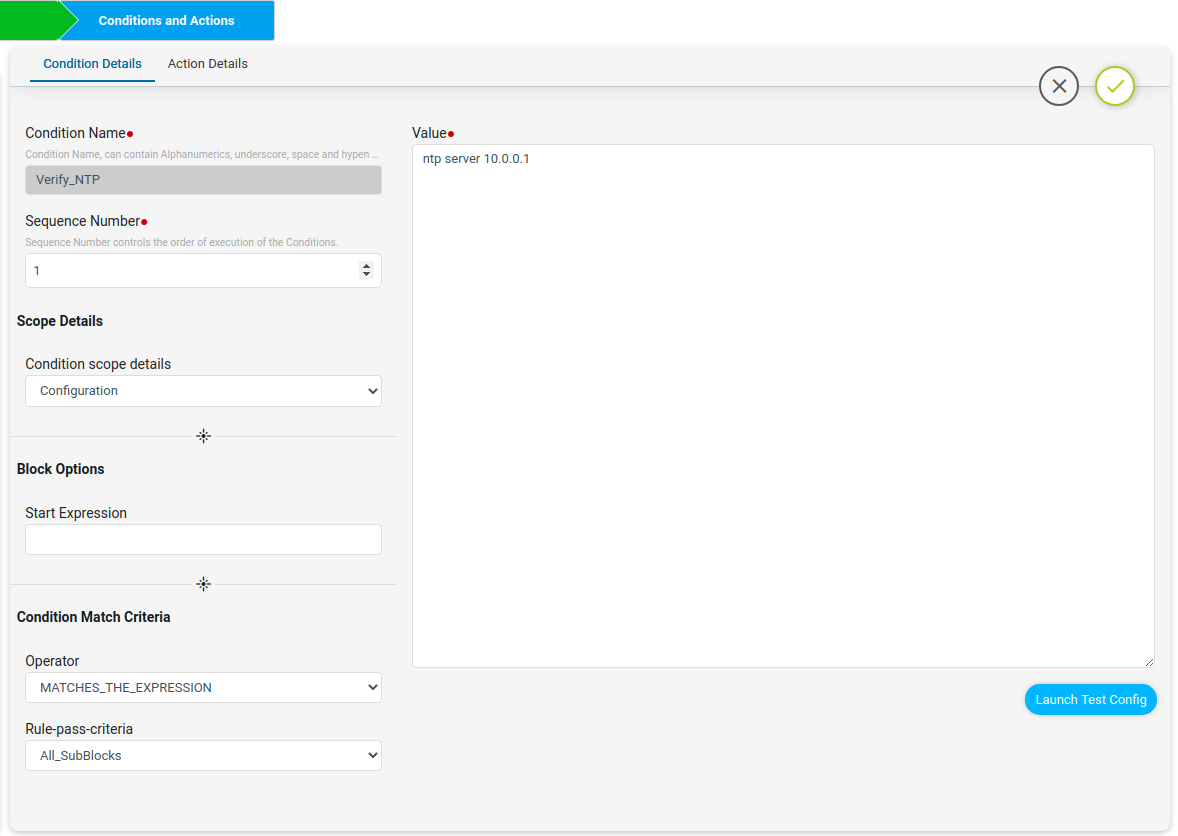

Condition1

The Verify_NTP condition will check if the NTP server config is present in the device or not.

Here Non-Match Action can be done either using the commands in Fix CLI or using the Derive fix cli commands.

-

- Using the Fix CLI user needs to provide the configuration commands manually.

- Using the Derive Fix CLI Commands user needs to select the use_unmatched_block as shown below.

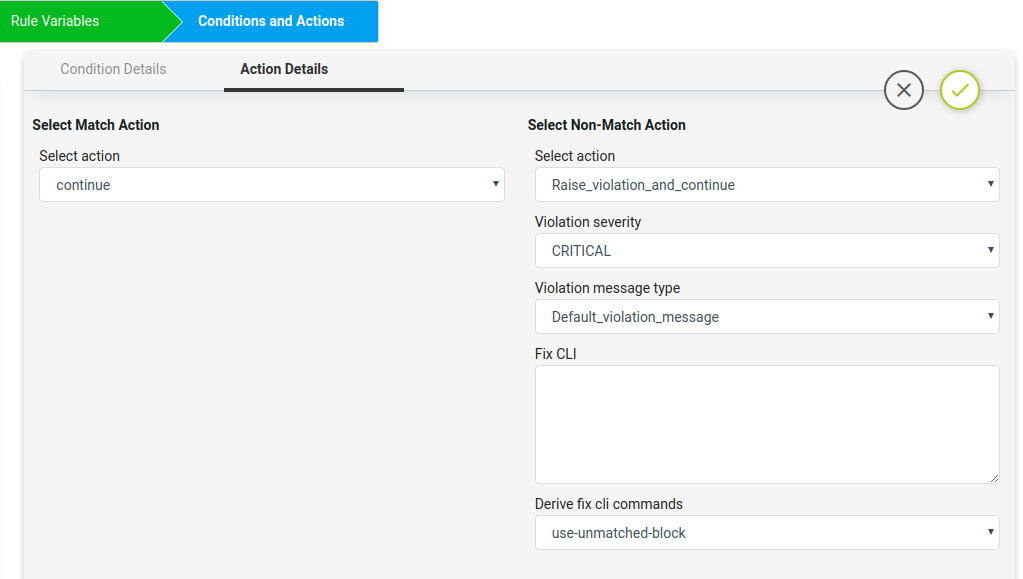

Here on Match Action it will Continue and on Non-Match Action the Derive fix cli commands uses the use-unmatched-block to remediate the device.

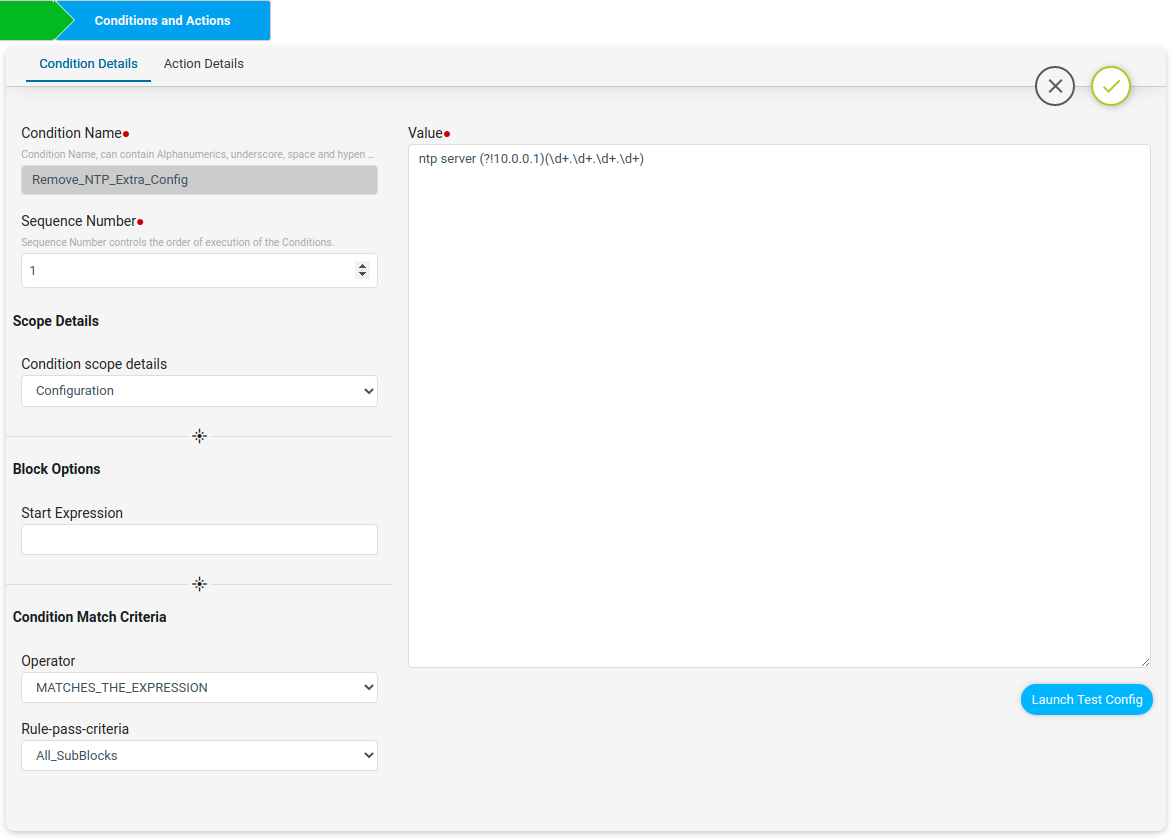

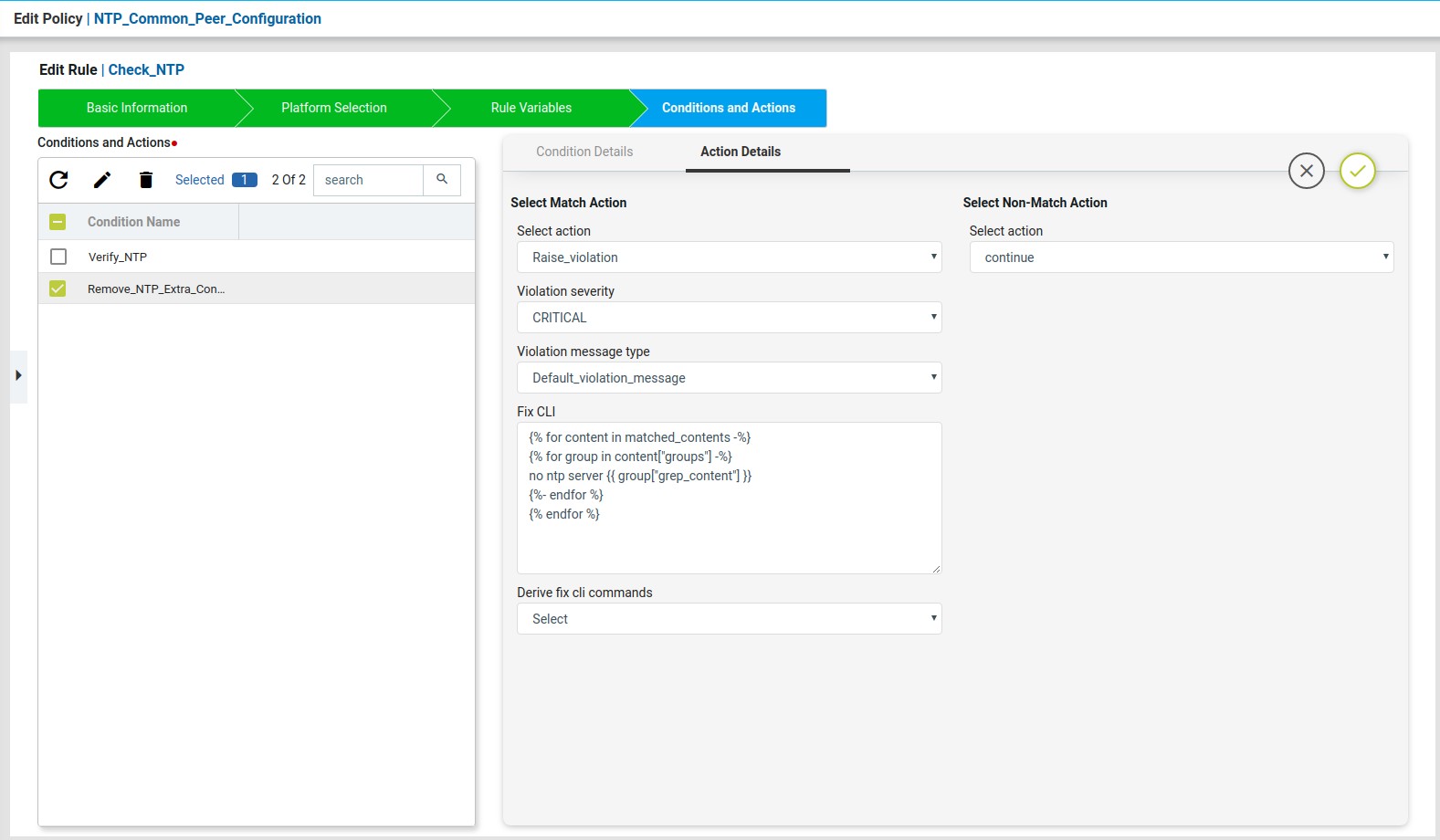

Condition2

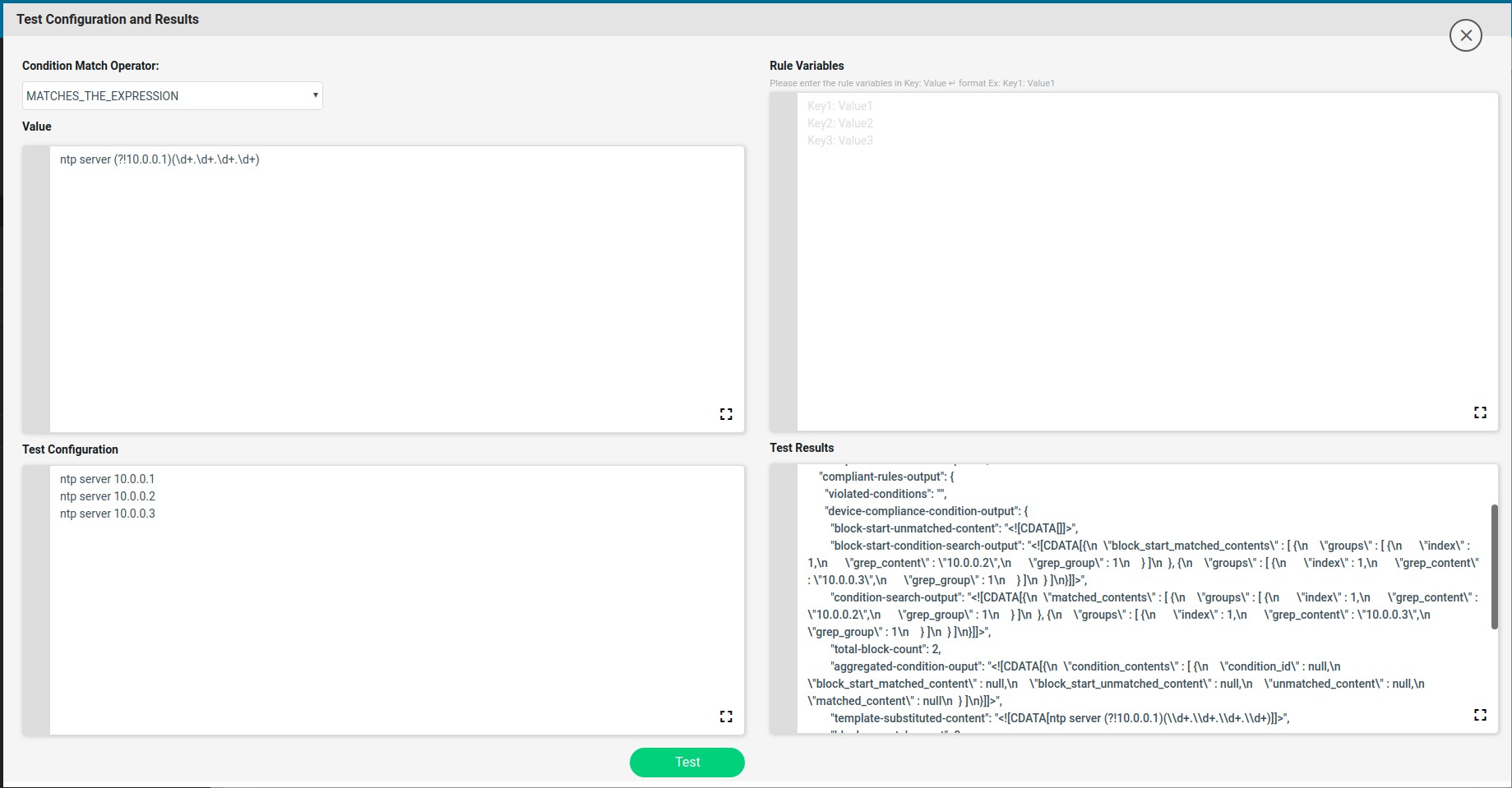

Remove_NTP_Extra_Config condition will use the regex to match and capture the extra NTP server ip configured in the device other than the expected ip.

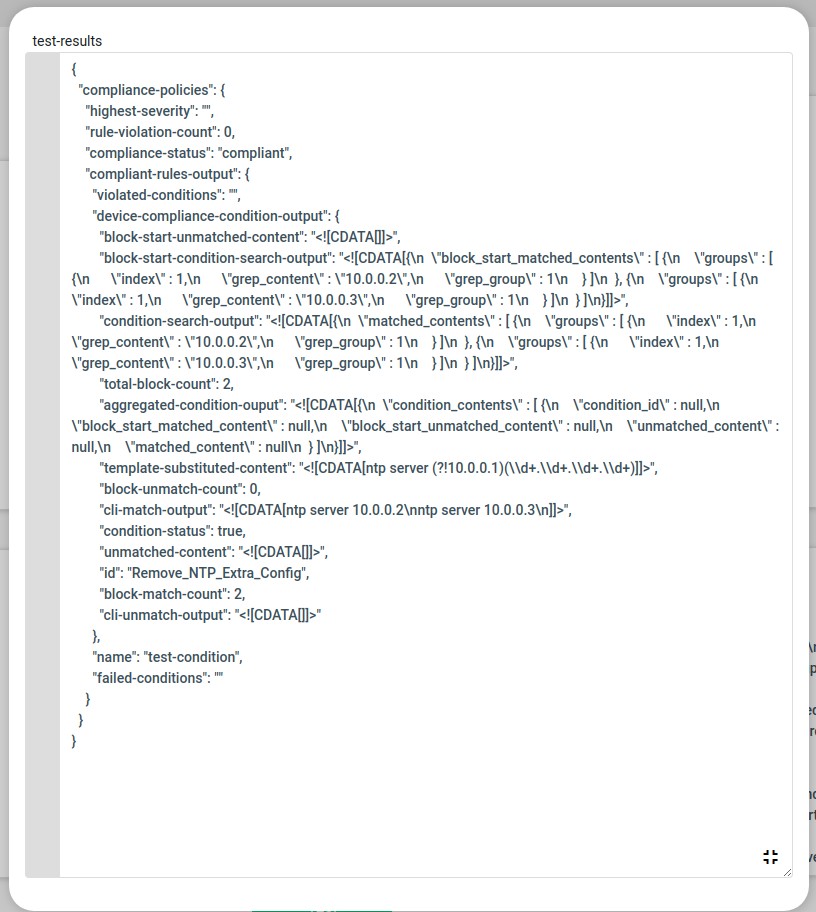

The extra NTP server ip captured will be stored in the backend data structure which is shown in the Test Results tab.

The captured data will be stored in the condition-search-output

On Match Action write a jinja2 configuration template to remove the extra ip’s captured using the above test-result data structure.

Finally if different NTP servers are present on the device, for Non-Compliant device Fix CLI will show up as below

Scenario 3: Interface configuration check

Scenario: All devices in the network should have a specific interface in no shutdown state with auto negotiation enabled. The interface block can have extra configuration commands under it but should be in no shutdown state and auto negotiation enabled.

Platform:

Cisco IOS-XE

Expected Configuration:

interface {{ interface_name }} no shutdown

negotiation auto

Fix-CLI Configuration:

interface {{ interface_name }} no shutdown

negotiation auto

This use case is an interface block configuration having rule variables. In this use case as no shutdown is generally not visible on device running config, we will check whether the interface is in shutdown or not. If shutdown it will remediate to no shutdown.

Steps:

-

- Navigate to Resource Manager > Config Compliance -> Policies

- Click ‘+’ to create new Policy and provide the following information

- Policy Name – Interfaces

- Description

- Select the Policy and Click ‘+’ to create new Rule

- Rule Name – Check_Interfaces

- Navigate to Config Manager > Config Compliance -> Policies -> Rules

- Select a Rule & Provide the following information

- Vendor – Cisco Systems

- OS type – IOSXE

- Device Family – ALL

- Device Type – ALL

- OS Version – ALL

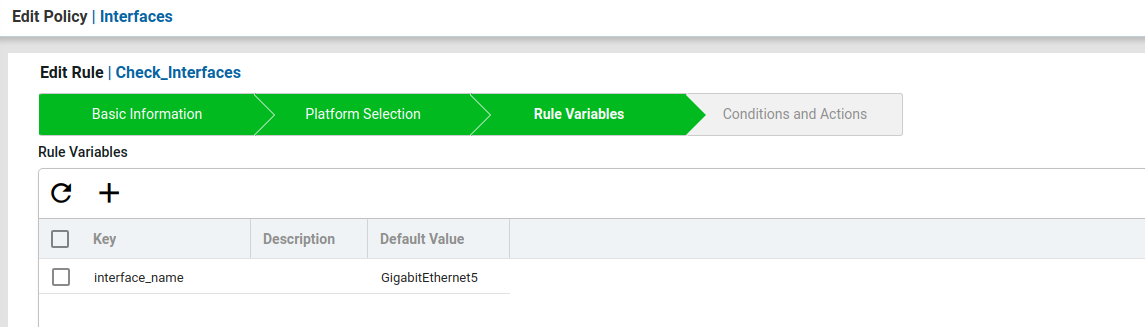

- Now create the Rule variables for this scenario.

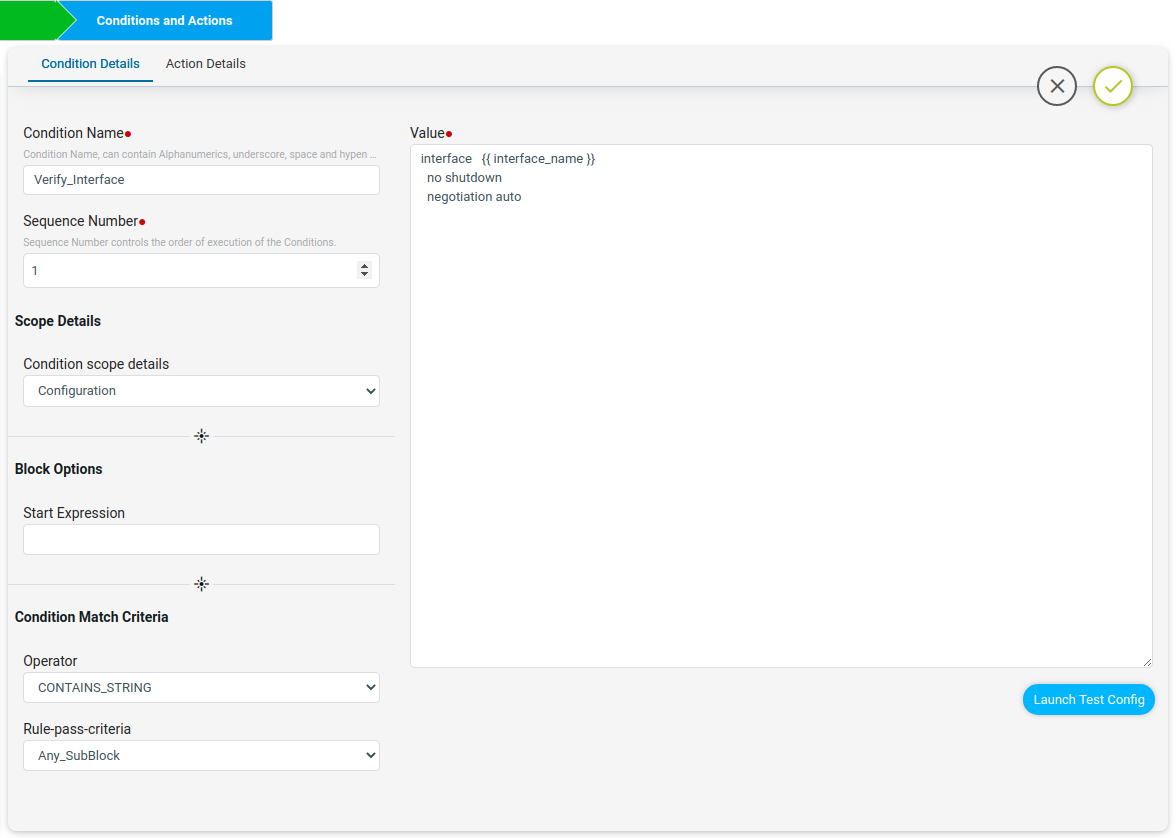

In the policy we will have a jinja rule variable interface_name. Here Verify_Interfaces condition will check if the interface block config is present in the device or not and under that interface if no shutdown and negotiation auto is present.

For Scenario3 Condition Match Operator as CONTAINS_STRING will check whether the device configuration contains condition value or not. If device configuration contains value, the result will be Compliant, else Non-Compliant.

Here on Non-Match Action select Continue and on Match Action add Fix cli commands to remediate on the device

For Non-Compliant devices Fix CLI will show up later-on as below.

For Non-Compliant devices Fix CLI will show up later-on as below.

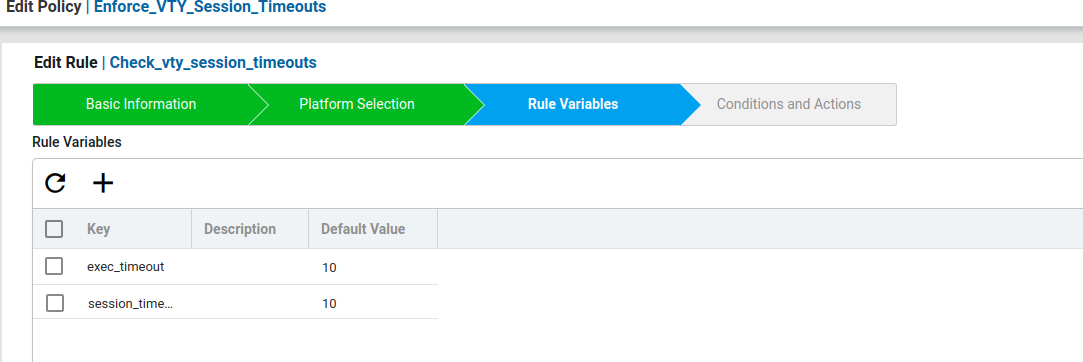

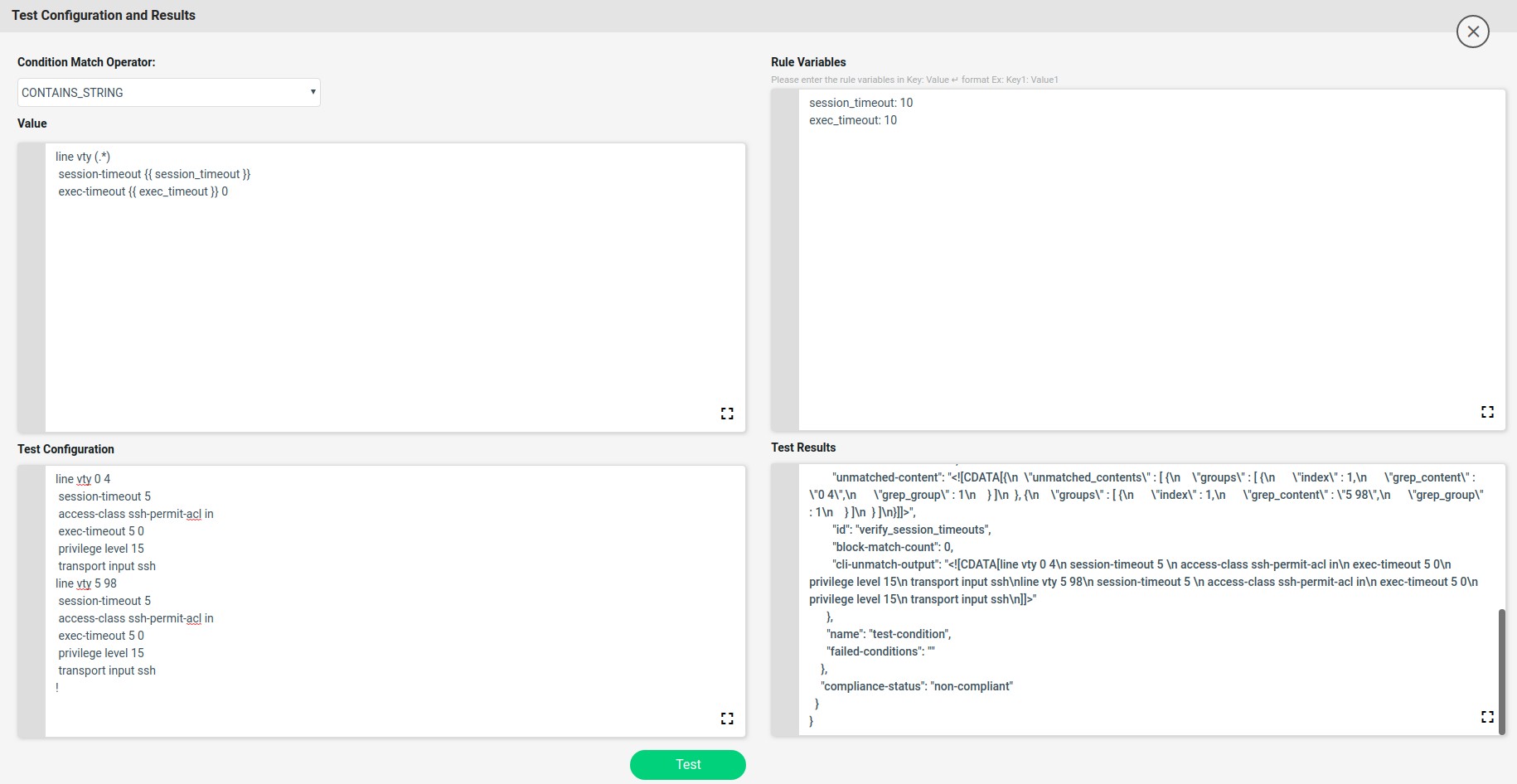

Scenario 4: Enforce VTY Session Timeouts

Scenario: All devices in the network should contain the network admin preferred VTY

session-timeout and exec-timeout on all vty lines. If VTY session-timeout and exec-timeout is not configured on the device or mis-match with the network admin preferred timeouts, ATOM CLI compliance can configure the devices with the user preferred VTY timeouts on all the vty lines.

In this example we are considering the VTY session-timeout and exec-timeout as 10 sec.

Platform:

Cisco IOS-XE

Expected Configuration:

line vty (.*)

session-timeout 10

exec-timeout 10 10

Fix-CLI Configuration:

line vty <>

session-timeout 10

exec-timeout 10 10

This use case is using the regex and rule variables and uses jinja2 template for fix-cli configuration.

Steps:

-

- Navigate to Resource Manager > Config Compliance -> Policies

- Click ‘+’ to create new Policy and provide the following information

- Policy Name – Enforce_VTY_Session_Timeouts

- Description

- Select the Policy and Click ‘+’ to create new Rule

- Rule Name – Check_Enforce_VTY_Session_Timeouts

- Navigate to Resource Manager > Config Compliance -> Policies -> Rules

- Select a Rule & Provide the following information

- Vendor – Cisco Systems

- OS type – IOSXE

- Device Family – ALL

- Device Type – ALL

- OS Version – ALL

- Now create the Rule variables for this scenario.

Here created user defined rule variables vty_exec_timeout and vty_session_timeout with default timeout as 10. These rule variables will be used in the condition value.

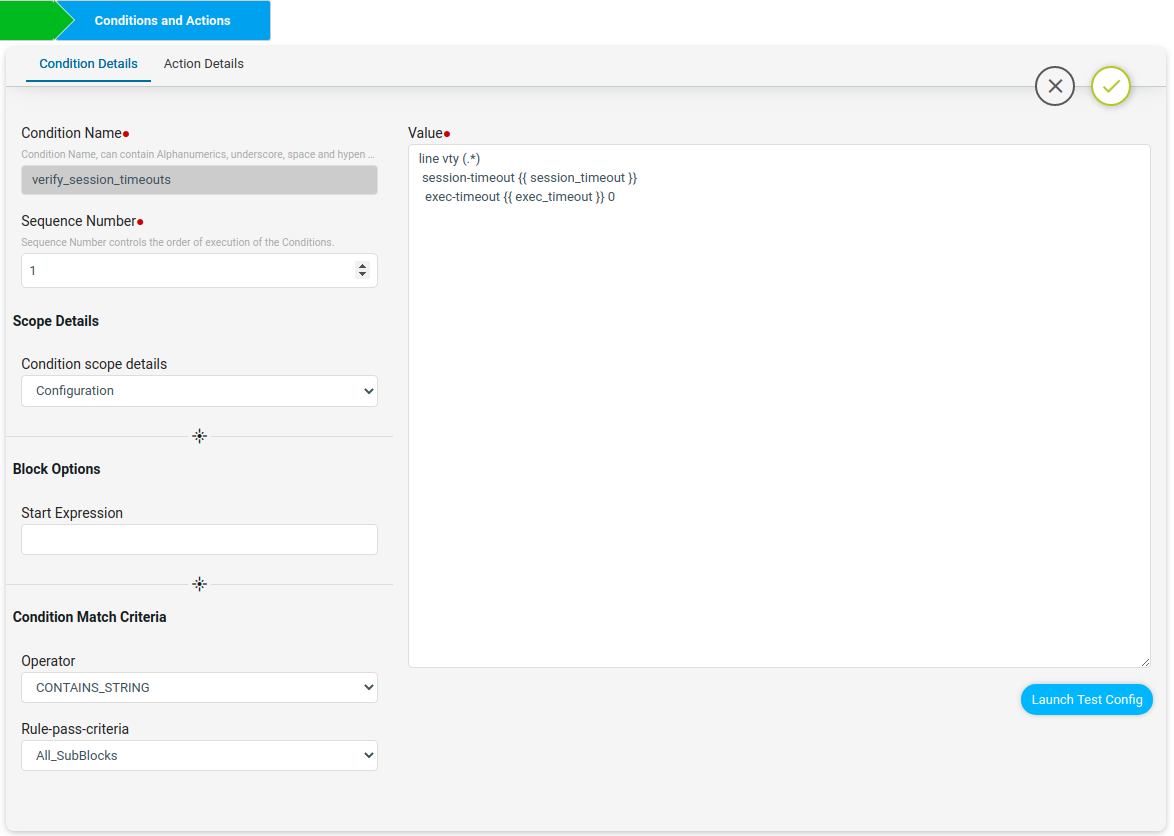

The verify_session_exec_timeouts condition will check whether the device in the network is configured with user preferred VTY timeouts or not.

The verify_session_exec_timeouts condition will check whether the device in the network is configured with user preferred VTY timeouts or not.

Here under Condition Match Criteria the Operator used was CONTAINS_STRING to check for session-timeout and exec-timeout in line vty config.

Here Rule-pass-criteria used All_SubBlocks to check the condition config in all line vty configurations of the device. If all the line vty is matching with the condition then compliant. If any of the line vty is not matching then non-compliant.

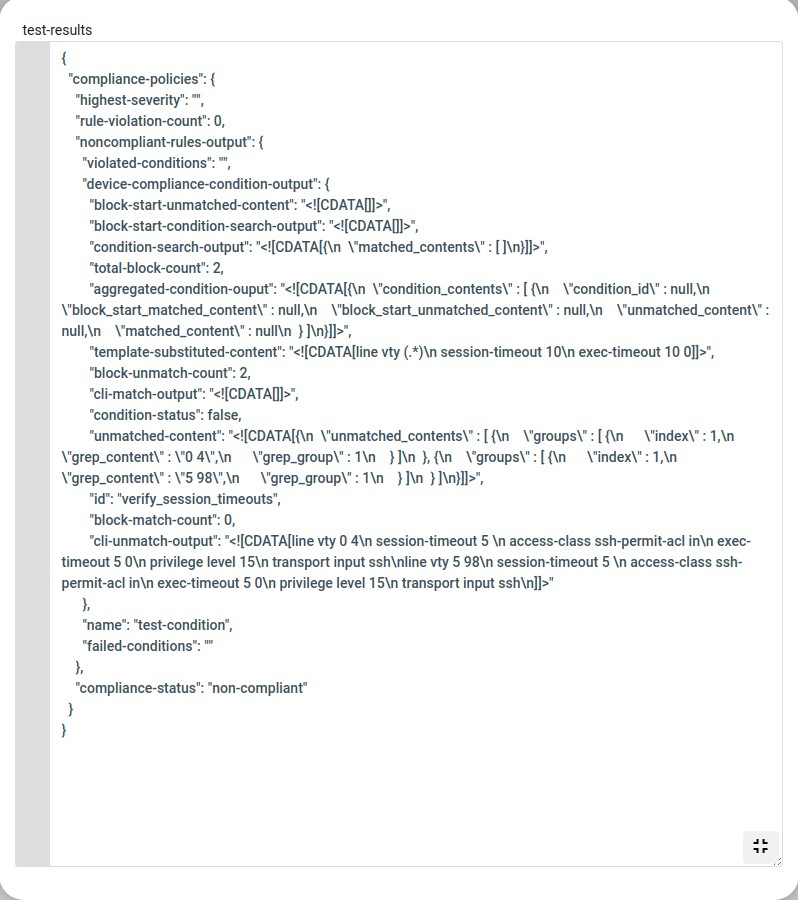

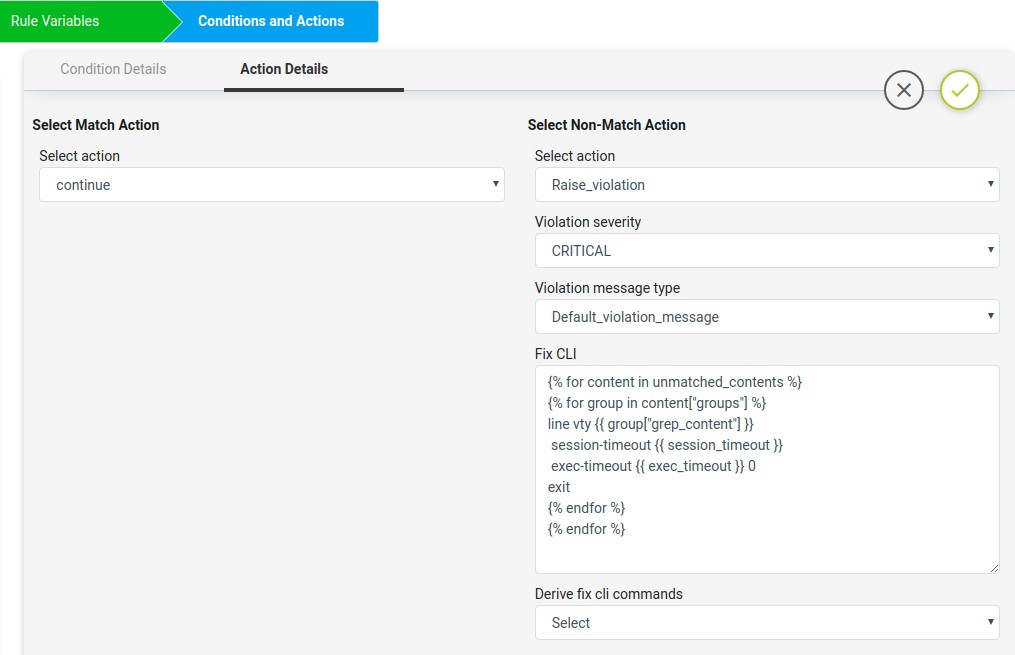

The launch Test Config will check values with the Test configuration and gives the Test Result whether compliant or not.

Here the unmatched line vty will be captured and stored in the backend data structure. The captured data structure maximizes the view shown below.

On Match action will continue and on Non-Match Action fix-cli will use the jinja2 template configuration written based on the above captured data structure.

The Non-compliant device fix-cli configurations derived from above jinja2 snippet will look like below.

The Non-compliant device fix-cli configurations derived from above jinja2 snippet will look like below.

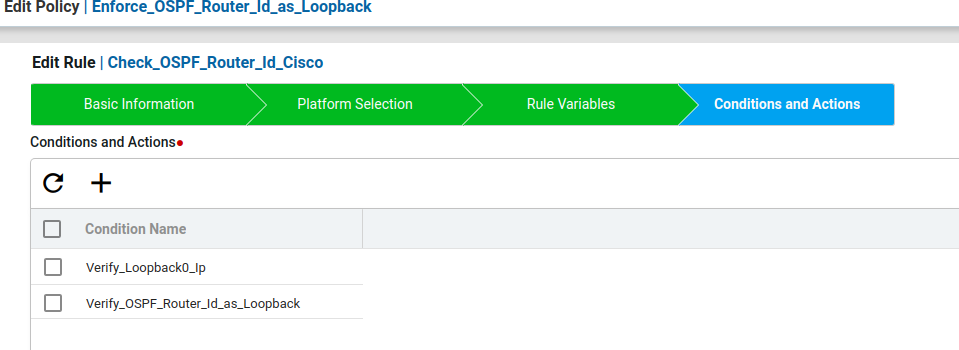

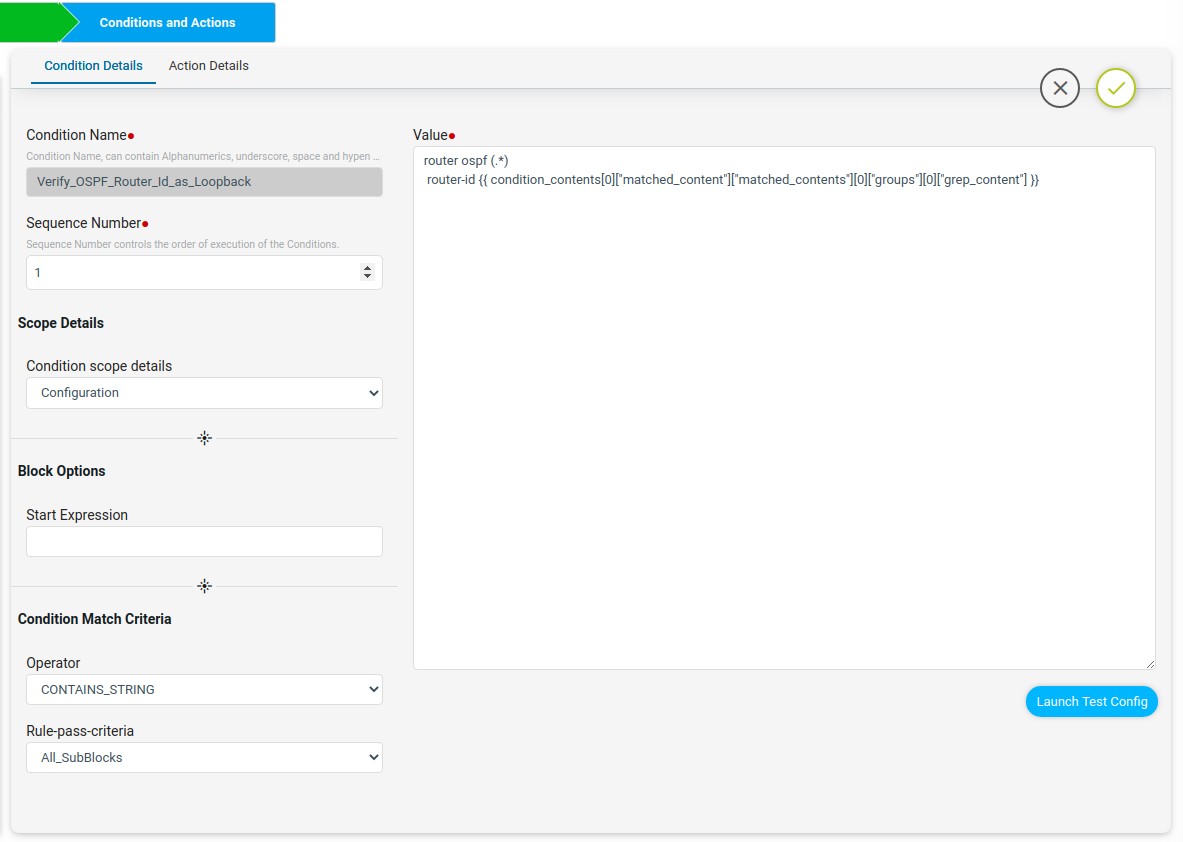

Scenario 5: Enforce OSPF Router Id as Loopback0

Scenario: All devices in the network should contain the OSPF router-id configured with loopback0 ip address. If OSPF router-id is not configured on the device it will configure the OSPF router-id with the value of loopback0 ip address on the devices.

Platform:

Cisco IOS-XE

Expected Configuration:

interface Loopback0

ip address 45.45.45.5 255.255.255.255

!

router ospf 100

router-id 45.45.45.5

Fix-CLI Configuration:

router ospf 100

router-id 45.45.45.5

This use case is using the regex and contains two conditions.

- First condition is to capture and store loopback0 ip address. It will not have a fix-cli configuration as the intention of the condition is to capture loopback0 ip address. Fix-cli Configuration :

<< no fix cli configuration >>

- Second condition will check whether the OSPF router id is the same as the first condition’s captured loopback0 ip address or not. if not matching then it will configure the OSPF router id with loopback0.

Fix-cli Configuration : router ospf 100

router-id 45.45.45.5

Steps:

-

- Navigate to Resource Manager > Config Compliance -> Policies

- Click ‘+’ to create new Policy and provide the following information

- Policy Name – Enforce_OSPF_Router_Id_as_Loopback

- Description

- Select the Policy and Click ‘+’ to create new Rule

- Rule Name – Check_OSPF_Router_Id_Cisco

- Navigate to Resource Manager > Config Compliance -> Policies -> Rules

- Select a Rule & Provide the following information

- Vendor – Cisco Systems

- OS type – IOSXE

- Device Family – ALL

- Device Type – ALL

- OS Version – ALL

- Rule variables are not required for this scenario.

- Now fill the Conditions and Actions

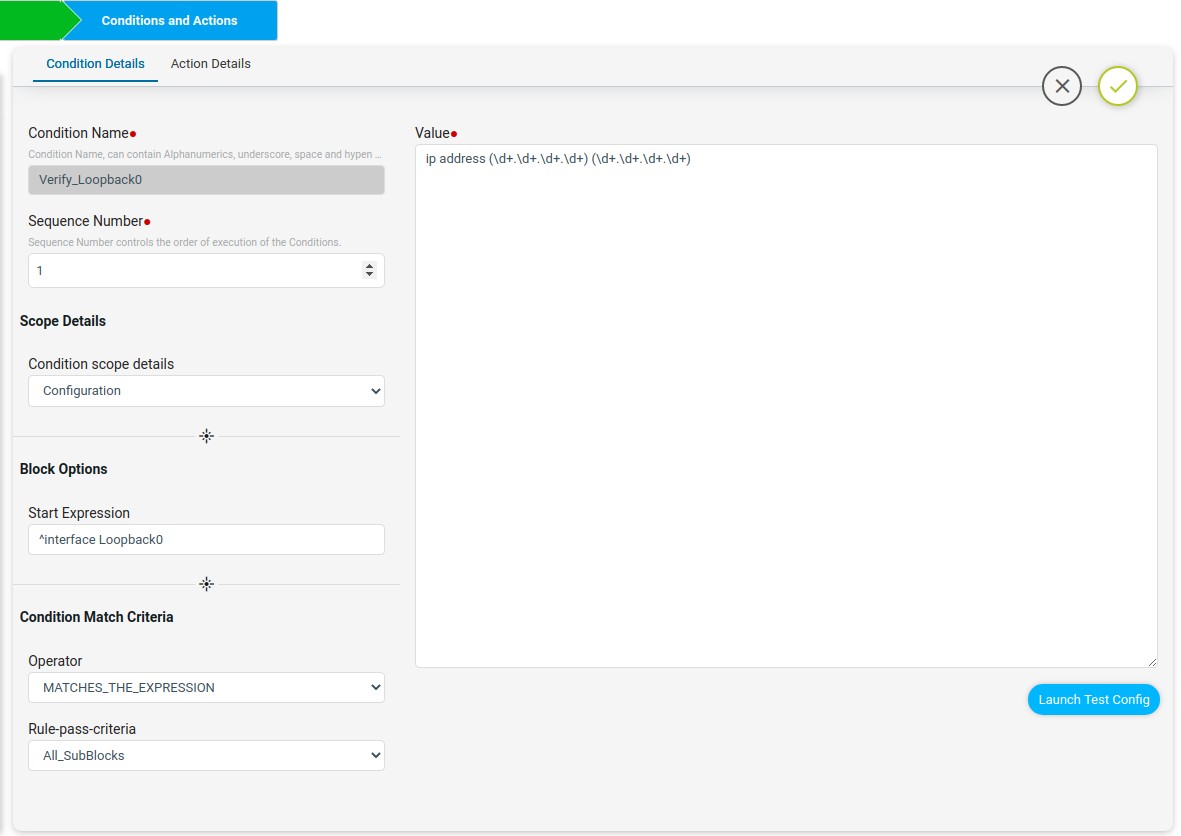

Condition1

Another way of writing the above block configuration using the Block Options Start Expression is shown below.

The first line “interface Loopback0” can be written in the start Expression with regex symbol ^ to indicate the block starts with interface Loopback0. The remaining configuration lines can be written in value.

The first line “interface Loopback0” can be written in the start Expression with regex symbol ^ to indicate the block starts with interface Loopback0. The remaining configuration lines can be written in value.

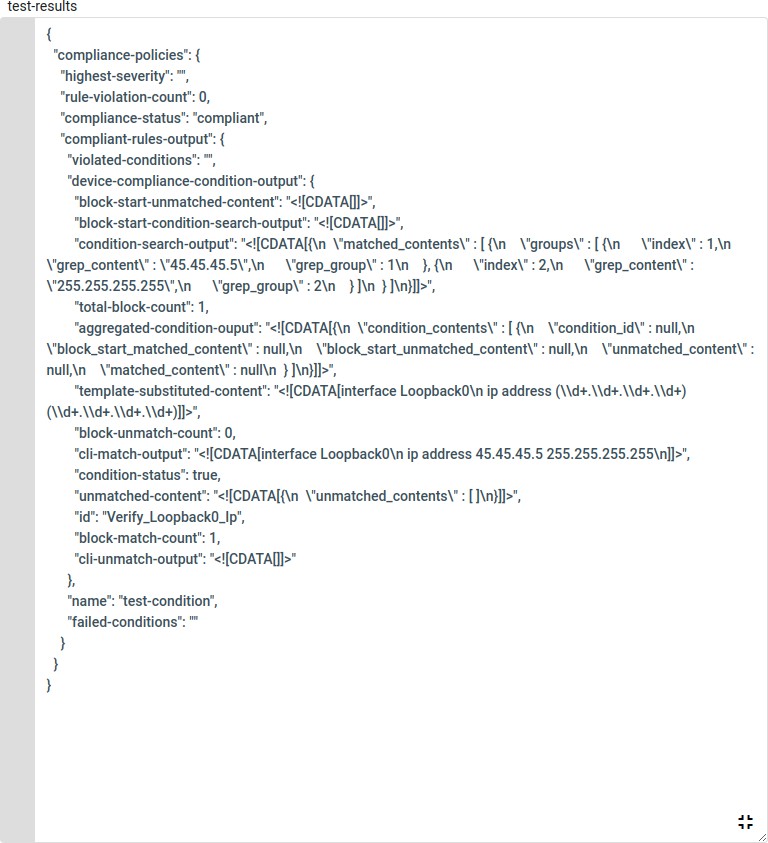

The launch test config will check the condition value with the Test configuration and will give the Test Result. Here the captured loopback0 ip address will be stored in the backend data structure as shown below.

The Test result in maximize view is shown below. This output will be used in condition2.

For Non-Match Action violation is being raised and fix-cli is having no commands as this condition is to capture the loopback0 ip.

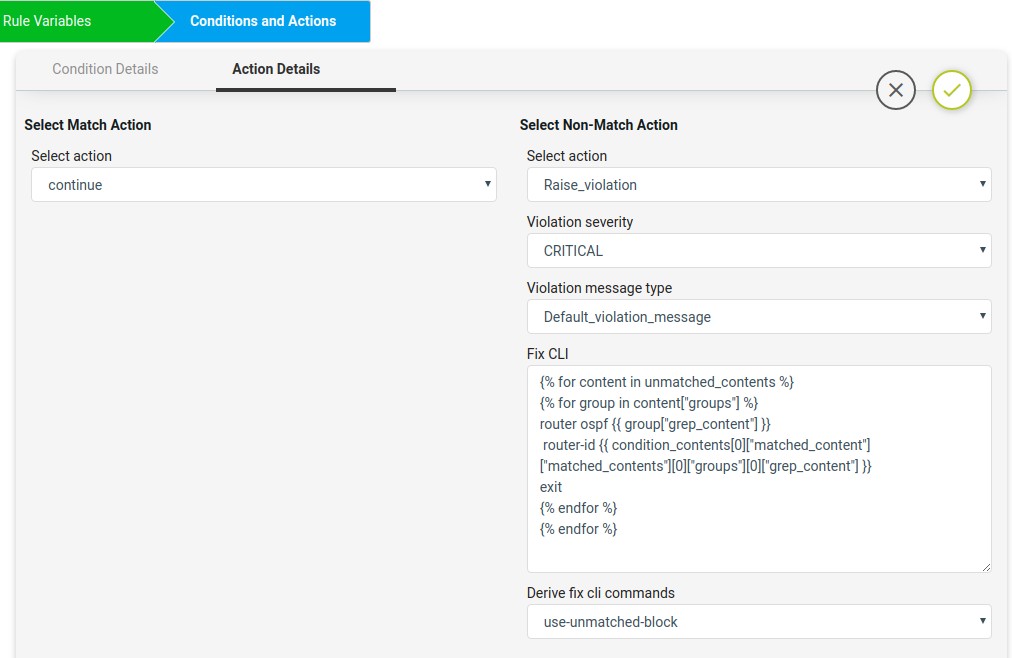

Condition2

The Verify_OSPF_Router_Id_as_Loopback condition will check whether the OSPF router id is the same as the first condtion’s captured loopback0 ip address or not. if not matching then in fix-cli it will configure the OSPF router id with loopback0.

On Match Action it will continue. On Non-Match Action it will use the jinja2 template configuration in fix-cli to configure the OSPF router id with loopback0 ip.

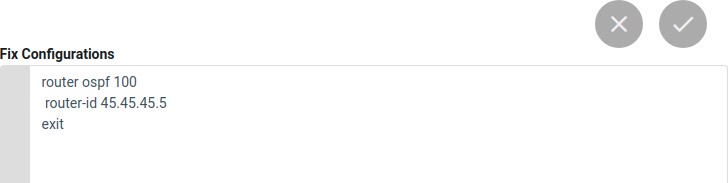

The Non-compliant device fix-cli configurations from the jinja2 template configuration is given below.

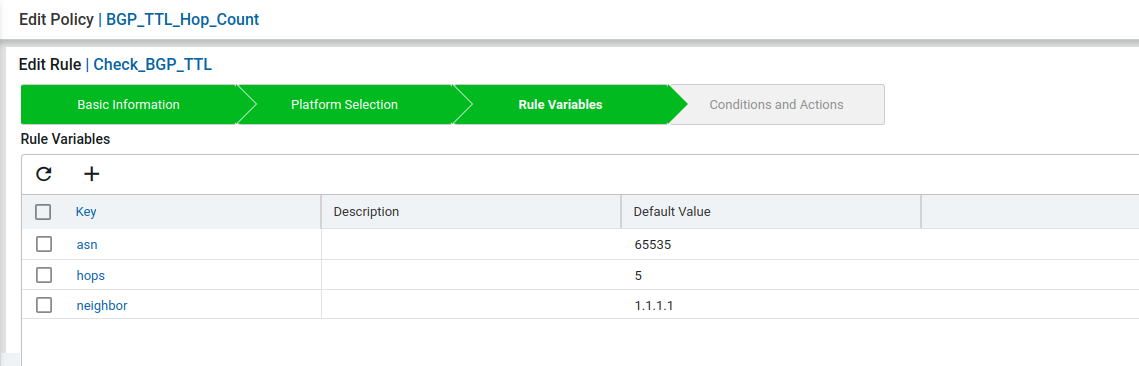

Scenario 6: BGP TTL Hop-count

Scenario: All devices in the network should contain the network admin preferred BGP

ttl-security hops. If hops is not configured on the device or mis-match with the network admin preferred ttl-security hops, ATOM CLI compliance can configure the devices with the user preferred hops.

In this example we are considering the ttl-security hops as 5.

Platform:

Cisco IOS-XE

Expected Configuration:

router bgp 65535

bgp log-neighbor-changes neighbor 2.3.2.6 ttl-security hops 5

Fix-CLI Configuration:

router bgp 65535

bgp log-neighbor-changes neighbor 2.3.2.6 ttl-security hops 5

This use case is using the regex and rule variables and contains two conditions.

- First condition is to match the block. It will not have a fix-cli configuration as the intention of the condition is to match the block.

Fix-cli Configuration :

<< no fix cli configuration >>

- Second condition will check whether the BGP ttl-security hops is in the first condition’s matched block or not. if not matching in the block then it will configure the BGP hops. Fix-cli Configuration :

router bgp 65535

neighbor 2.3.2.6 ttl-security hops 5

Steps:

-

- Navigate to Resource Manager > Config Compliance -> Policies

- Click ‘+’ to create new Policy and provide the following information

- Policy Name – BGP_TTL_Hop_Count

- Description

- Select the Policy and Click ‘+’ to create new Rule

- Rule Name – Check_BGP_TTL

- Navigate to Resource Manager > Config Compliance -> Policies -> Rules

- Select a Rule & Provide the following information

- Vendor – Cisco Systems

- OS type – IOSXE

- Device Family – ALL

- Device Type – ALL

- OS Version – ALL

- Now create the Rule variables for this scenario.

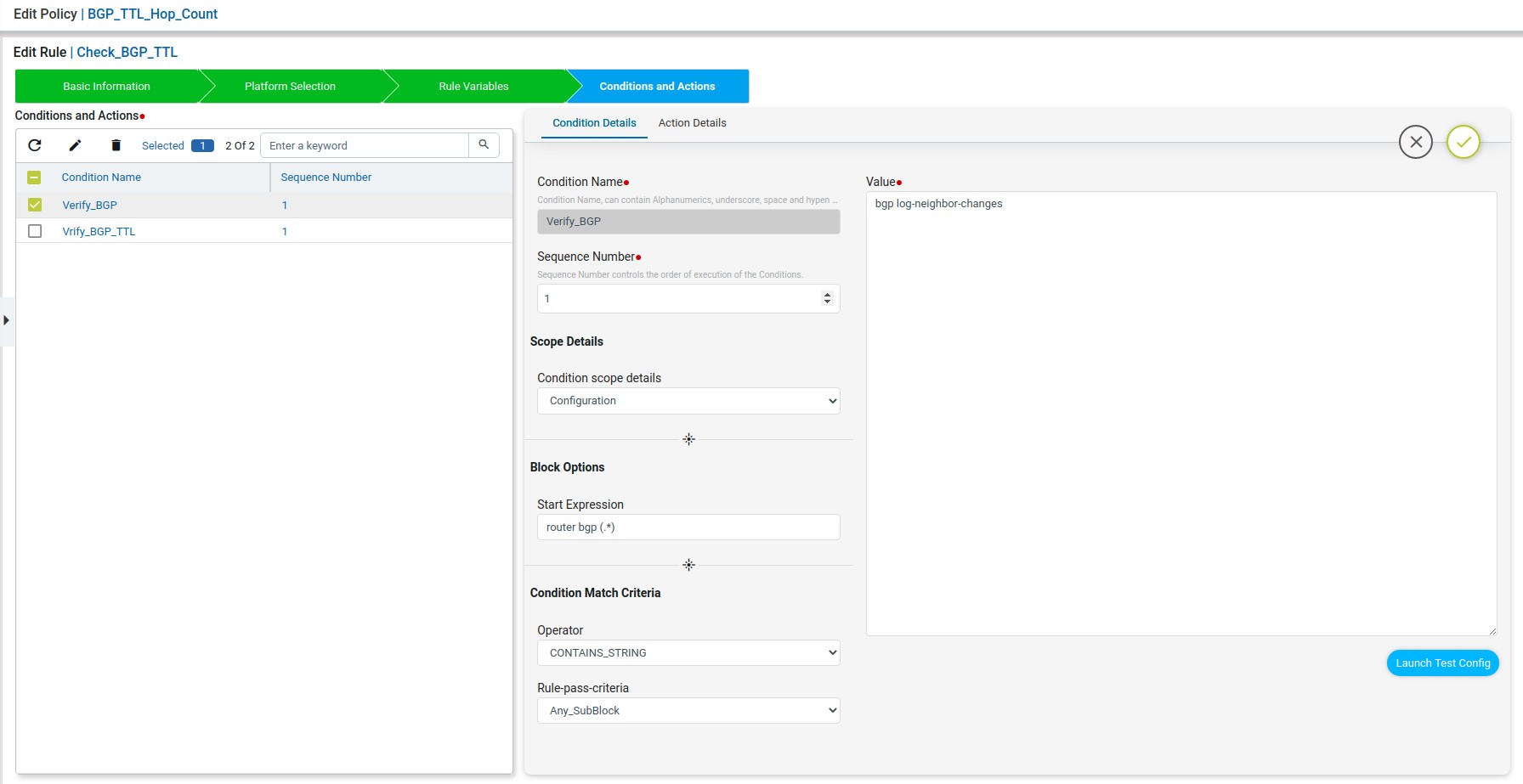

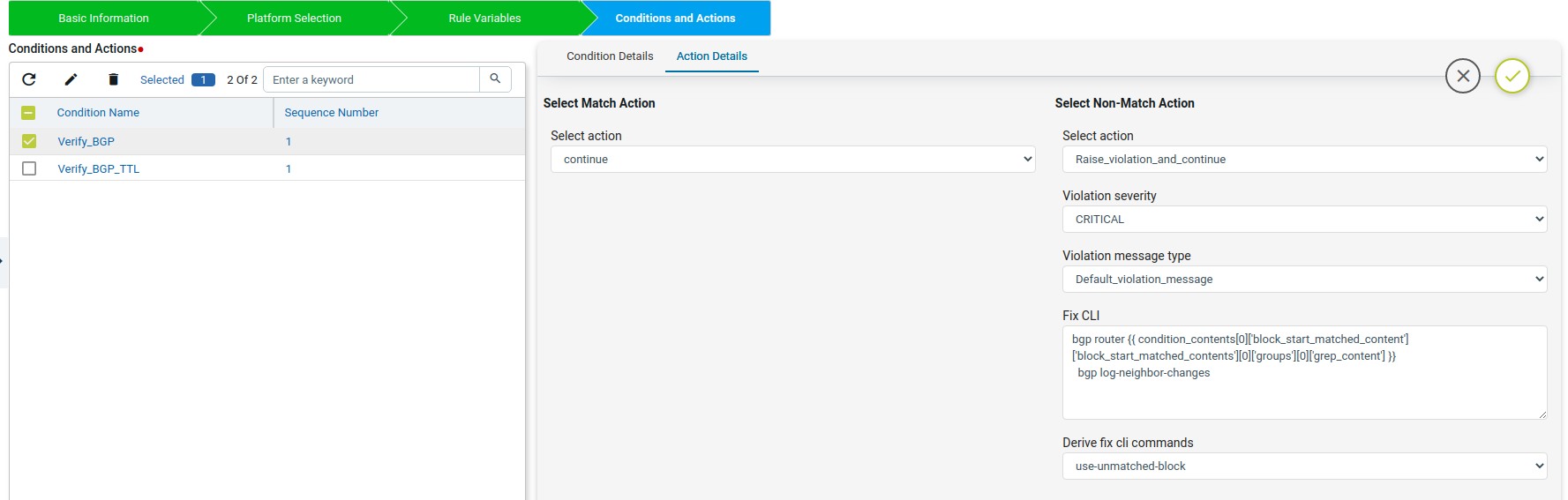

Condition1

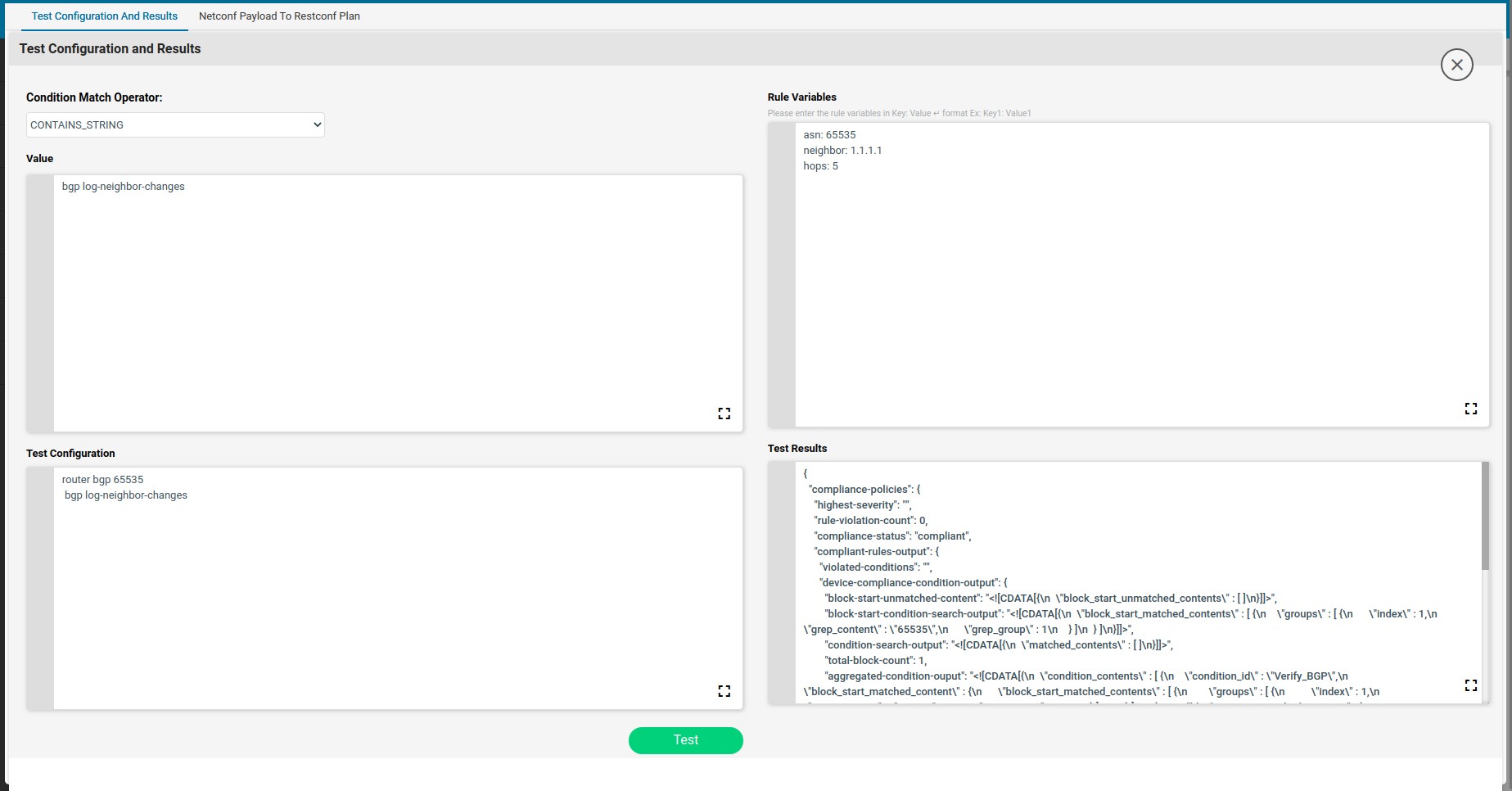

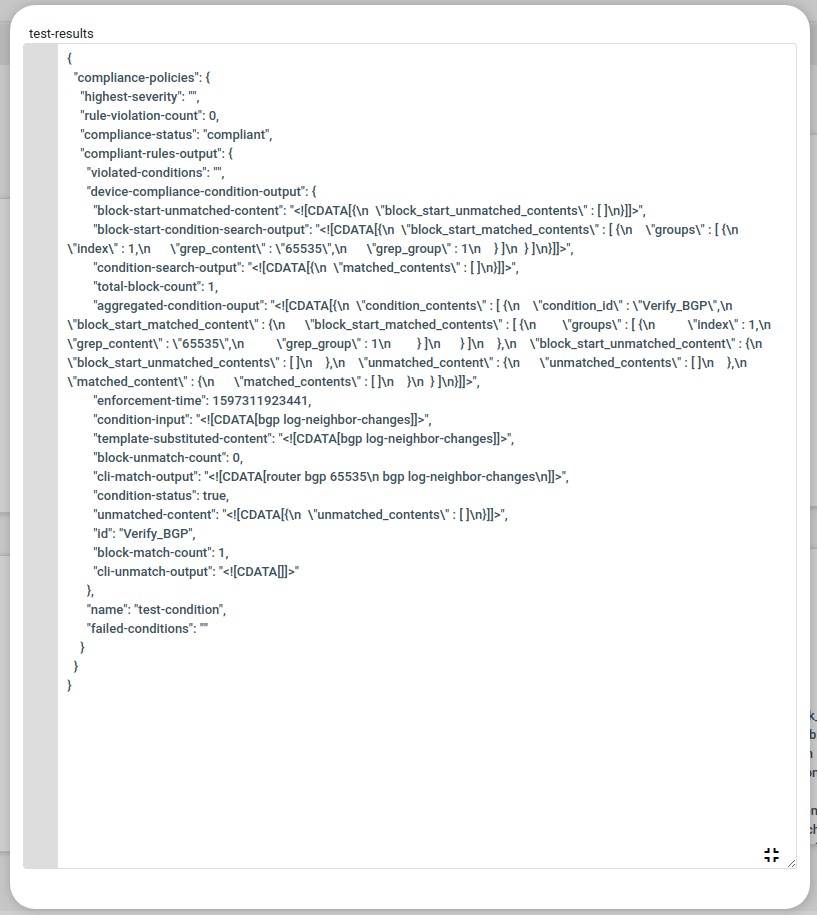

The first line “router bgp (.*)” to be written in the start Expression with regex to indicate the block starts with router bgp. The remaining configuration lines can be written in value.

On Match Action execution continues to the next condition. On Non-Match Action it will raise a violation and continue next condition. The “Fix-CLI” for the condition was written based on the test results obtained from “Launch Test Config”.

When the start Expression is used the regex captured data will be stored in “condition_contents” of “aggregated-condition-ouput” in test results.

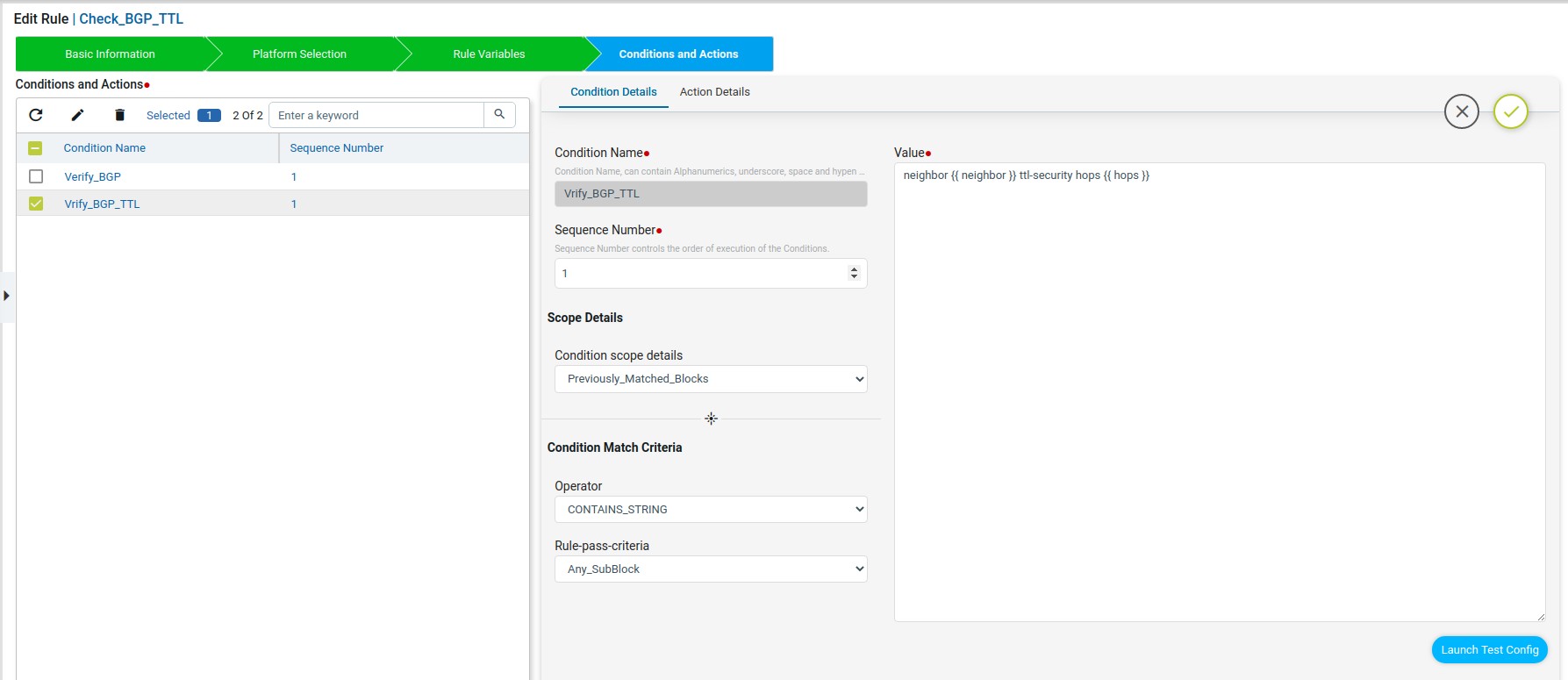

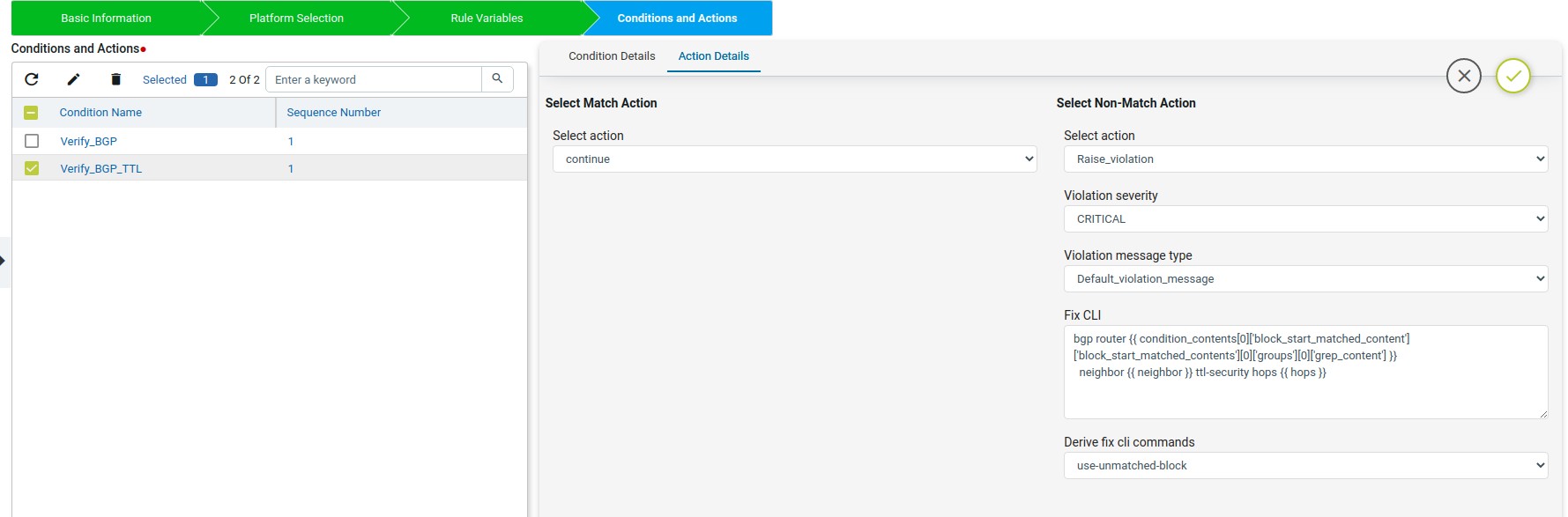

Condition2

The Verify_BGP_TTL condition will check whether the router bgp block config matched in the previous condition has the ttl-security hops or not. if not matching then in fix-cli it will configure the ttl-security hops.

This condition uses the condition scope details as Previously_Matched_Blocks to check on previous condition matched block.

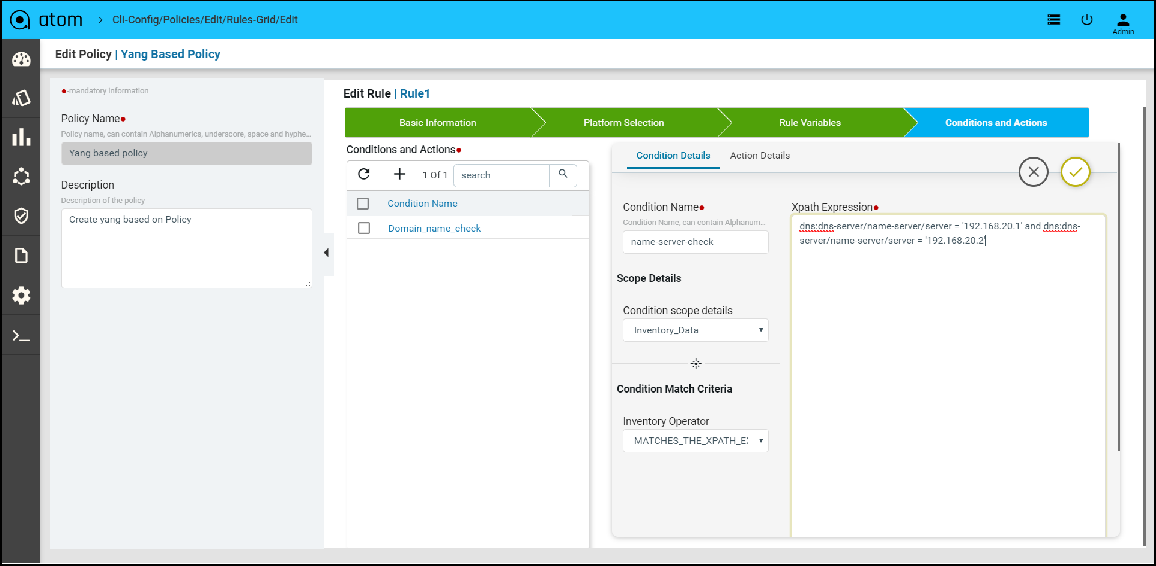

YANG Compliance

Note: In order to use Yang Compliance make sure that the config-snapshot is provided in the Credential profile, which lets ATOM to parse the configuration and store it. For more information on Credential profile please refer to credential profile section in ATOM User guide.

For Yang based Configuration Compliance, make sure to select the option of Inventory_Data for Condition scope during Compliance Policy creation. This gives two ways of defining the Condition Match Criteria

-

- Xpath Expressions

- XML Template Payload

Policy creation with Xpath Expressions

-

- Within Condition Match Criteria select “Matches_the_Xpath_Expression”

/”Doesn’t_Matches_the_Xpath_Expression” option for Inventory Operator field

-

- The Fix Mutation Payload is in Netconf xml RPC format written using the XML template details for the yang parsed entities.

Navigate to Resource Manager > Config Compliance > Policy > + (Add Policies)

Few examples

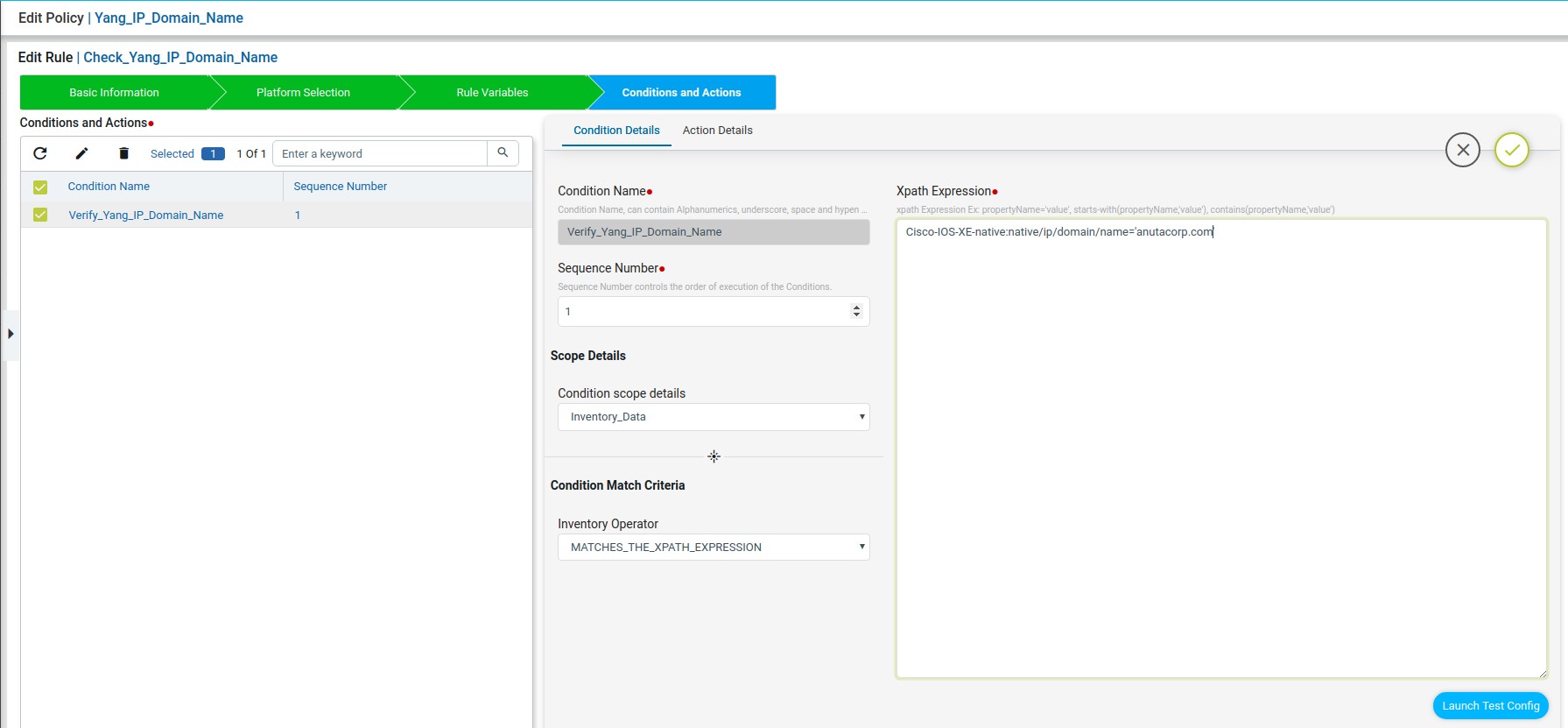

Scenario 7: IP Domain Name

In this example we are looking for the domain name as anutacorp.com across all devices in the lab using X-path expression.

Xpath Expression:

Fix Mutation Payload:

Note: we can use ATOM_DEVICE_ID or inputDeviceId for substituting the deviceId.

Defining Xpath Expression

Defining Fix Payload

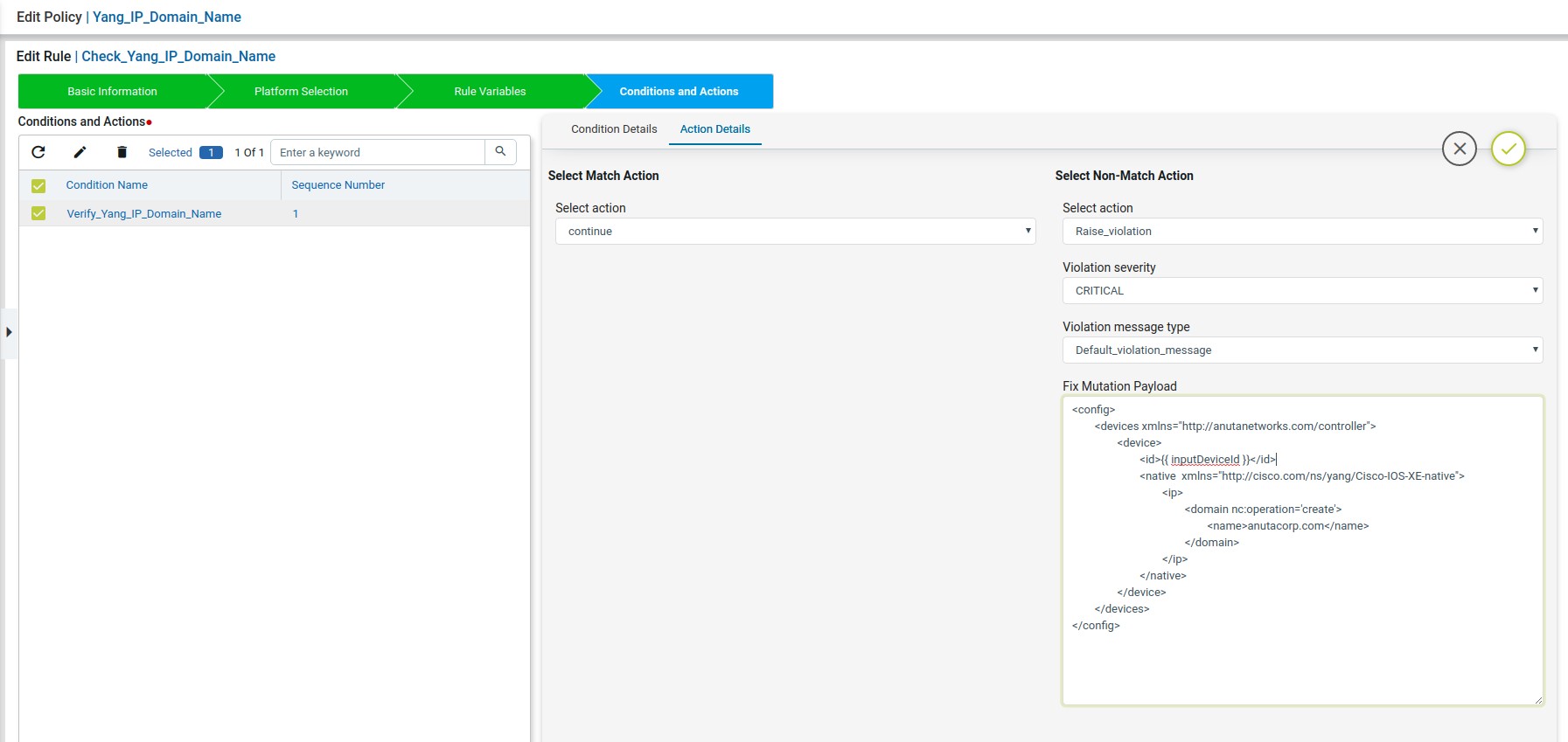

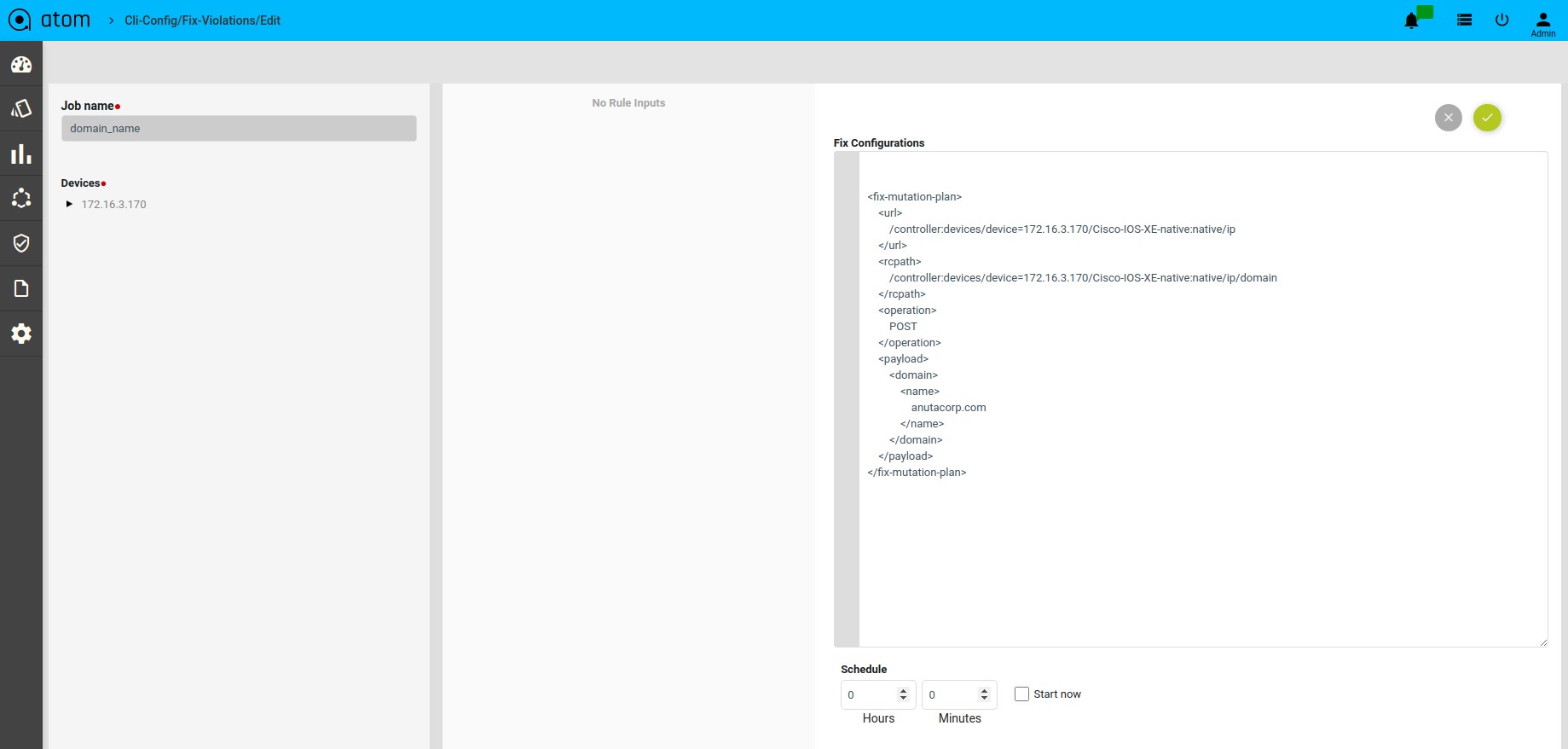

Fix Configuration Display in Remediation

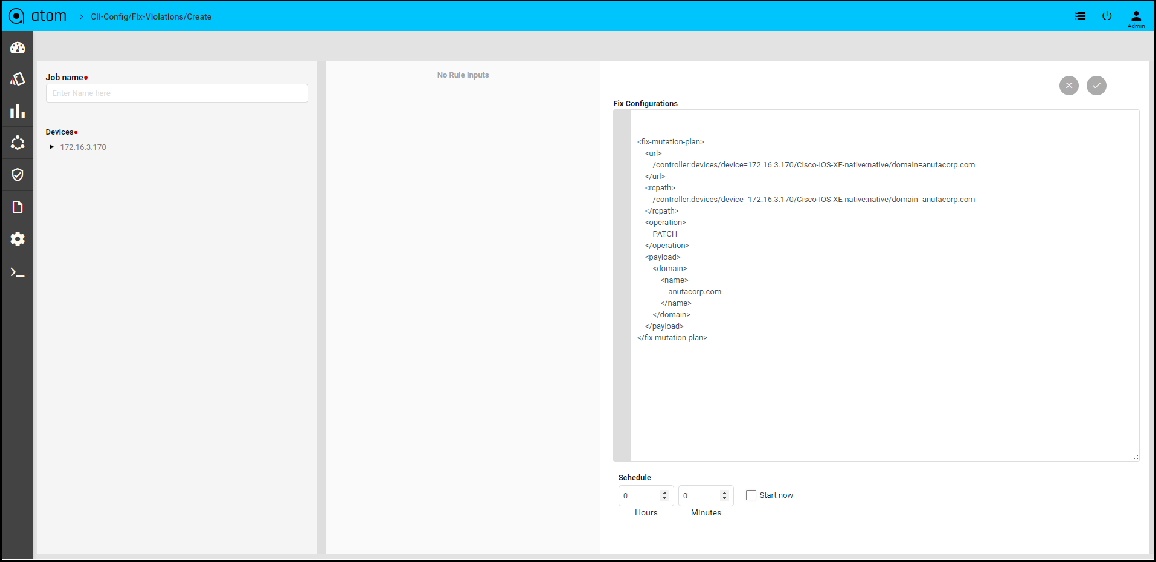

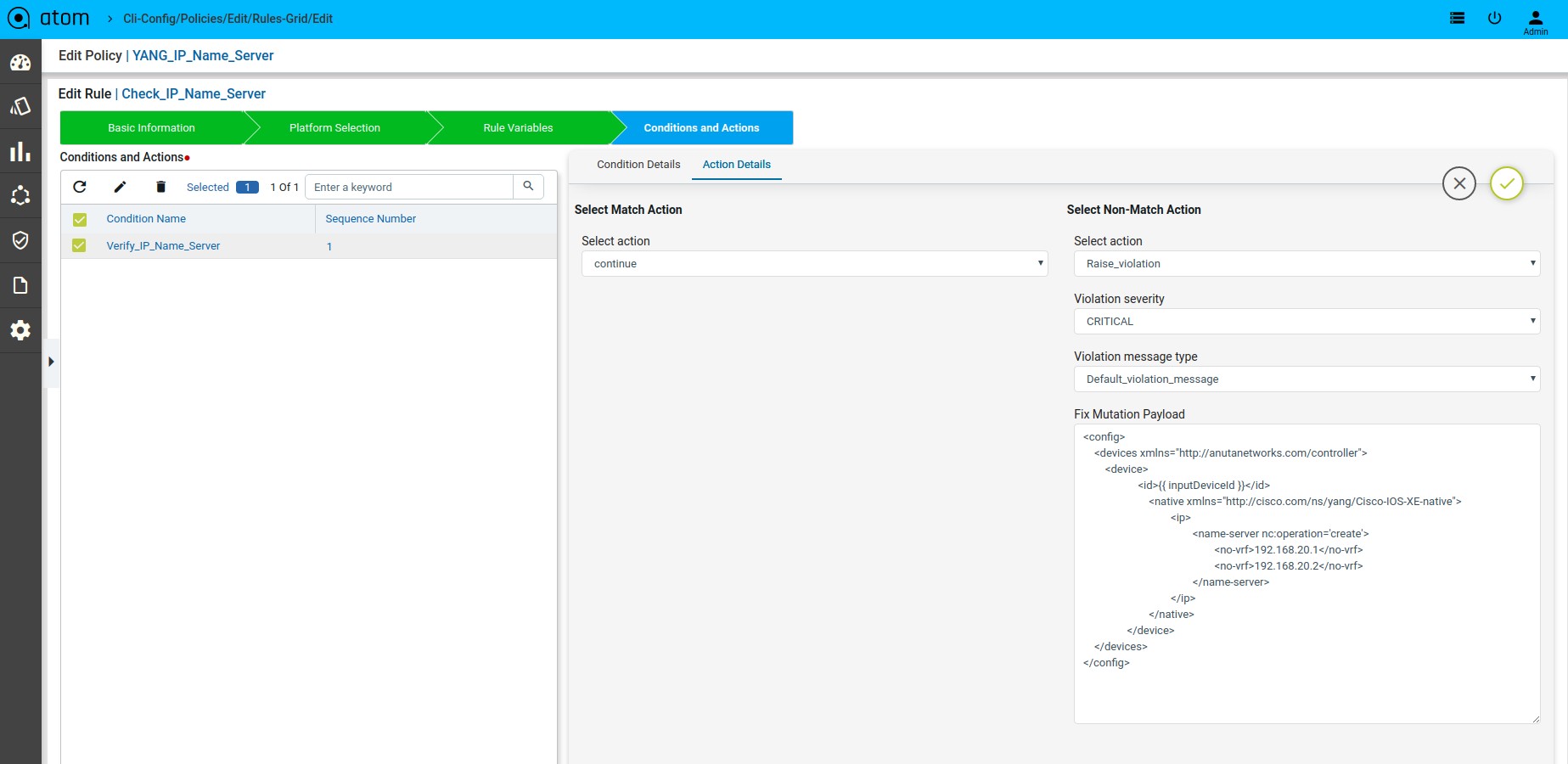

Scenario 8: IP Name-server check

Xpath Expression:

Fix Mutation Payload:

Defining Xpath Expression

Defining Fix Payload

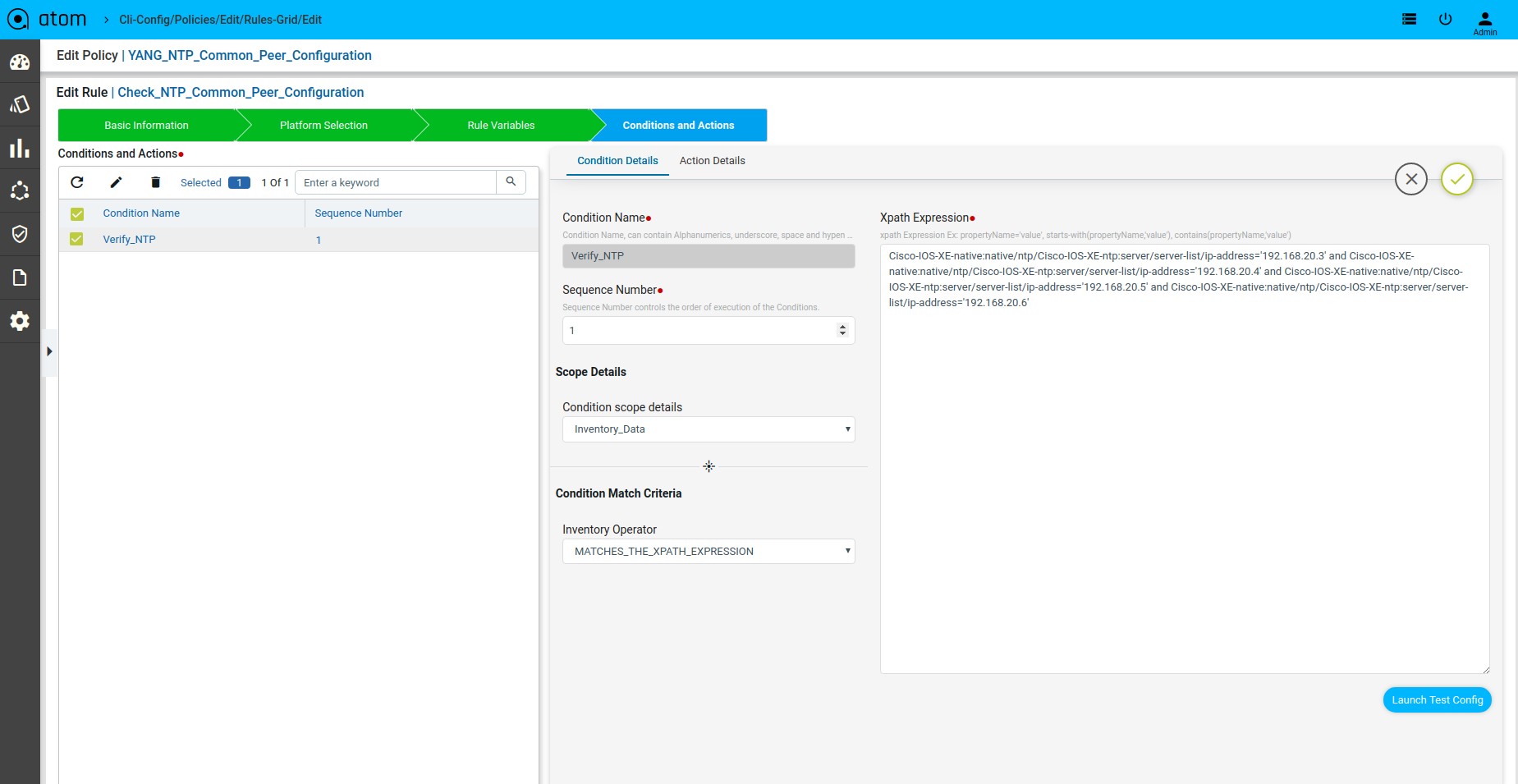

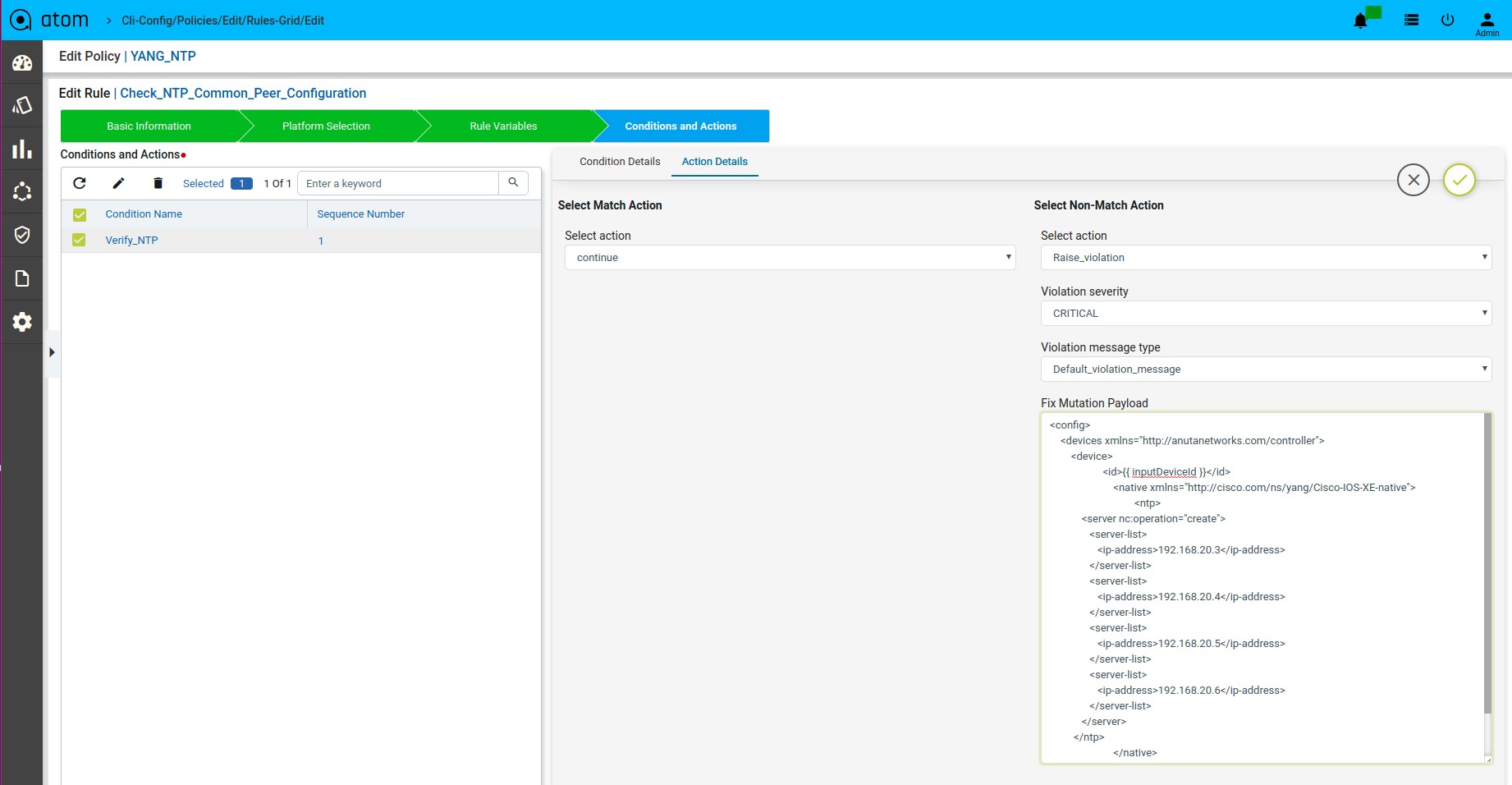

Scenario 9 : NTP server Check

Xpath Expression:

Fix Mutation Payload:

Defining Xpath Expression

Defining Fix Payload

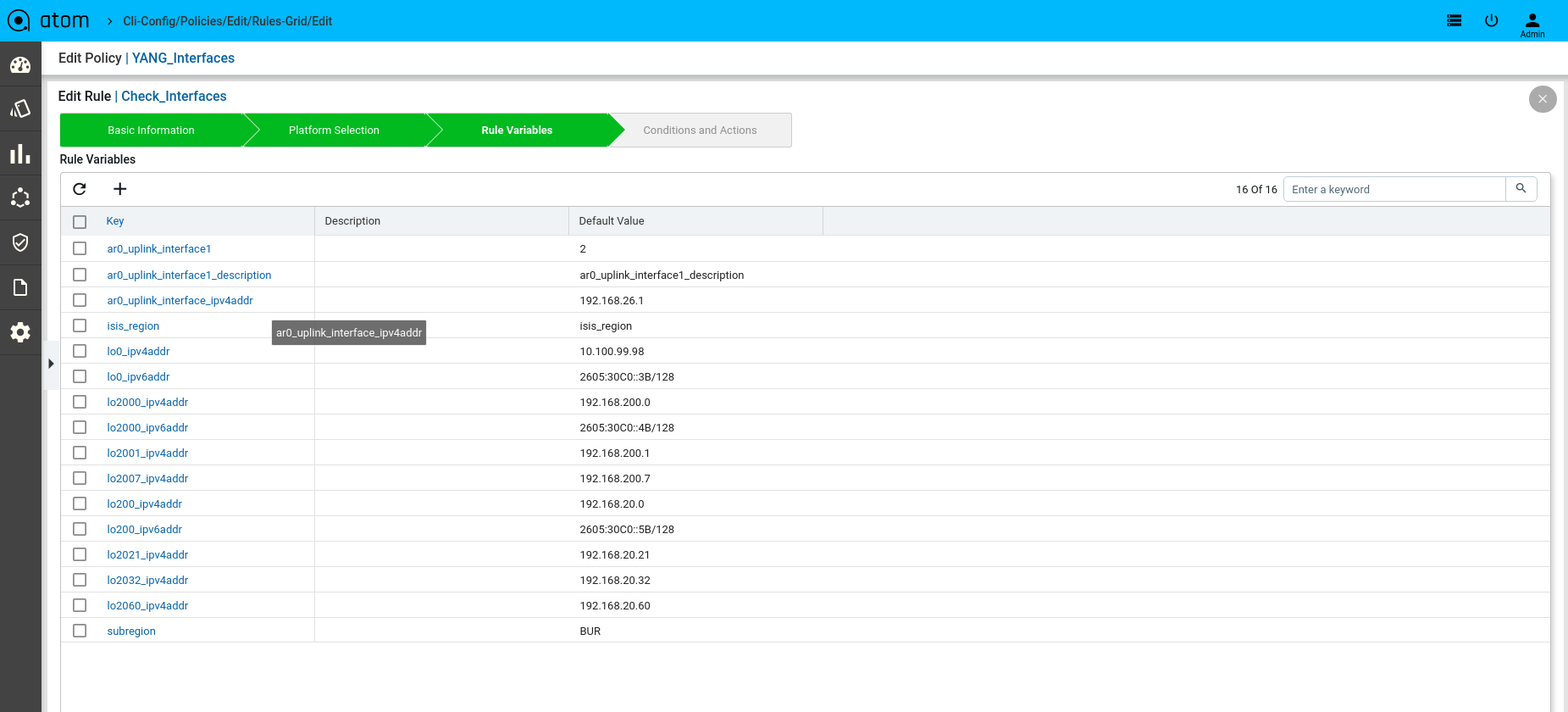

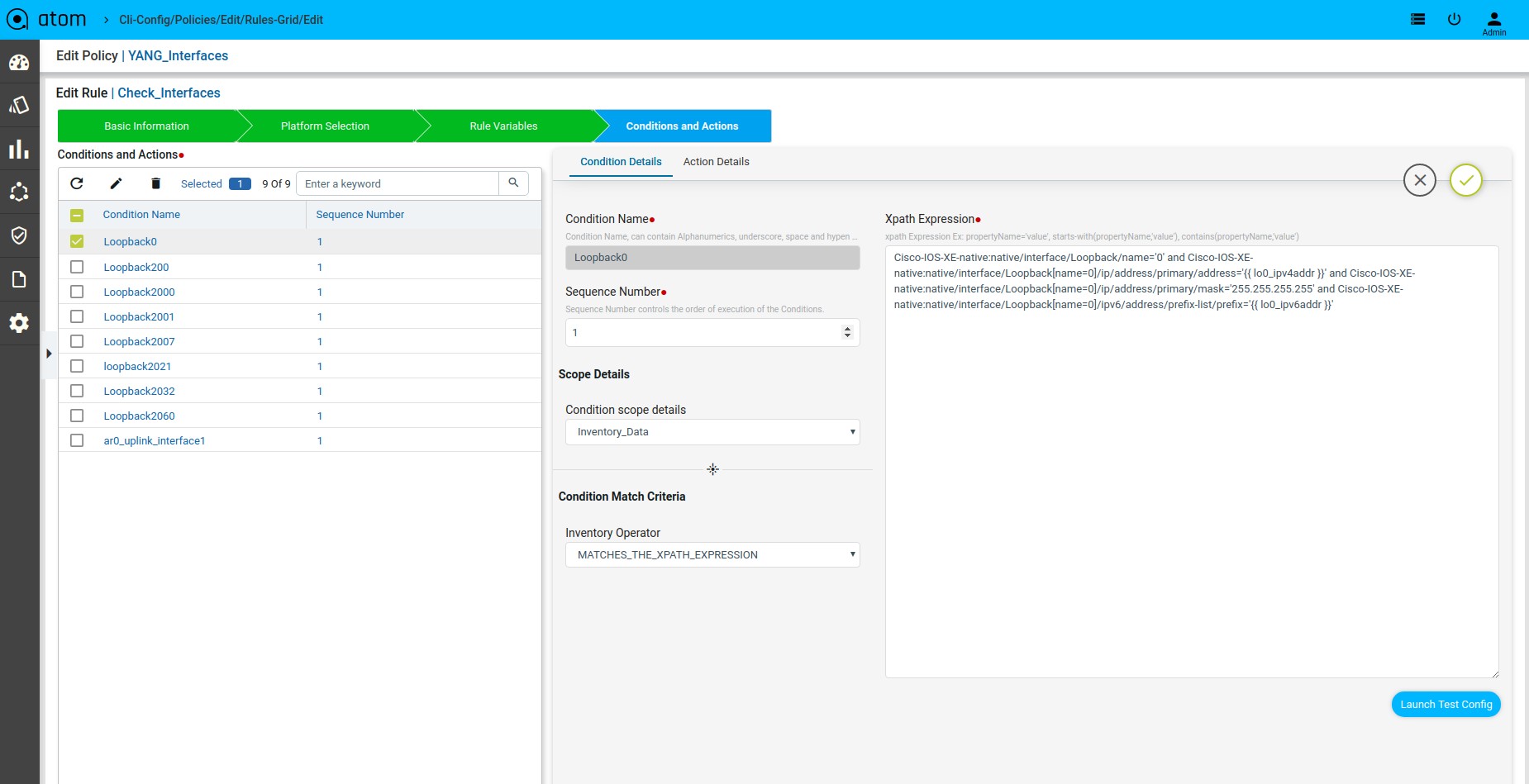

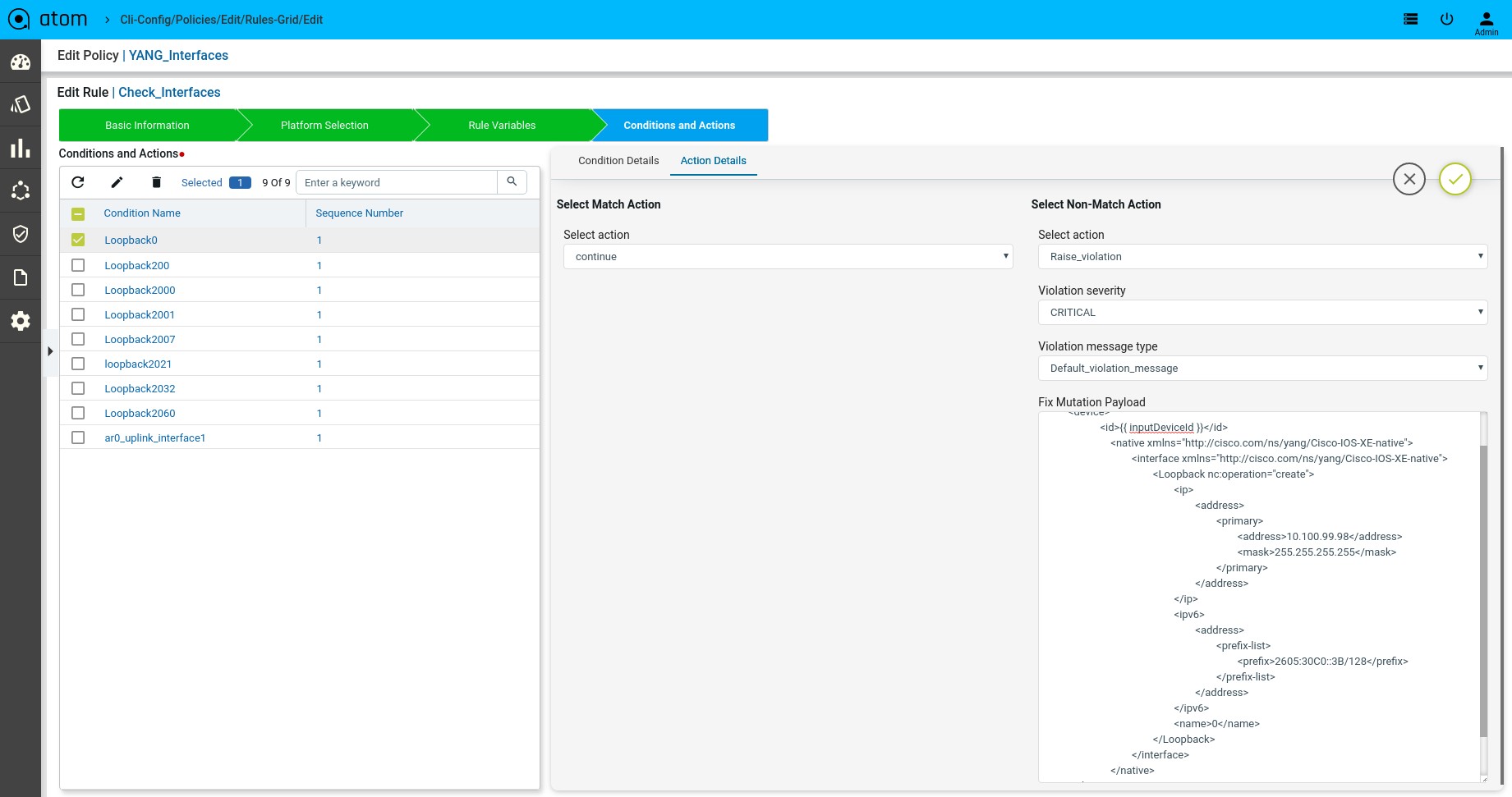

Scenario 10 : Interface Check with rule_variable

Xpath Expression:

Fix Mutation Payload:

Defining Rule Variables

Defining Xpath Expression

Defining Fix Payload

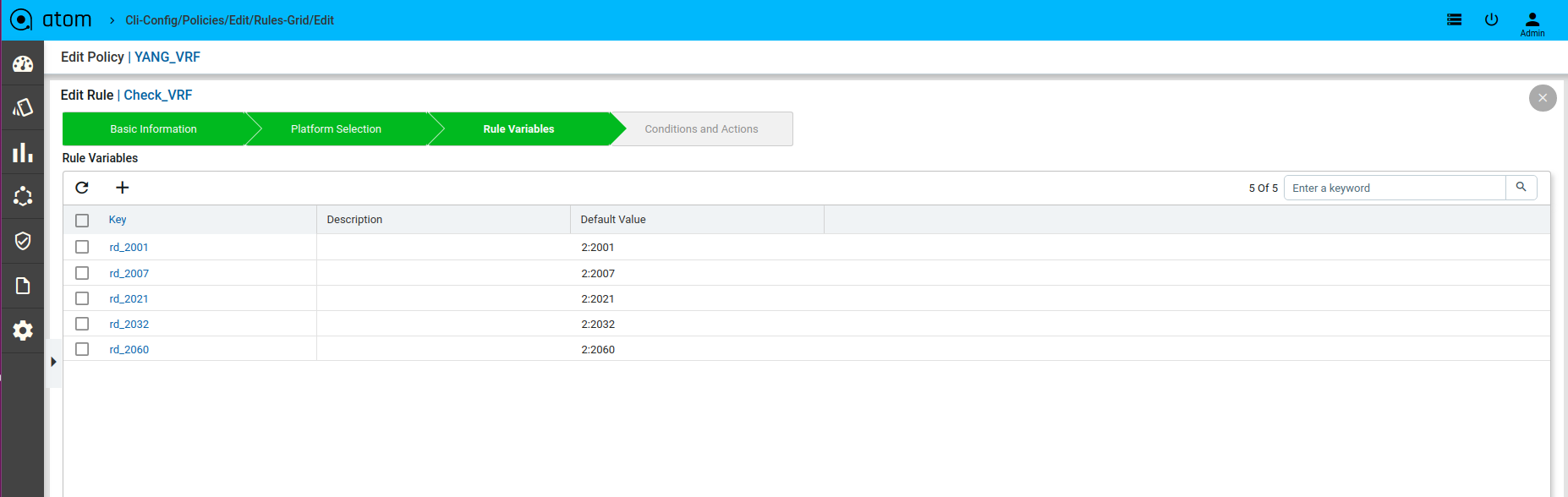

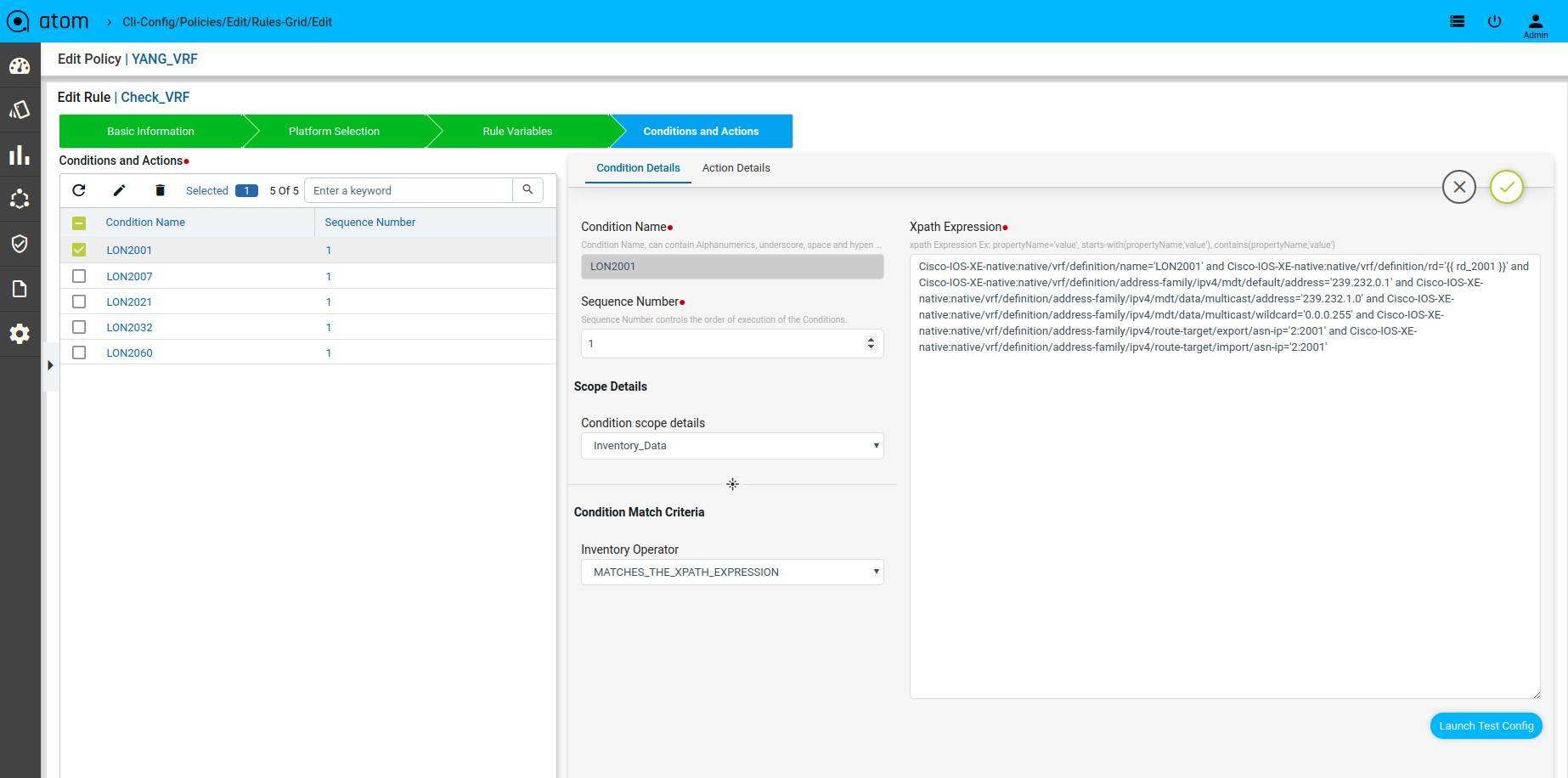

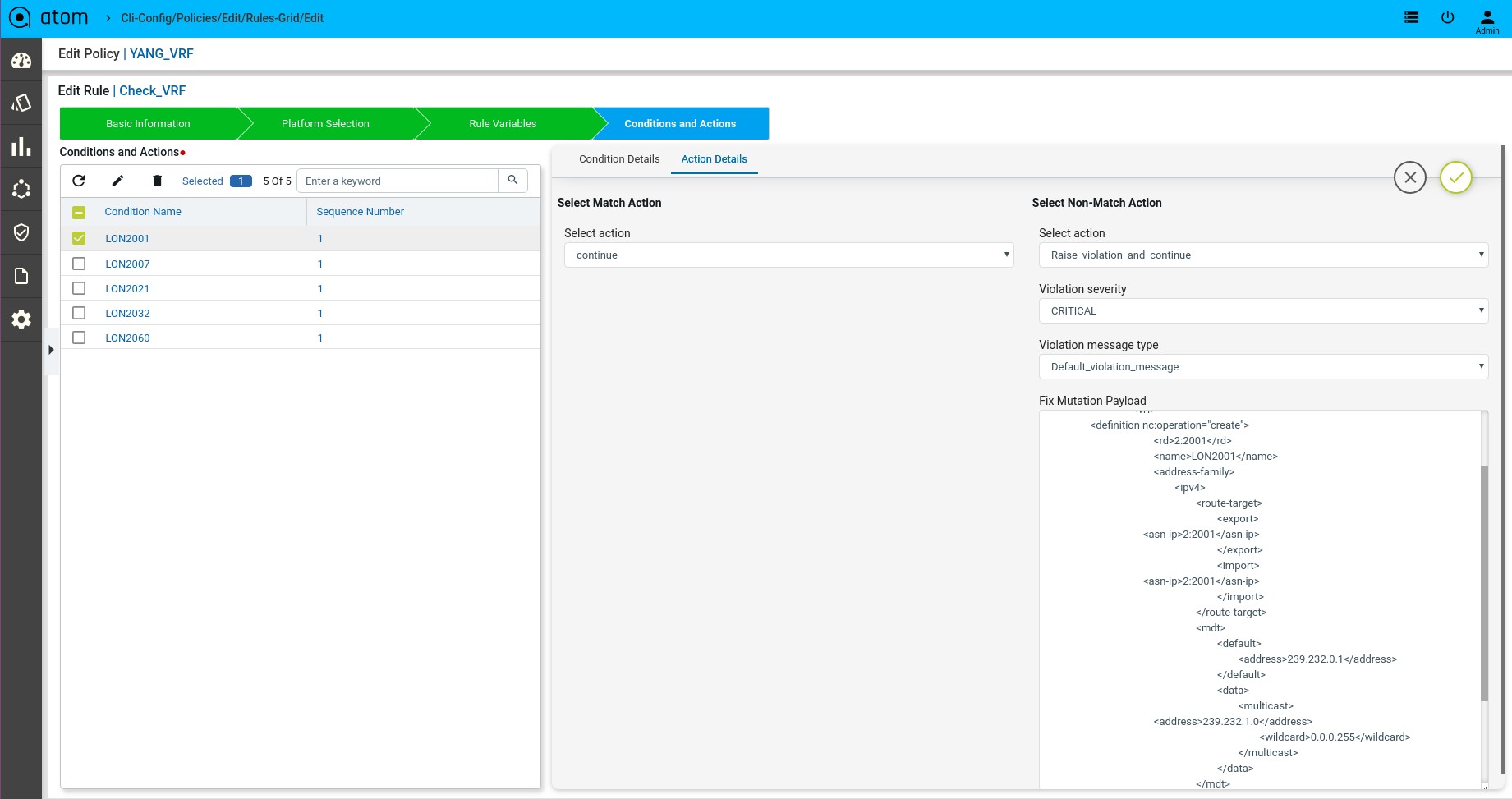

Scenario 11 : VRF Check with rule_variable

Xpath Expression:

Fix Mutation Payload:

Defining Rule Variables

Defining Xpath Expression

Defining Fix Payload

-

- Snmp-string with rule_variable :

basicDeviceConfigs:snmp/snmp-community-list/snmp-string = “{{ community }}”

-

- Logical A|B : starts-with(vendor-string,’Cisco’) or contains(device-family-string,’Cisco 800′)

- Logical A&B : starts-with(vendor-string,’Cisco’) and contains(device-family-string,’Cisco 800′)

- Logical A&(B|C) : contains(vendor-string,’Cisco Systems’) and

(contains(device-family-string,’Cisco 800′) or contains(device-family-string,’Cisco CSR 1000V’))

-

- Logical A&(B|(C&D)) :

contains(interface:interfaces/interface/if-name,’GigabitEthernet1′) and (contains(os-version,’15.6(1)S’) or (contains(vendor-string,’Cisco Systems’) and contains(device-family-string,’Cisco CSR 1000V’)))

-

- Logical not(A&B) :

not(contains(basicDeviceConfigs:local-credentials/local-credential/name , ‘admin’) and contains(basicDeviceConfigs:local-credentials/local-credential/name , ‘cisco’))

How to derive the X-path expressions

There can be two ways by which you can derive the X-path expressions

-

- Navigate to the Device profile page to get the X-path Expression Details for the yang parsed entities

Resource Manager → Devices → Select a Device → Configuration → Config Data →

Entities → Select Entity

For Example: If we want to write xpath expression for VRF name to match as “anuta”, then below is how condition needs to be written

l3features:vrfs/vrf/name = ‘anuta’

l3features:vrfs/vrf : This is x-path derived based on model under device

name : Attribute of vrf name.

-

- Navigate to Schema Browser to see all yang models under path

/controller:devices/device

Policy creation with XML Template Payload

-

- Within Condition Match Criteria select “Matches_the_template_payload”

/”Doesn’t_matches_the_template_payload” option for Inventory Operator field

-

- The Fix Mutation Payload is a Jinja2 template configuration in Netconf xml RPC format written using the unmatched content from the test results tab.

Navigate to Resource Manager > Config Compliance > Policy > + (Add Policies) Few examples

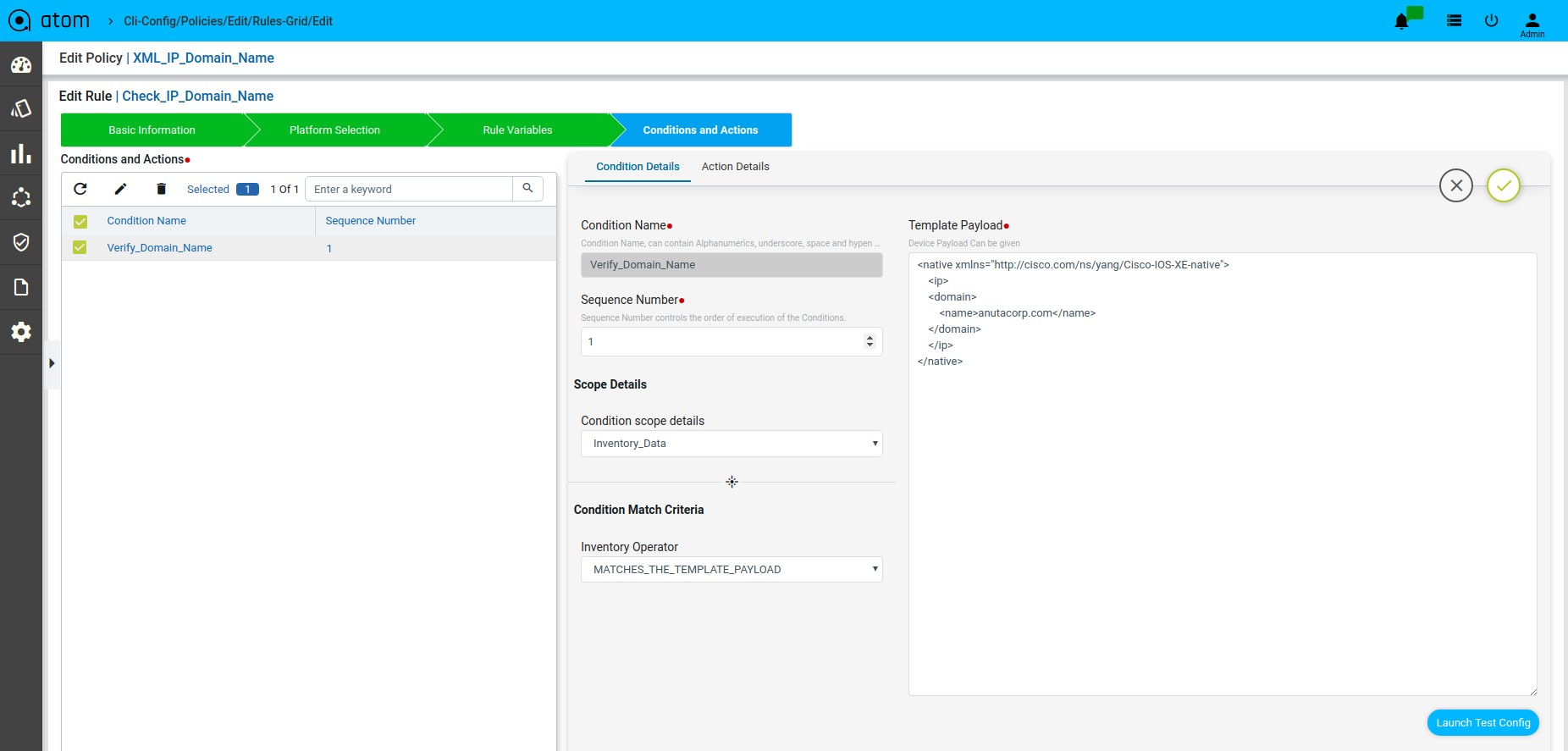

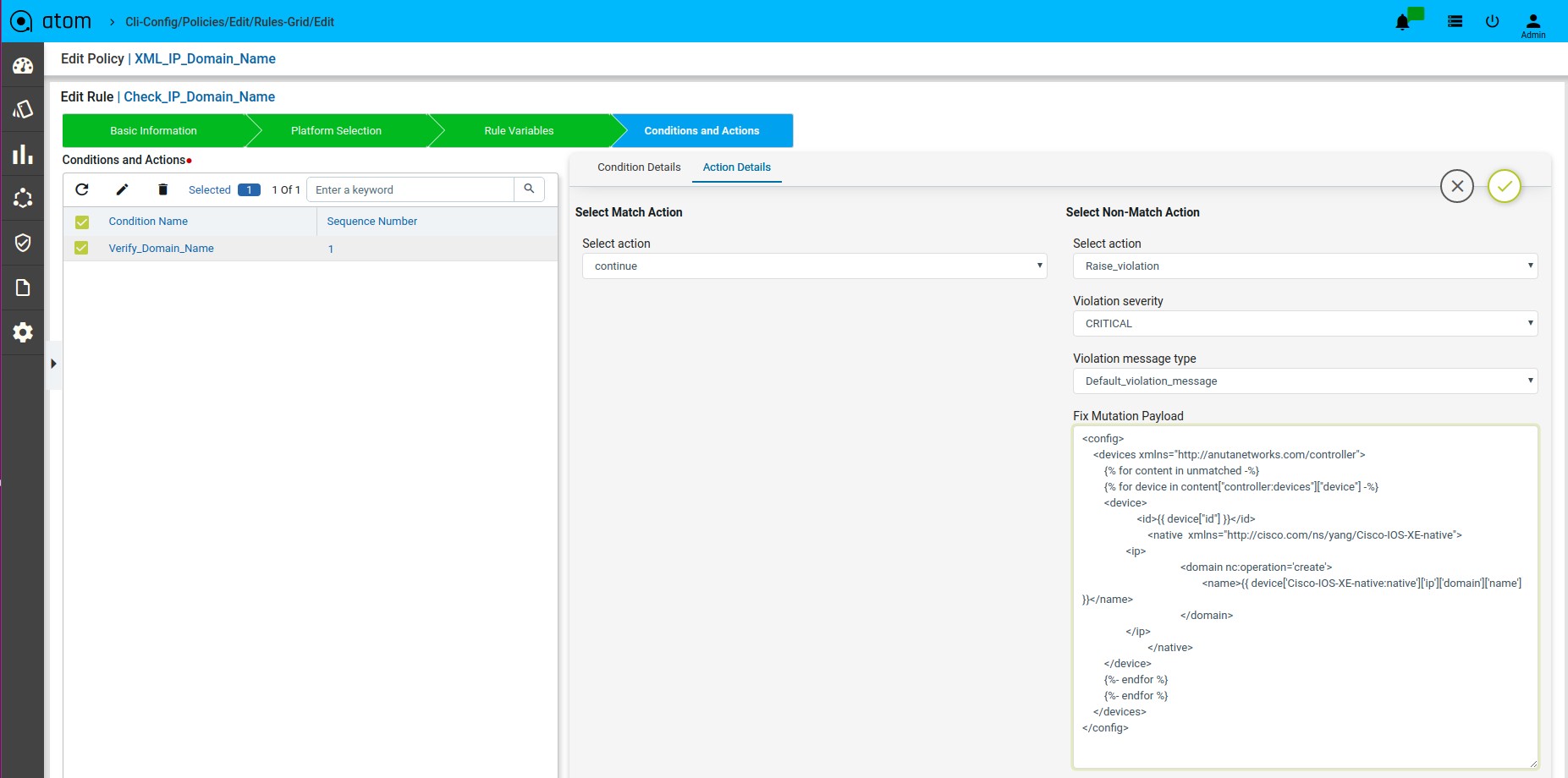

Scenario 12 : IP Domain name check

Template Payload:

Fix Mutation Payload :

Defining Template Payload

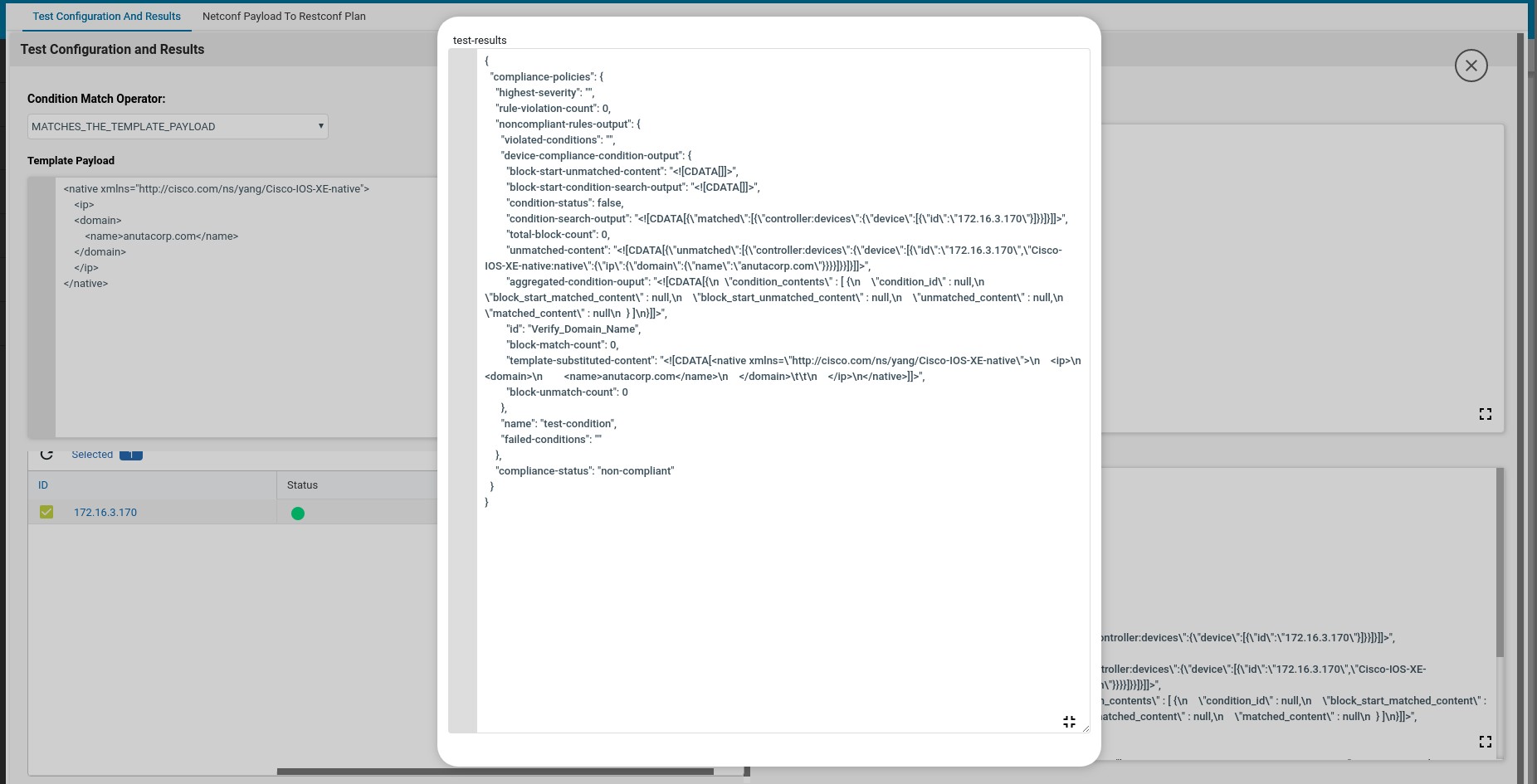

Here the matched and unmatched data will be stored in the backend data structure which is shown in the Test Results tab. The matched data will be stored in the condition-search-output. The unmatched data will be stored in unmatched-content.

Here the matched and unmatched data will be stored in the backend data structure which is shown in the Test Results tab. The matched data will be stored in the condition-search-output. The unmatched data will be stored in unmatched-content.

The Fix Mutation Payload is a Jinja2 template configuration in Netconf xml RPC format written using the unmatched content from the test results tab.

Defining Fix Payload

Fix Configuration Display in Remediation

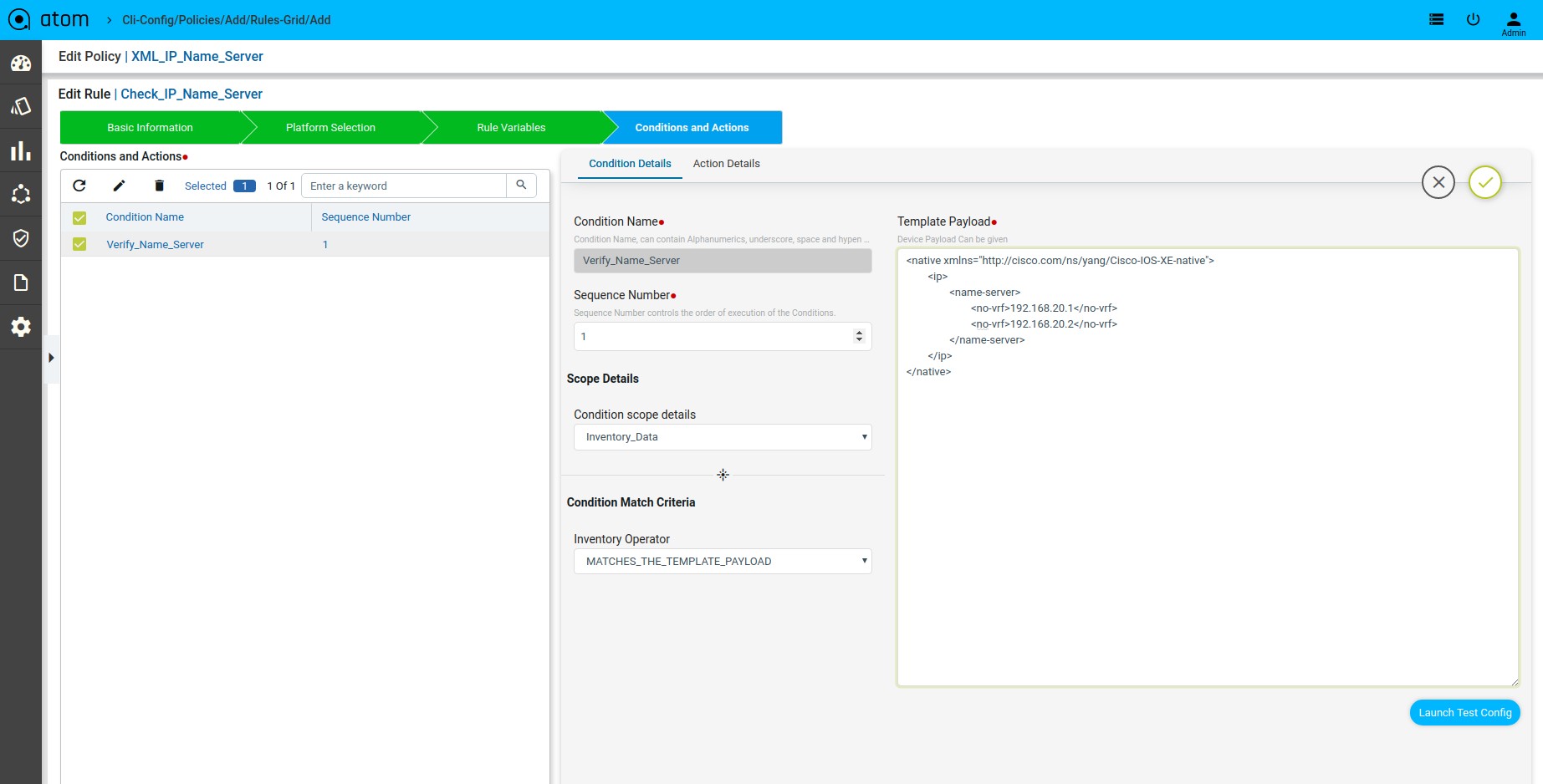

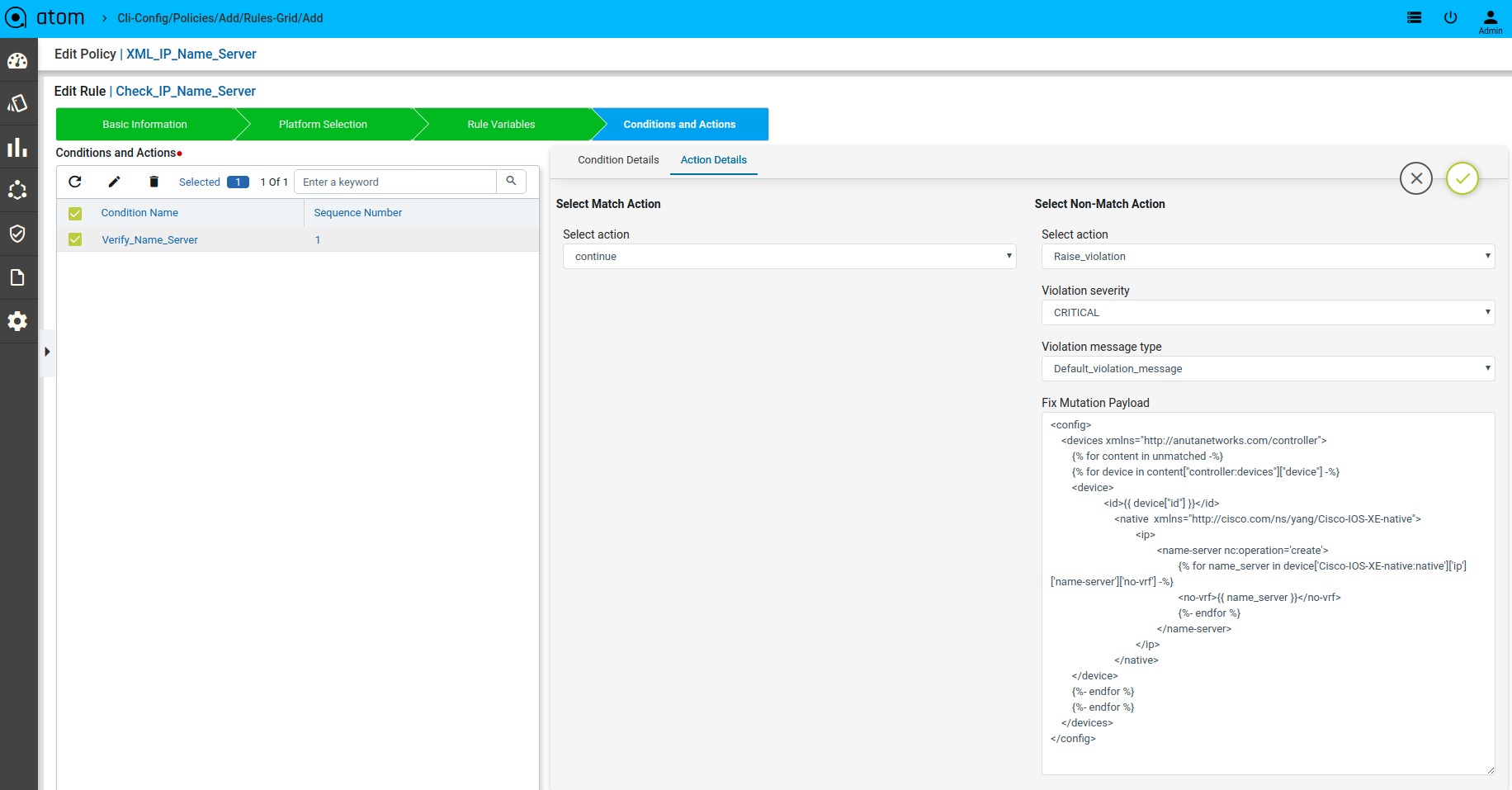

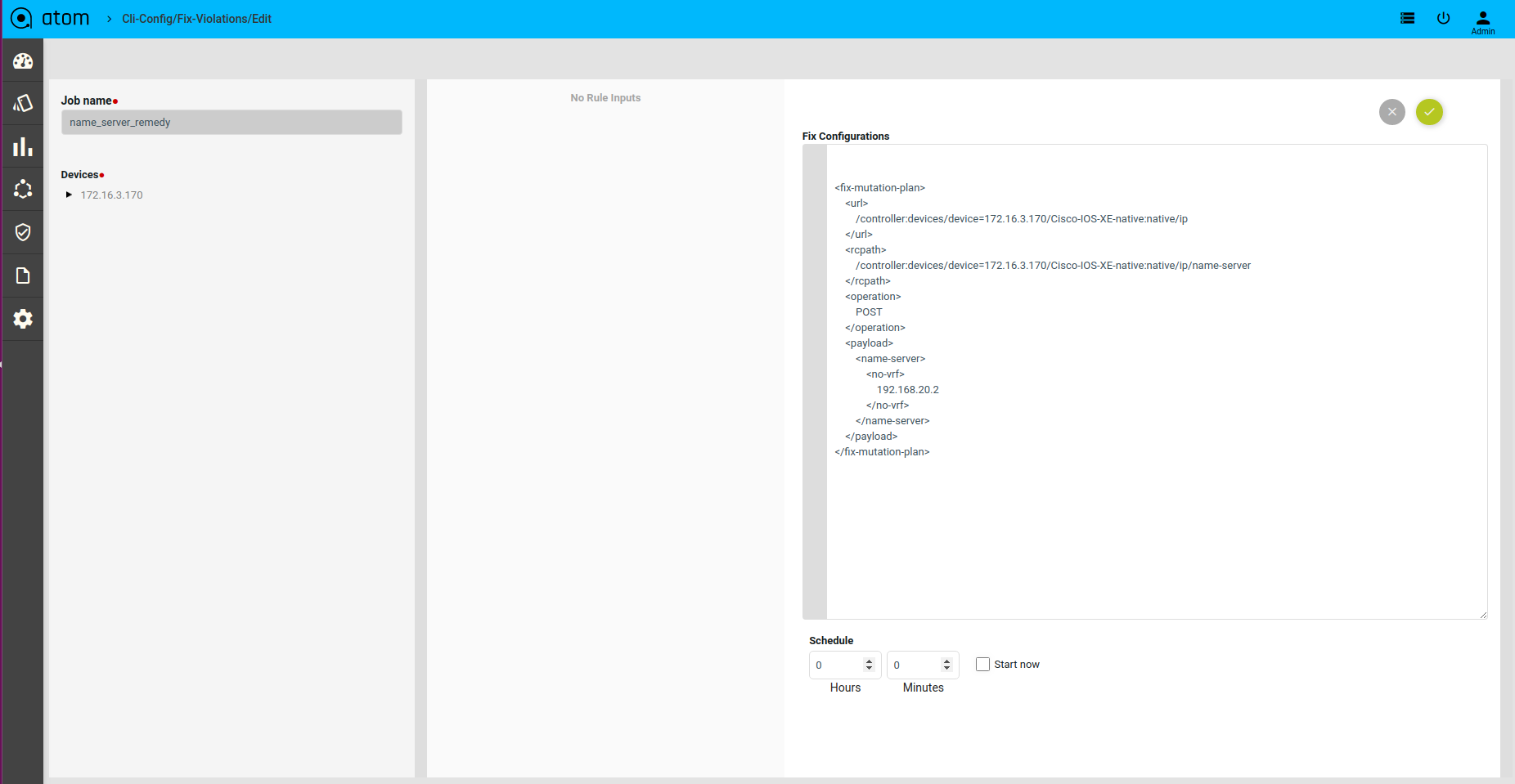

Scenario 13 : IP Name Server check

Template Payload:

Fix Mutation Payload :

Defining Fix Payload

Fix Configuration Display in Remediation

Scenario 14 : Interface check

Template Payload:

Fix Mutation Payload :

<config>

<devices xmlns=”http://anutanetworks.com/controller”>

{% for content in unmatched –%}

{% for device in content[“controller:devices”][“device”] –%}

<device>

<id>{{ device[“id”] }}</id>

<native xmlns=”http://cisco.com/ns/yang/Cisco-IOS-XE-native”>

<interface>

{% for loopback in

device[‘Cisco-IOS-XE-native:native’][‘interface’][‘Loopback’] –%}

<Loopback nc:operation=”create”>

{% if loopback[‘ip’] –%}

<ip>

<address>

<primary>

<address>{{ loopback[‘ip’][‘address’][‘primary’][‘address’]

}}</address>

<mask>{{ loopback[‘ip’][‘address’][‘primary’][‘mask’] }}</mask>

</primary>

</address>

</ip>

{%- endif %}

{% if loopback[‘ipv6’] –%}

<ipv6>

<address>

<prefix-list>

{% for prefix in loopback[‘ipv6’][‘address’][‘prefix-list’] –%}

<prefix>{{ prefix[‘prefix’] }}</prefix>

{%- endfor %}

</prefix-list>

</address>

</ipv6>

{%- endif %}

<name>{{ loopback[‘name’] }}</name>

</Loopback>

{%- endfor %}

</interface>

</native>

Defining Template Payload

Defining Template Payload

Scenario 15 : VRF check

Template Payload:

Fix Mutation Payload :

–%}

{% for vrf_def in device[‘Cisco-IOS-XE-native:native’][‘vrf’][‘definition’]

{% if vrf_def[‘name’] == ‘LON2001’ –%}

<definition nc:operation=”create”>

{% if vrf_def[‘rd’] –%}

<rd>{{ rd_2001 }}</rd>

{%- endif %}

<name>{{ vrf_def[‘name’] }}</name>

{% if vrf_def[‘address-family’] –%}

<address-family>

<ipv4>

{% if vrf_def[‘address-family’][‘ipv4’][‘route-target’] –%}

<route-target>

{% for export in

vrf_def[‘address-family’][‘ipv4’][‘route-target’][‘export’] –%}

<export>

<asn-ip>{{ export[‘asn-ip’] }}</asn-ip>

</export>

{%- endfor %}

{% for import in vrf_def[‘address-family’][‘ipv4’][‘route-target’][‘import’] -%}

<import>

<asn-ip>{{ import[‘asn-ip’] }}</asn-ip>

</import>

{%- endfor %}

</route-target>

{%- endif %}

{% if vrf_def[‘address-family’][‘ipv4’][‘import’] –%}

<import>

<map>{{ vrf_def[‘address-family’][‘ipv4’][‘import’][‘map’] }}</map>

</import>

{%- endif %}

{% if vrf_def[‘address-family’][‘ipv4’][‘mdt’] –%}

<mdt>

{% if vrf_def[‘address-family’][‘ipv4’][‘mdt’][‘default’] -%}

<default>

<address>{{

vrf_def[‘address-family’][‘ipv4’][‘mdt’][‘default’][‘address’] }}</address>

Defining Template Payload

Defining Fix Payload

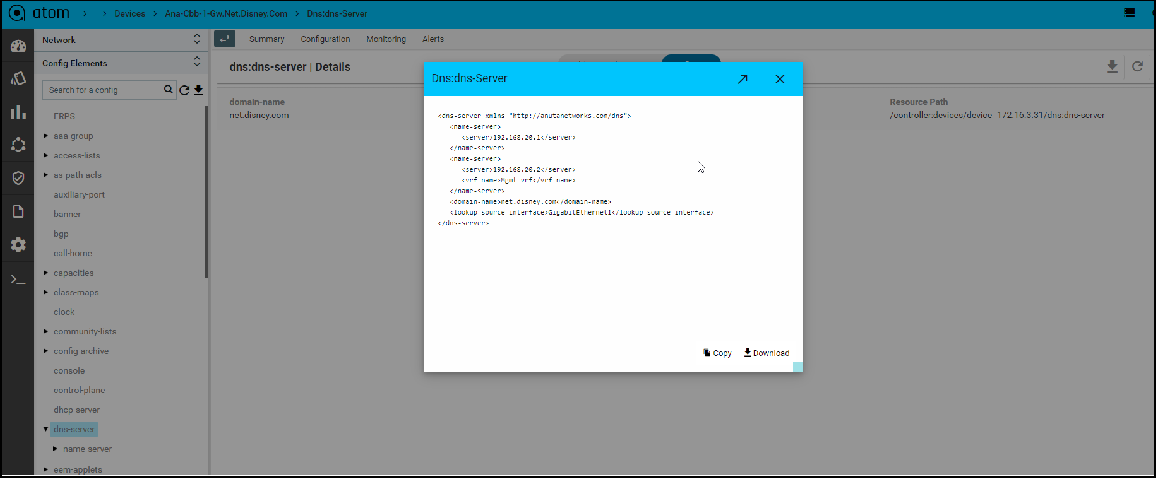

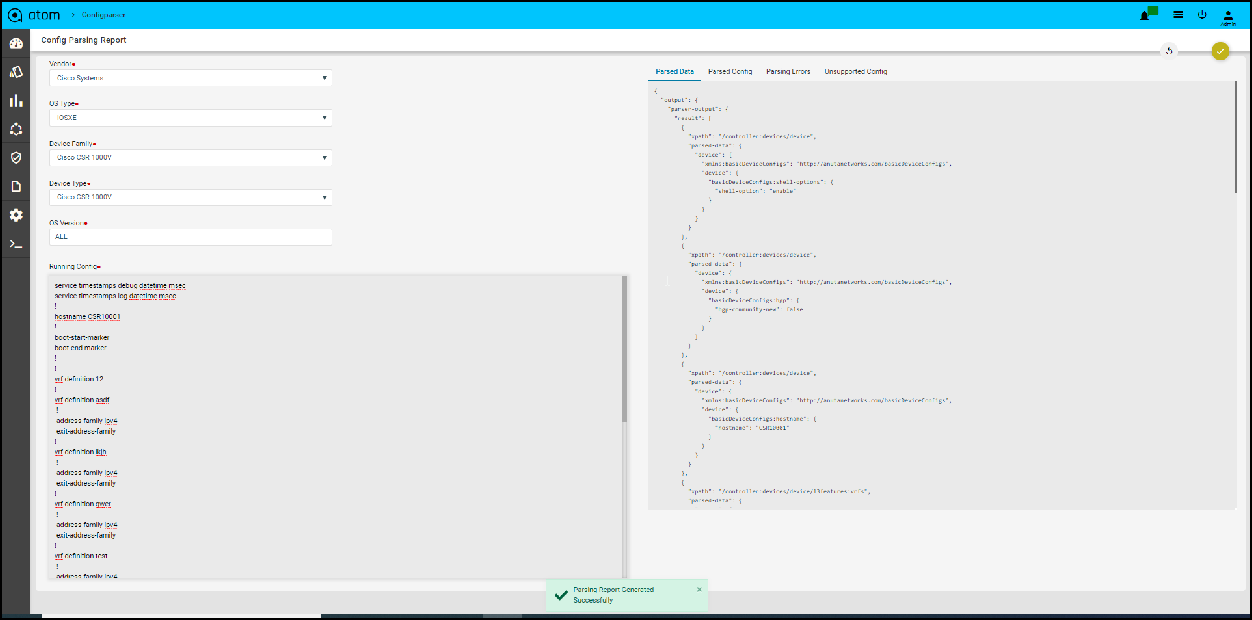

How to derive the XML Template payload

-

- Navigate to the Device profile page and export the XML template details for the yang parsed entities

Resource Manager → Devices → Select a Device → Configuration →Config Data → Entities → Select Abstract entity → Use Download button to export/copy the XML payload

Example : Let’s derive domain-name XML Template payload

Navigate to Devices → select a device→ configuration -> Config Data → Entities →

dns-server → Export XML payload using download button.

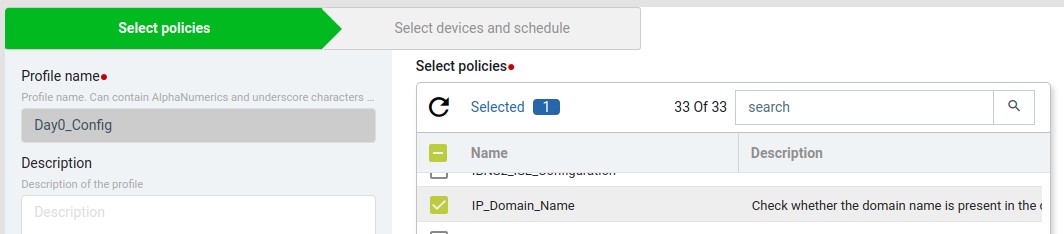

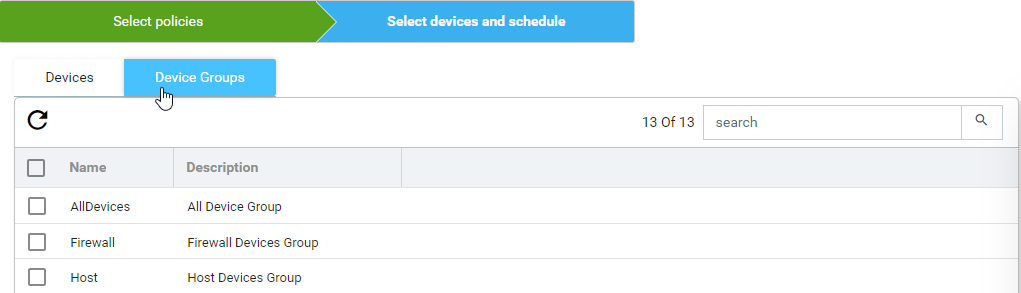

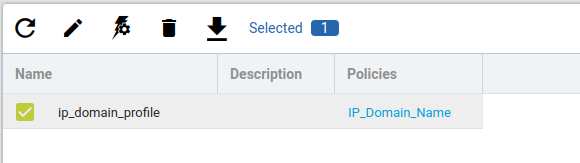

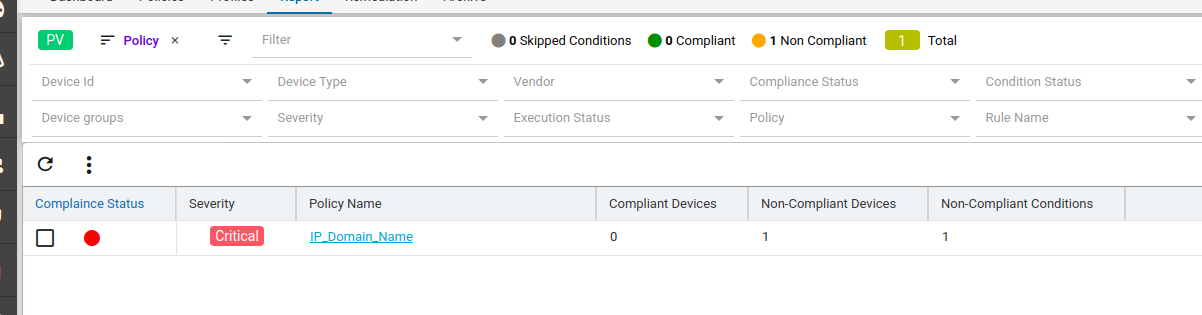

Profiles

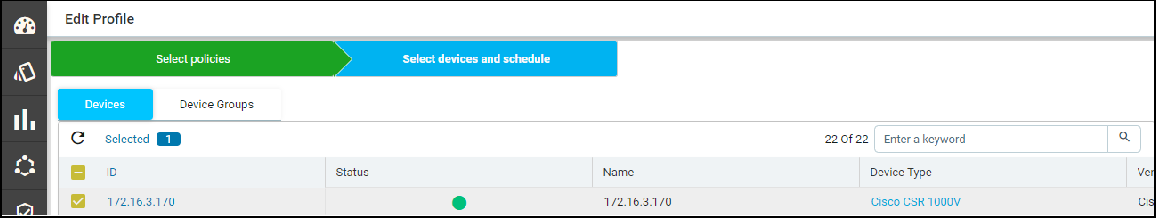

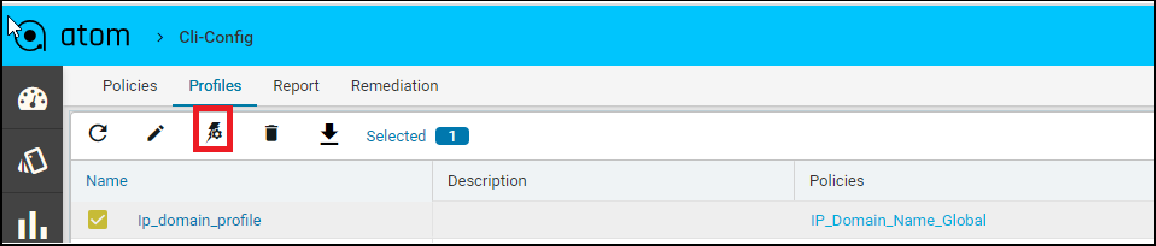

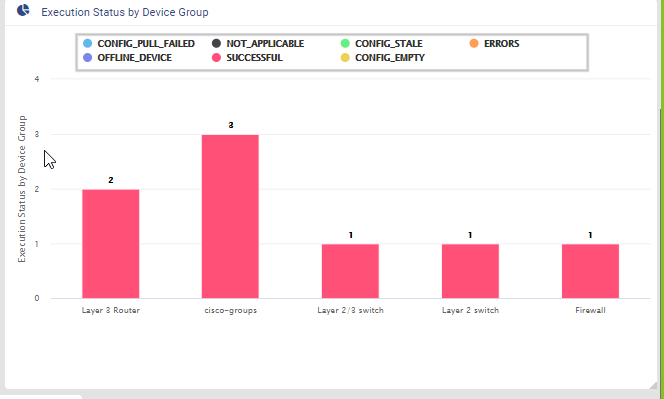

A profile allows one or more Policies to be grouped and executed on one or more devices either on-demand or as per Schedule. Profile execution results in a per-device compliance report included in the execution.

Steps:

- Navigate to Resource Manager > Config Compliance -> Profiles

- Select “+” to Create a Profile

- ATOM opens up a new wizard and displays 2 sections.

- Policies – Select one/more policies

- Devices & Schedule – Select one/more devices or Device groups

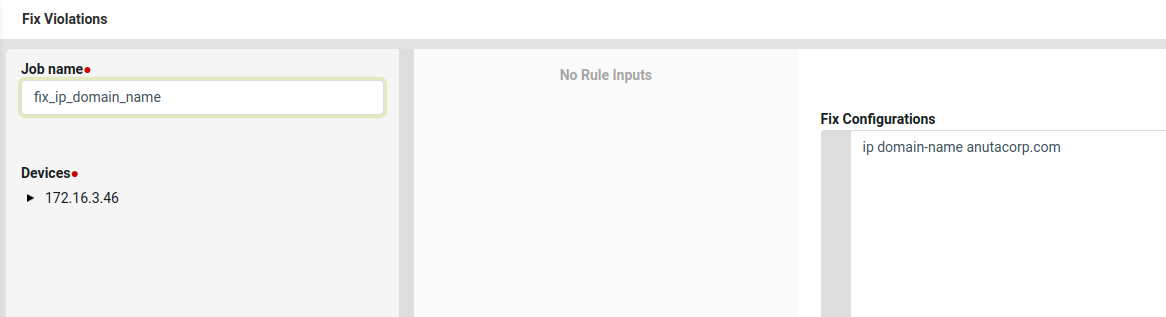

Create profile by providing name, description and select policy which was created previously IP_Domain_Name.

Create profile by providing name, description and select policy which was created previously IP_Domain_Name.

- Navigate to the next tab, Select devices and schedule. We can select either device(s) from

Devices or Device Groups tab

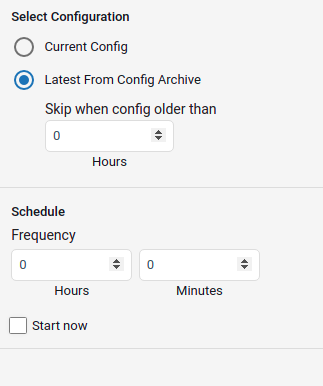

- After device(s) are selected, choose if the compliance checks need to be run against an archived config or current running-configuration of the device. By default Latest From Config Archive is selected.

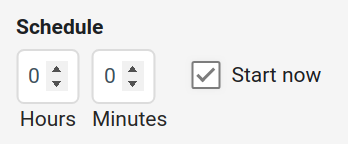

Schedule: The profile job can be scheduled in Hours or Minutes. Alternatively, a job can be started right away by enabling Start now option.

Configuration:

- Current Config: This will pull the current device configuration and evaluate against the polices.

- Latest From Config Archive: This will use the latest configuration that is stored in the ATOM. Optionally you can add a check to skip the compliance check when the configuration is older than n hrs

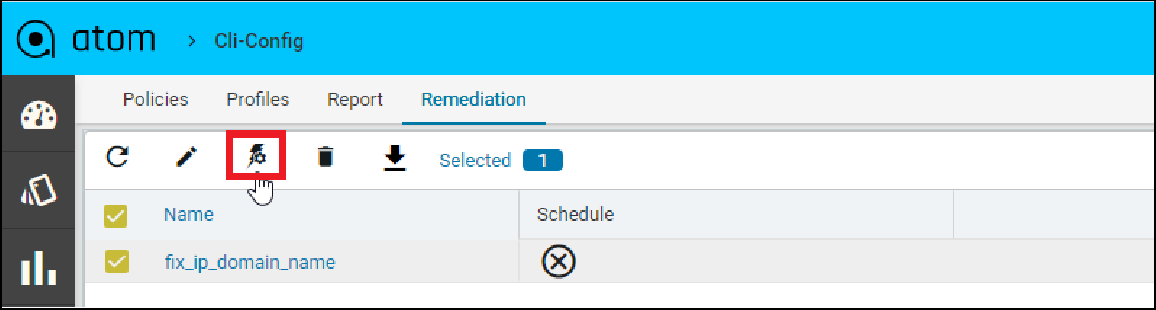

Or the profile job can triggered at a later point of time using run job icon on the profiles view

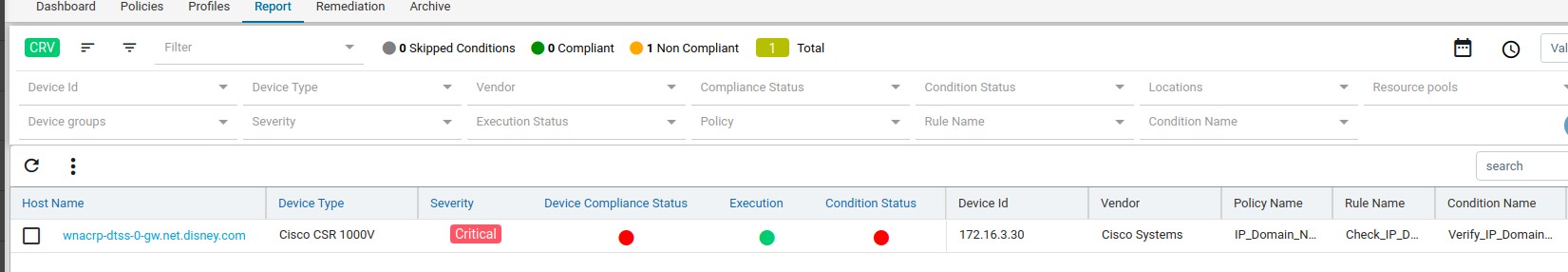

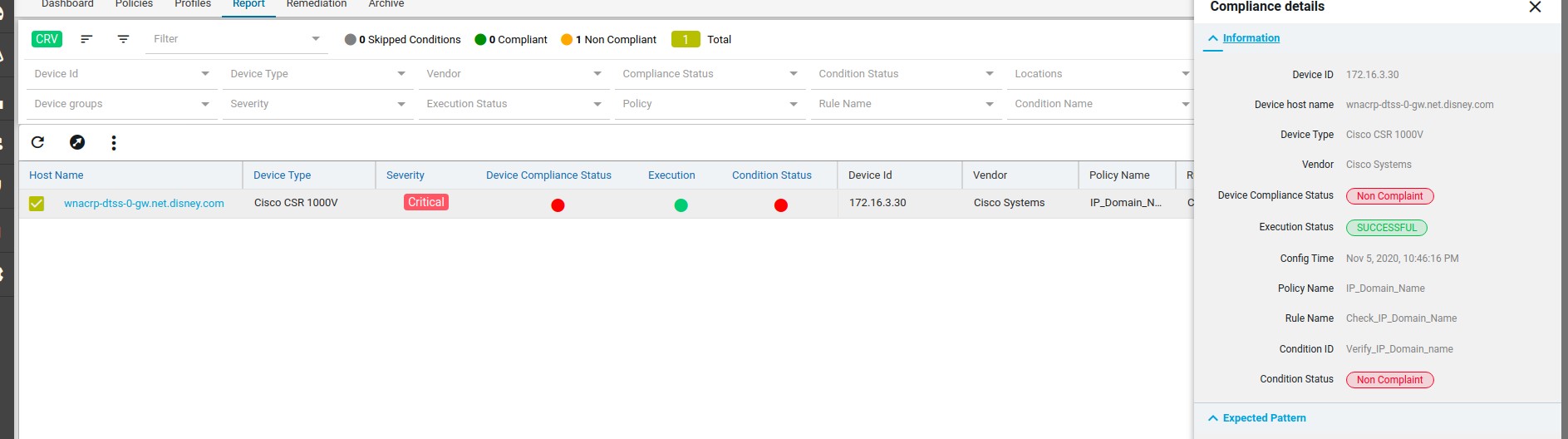

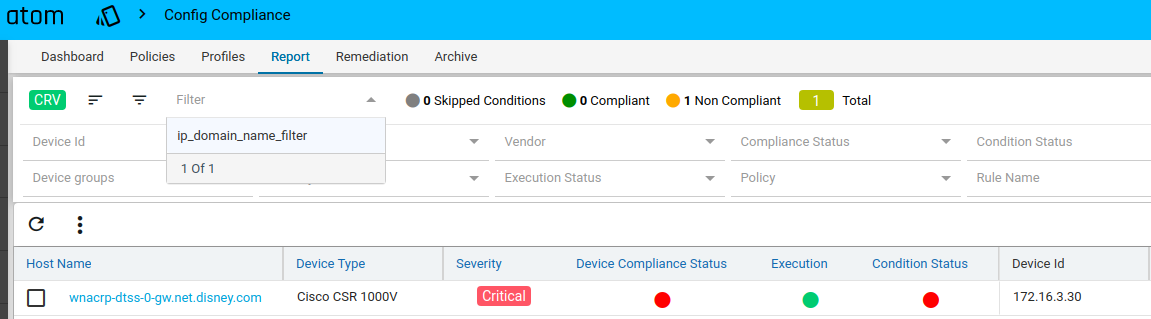

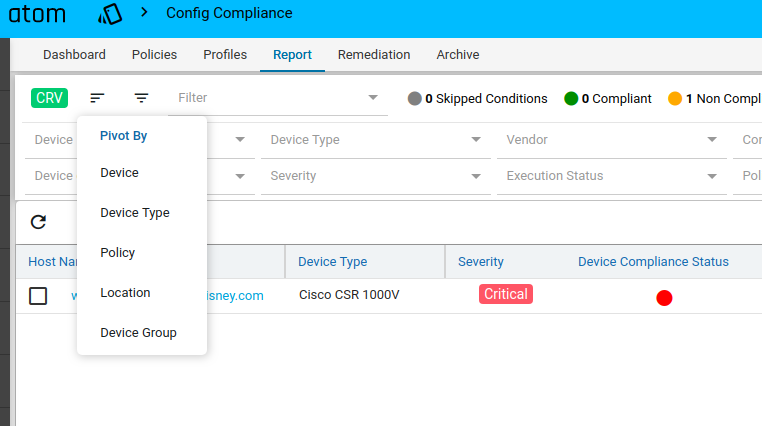

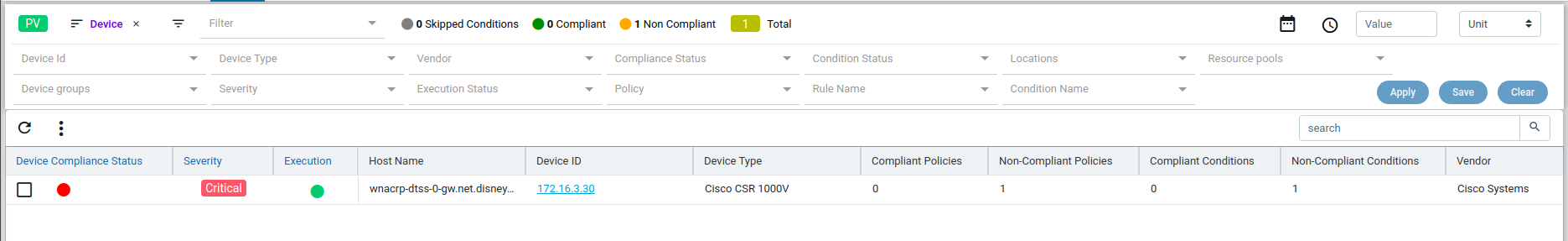

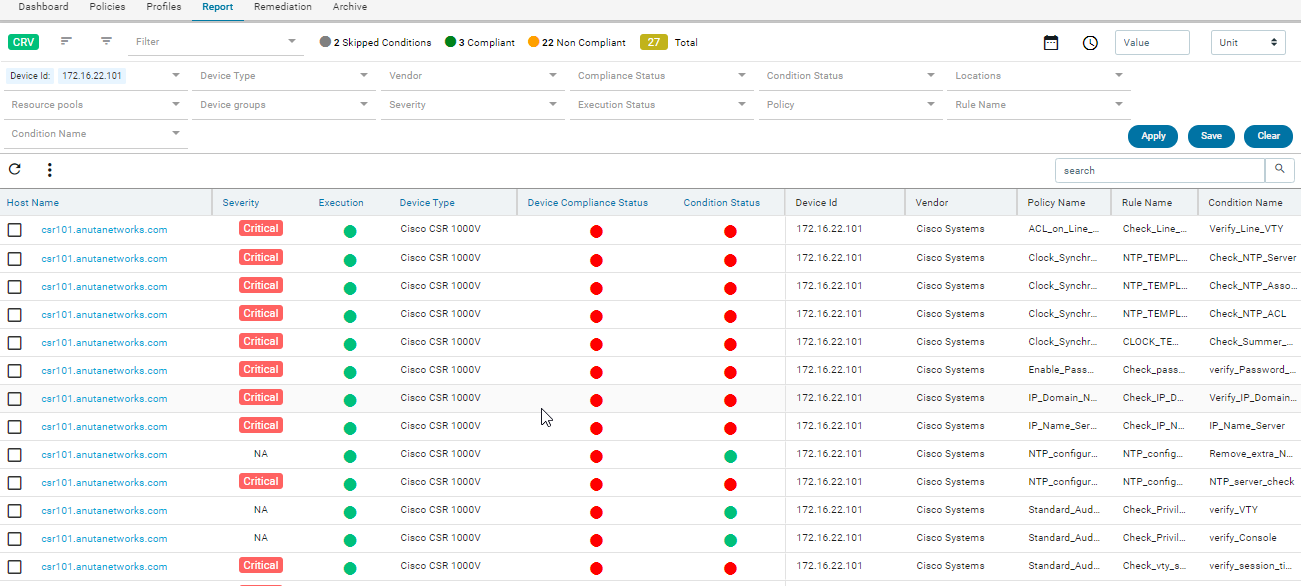

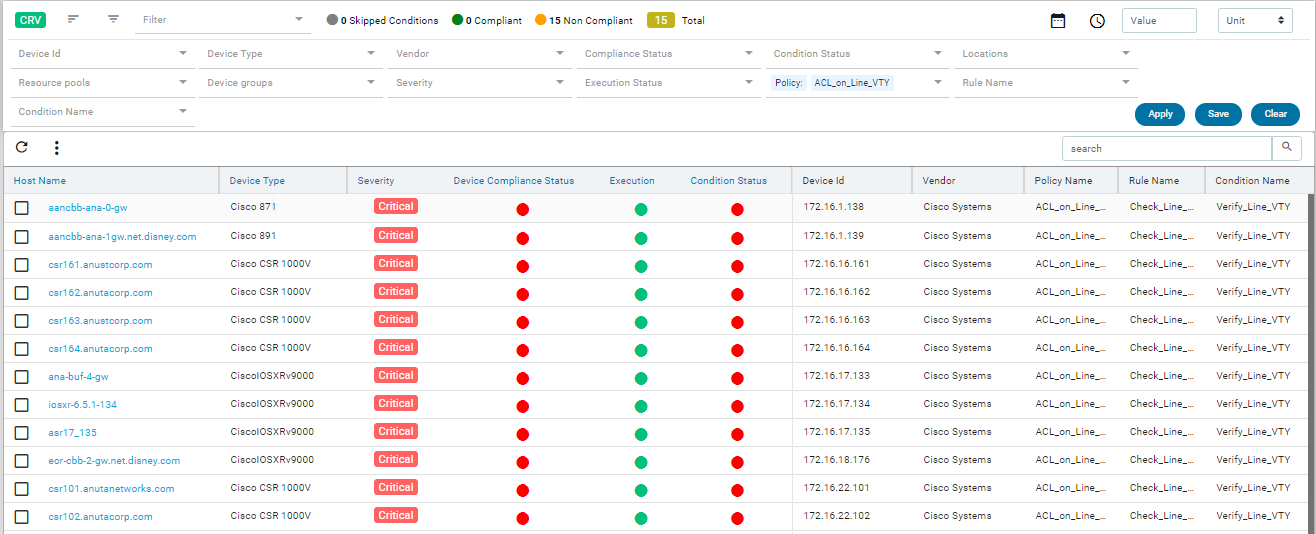

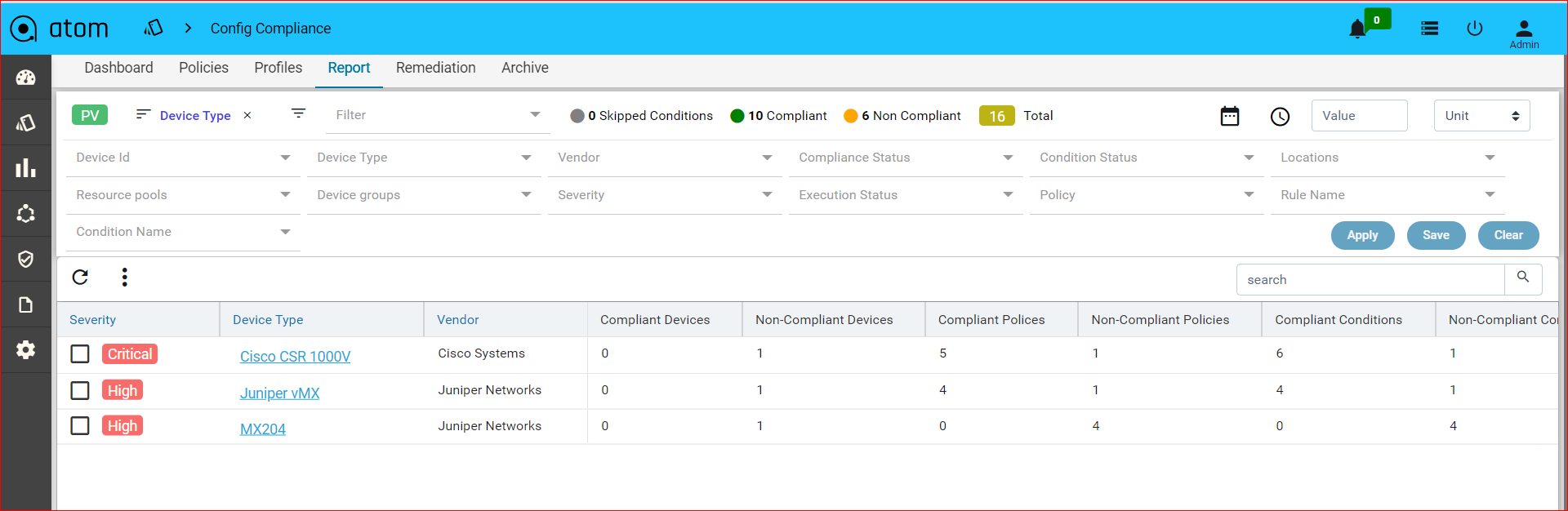

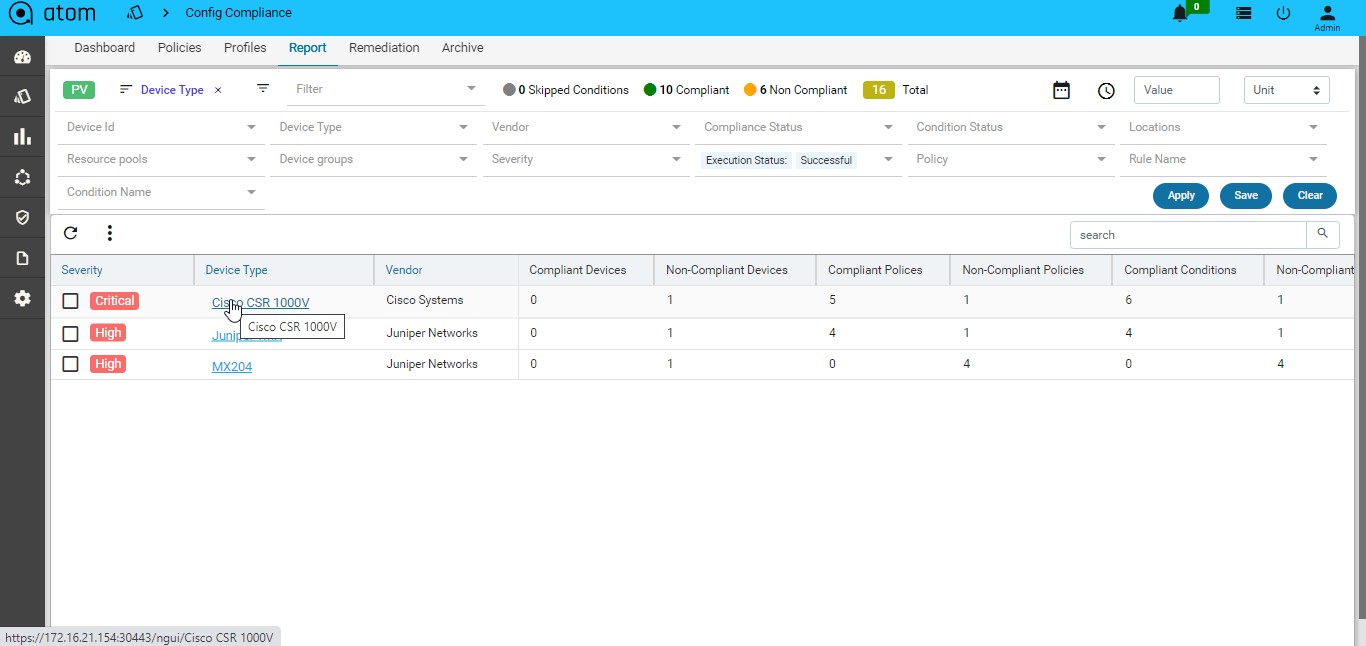

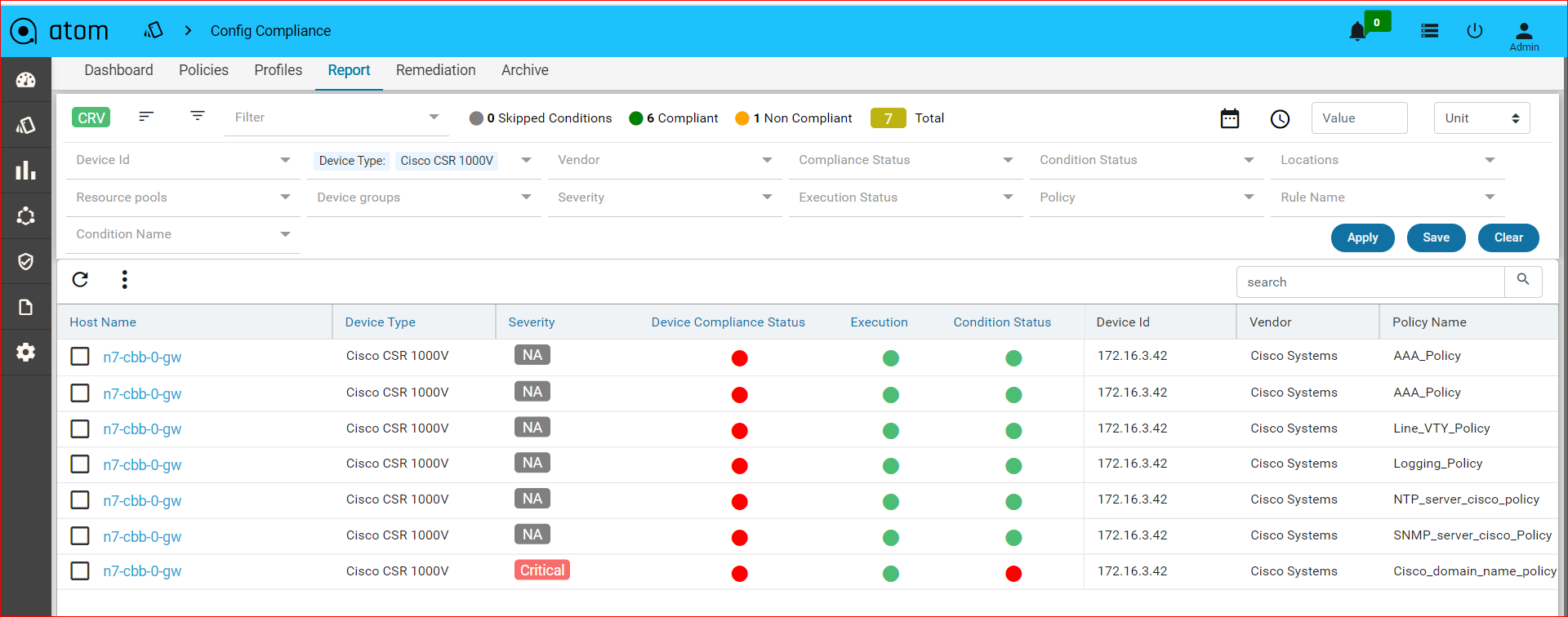

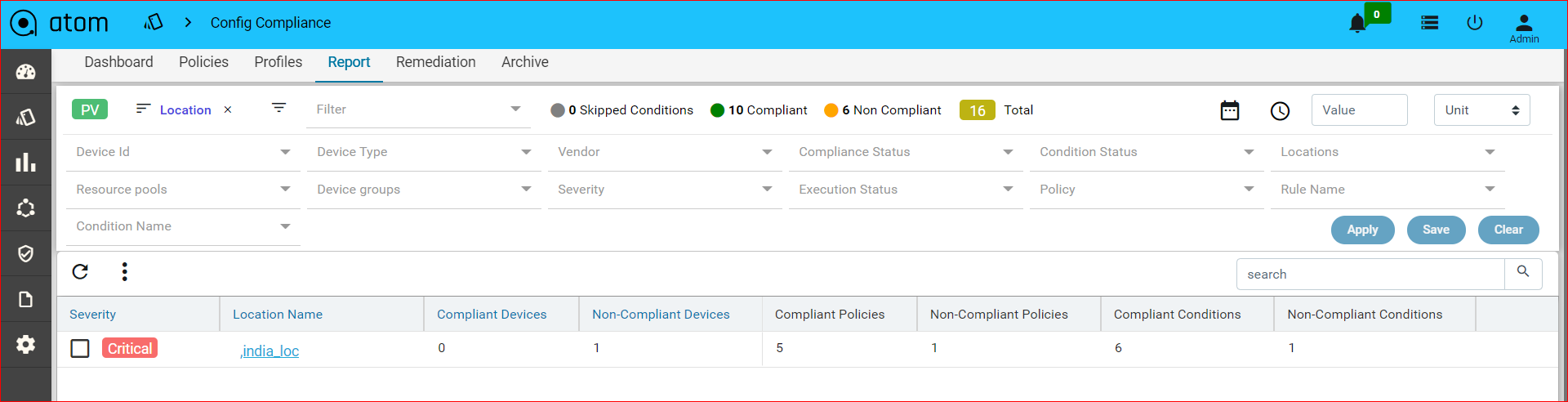

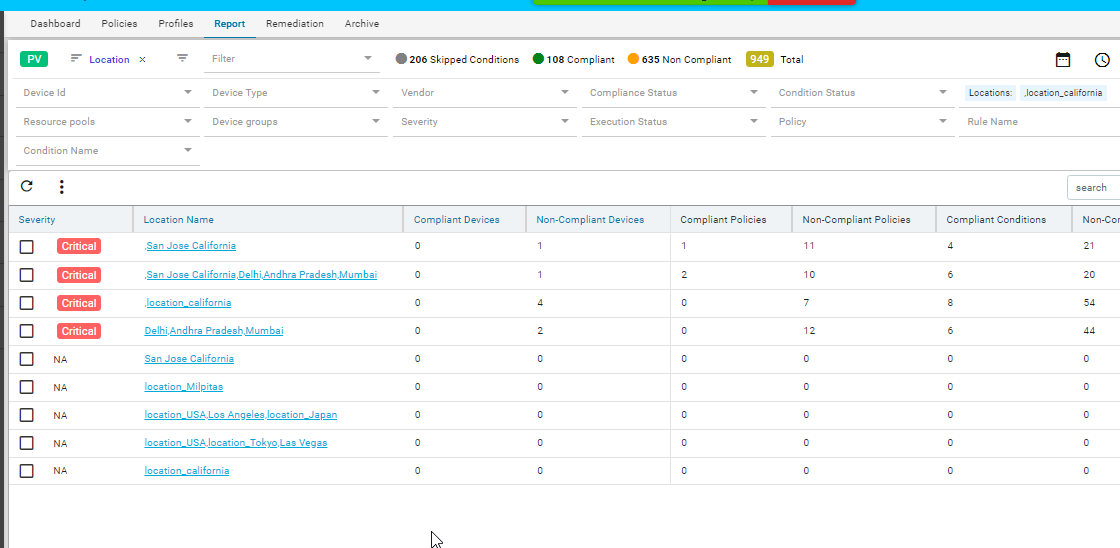

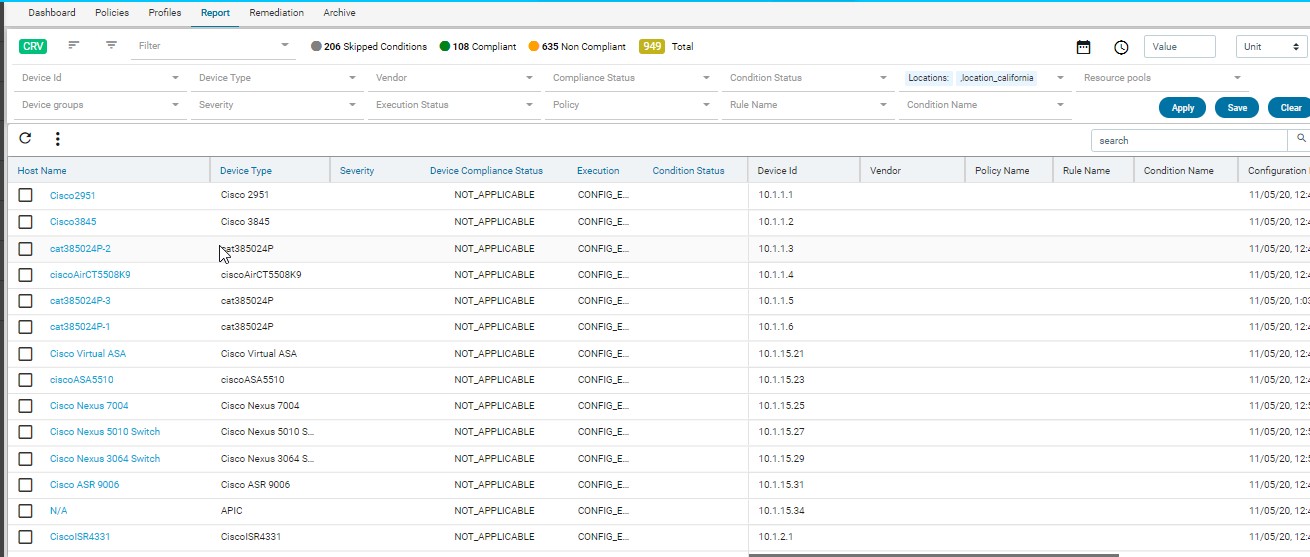

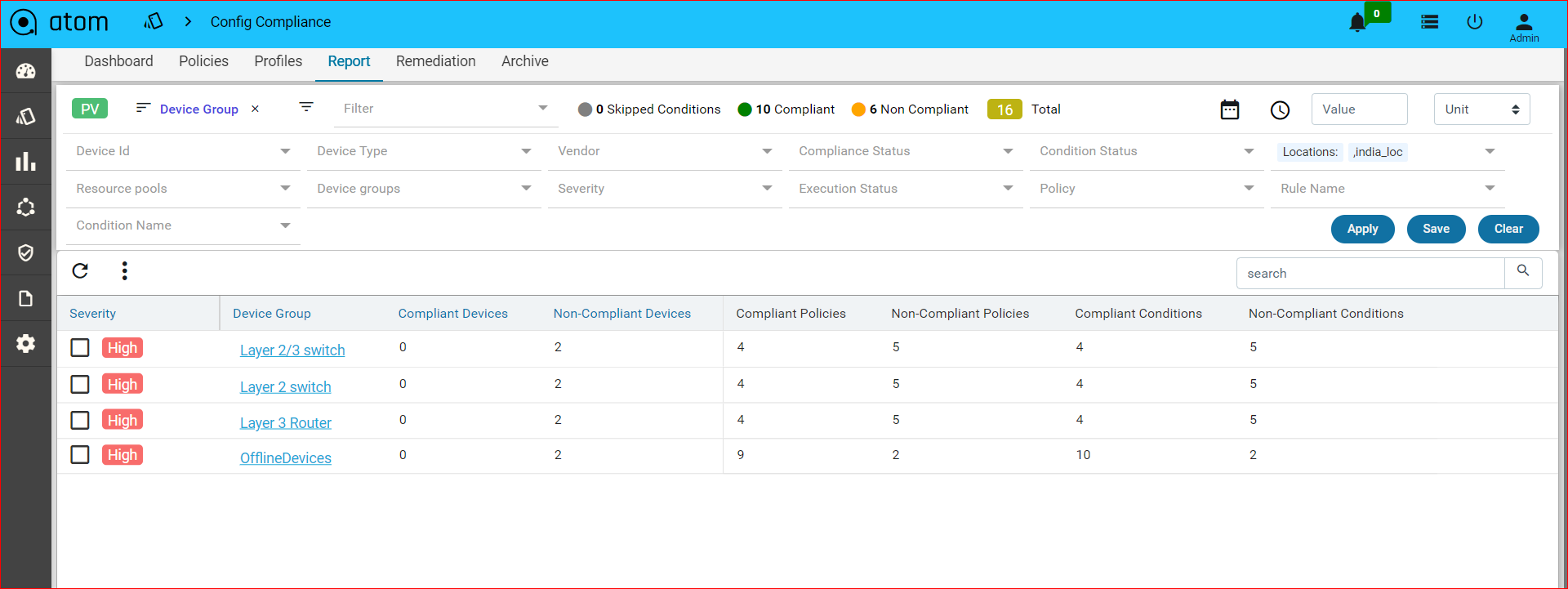

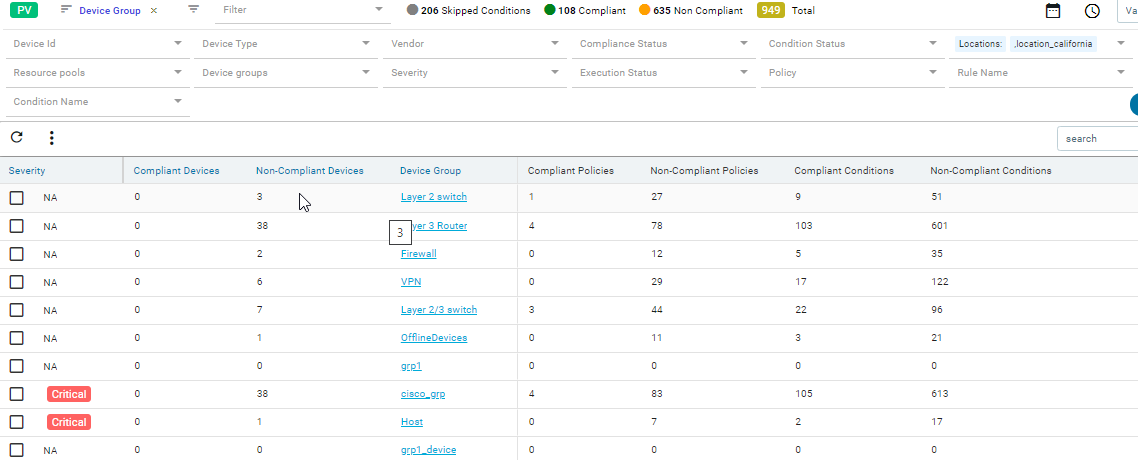

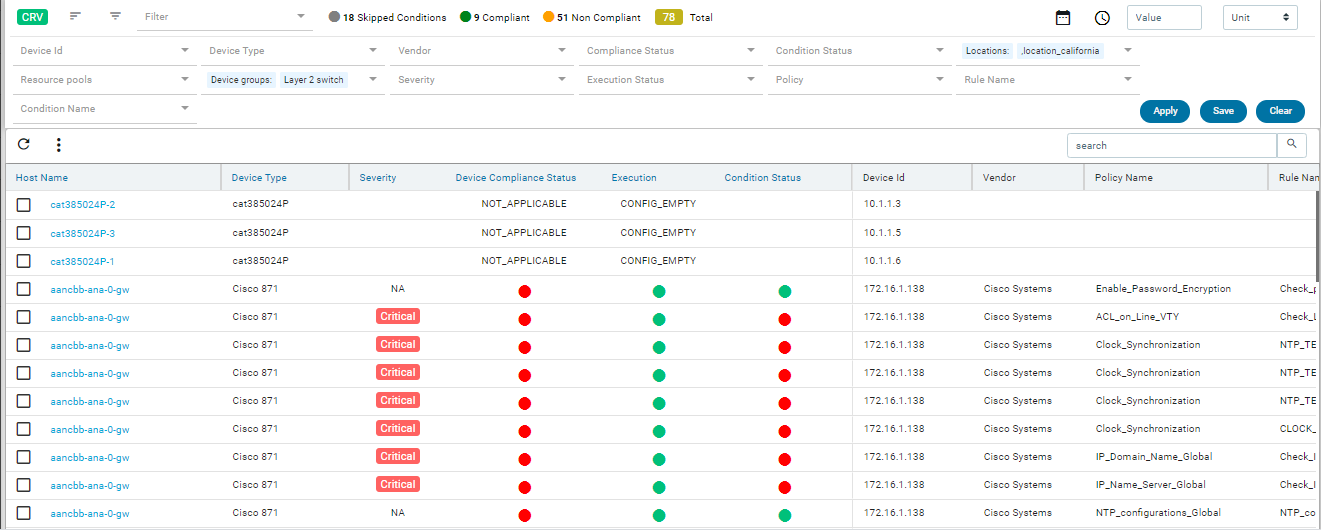

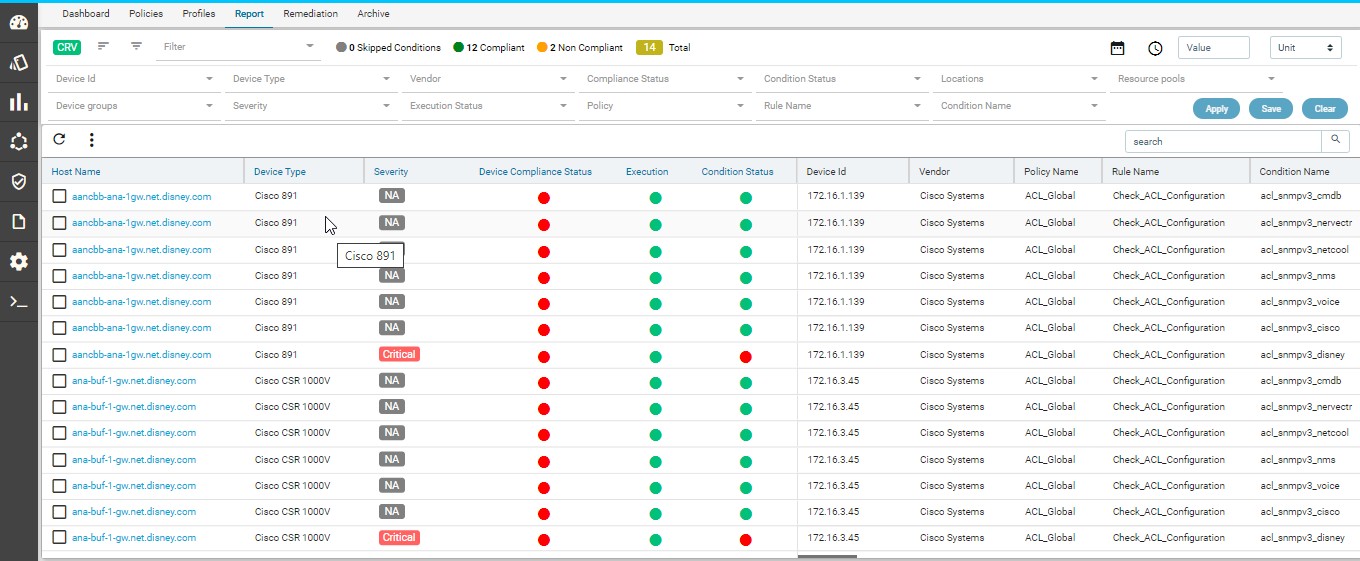

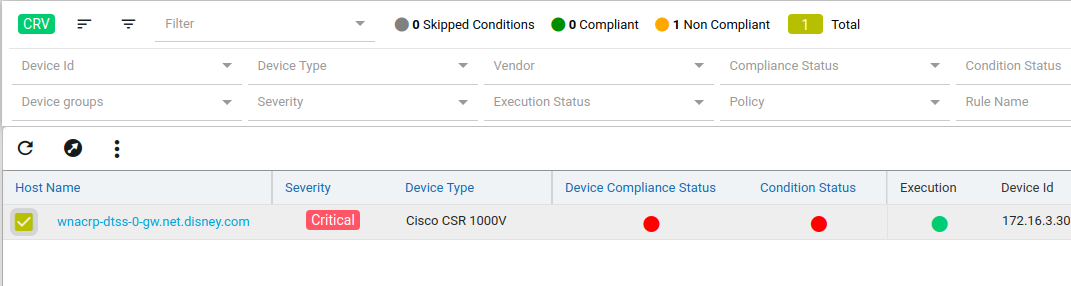

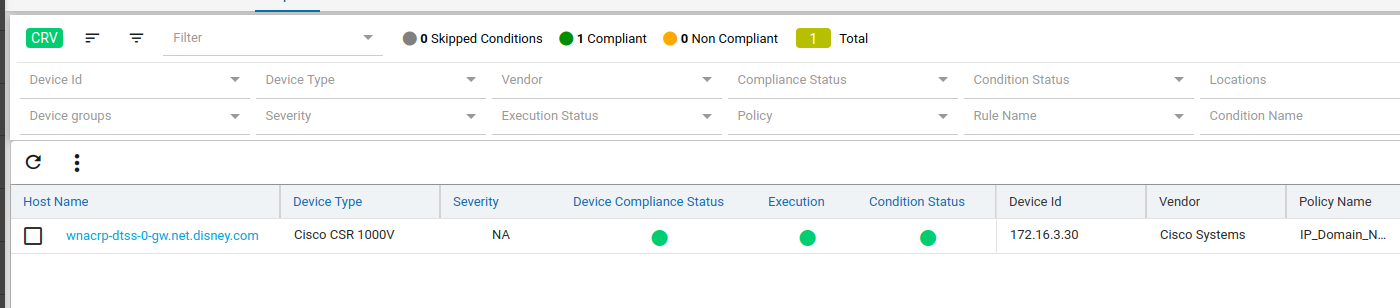

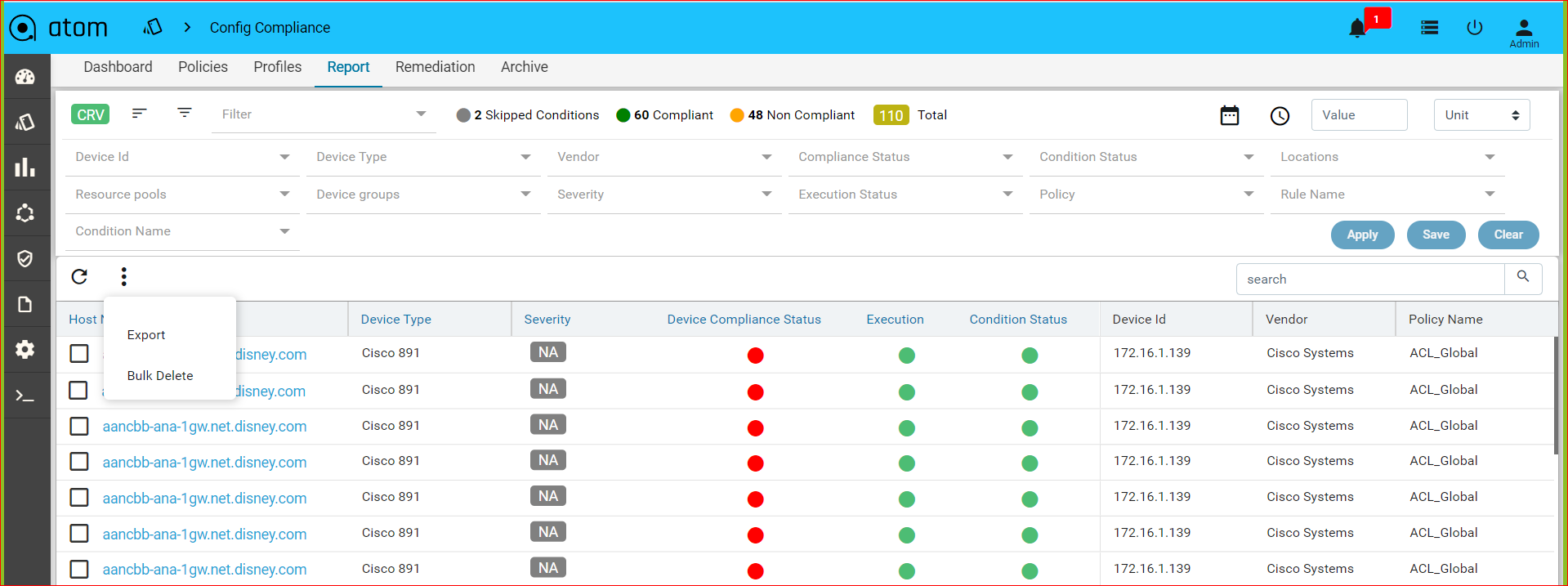

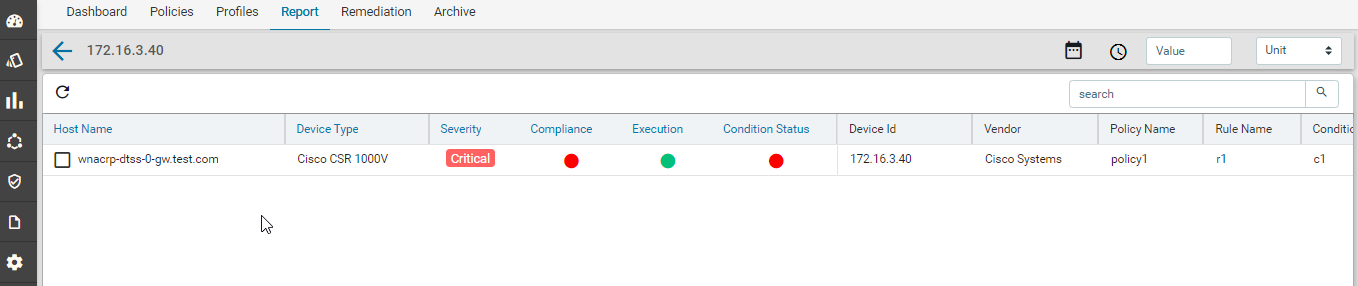

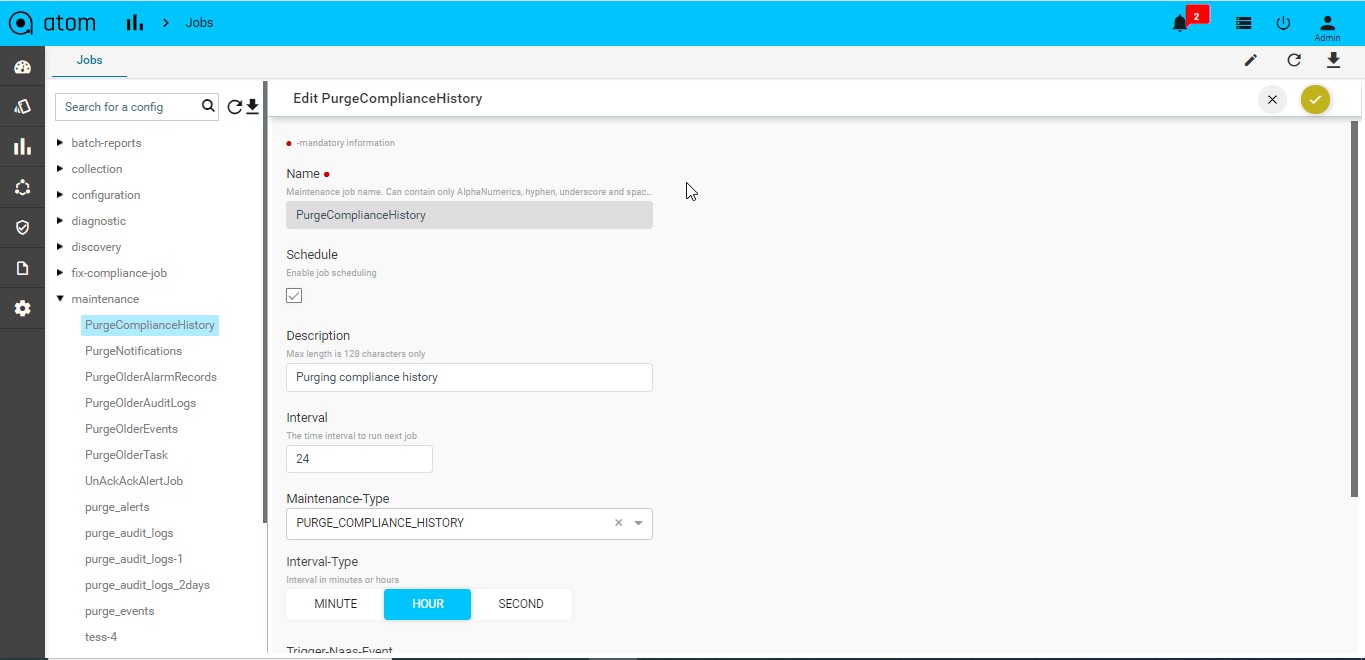

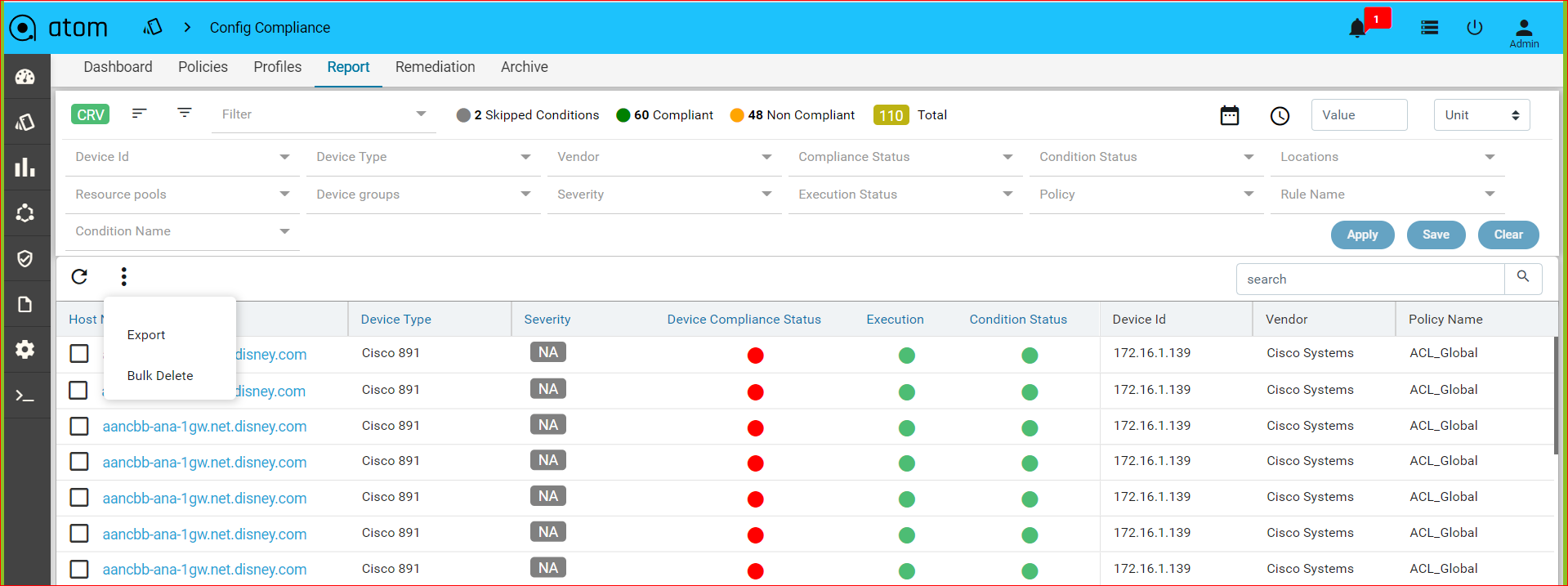

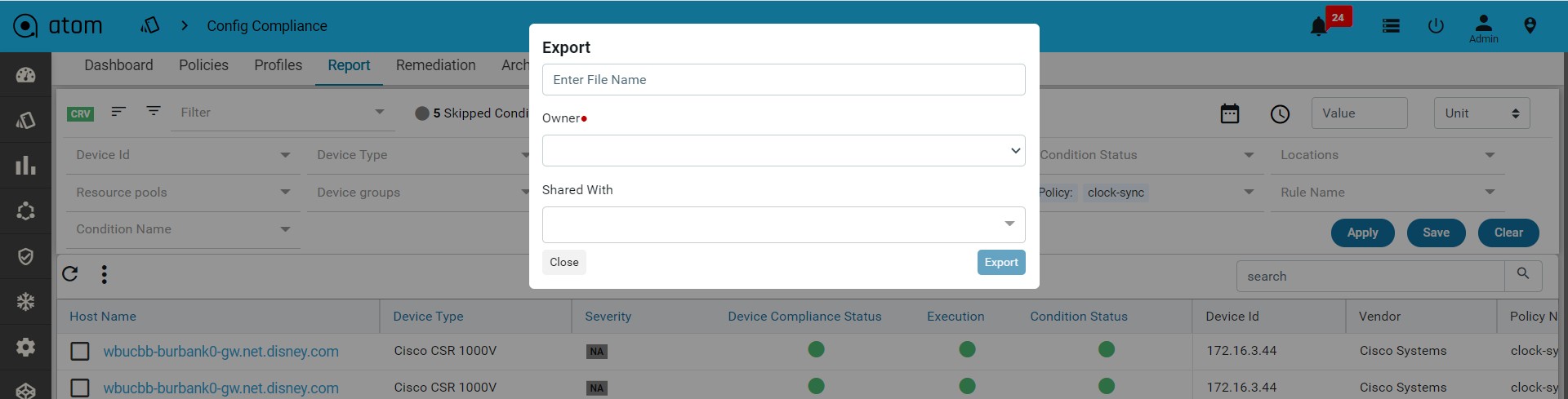

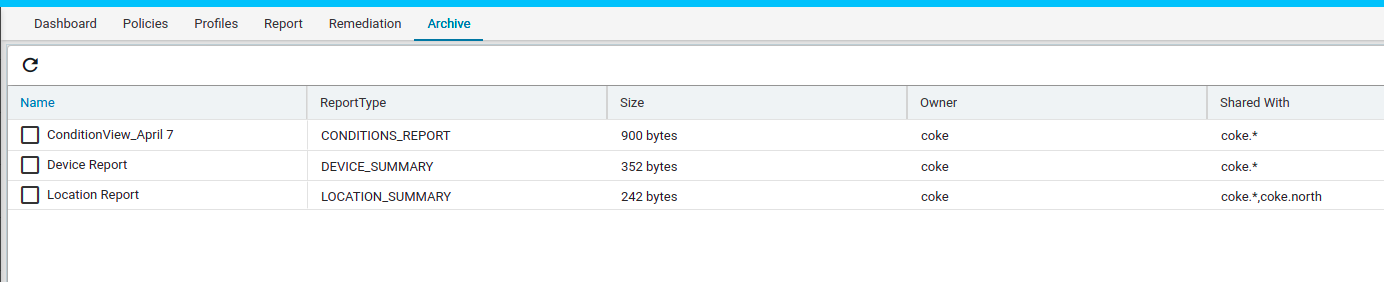

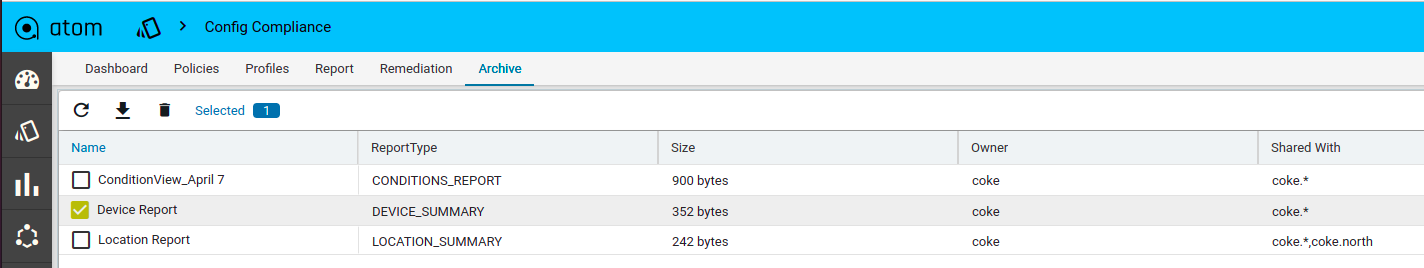

Report

Navigate to Resource Manager > Config Compliance -> reports

Compliance report is generated upon completion of Profile run. For each device, the report lists the compliant and non-compliant policies, rules and conditions .

After profile job is run, audit details can be viewed in Report View

After profile job is run, audit details can be viewed in Report View

Since IP_Domain_Name policy has a condition named Verify_IP_Domain_name, where it didn’t meet the required criteria. The condition is marked as Non_compliant.

Severity: Severity the condition where the condition Match Action or Non Match Action is of type Raise_violation or Raise_violation_and_continue.

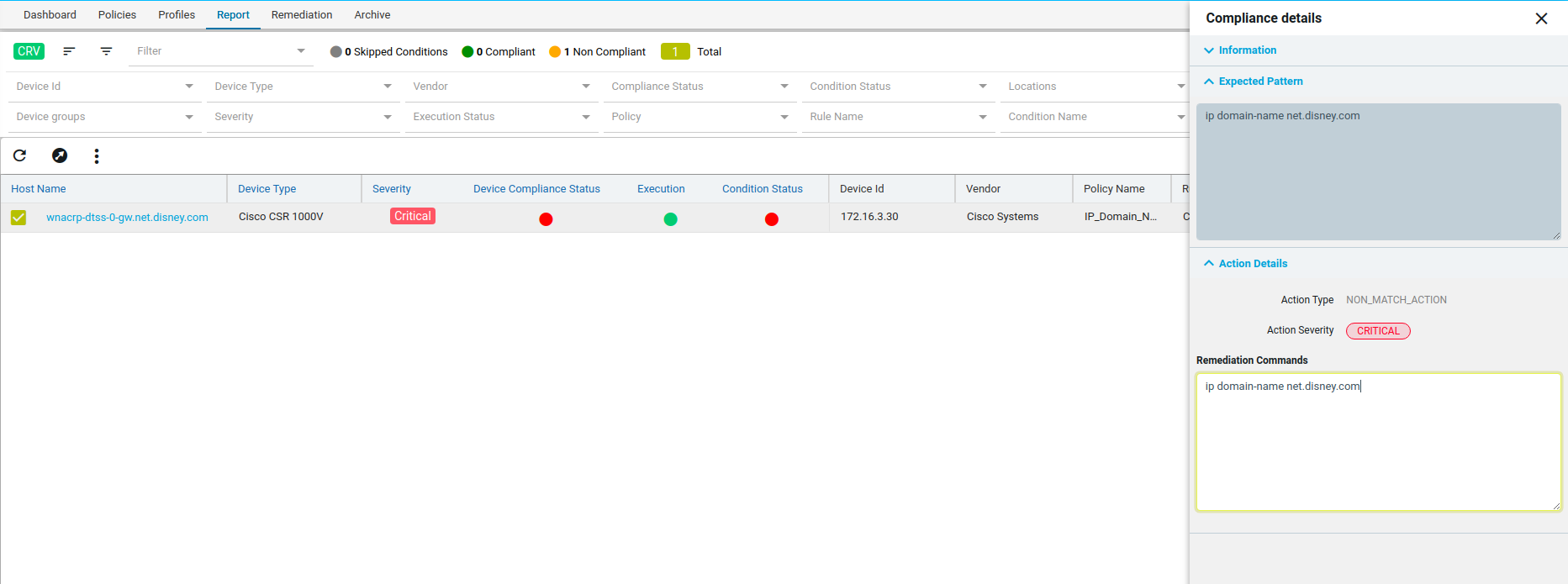

Upon checking the row you can see the expected and the fix commands for that condition along with action-severity, action-type and other metadata related to device & condition.

Upon checking the row you can see the expected and the fix commands for that condition along with action-severity, action-type and other metadata related to device & condition.

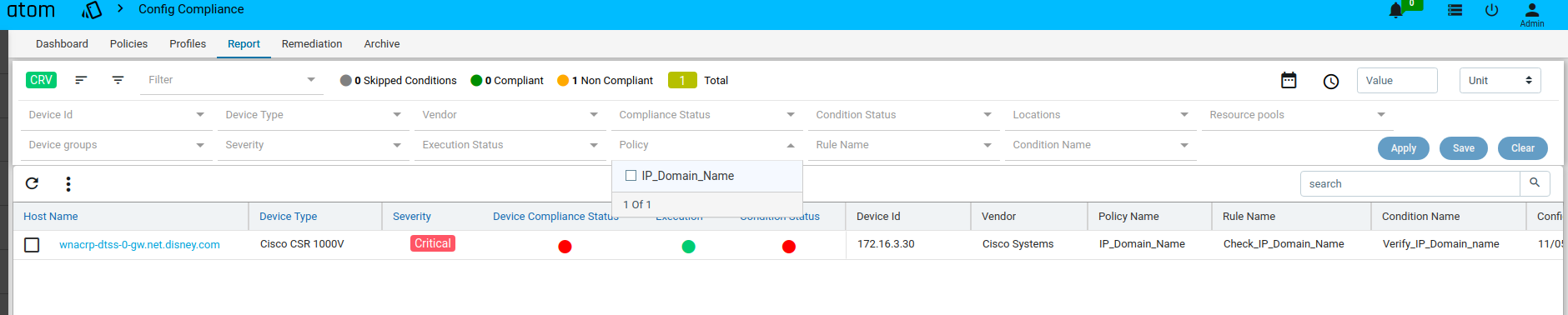

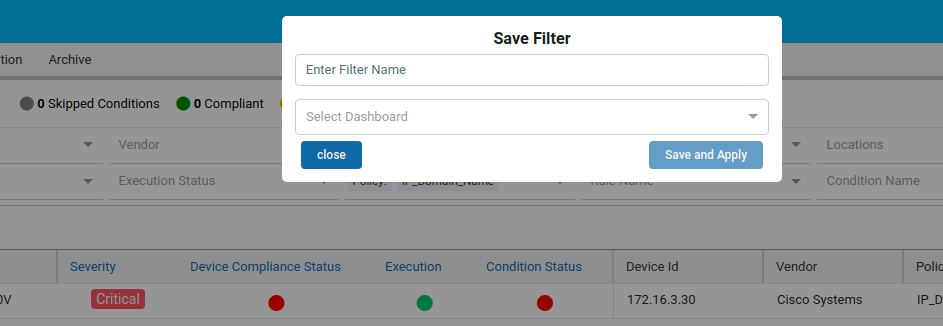

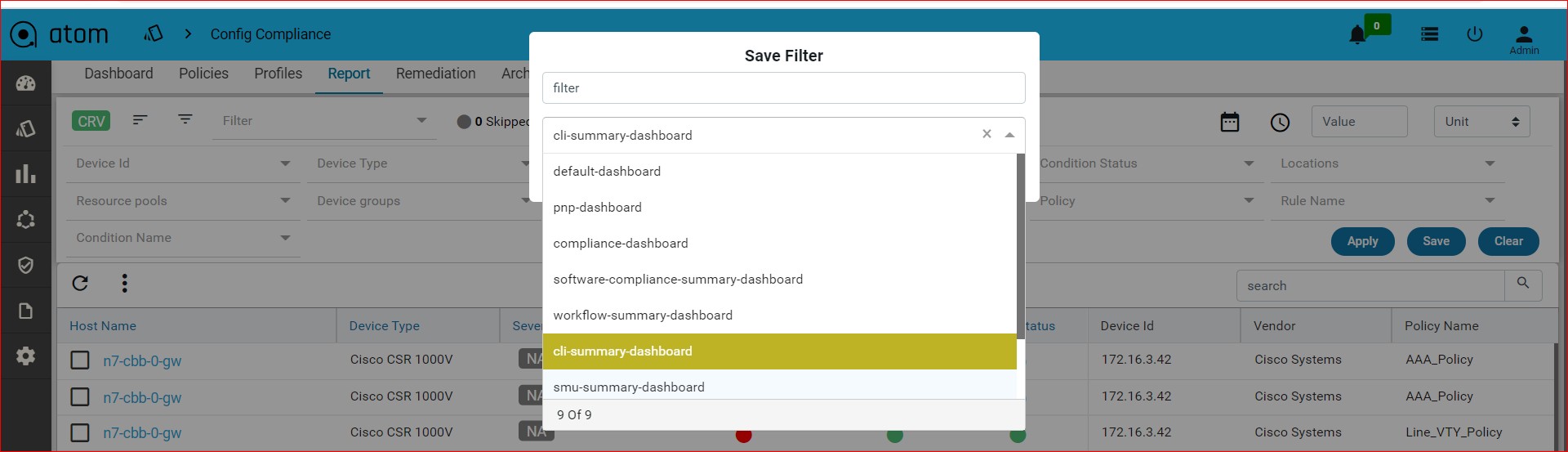

The reports section also facilitates users to filter the results of what user is interested in. The dropdown will display all the possible values for the filters. Users can try out any combination and see the results. By clicking on the apply button.

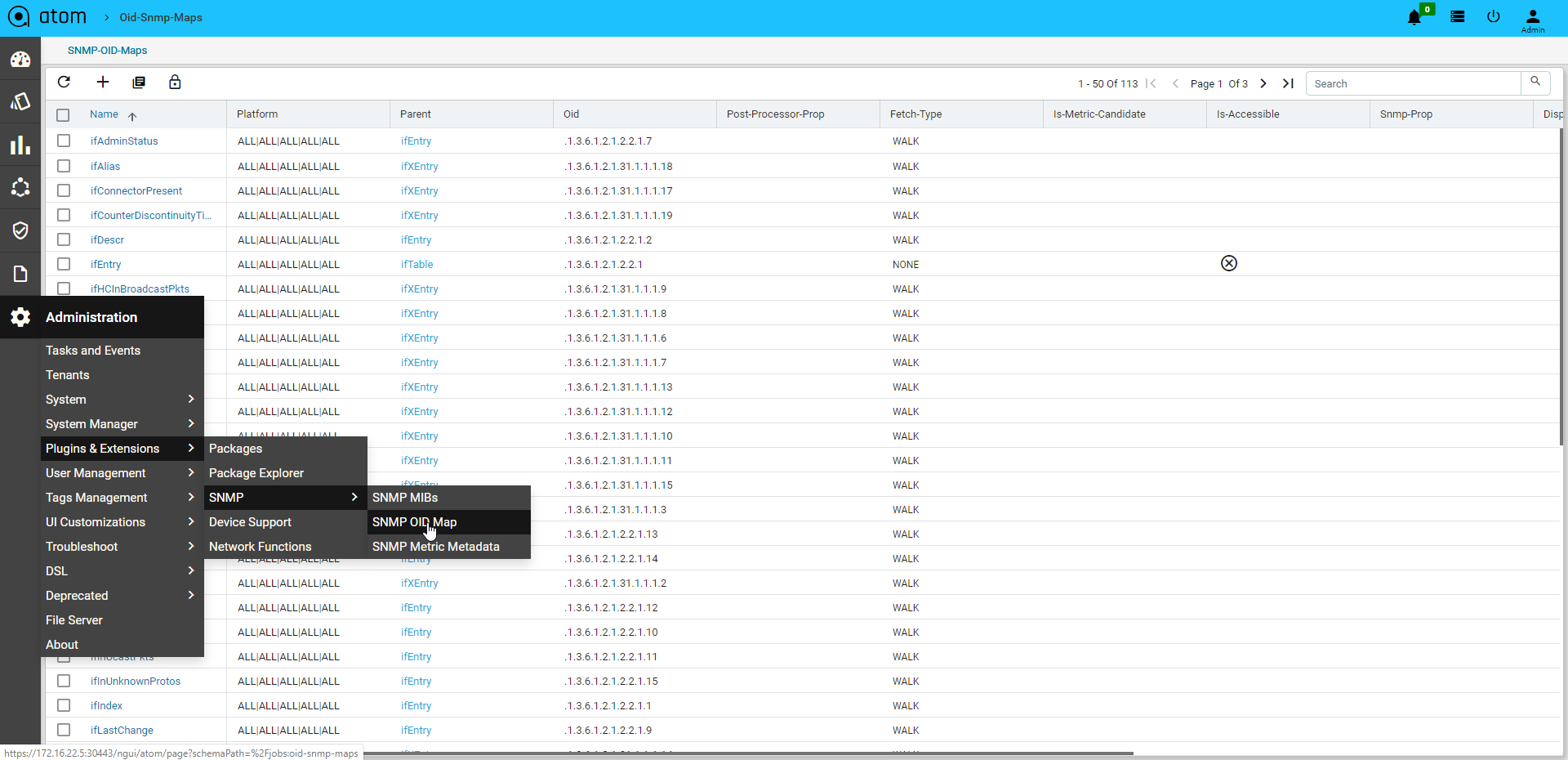

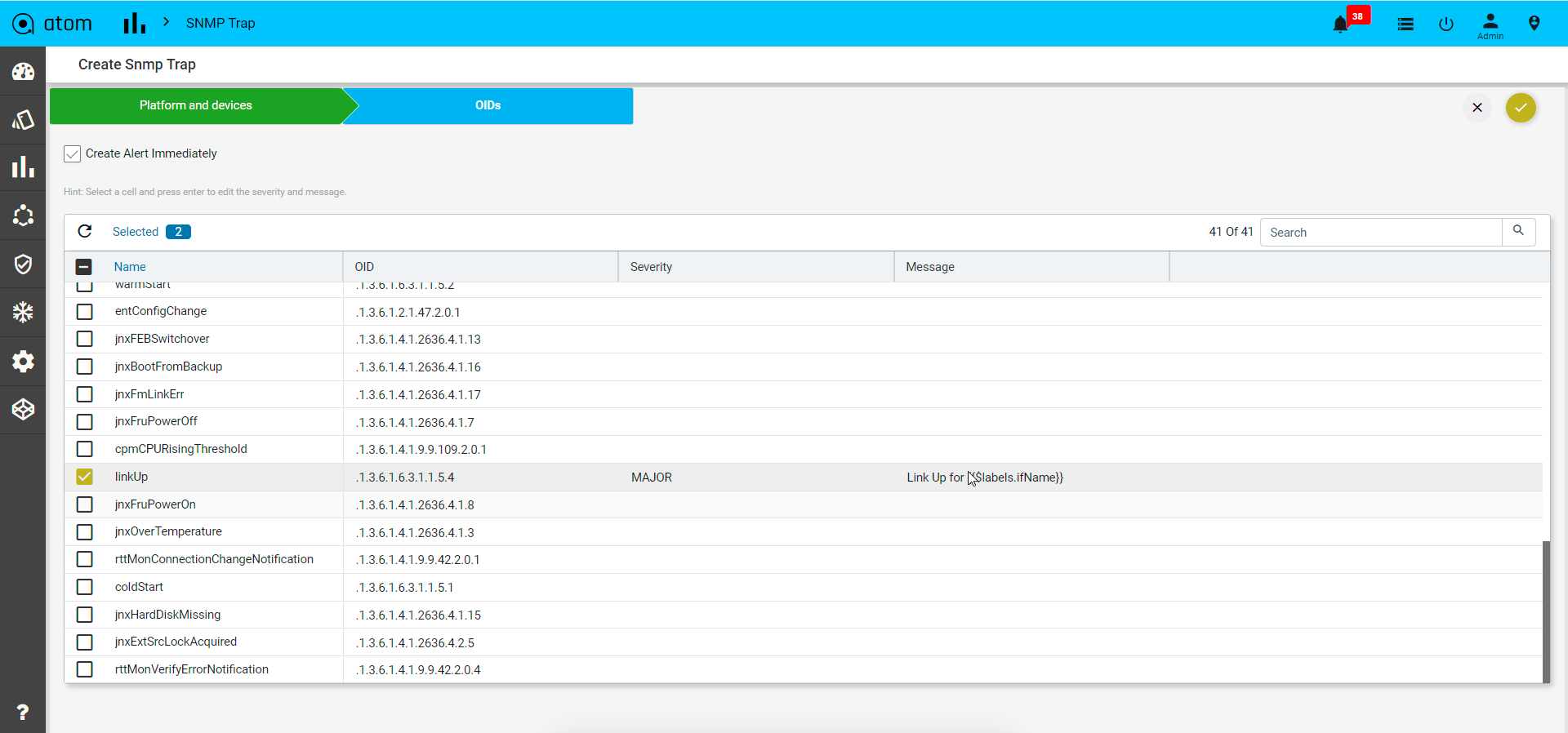

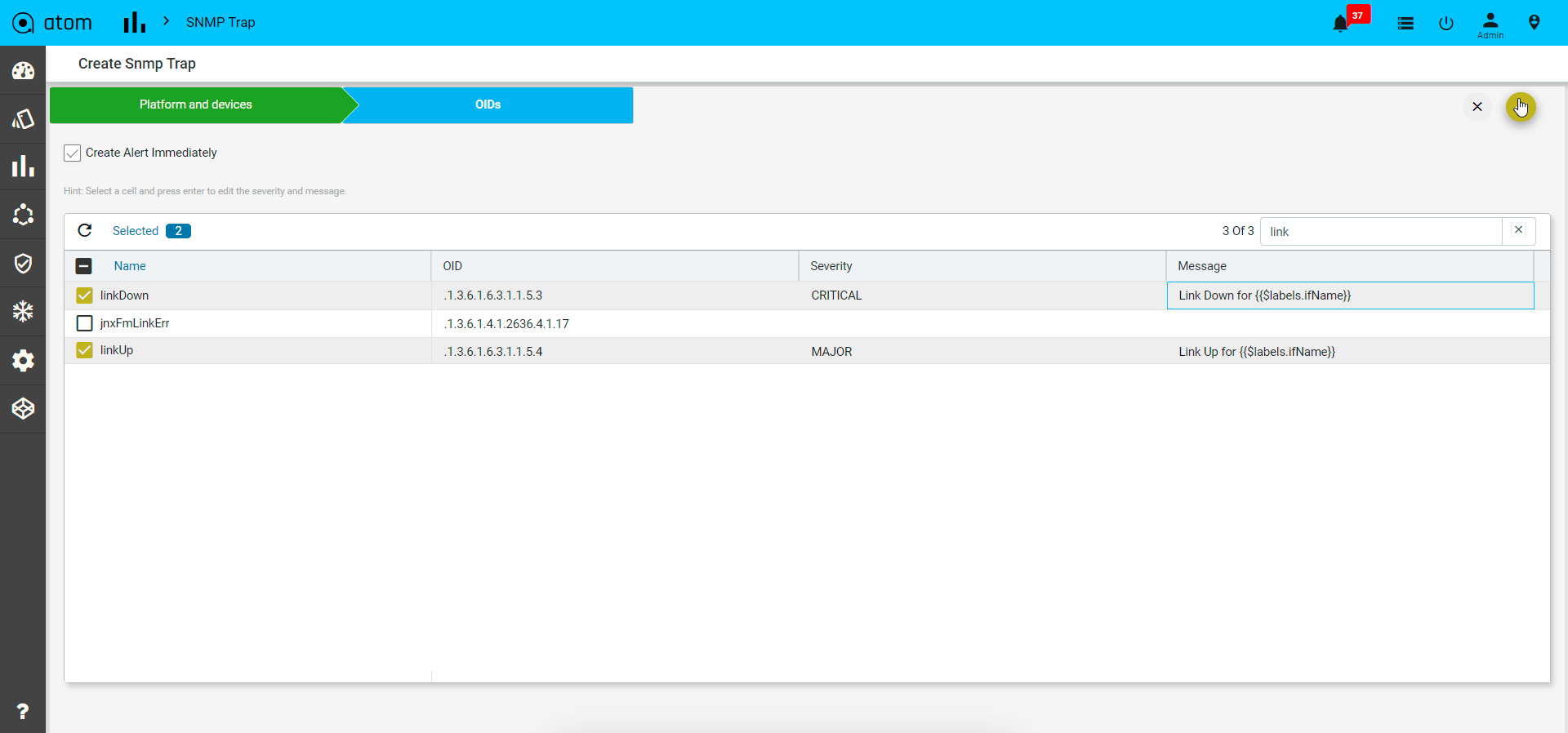

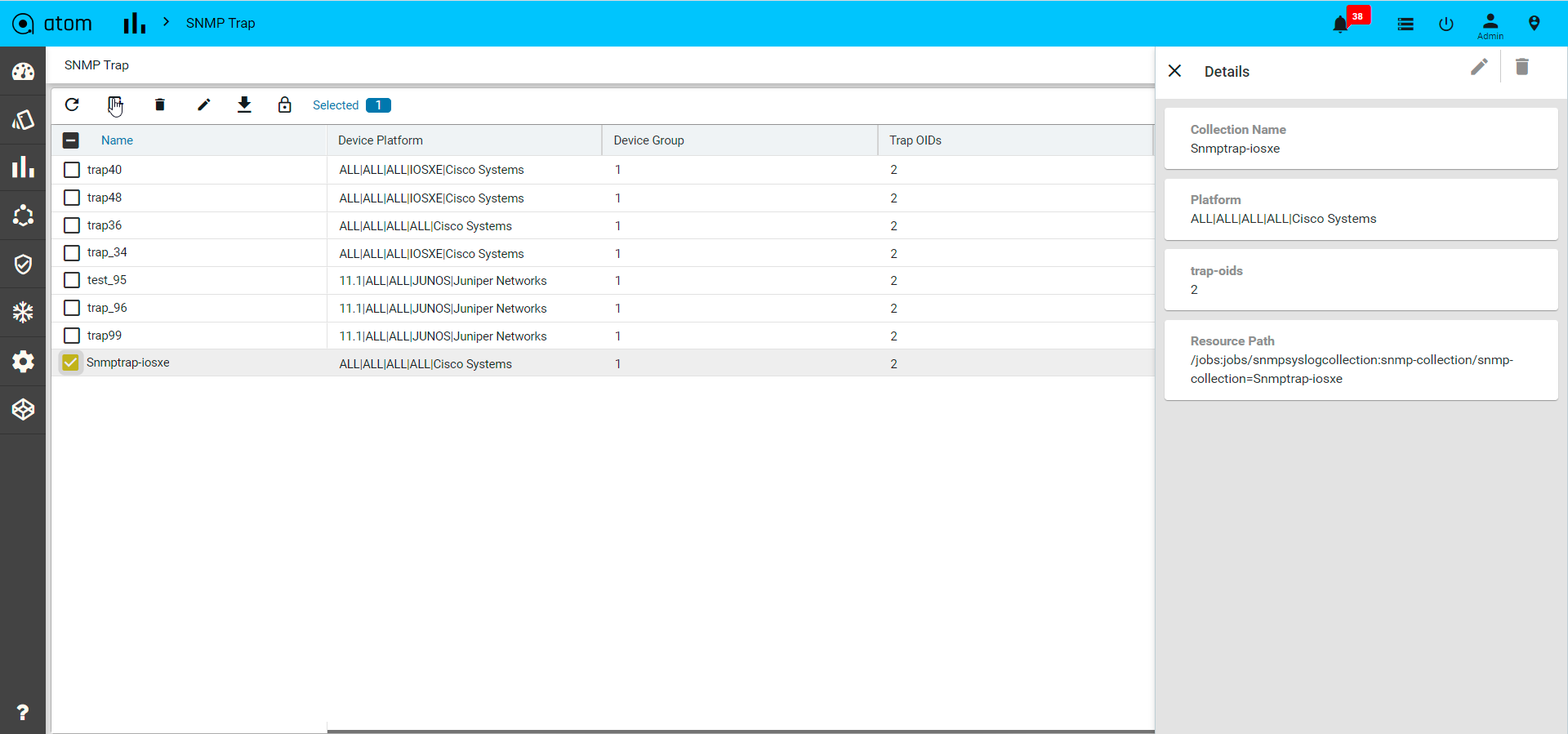

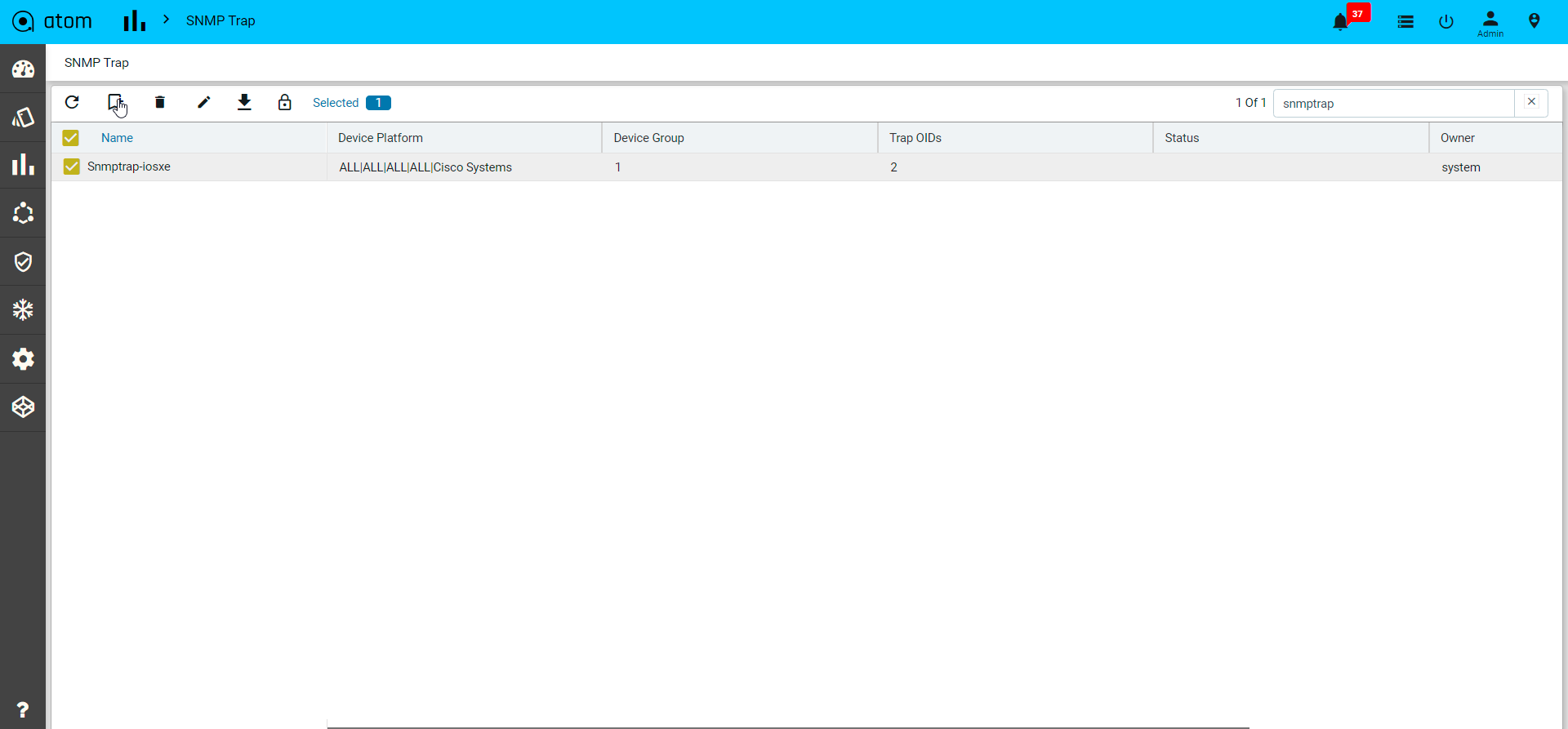

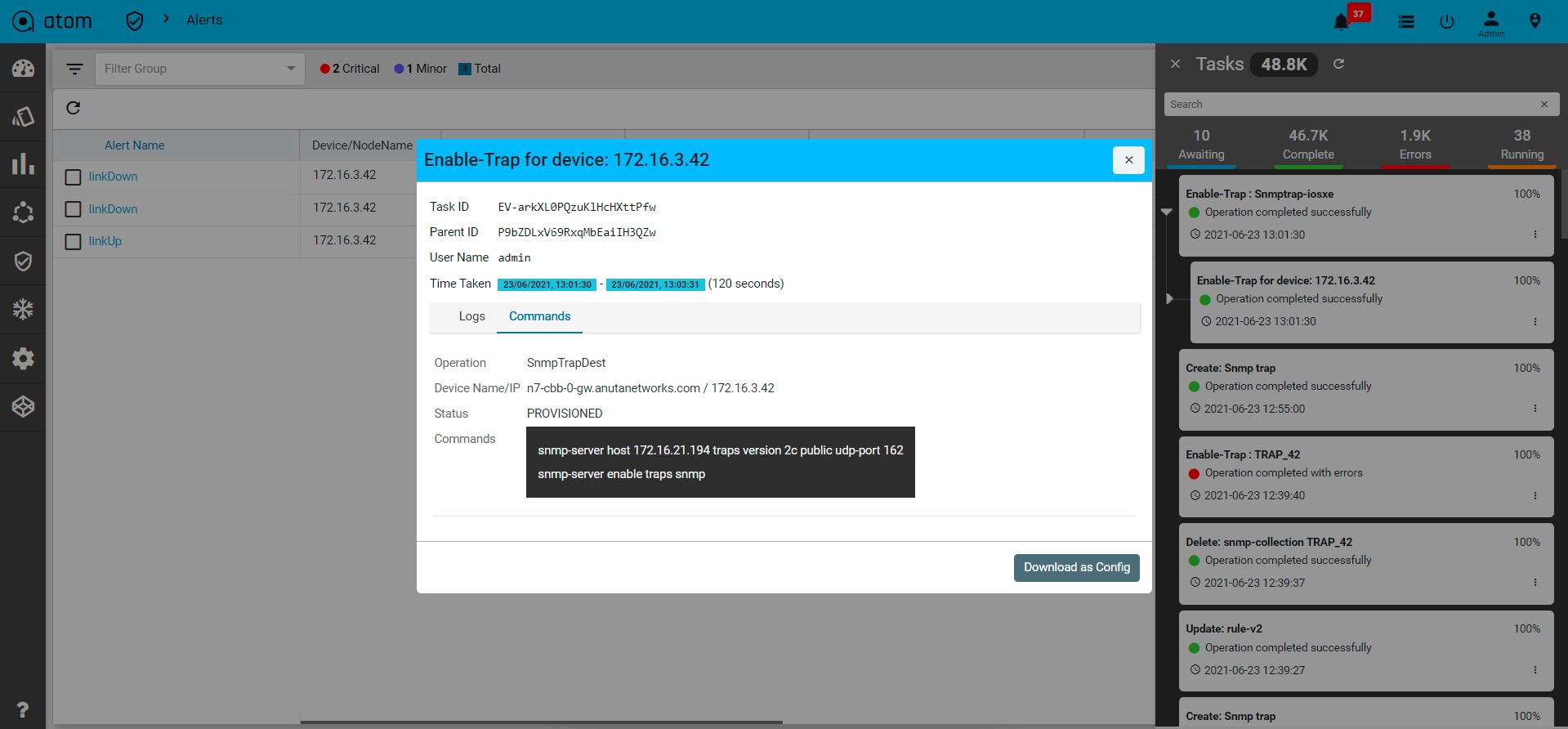

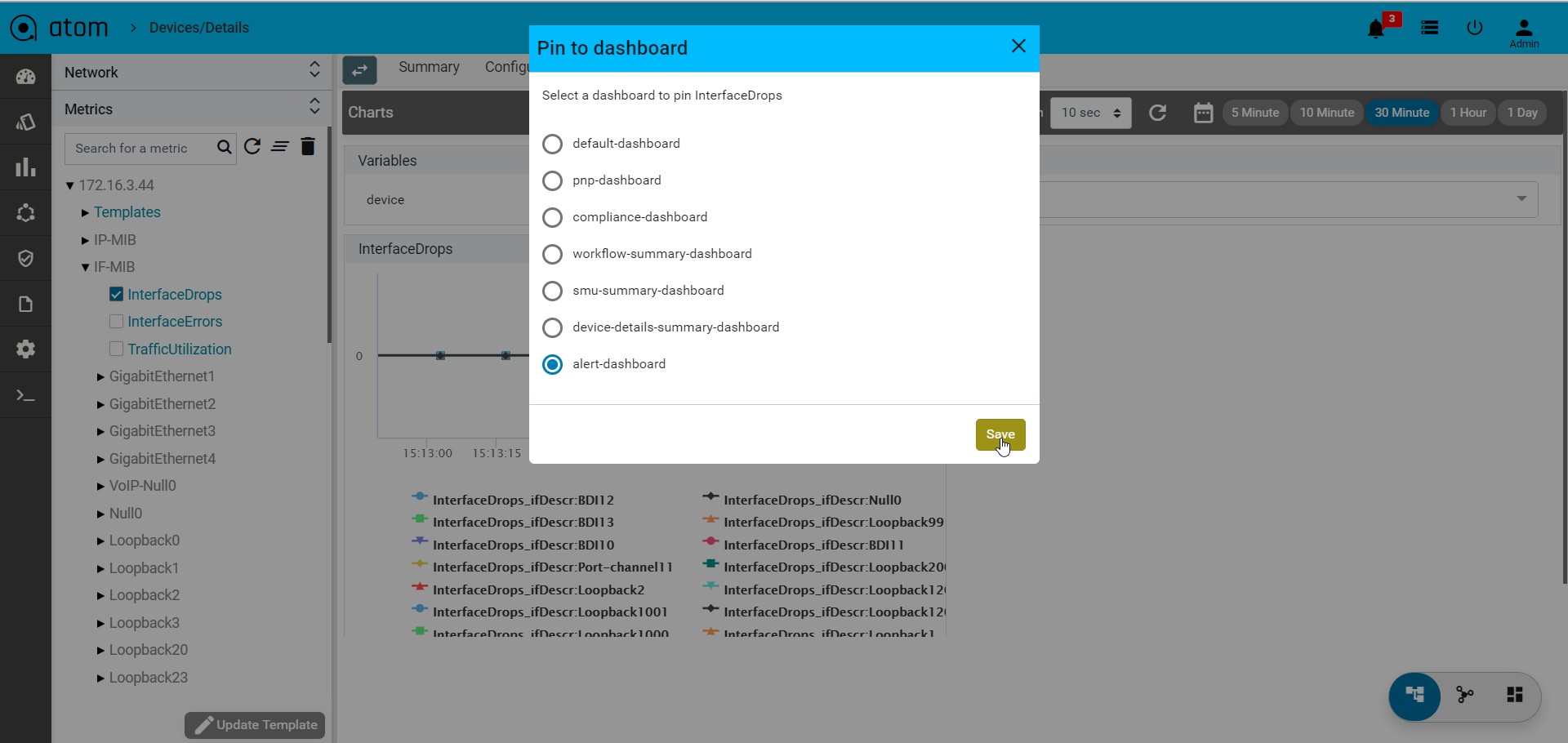

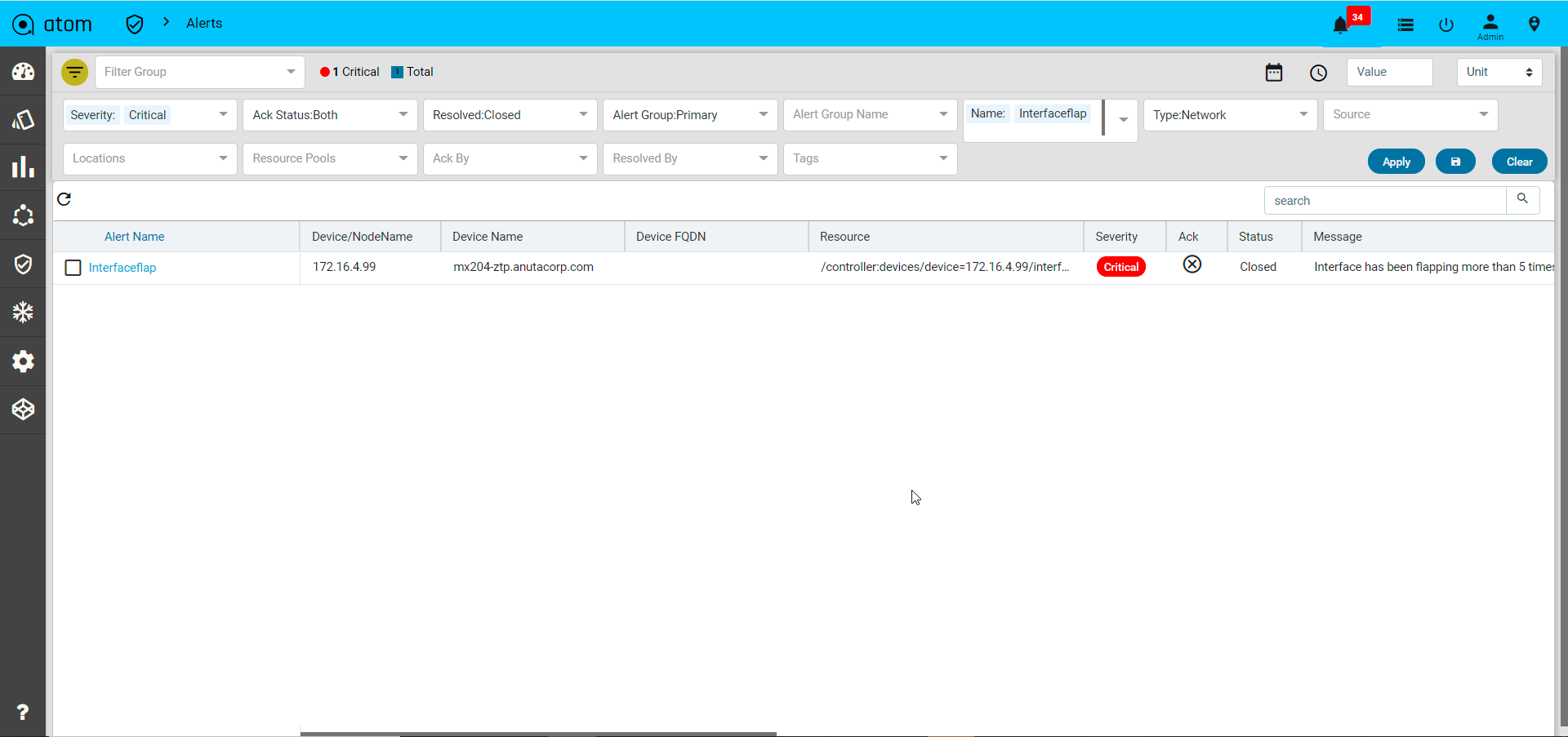

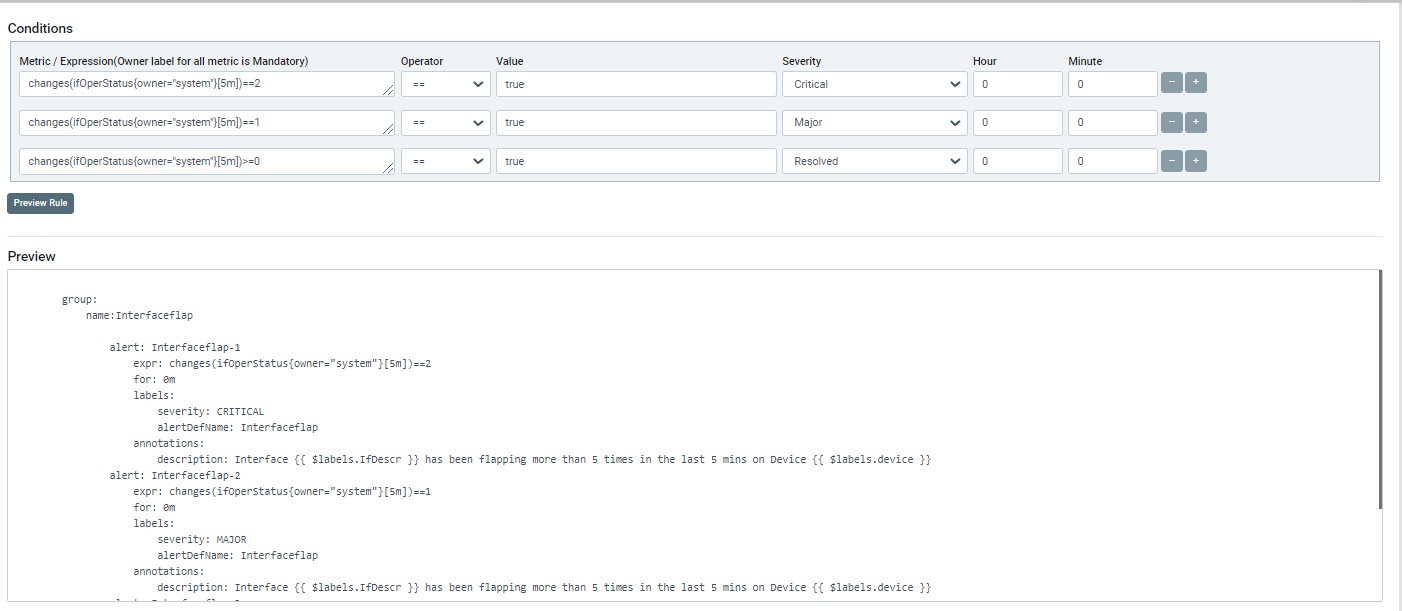

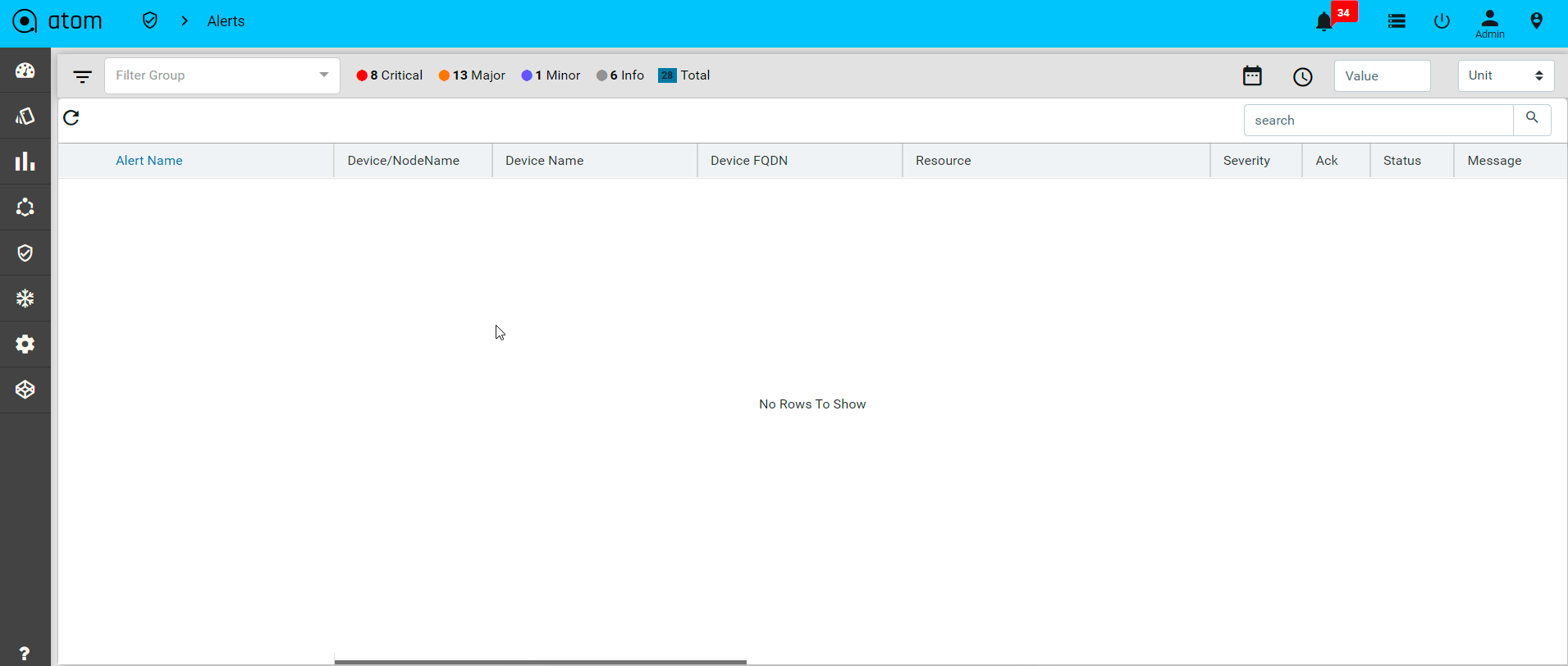

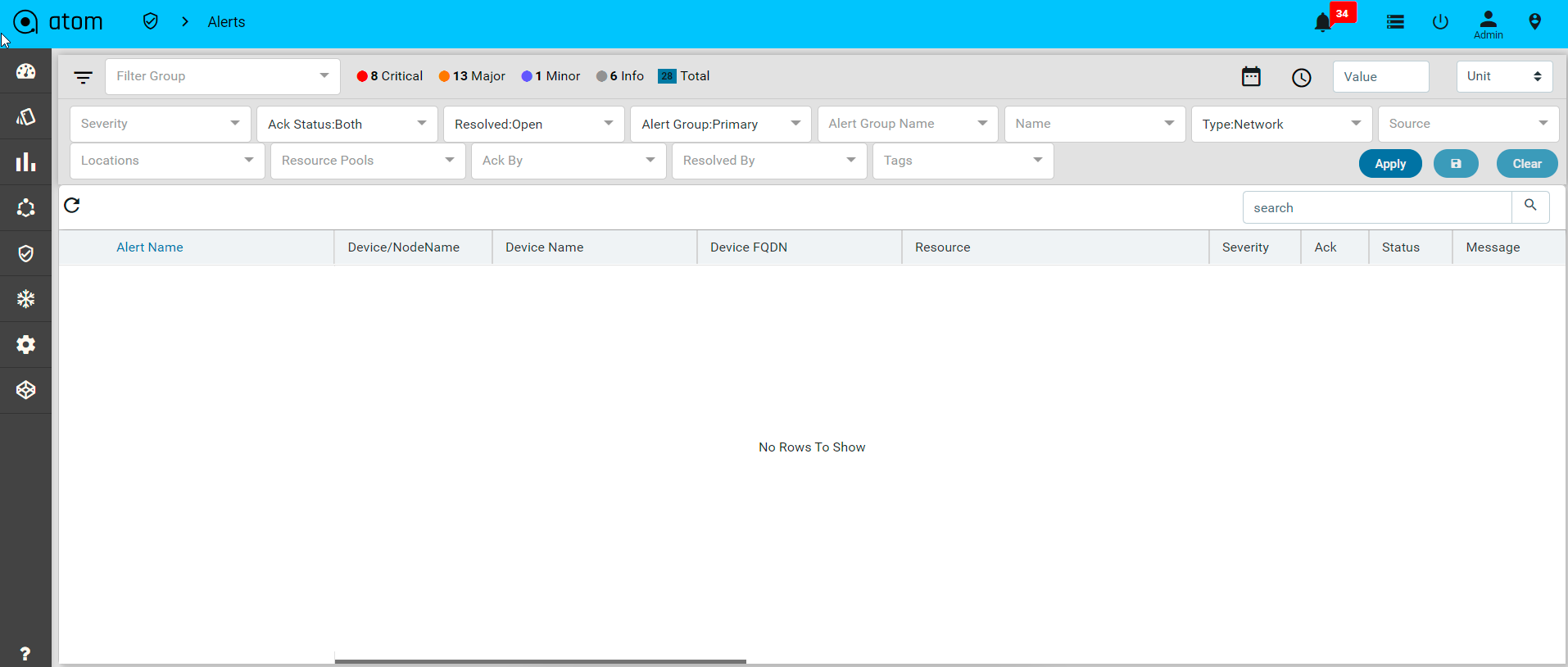

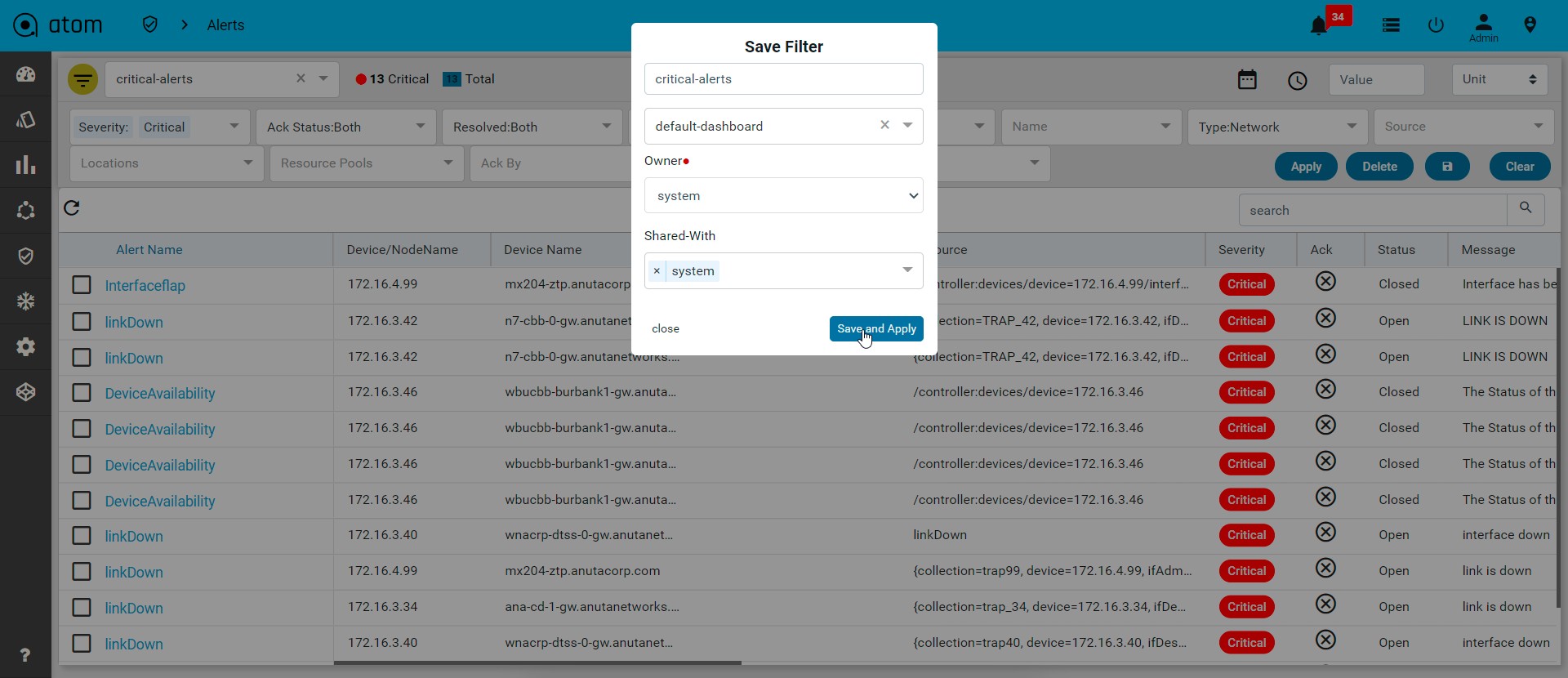

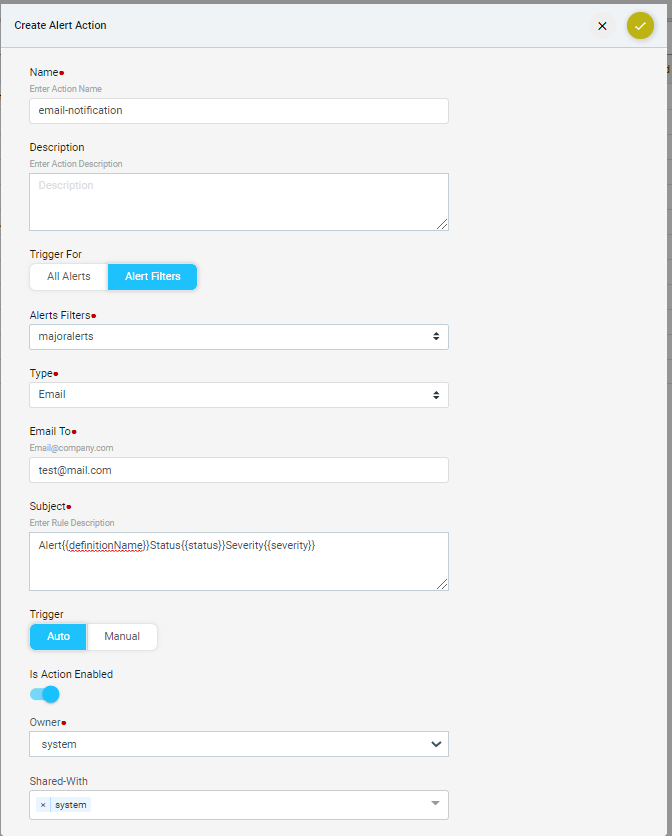

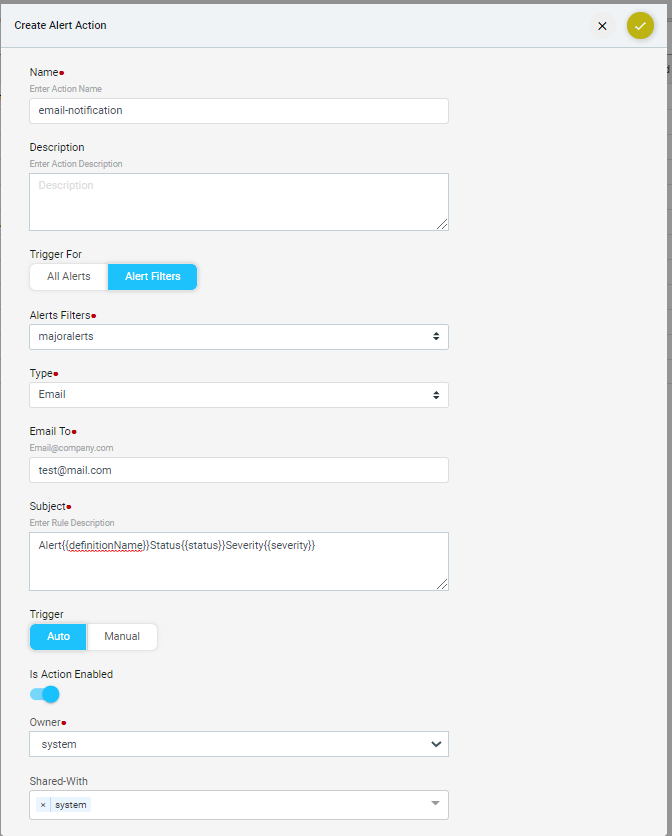

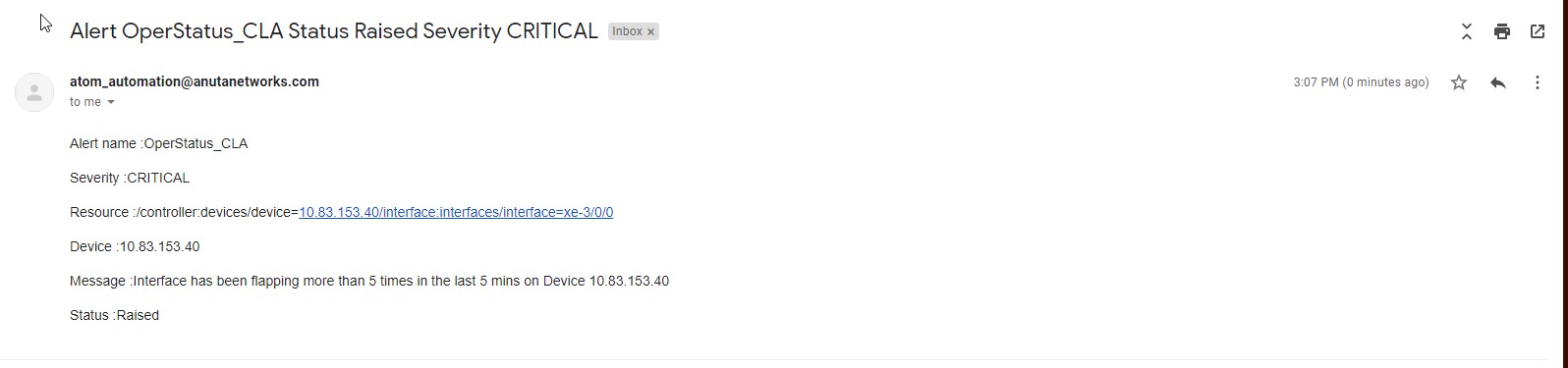

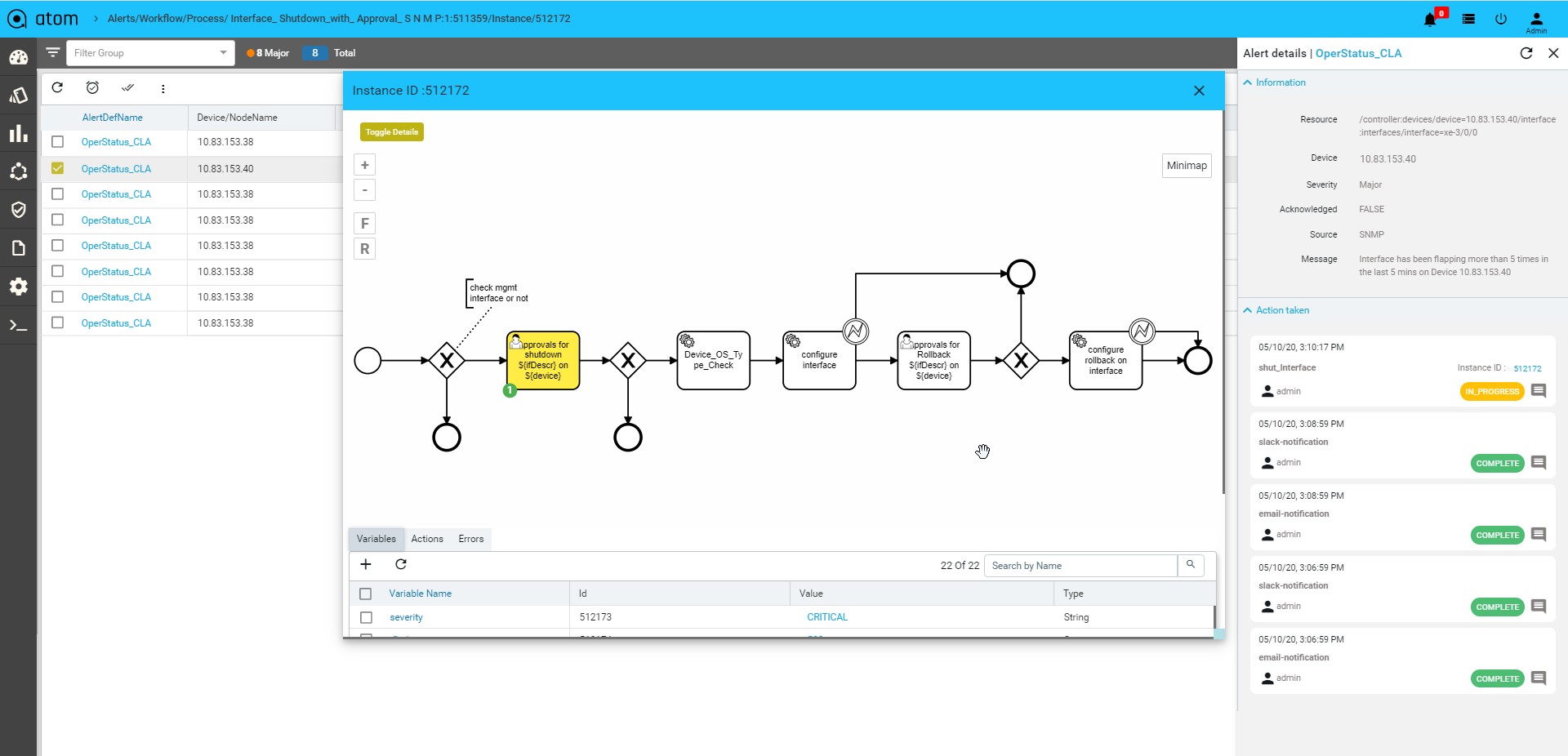

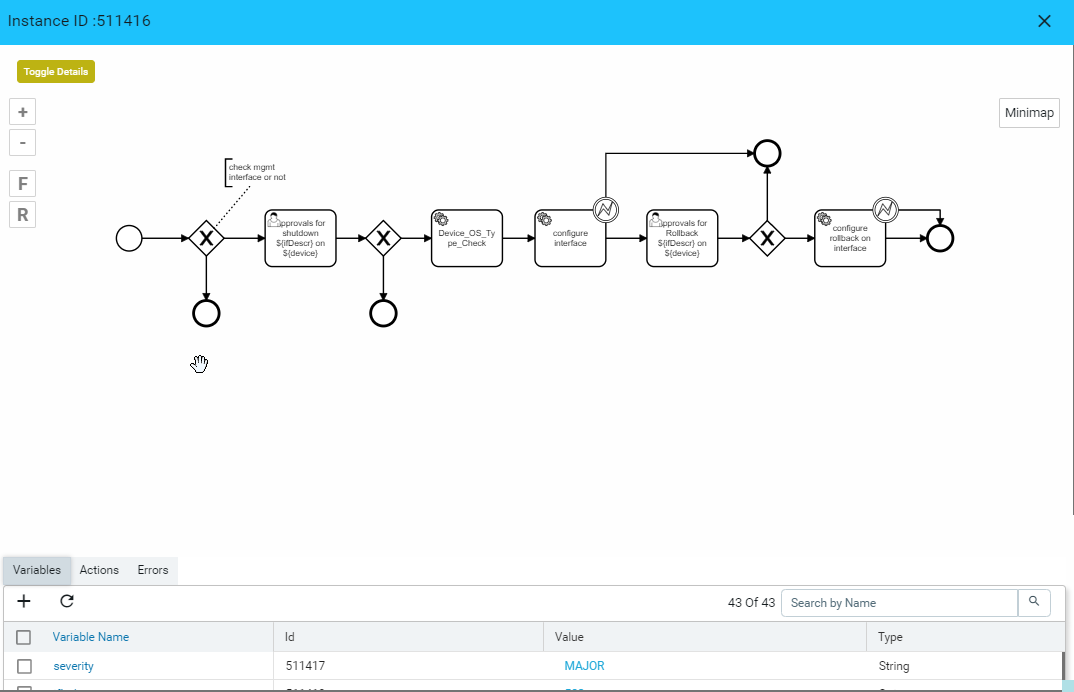

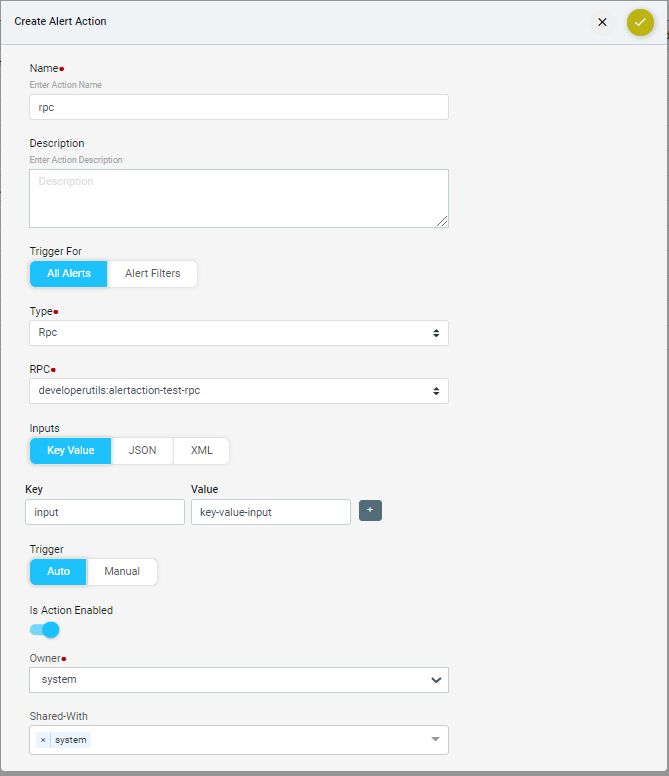

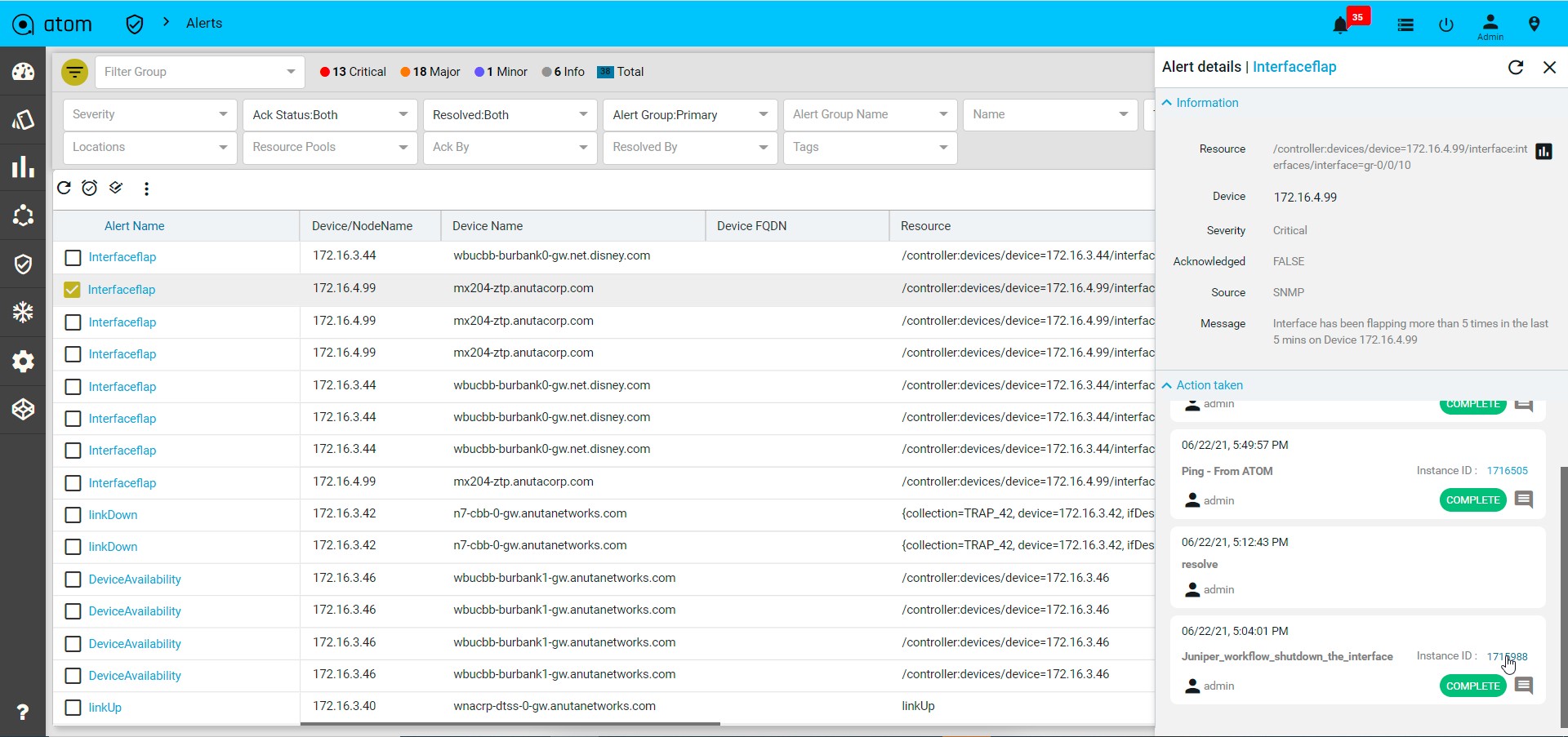

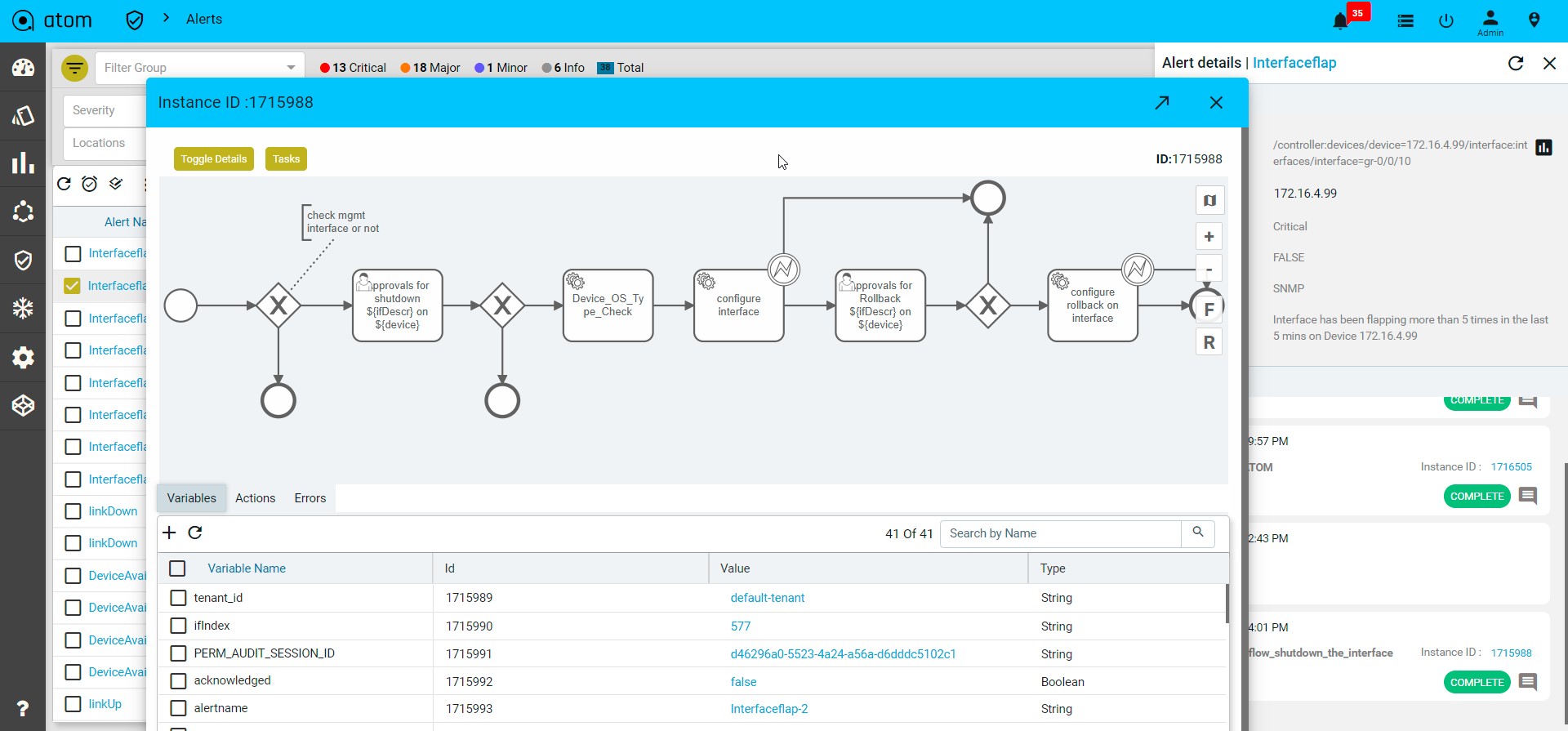

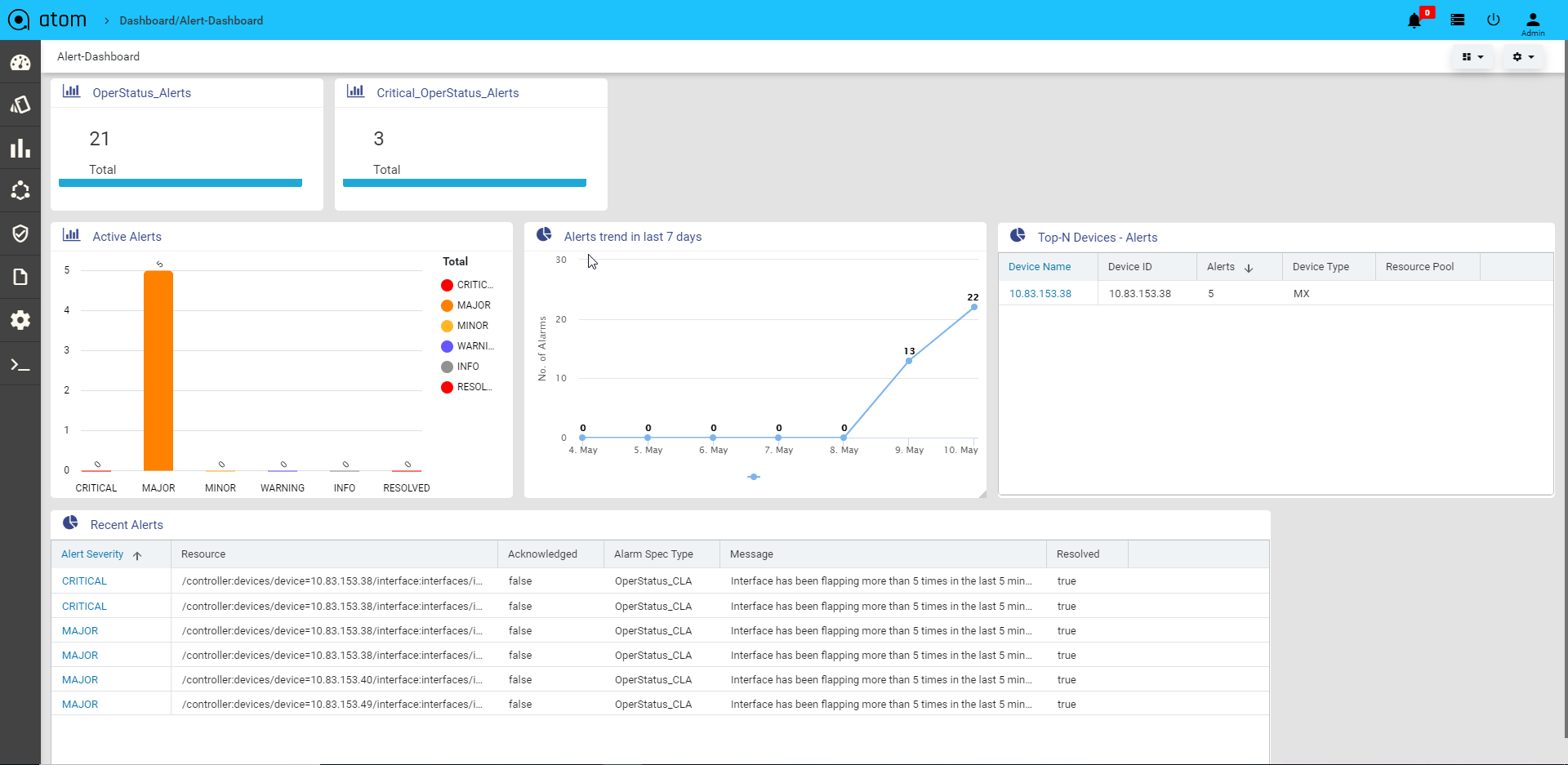

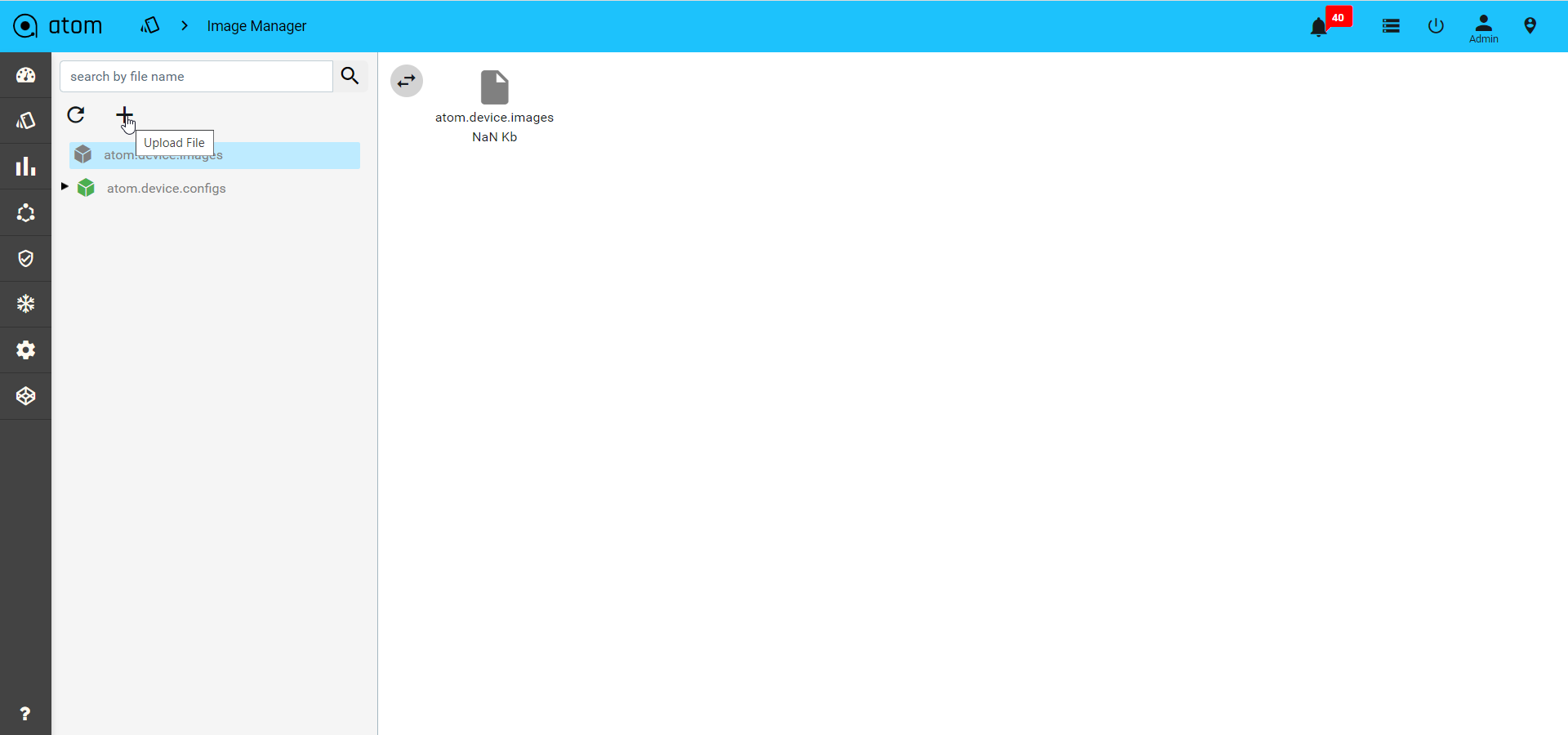

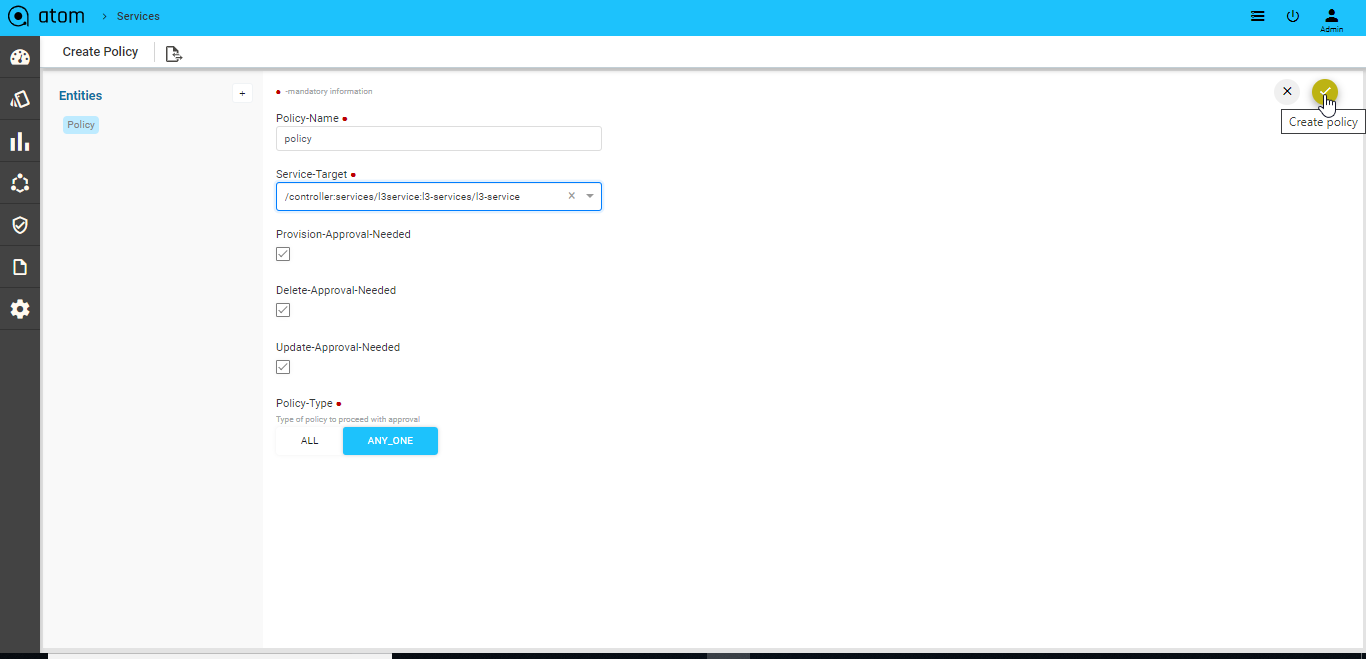

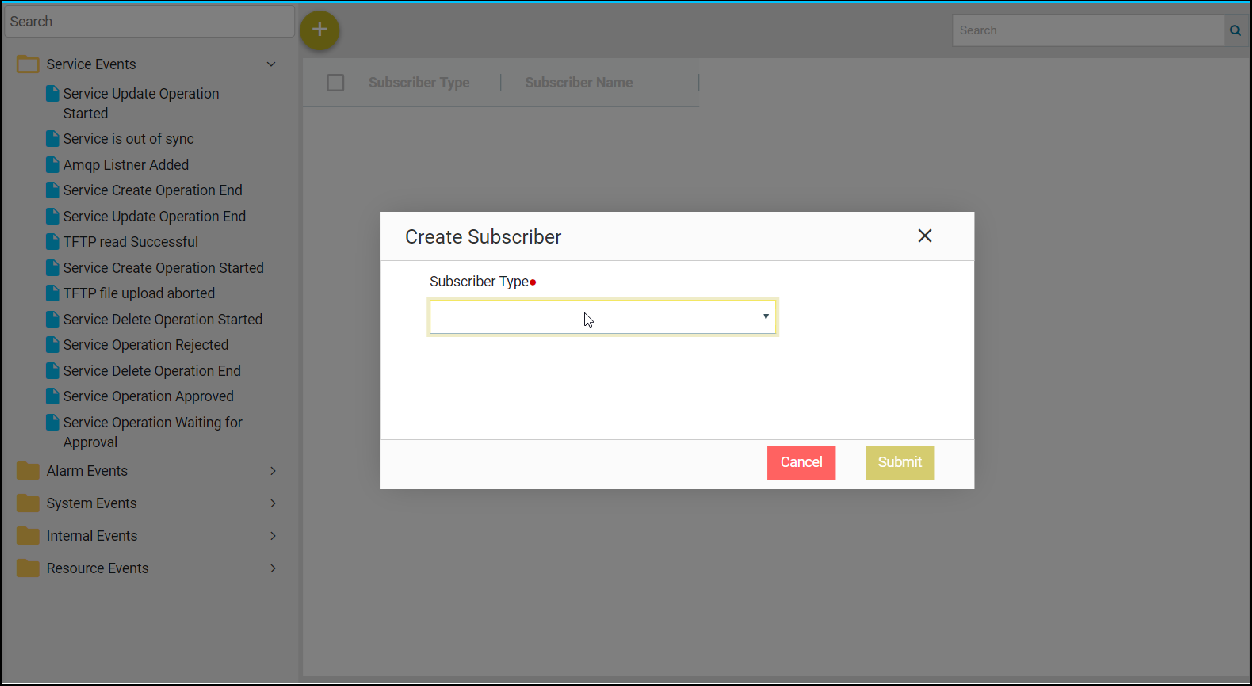

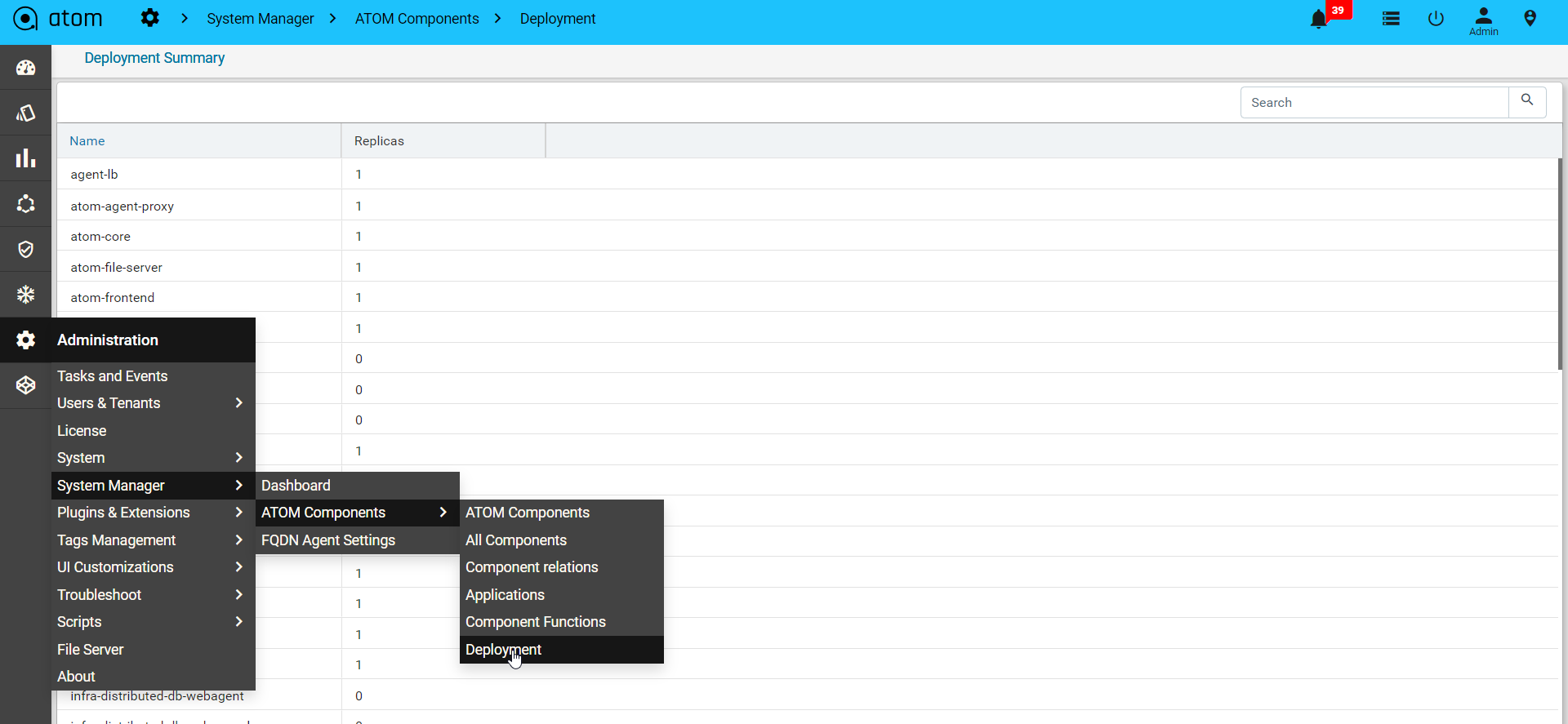

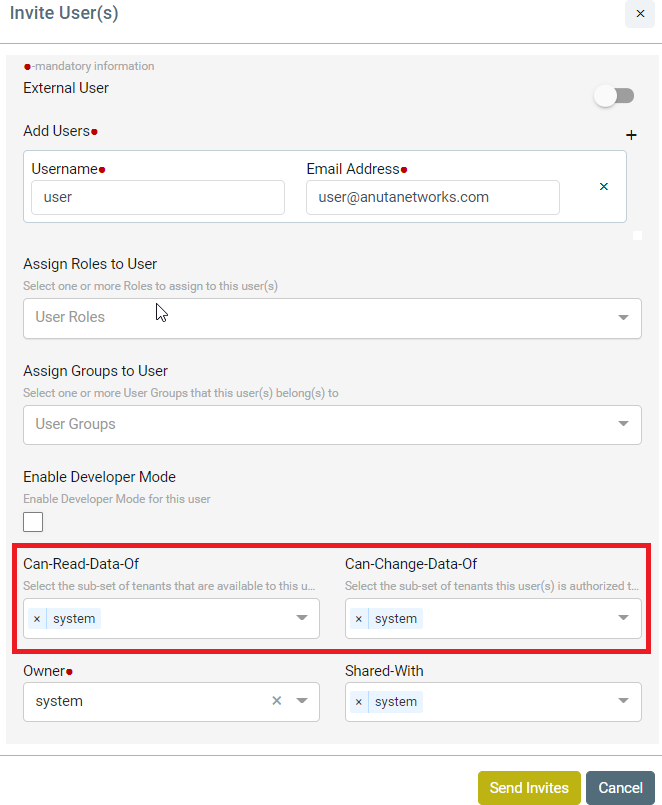

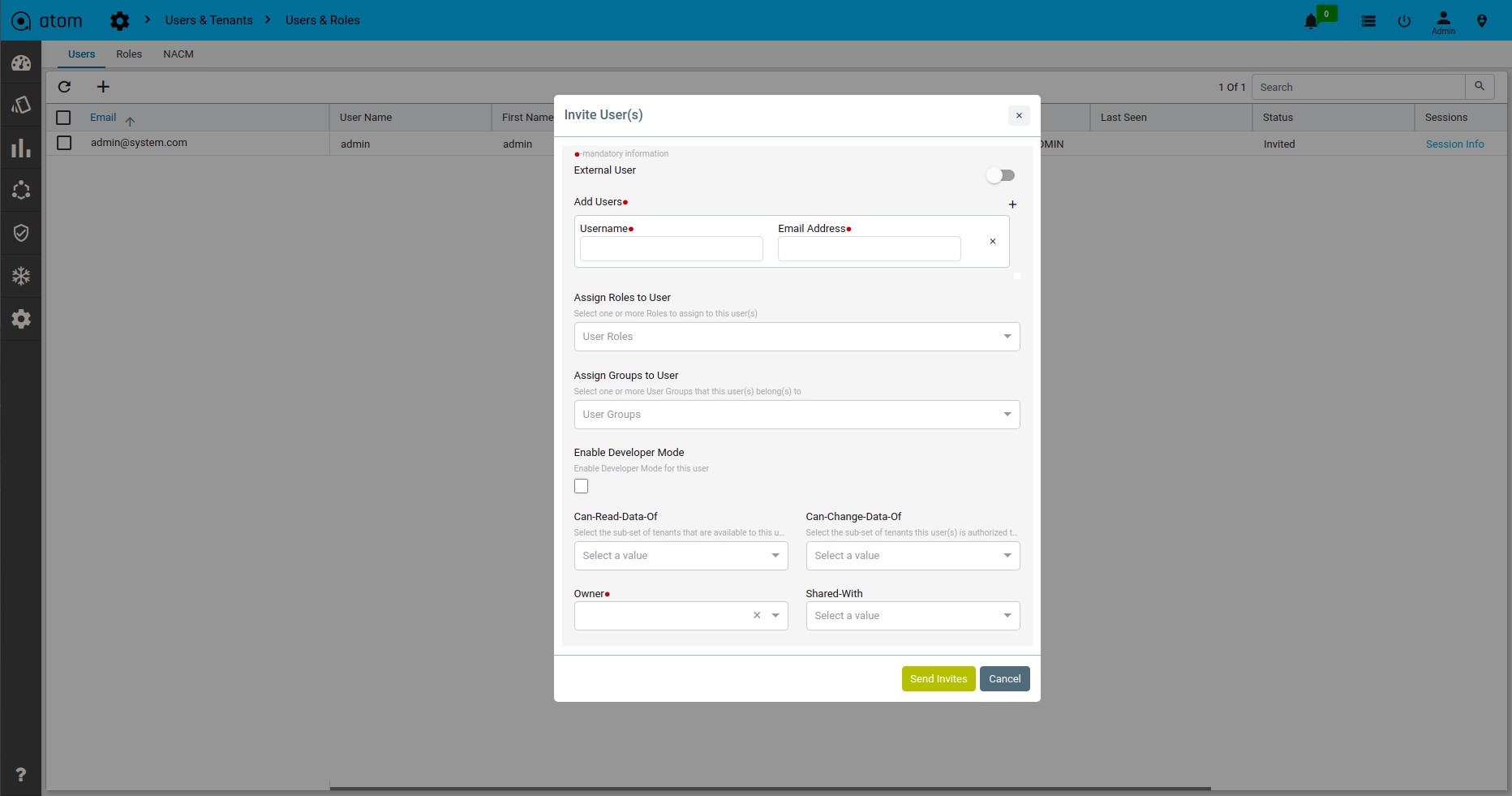

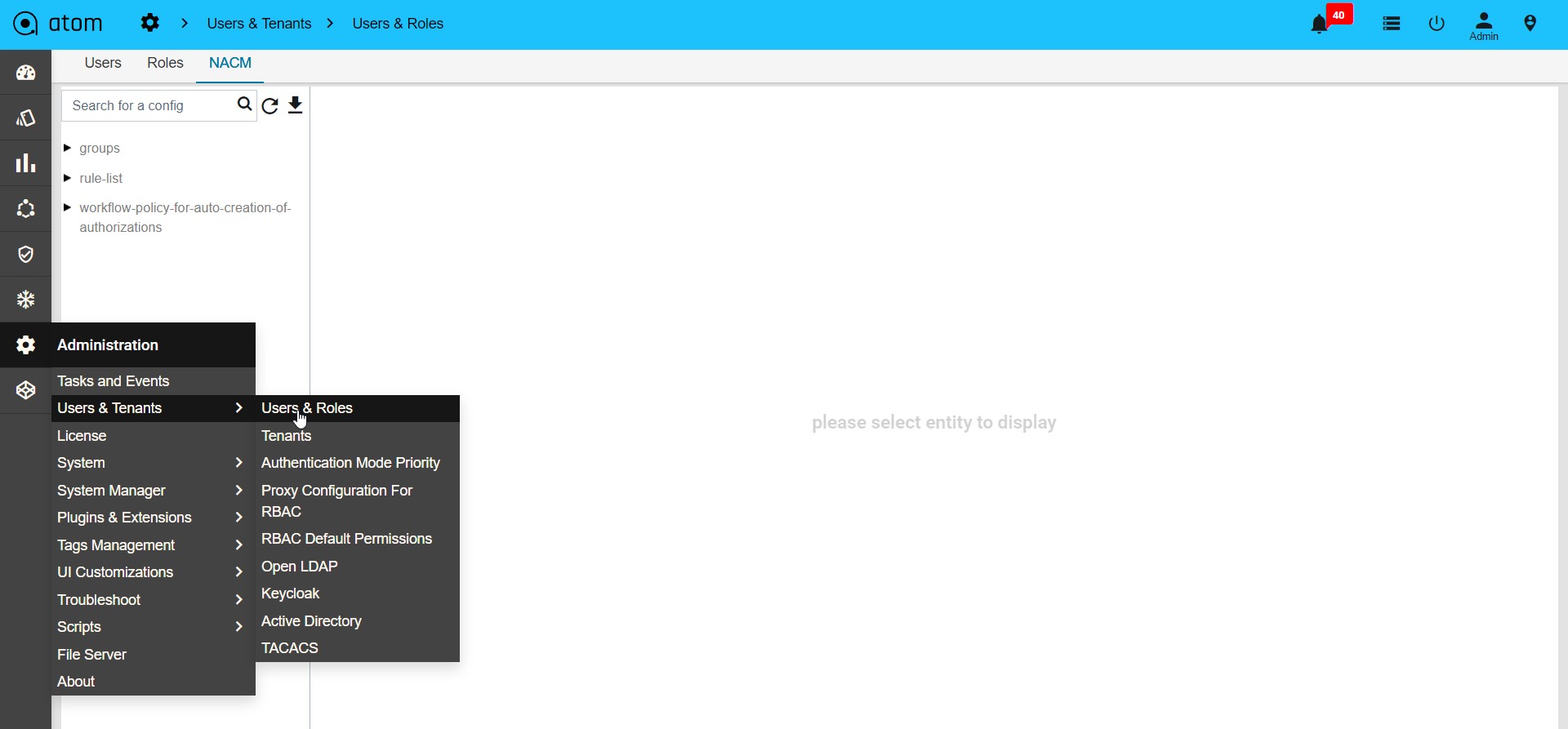

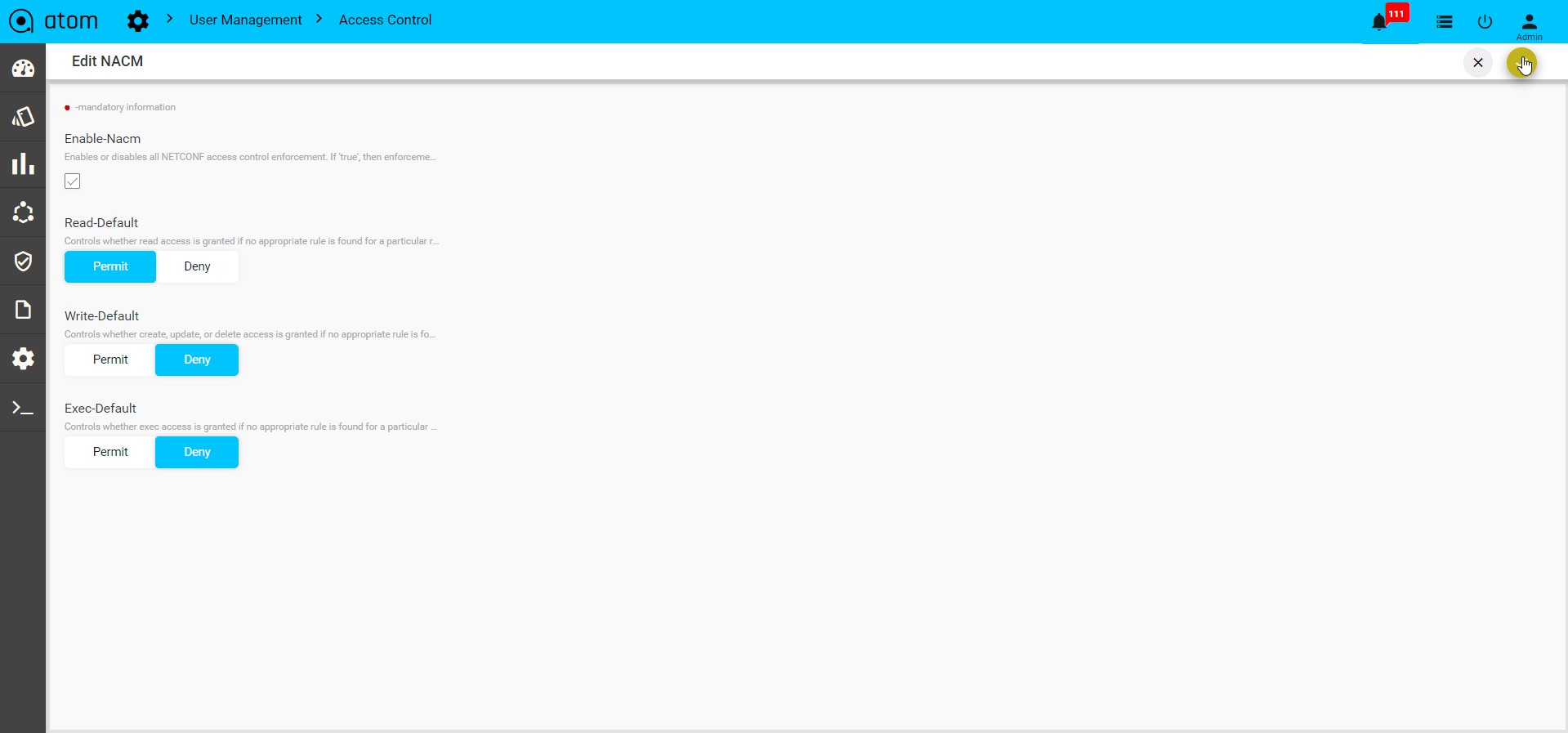

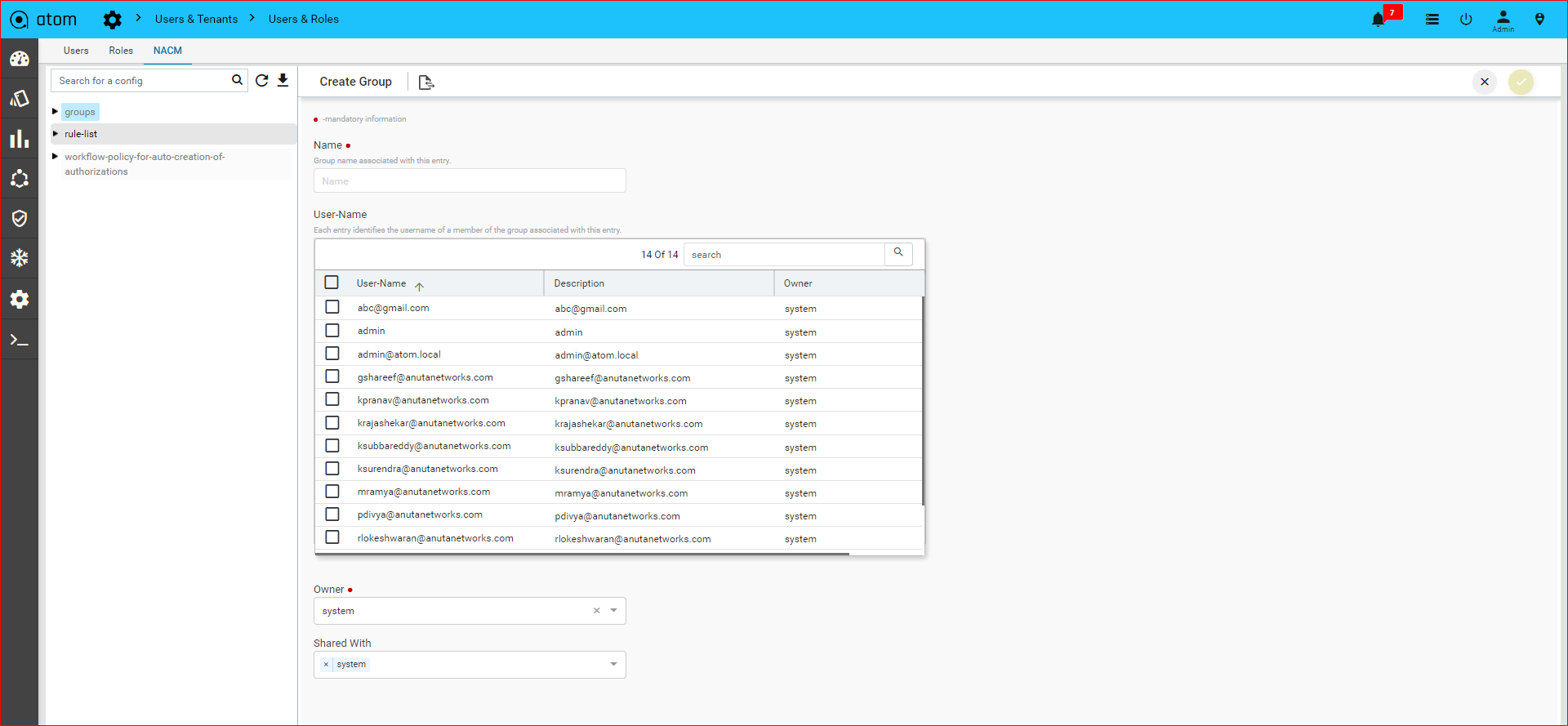

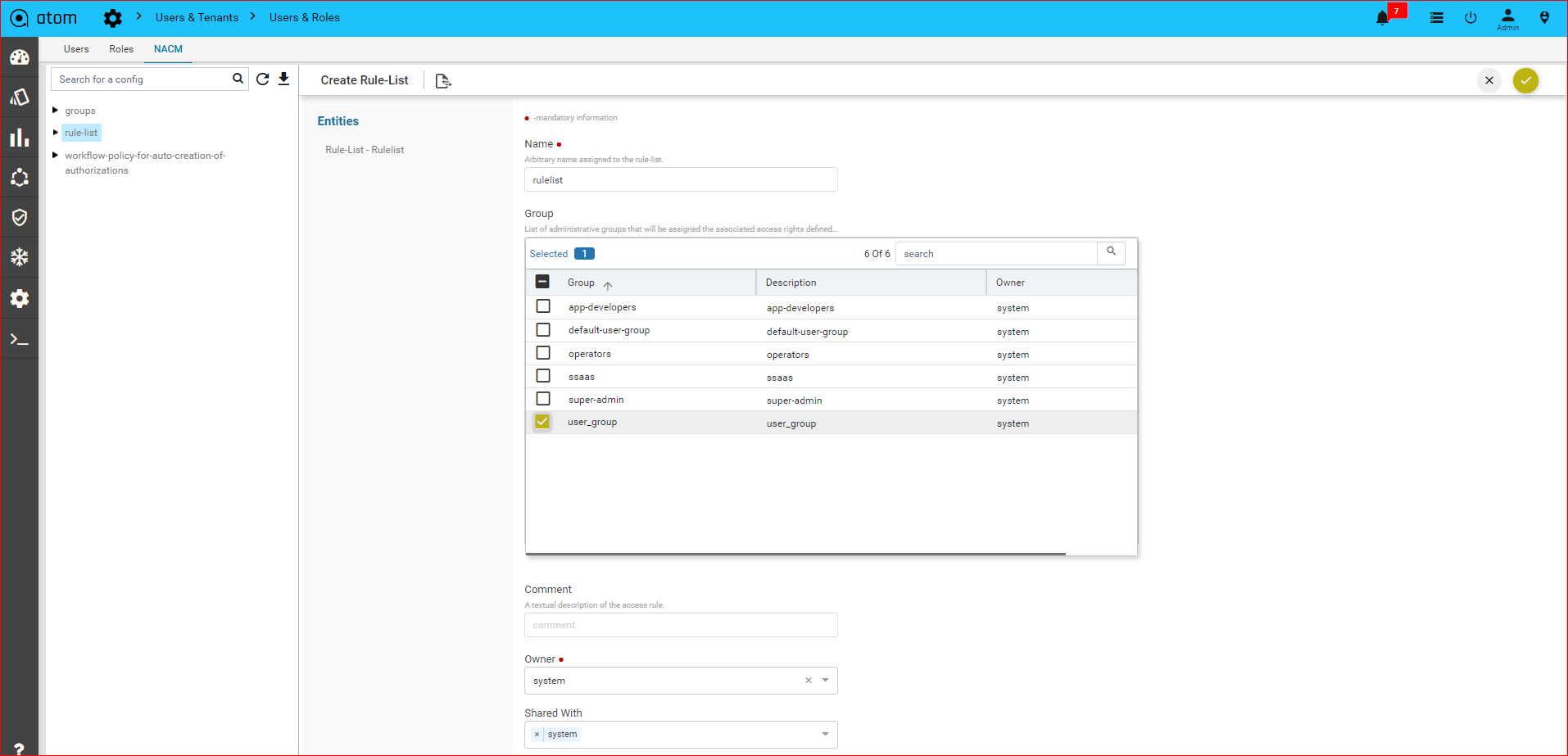

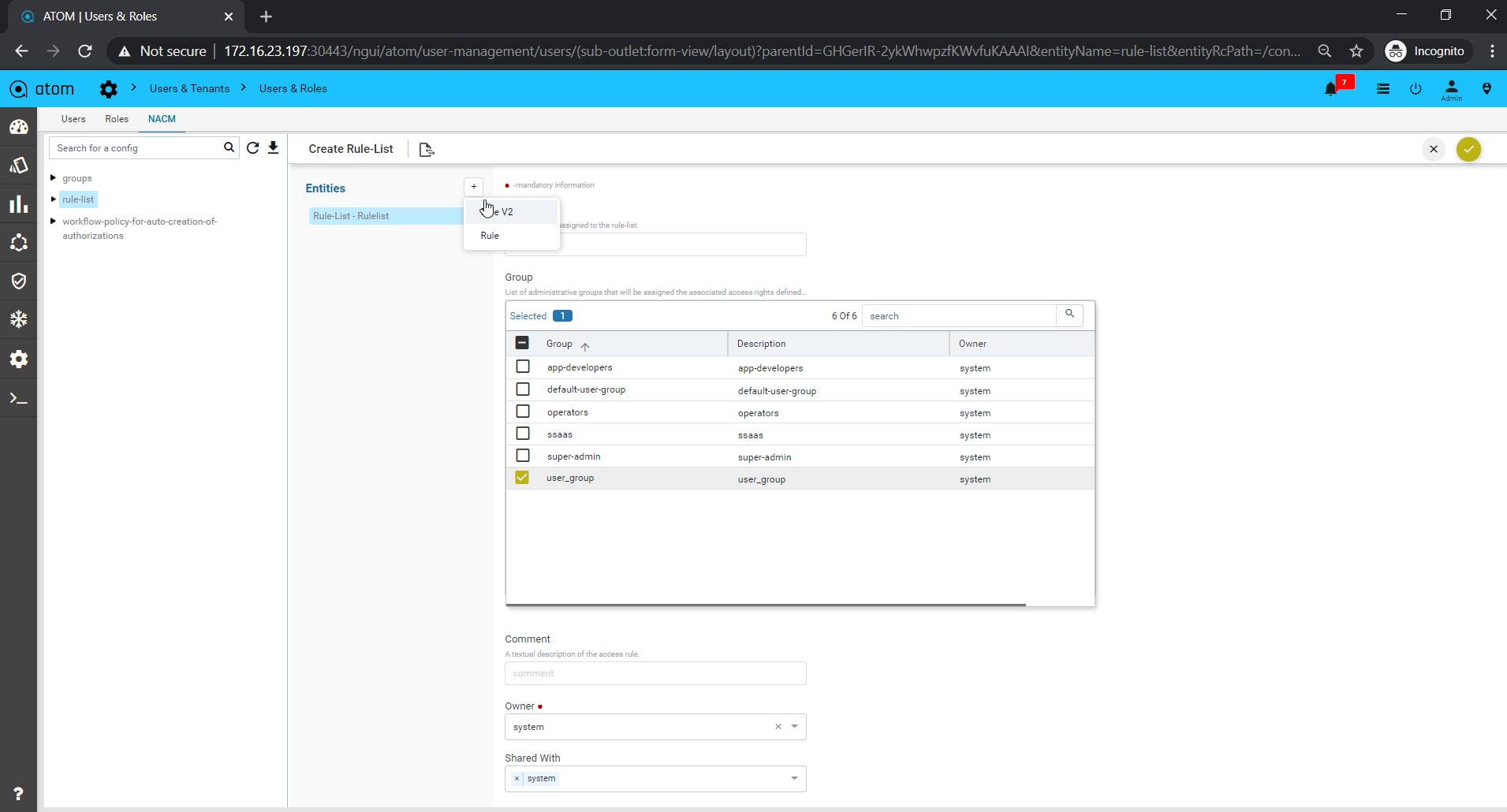

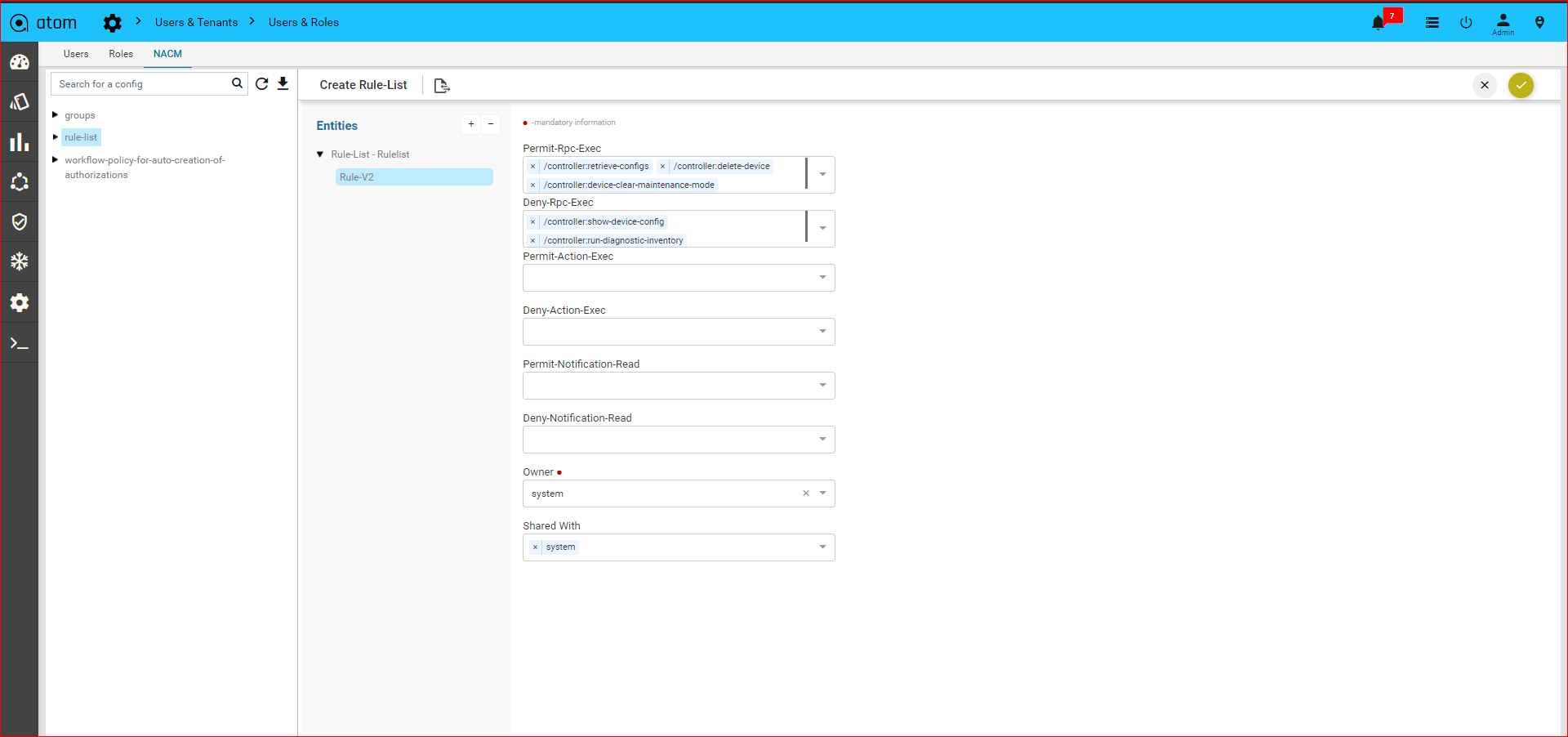

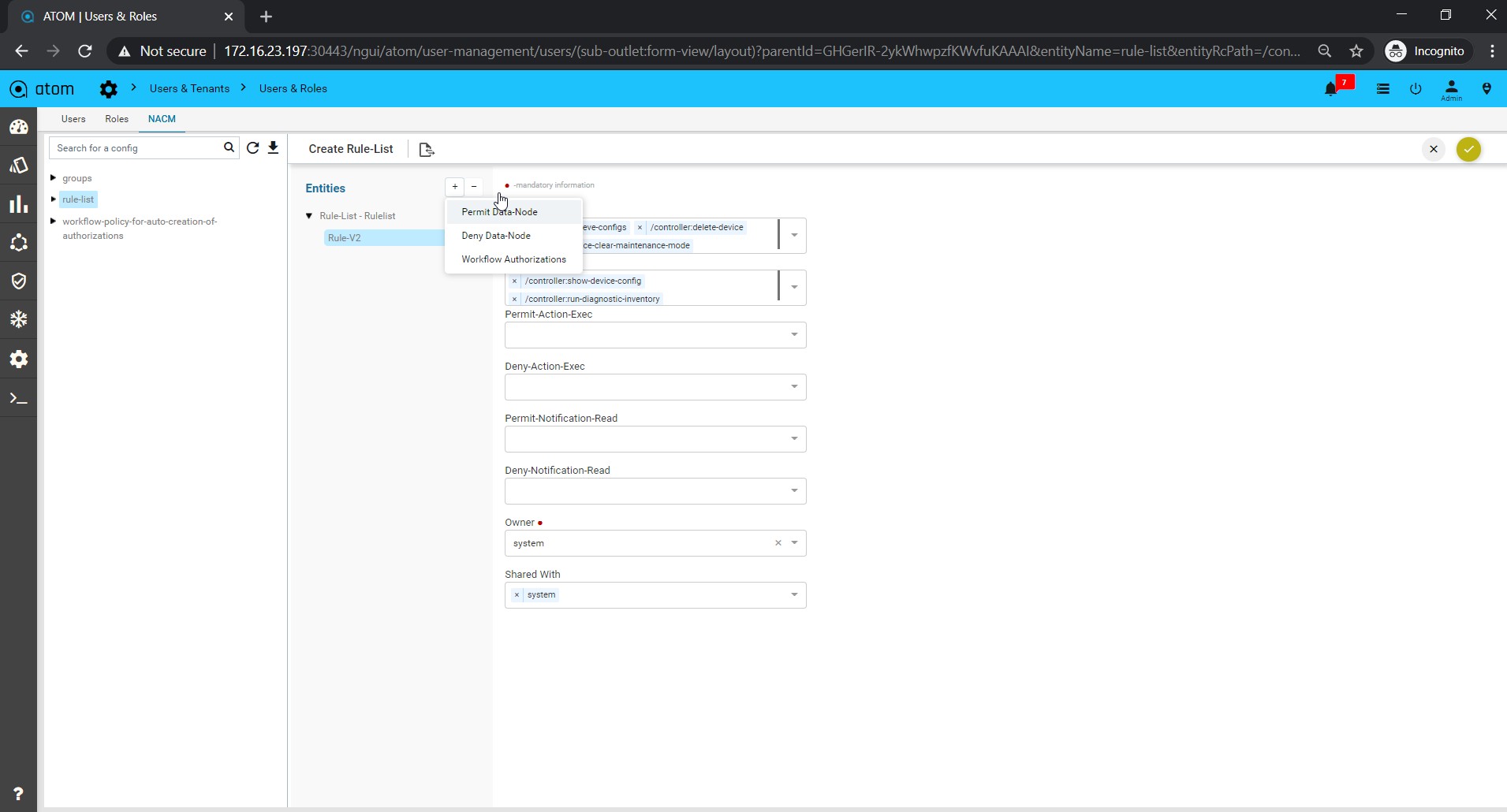

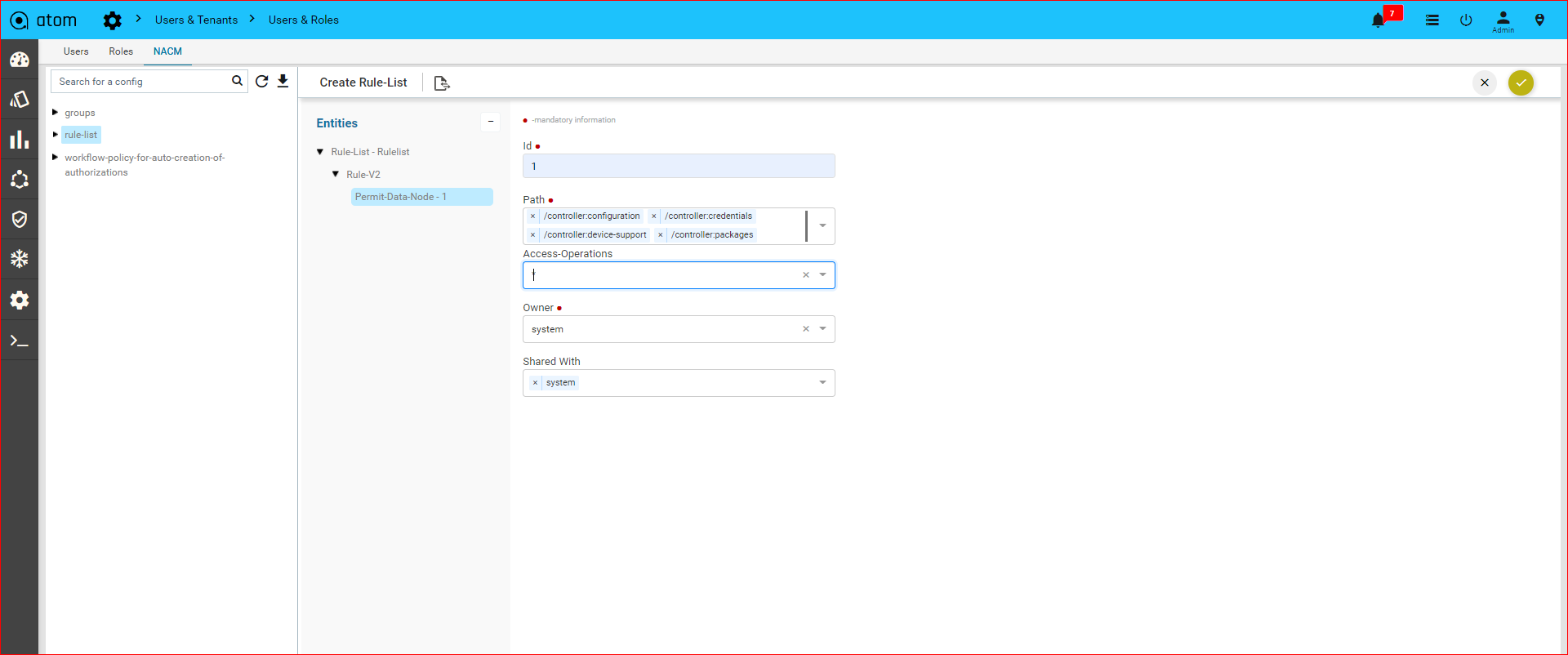

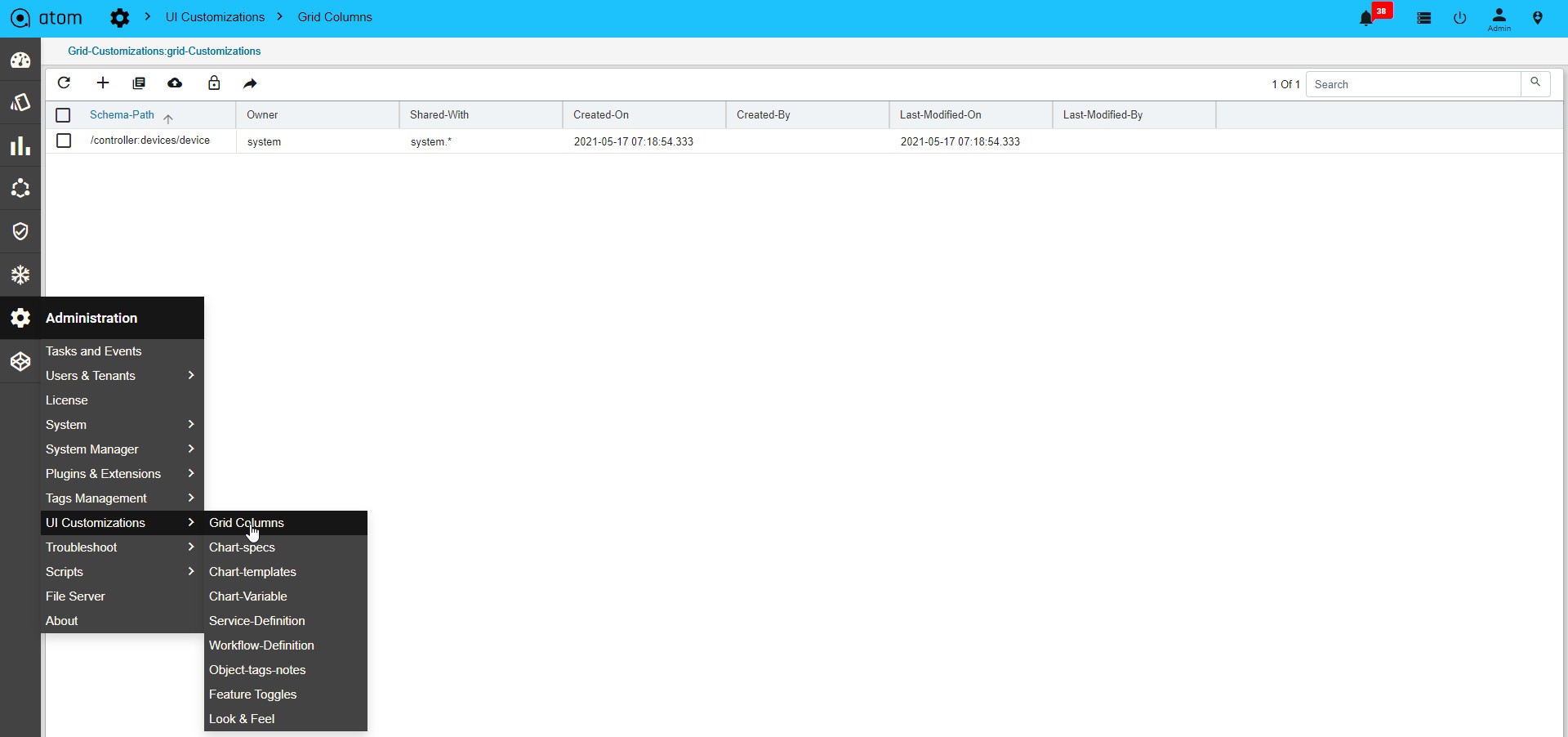

Inorder to revert the filter that are applied you can click on the clear button.